Building Security Into Your Software Development Lifecycle

Recommended publications

Effectiveness of Software Testing Techniques in Enterprise: a Case Study

MYKOLAS ROMERIS UNIVERSITY, BUSINESS AND MEDIA SCHOOL. BRIGITA JAZUKEVIČIŪTĖ (Business Informatics). EFFECTIVENESS OF SOFTWARE TESTING TECHNIQUES IN ENTERPRISE: A CASE STUDY. Master Thesis. Supervisor: Assoc. Prof. Andrej Vlasenko. Vilnius, 2016.

CONTENTS
INTRODUCTION
1. THE RELATIONSHIP BETWEEN SOFTWARE TESTING AND SOFTWARE QUALITY ASSURANCE
1.1. Introduction to Software Quality Assurance
1.2. The overview of Software testing fundamentals: Concepts, History, Main principles
2. AN OVERVIEW OF SOFTWARE TESTING TECHNIQUES AND THEIR USE IN ENTERPRISES
2.1. Testing techniques as code analysis
2.1.1. Static testing
2.1.2. Dynamic testing
2.2. Test design based Techniques

Studying the Feasibility and Importance of Software Testing: an Analysis

Dr. S.S. Riaz Ahamed / International Journal of Engineering Science and Technology, Vol. 1(3), 2009, 119-128

STUDYING THE FEASIBILITY AND IMPORTANCE OF SOFTWARE TESTING: AN ANALYSIS
Dr. S.S. Riaz Ahamed, Principal, Sathak Institute of Technology, Ramanathapuram, India.

ABSTRACT
Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. Software testing is the process of testing the functionality and correctness of software by running it. Software testing is usually performed for one of two reasons: defect detection and reliability estimation. The problem of applying software testing to defect detection is that testing can only suggest the presence of flaws, not their absence (unless the testing is exhaustive). The problem of applying software testing to reliability estimation is that the input distribution used for selecting test cases may be flawed. The key to software testing is trying to find the modes of failure, something that requires exhaustively testing the code on all possible inputs. Software testing, depending on the testing method employed, can be implemented at any time in the development process.

Keywords: verification and validation (V & V)

1 INTRODUCTION
Testing is a set of activities that can be planned ahead and conducted systematically. The main objective of testing is to find errors by executing a program and to check whether the designed software meets the customer specification. Testing should fulfill the following criteria:
• Testing should begin at the module level and work "outward" toward the integration of the entire computer-based system.

Command Line Interface

Command Line Interface. Squore 21.0.2. Last updated 2021-08-19.

Table of Contents
Preface
  Foreword
  Licence
  Warranty
  Responsibilities
  Contacting Vector Informatik GmbH Product Support
  Getting the Latest Version of this Manual
1. Introduction
2. Installing Squore Agent
  Prerequisites
  Download
  Upgrade
  Uninstall
3. Using Squore Agent
  Command Line Structure
  Command Line Reference
  Squore Agent Options
  Project Build Parameters
  Exit Codes
4. Managing Credentials
  Saving Credentials
  Encrypting Credentials
  Migrating Old Credentials Format
5. Advanced Configuration
  Defining Server Dependencies
  Adding config.xml File
  Using Java System Properties
  Setting up HTTPS
Appendix A: Repository Connectors
  ClearCase
  CVS
  Folder Path
  Folder (use GNATHub)
  Git
  Perforce
  PTC Integrity
  SVN
  Synergy
  TFS
  Zip Upload
  Using Multiple Nodes
Appendix B: Data Providers
  AntiC
  Automotive Coverage Import
  Automotive Tag Import
  Axivion
  BullseyeCoverage Code Coverage Analyzer
  CANoe
  Cantata
  CheckStyle

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

[Cover figure: simplified software life-cycle diagram plotted against project time. Software life-cycle phases shown: feasibility study; software functional specification; software system design; detailed modules specification; modules design; software integration and testing; system testing; commissioning and handover; operation and maintenance; decommission. Each design step is paired with design specification verification.]

TECHNICAL REPORTS SERIES No. 282
MANUAL ON QUALITY ASSURANCE FOR COMPUTER SOFTWARE RELATED TO THE SAFETY OF NUCLEAR POWER PLANTS
INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988

The following States are Members of the International Atomic Energy Agency: Afghanistan; Albania; Algeria; Argentina; Australia; Austria; Bangladesh; Belgium; Bolivia; Brazil; Bulgaria; Burma; Byelorussian Soviet Socialist Republic; Cameroon; Canada; Chile; China; Colombia; Costa Rica; Cote d'Ivoire; Cuba; Cyprus; Czechoslovakia; Democratic Kampuchea; Democratic People's Republic of Korea; Guatemala; Haiti; Holy See; Hungary; Iceland; India; Indonesia; Iran, Islamic Republic of; Iraq; Ireland; Israel; Italy; Jamaica; Japan; Jordan; Kenya; Korea, Republic of; Kuwait; Lebanon; Liberia; Libyan Arab Jamahiriya; Liechtenstein; Luxembourg; Madagascar; Malaysia; Mali; Mauritius; Paraguay; Peru; Philippines; Poland; Portugal; Qatar; Romania; Saudi Arabia; Senegal; Sierra Leone; Singapore; South Africa; Spain; Sri Lanka; Sudan; Sweden; Switzerland; Syrian Arab Republic; Thailand; Tunisia; Turkey; Uganda; Ukrainian Soviet Socialist Republic; Union of Soviet Socialist Republics; United Arab ...

Test-Driven Development in Enterprise Integration Projects

Test-Driven Development in Enterprise Integration Projects. November 2002. Gregor Hohpe, Wendy Istvanick. Copyright ThoughtWorks, Inc. 2002.

Table of Contents
Summary
Testing Complex Business Applications
  Testing – The Stepchild of the Software Development Lifecycle?
  Test-Driven Development
  Effective Testing
  Testing Frameworks
  Layered Testing Approach
Testing Integration Solutions
  Anatomy of an Enterprise Integration Solution
  EAI Testing Challenges
  Functional Testing for Integration Solutions
  EAI Testing Framework

Continuous Quality and Testing to Accelerate Application Development

Continuous Quality and Testing to Accelerate Application Development. How to assess your current testing maturity level and practice continuous testing for DevOps.

Table of Contents
Introduction
Why Is Continuous Quality and Testing Maturity Important to DevOps?
Continuous Testing Engineers Quality into DevOps
Best Practices for Well-Engineered Continuous Testing
Continuous Testing Maturity Levels
  Level 1: Chaos
  Level 2: Continuous Integration
  Level 3: Continuous Flow
  Level 4: Continuous Feedback
  Level 5: Continuous Improvement
Continuous Testing Maturity Assessment
How to Get Started with DevOps Testing?
Continuous Testing in the Cloud
  Choosing the right tools for Continuous Testing
  On-demand Development and Testing Environments with Infrastructure as Code
  The Right Tests at the Right Time
Get Started
Conclusion
About AWS Marketplace and DevOps Institute
Contributors

Introduction
A successful DevOps implementation reduces the bottlenecks related to testing. These bottlenecks include finding and setting up test environments, test configurations, and test results implementation. These issues are not industry specific. They can be experienced in manufacturing, service businesses, and governments alike. They can be reduced by having a thorough understanding and a disciplined, mature implementation of Continuous Testing and related recommended engineering practices.

The best place to start addressing these challenges is having a good understanding of what Continuous Testing is. Marc Hornbeek, the author of Engineering DevOps, describes it as: "A quality assessment strategy in which most tests are automated and integrated as a core and essential part of DevOps. Continuous Testing is much more than simply 'automating tests.'" In this whitepaper, we'll address the best practices you can adopt for implementing Continuous Quality and Testing on the AWS Cloud environment in the context of the DevOps model.

Devops Point of View an Enterprise Architecture Perspective

DevOps Point of View: An Enterprise Architecture perspective. Amsterdam, 2020.

Management summary
"It is not the strongest of the species that survive, nor the most intelligent, but the one most responsive to change." [1]

Setting the scene
In the current world of IT and the development of IT-related products or services, companies from enterprise level to smaller sizes are starting to use DevOps processes and methods as part of their day-to-day organization process. The goal is to reduce the time involved in all the software development phases and to achieve greater application stability and faster development cycles. However, DevOps is not only changing the playing field on the technical side of the organization: an organizational change that involves merging development and operations teams is also required, with a hint of cultural change. And last but not least, the skillset of all people involved is changing.

Goal of this Point of View
This point of view aims to create awareness around the transformation towards the DevOps way of working, and to help gain an understanding of what DevOps is, why you need it, and what is needed to implement DevOps.

An Enterprise Architecture perspective
Even though it is DevOps from an Enterprise Architecture service line perspective, this material has been gathered from our experiences with customers, combined with knowledge from subject matter experts and theory from within and outside Deloitte.

Targeted audience
It is specifically for the people within Deloitte who want to use this as an accelerator for conversations and proposals, and to get in contact with the people who have performed these types of projects.

Coverity Static Analysis

Coverity Static Analysis. Quickly find and fix critical security and quality issues as you code.

Overview
Coverity® gives you the speed, ease of use, accuracy, industry standards compliance, and scalability that you need to develop high-quality, secure applications. Coverity identifies critical software quality defects and security vulnerabilities in code as it's written, early in the development process when it's least costly and easiest to fix. Precise actionable remediation advice and context-specific eLearning help your developers understand how to fix their prioritized issues quickly, without having to become security experts. Coverity seamlessly integrates automated security testing into your CI/CD pipelines and supports your existing development tools and workflows. Choose where and how to do your development: on-premises or in the cloud with the Polaris Software Integrity Platform™ (SaaS), a highly scalable, cloud-based application security platform. Coverity supports 22 languages and over 70 frameworks and templates.

Coverity includes Rapid Scan, a fast, lightweight static analysis engine optimized for cloud-native applications and Infrastructure-as-Code (IaC). Rapid Scan runs automatically, without additional configuration, with every Coverity scan and can also be run as part of full CI builds with conventional scan completion times. Rapid Scan can also be deployed as a standalone scan engine in Code Sight™ or via the command line

Benefits
• Get improved visibility into security risk. Cross-product reporting provides a holistic, more complete view of a project's risk using best-in-class AppSec tools.
• Deployment flexibility. You decide which set of projects to do AppSec testing for: on-premises or in the cloud.
• Shift security testing left.

A Comparison of SPARK with MISRA C and Frama-C

A Comparison of SPARK with MISRA C and Frama-C. Johannes Kanig, AdaCore. October 2018.

Abstract
Both SPARK and MISRA C are programming languages intended for high-assurance applications, i.e., systems where reliability is critical and safety and/or security requirements must be met. This document summarizes the two languages, compares them with respect to how they help satisfy high-assurance requirements, and compares the SPARK technology to several static analysis tools available for MISRA C with a focus on Frama-C.

1 Introduction
1.1 SPARK Overview
Ada [1] is a general-purpose programming language that has been specifically designed for safe and secure programming. Information on how Ada satisfies the requirements for high-assurance software, including the avoidance of vulnerabilities that are found in other languages, may be found in [2, 3, 4]. SPARK [5, 6] is an Ada subset that is amenable to formal analysis and thus can bring increased confidence to software requiring the highest levels of assurance. SPARK excludes features that are difficult to analyze (such as pointers and exception handling). Its restrictions guarantee the absence of unspecified behavior such as reading the value of an uninitialized variable, or depending on the evaluation order of expressions with side effects. But SPARK does include major Ada features such as generic templates and object-oriented programming, as well as a simple but expressive set of concurrency (tasking) features known as the Ravenscar profile. SPARK has been used in a variety of high-assurance applications, including hypervisor kernels, air traffic management, and aircraft avionics. In fact, SPARK is more than just a subset of Ada.

API Integration Tutorial: Testing, Security and API Management

API Integration Tutorial: Testing, security and API management

As application program interface integration increases, so do the challenges with maintaining management, testing, and security. This API integration tutorial compares leading API management tools currently available on the market, as well as strategies for RESTful API testing. Get a better understanding of API integration and management strategies.

In this tutorial:
• A roundup of the leading API management tools available today
• What are some solid options for open source API management tools?
• The basics of establishing a RESTful API testing program
• Testing microservices and APIs in the cloud
• How do I create a secure API for mobile?
• About SearchMicroservices.com

A roundup of the leading API management tools available today
Zachary Flower, Freelance writer, SearchMicroservices.com

API management is a constantly growing market with more new products popping up every year. As a result, narrowing all of the potential choices down to one perfect platform can feel like an overwhelming task. The sheer number of options out there makes choosing one an extremely tough decision. To help relieve the strain involved with this decision, we've put together this detailed product roundup covering 10 of the leading API management tools currently available on the market.

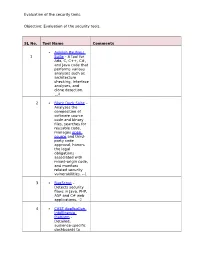

Evaluation of the Security Tools

Objective: Evaluation of the security tools.

SL No. / Tool Name / Comments
1. Axivion Bauhaus Suite: A tool for Ada, C, C++, C#, and Java code that performs various analyses such as architecture checking, interface analyses, and clone detection.
2. Black Duck Suite: Analyzes the composition of software source code and binary files, searches for reusable code, manages open source and third-party code approval, honors the legal obligations associated with mixed-origin code, and monitors related security vulnerabilities.
3. BugScout: Detects security flaws in Java, PHP, ASP and C# web applications.
4. CAST Application Intelligence Platform: Detailed, audience-specific dashboards to measure quality and productivity. 30+ languages, C, C++, Java, .NET, Oracle, PeopleSoft, SAP, Siebel, Spring, Struts, Hibernate and all major databases.
5. ChecKing: Integrated software quality portal that helps manage the quality of all phases of software development. It includes static code analyzers for Java, JSP, Javascript, HTML, XML, .NET (C#, ASP.NET, VB.NET, etc.), PL/SQL, embedded SQL, SAP ABAP IV, Natural/Adabas, C, C++, Cobol, JCL, and PowerBuilder.
6. ConQAT: Continuous quality assessment toolkit that allows flexible configuration of quality analyses (architecture conformance, clone detection, quality metrics, etc.) and dashboards. Supports Java, C#, C++, JavaScript, ABAP, Ada and many other languages.
7. Coverity SAVE: A static code analysis tool for C, C++, C# and Java source code. Coverity commercialized a research tool for finding bugs through static analysis, the Stanford Checker, which used abstract interpretation to identify defects in source code.

As Focused on Software Tools That Support Software Engineering, Along with Data Structures and Algorithms Generally

PETER C DILLINGER, Ph.D.
2110 N 89th St, Seattle WA 98103 · http://www.peterd.org · 404-509-4879

Overview
My work in software has focused on software tools that support software engineering, along with data structures and algorithms generally. My core strength is seeing many paths to "success," so I'm often the person consulted when others think they're stuck.

Highlights
♦ Key developer and project lead in adapting and extending the legendary Coverity static analysis engine, for C/C++ bug finding, to find bugs with high accuracy in Java, C#, JavaScript, PHP, Python, Ruby, Swift, and VB. https://www.synopsys.com/blogs/software-security/author/pdillinger/
♦ Inventor of a fast, scalable, and accurate method of detecting mistyped identifiers in dynamic languages such as JavaScript, PHP, Python, and Ruby without use of a natural language dictionary. Patent pending, app# 20170329697. Coverity feature: https://stackoverflow.com/a/34796105
♦ Did the impossible with git: on wanting to "copy with history" as part of a refactoring, I quickly developed a way to do it despite the consensus wisdom. https://stackoverflow.com/a/44036771
♦ Did the impossible with Bloom filters: made the data structure simultaneously fast and accurate with a simple hashing technique, now used in tools including LevelDB and RocksDB. https://en.wikipedia.org/wiki/Bloom_filter (Search "Dillinger")
♦ Early coder / Linux user: started BASIC in 1st grade; first game hack in 3rd grade; learned C in middle school; wrote Tetris in JavaScript in high school (1997); steady Linux user since 1998.

Work
Coverity, August 2009 to October 2017, acquired by Synopsys in 2014
Software developer, tech lead, and manager for static and dynamic program analysis projects.