Chess Algorithms Theory and Practice

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Norges Sjakkforbunds Årsberetning 2015 - 2016

Norges Sjakkforbund (Norwegian Chess Federation). International match between Norway and Sweden in Stavanger, 2016. Pictured are the women's teams with Jonathan Tisdall (Norwegian head of elite chess), Monika Machlik, Edit Machlik, Svetlana Agrest, Silje Bjerke, Sheila Barth Sahl, Inna Agrest, Ellen Hagesæther, Olga Dolzhikova, Ellinor Frisk, Jessica Bengtsson, Emilia Horn, Nina Blazekovic and Ari Ziegler (Swedish team captain). The Norwegian women won 7.5-4.5, and Norway won 23.5-12.5 overall. Annual report and congress papers, 95th congress, Tromsø, 10 July 2016. Table of contents: Notice of meeting; Proposed agenda; The board's report; Organisation; Administration; Norsk Sjakkblad; Cooperation with others -

Hans Olav Lahlums Generelle Forhåndsomtale Av Eliteklassen 2005

Hans Olav Lahlum's general preview of the 2005 Elite class. The 2005 Elite class equals last year's record of 22 participants, which happily reflects a clear rise in standard compared with the situation a few years ago. Because the reigning champion, GM Berge Østenstad, is sitting out this year's Norwegian Championship owing to a hopefully temporary lack of motivation and form, the Elite class from Molde in 2004 retains its status as the strongest in history. The margin over this year's edition is narrow, however: with six GMs and five IMs it is clearly stronger than every edition before 2004, and it is also historic as the first with female participation! As for the King's Cup, the expert predictions are unusually unanimous ahead of the 2005 Elite class: according to nearly all the participants, the fight for first place will be between the still top-rated chess king GM Simen Agdestein, the chess crown prince GM Magnus Carlsen, and the in-form player of the spring, GM Leif Erlend Johannessen. That this year's Norwegian champion will be one of those three seems highly probable, but it is far less obvious that exactly those three will be left standing on the podium at the prize-giving: despite his low activity, the rock-solid GM Einar Gausel is a formidable challenger in that respect, and the chronically unpredictable new GM Kjetil A Lie, as well as the chronically unpredictable old GM Rune Djurhuus, also clearly have medal potential. Among the candidates for a GM norm, the in-form IM duo Helge A Nordahl and Frode Elsness are the most exciting to follow, but results from the winter's league chess indicate that former Norwegian champion Roy Fyllingen should also be kept under watch. IM Bjarke Barth Sahl (formerly Kristensen) has enough GM norms but is all the further short of the Elo rating needed to become a GM, so the oldest player in the field can concentrate this year on playing for a prize. -

Preparation of Papers for R-ICT 2007

Chess Puzzle Mate in N-Moves Solver with Branch and Bound Algorithm. Ryan Ignatius Hadiwijaya / 13511070, Program Studi Teknik Informatika, Sekolah Teknik Elektro dan Informatika, Institut Teknologi Bandung, Jl. Ganesha 10 Bandung 40132, Indonesia. [email protected] Abstract: Chess is a popular game played by many people around the world. Aside from the normal game, there are special board configurations that form chess puzzles. A chess puzzle has a special objective, and one such objective is to checkmate the opponent within a specified number of moves. To solve that kind of puzzle, a branch and bound algorithm can be used to choose the moves that checkmate the opponent. With good heuristics, the algorithm solves chess puzzles effectively and efficiently. Index Terms: branch and bound, chess, problem solving, puzzle. I. INTRODUCTION Chess is a board game played between two players on opposite sides of a board containing 64 squares of alternating colors. [...] 1. A player checkmates the opponent. In that case that player wins and the opponent loses. 2. A player resigns (gives up). In that case that player loses. 3. Draw. A draw occurs in any of the following cases: a. Stalemate, which occurs when the player to move has no legal moves and his or her king is not in check. b. Both players agree to a draw. c. There are not enough pieces on the board to force a checkmate. d. The exact same position is repeated 3 times. e. Fifty consecutive moves pass in which neither player has moved a pawn or captured a piece. -
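The excerpt above names branch and bound as a way to solve mate-in-N puzzles but does not show the algorithm. As a rough illustration only, the following sketch uses a plain depth-limited search in which the attacker needs one move that works and the defender needs one reply that refutes it; the early cutoffs stand in for the "bound" step, and the paper's heuristics are not reproduced. All names (`forced_win_move`, `pile_moves`, `pile_play`, `pile_is_mated`) are hypothetical, and a small take-away game replaces real chess move generation so that the block stays self-contained.

```python
from typing import Callable, Iterable, Optional, TypeVar

S = TypeVar("S")  # game state
M = TypeVar("M")  # move


def forced_win_move(
    state: S,
    n: int,
    moves: Callable[[S], Iterable[M]],
    play: Callable[[S, M], S],
    is_mated: Callable[[S], bool],
) -> Optional[M]:
    """Return an attacker move that forces 'mate' within n attacker moves, else None.

    `state` has the attacker to move. `is_mated(s)` must be true when the side
    to move in `s` has lost. A defender with no moves who is not mated counts
    as stalemate, which is not a win for the attacker.
    """
    if n <= 0:
        return None  # move budget exhausted: prune this branch
    for move in moves(state):
        after = play(state, move)  # defender to move
        if is_mated(after):
            return move
        replies = list(moves(after))
        # all() stops at the first defender reply that escapes (a refutation),
        # so the remaining replies for this attacker move are never searched.
        if replies and all(
            forced_win_move(play(after, r), n - 1, moves, play, is_mated) is not None
            for r in replies
        ):
            return move
    return None


# Toy stand-in for chess (an assumption for illustration): a pile of stones,
# each side removes 1 or 2, and the side to move facing an empty pile has lost.
def pile_moves(pile: int) -> list:
    return [take for take in (1, 2) if take <= pile]


def pile_play(pile: int, take: int) -> int:
    return pile - take


def pile_is_mated(pile: int) -> bool:
    return pile == 0


if __name__ == "__main__":
    print(forced_win_move(4, 2, pile_moves, pile_play, pile_is_mated))
    print(forced_win_move(4, 1, pile_moves, pile_play, pile_is_mated))
```

For a real puzzle solver the three callbacks would wrap a chess move generator and a checkmate test, and the draw conditions listed in the excerpt (stalemate, repetition, the fifty-move rule) would have to be treated as failures for the attacker.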

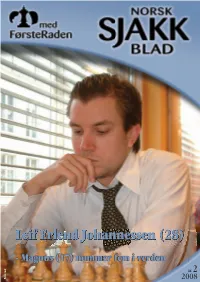

Leif Erlend Johannessen (28) - Magnus (17) Nummer Fem I Verden

Leif Erlend Johannessen (28) - Magnus (17) number five in the world. No. 2, 2008. Photo: ed. Tønsberg Schackklubb, which turned 90 on 1 April, welcomes everyone to LANDSTURNERINGEN 2008, 5 JULY TO 13 JULY. The venue will be Slagenhallen at Tolvsrød, 3.5 km from the town centre. A full invitation, with what we can offer in chess and other activities, is on our website: www.nm2008.sjakkweb.no. The championship itself is played over 9 rounds (Monrad system) with one round per day and no double rounds. Except on the first and last day, play starts at 11:00. The time control per player is 2 hours for 40 moves, then 1 hour for 20 moves, and ½ hour for the rest of the game. Classes: play takes place in the following classes: Elite, Master, classes 1, 2, 3, 4 and 5, the senior class consisting of two groups A and B, junior classes A and B, cadet classes A and B, the lilleputt class and the miniputt class. The full regulations for Landsturneringen are on our website mentioned above and under Sjakkaktuelt (the federation's website). The entry fee is kr 800, kr 500 for juniors, and kr 400 for cadets, lilleputt and miniputt. Entry fees are sent to: Tønsberg Schackklubb, c/o Kristoffer Haugan, Fuskegata 47, 3158 ANDEBU. Account no.: 1503.01.87323. NB: name, date of birth and club MUST be stated when paying. The entry deadline is 18 June 2008. For late entries the fee is raised by kr 200, to kr 1000, 700 and 600 respectively. Those who enter after 22 June 2008 must pay a 50% surcharge; the new entry fee is then kr 1,200, 750 or 600 respectively. The final entry deadline is Wednesday 2 July 2008 (does not apply to the Elite class). Entries after that date risk being rejected. -

BULLETIN 8 Søndag 7

BULLETIN 8, Sunday 7 July. Price kr 20. The best player won. The Norwegian championship title and the King's Cup went to grandmaster Jon Ludvig Hammer (23), who kept good control of the game in the last round against Rune Djurhuus and secured victory with a draw. The audience could therefore enjoy hailing a Norwegian champion, and not merely play-off contenders as in an incredible eight of the nine preceding years. Caption: This year's newly crowned Norwegian champion Jon Ludvig Hammer with the King's Cup on top of the podium. The other Elite prizewinners are, from left, Frode Elsness, Atle Grønn, Leif E. Johannessen, Rune Djurhuus, Berge Østenstad, Frode Urkedal and Aryan Tari. There was also little doubt that Jon Ludvig was a deserving and rightful champion of Lillehammer 2013. He probably played the best overall, was the only undefeated player in the class, and finished a full point ahead of Frode Elsness, to whom he conceded a perhaps somewhat unnecessary draw in a weak moment. For Norwegian chess it was also best that the youngest and most seriously committed of our grandmasters besides Magnus Carlsen managed to leave the field behind and become Norwegian champion for the first time. It was also pleasing that the youngest player, Aryan Tari, confirmed his consistently strong progress with a promising IM title after his third norm for the title in just over three months. The final, decisive draw. White: Jon Ludvig Hammer, OSS. Black: Rune Djurhuus, Nordstrand. King's Indian E98. 1.d4 Nf6 2.c4 g6 3.Nc3 Bg7 4.e4 d6 5.Nf3 0-0 6.Be2 e5 7.0-0 [...] Fast-paced café chess. Espen Thorstensen from Kongsvinger made up for a blunder in round 5 of the class tournament with an impressive win in the evening's café chess tournament. Espen has often shown himself to be an especially strong player at reduced time controls, here 15 minutes per player. -

Norsk Sjakkblad

#2/2018 Norsk Sjakkblad. Dream duel in the World Championship: Carlsen - Caruana. Advertisement: ALL THE GOOD NEW TITLES. It pays to keep an eye on the largest chess shop in the Nordic countries! Kr 880, kr 225, kr 295, kr 250. AND THE BEST OFFERS. We always have many good offers, including on excellent books. The offers in this advertisement are valid until 15 September, or while stocks last. See the online shop for more! NOW kr 100, NOW kr 100, NOW kr 125 (half price!), NOW kr 100. Clubs in NSF and USF always get at least 10% off chess equipment on combined purchases from kr 2000, and good offers on larger purchases. And very favourable book-prize packages! Shop: Hauges gate 84A, 3019 Drammen. Tel. 32 82 10 64. Monday to Friday 10:00 to 16:30, Thursday until 18:30. Email: [email protected] Masthead: No. 2/2018, volume 84. Norsk Sjakkblad, a members' magazine for Norges Sjakkforbund. www.sjakk.no. Editor: Leif Erlend Johannessen. Editorial staff: Yonne Tangelder. Contact (editorial office): [email protected] Text and pictures are by the editorial staff unless otherwise stated. Contributors: Peter Heine Nielsen, Jarl Henning Ulrichsen, Atle Grønn, Thomas Wasenius, Sverre Johnsen, Per Ofstad, Øystein Brekke and Karim Ali. Cover photo: Maria Emelianova. Regular columns: EDITORIAL: a word from the editors. NEWS FROM NSF: news from NSF. J'ADOUBE!: a sideways look at the chess world. YOUR MOVE: combinations from Riyadh. OPENING IN 1-2-3: Sverre Johnsen on "Mottatt" (Accepted) [...] Articles: THE CANDIDATES TOURNAMENT: organiser scandals and bare-knuckle fighting marked the tournament in Berlin. ALTIBOX NORWAY CHESS: Carlsen won the dress rehearsal, but Caruana took the tournament victory. ANDRÉ BJERKE: it is 100 years since he was born and 50 years since «Spillet i mitt liv». MOSS SK 100 YEARS: this year's Norwegian Championship organiser lifts the veil. -

Should Chess Players Learn Computer Security?

Should Chess Players Learn Computer Security? Gildas Avoine, Cédric Lauradoux, Rolando Trujillo-Rasua. Abstract: The main concern of chess referees is to prevent players from biasing the outcome of the game by either colluding or receiving external advice. Preventing third parties from interfering in a game is challenging given that communication technologies are steadily improved and miniaturized. Chess actually faces similar threats to those already encountered in computer security. We describe chess frauds and link them to their analogues in the digital world. Based on these transpositions, we advocate for a set of countermeasures to enforce fairness in chess. Index Terms: Security, Chess, Fraud. 1 INTRODUCTION Chess still fascinates generations of computer scientists. Many great researchers such as John von [...] are indeed playing against each other, instead of playing against a little girl as one would expect. Conway's work on the chess grandmaster problem was pursued and extended to authentication protocols by Desmedt, Goutier and Bengio [3] in order to break the Feige-Fiat-Shamir protocol [4]. The attack was called mafia fraud, in reference to Shamir's famous claim: "I can go to a mafia-owned store a million times and they will not be able to misrepresent themselves as me." Desmedt et al. proved that Shamir was wrong via a simple application of Conway's chess grandmaster problem to authentication protocols. Since then, this attack has been used in various domains such as contactless credit cards, electronic passports, vehicle keyless remote systems, and wireless sensor networks. -

Mating the Castled King

Mating the Castled King. By Danny Gormally. Quality Chess, www.qualitychess.co.uk. First edition 2014 by Quality Chess UK Ltd. Copyright © 2014 Danny Gormally. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without prior permission of the publisher. Paperback ISBN 978-1-907982-71-2. Hardcover ISBN 978-1-907982-72-9. All sales or enquiries should be directed to Quality Chess UK Ltd, 20 Balvie Road, Milngavie, Glasgow G62 7TA, United Kingdom. Phone +44 141 204 2073, e-mail: [email protected], website: www.qualitychess.co.uk. Distributed in North America by Globe Pequot Press, P.O. Box 480, 246 Goose Lane, Guilford, CT 06437-0480, US, www.globepequot.com. Distributed in Rest of the World by Quality Chess UK Ltd through Sunrise Handicrafts, ul. Skromna 3, 20-704 Lublin, Poland. Typeset by Jacob Aagaard. Proofreading by Andrew Greet. Edited by Colin McNab. Cover design by Carole Dunlop and www.adamsondesign.com. Cover Photo by capture365.com. Photo page 174 by Harald Fietz. Printed in Estonia by Tallinna Raamatutrükikoja LLC. Contents: Key to Symbols used; Preface; Chapter 1 - A Few Helpful Ideas; Chapter 2 - 160 Mating Finishes: Bishop Clearance; Back-rank Mate; Bishop and Knight; Breakthrough on the g-file; Breakthrough on the b-file; Destroying a Defensive Knight; Breakthrough on the h-file; Dragging out the King; Exposing the King -

Learn Chess the Right Way

Learn Chess the Right Way, Book 5: Finding Winning Moves! by Susan Polgar with Paul Truong. 2017, Russell Enterprises, Inc., Milford, CT USA. © Copyright 2017 Susan Polgar. ISBN: 978-1-941270-45-5. ISBN (eBook): 978-1-941270-46-2. All Rights Reserved. No part of this book may be used, reproduced, stored in a retrieval system or transmitted in any manner or form whatsoever or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without the express written permission from the publisher except in the case of brief quotations embodied in critical articles or reviews. Published by: Russell Enterprises, Inc., PO Box 3131, Milford, CT 06460 USA. http://www.russell-enterprises.com [email protected] Cover design by Janel Lowrance. Front cover image by Christine Flores. Back cover photo by Timea Jaksa. Contributor: Tom Polgar-Shutzman. Printed in the United States of America. Table of Contents: Introduction; Chapter 1 Quiet Moves to Checkmate; Chapter 2 Quiet Moves to Win Material; Chapter 3 Zugzwang; Chapter 4 The Grand Test; Solutions. Introduction: Ever since I was four years old, I remember the joy of solving chess puzzles. I wrote my first puzzle book when I was just 15, and have published a number of other best-sellers since, such as A World Champion's Guide to Chess, Chess Tactics for Champions, and Breaking Through. With over 40 years of experience as a world-class player and trainer, I have developed the most effective way to help young players and beginners: Learn Chess the Right Way. -

Yearbook.Indb

PETER ZHDANOV, Yearbook of Chess Wisdom. Cover designer: Piotr Pielach. Typesetting: Piotr Pielach ‹www.i-press.pl›. First edition 2015 by Chess Evolution. Copyright © 2015 Chess Evolution. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without prior permission of the publisher. ISBN 978-83-937009-7-4. All sales or enquiries should be directed to Chess Evolution, ul. Smutna 5a, 32-005 Niepolomice, Poland. e-mail: [email protected] website: www.chess-evolution.com. Printed in Poland by Drukarnia Pionier, 31-983 Krakow, ul. Igolomska 12. To my father Vladimir Zhdanov for teaching me how to play chess and to my mother Tamara Zhdanova for encouraging my passion for the game. PREFACE A critical-minded author always questions his own writing and tries to predict whether it will be illuminating and useful for the readers. What makes this book special? First of all, I have always had an inquisitive mind and an insatiable desire for accumulating, generating and sharing knowledge. This work is a product of having carefully read a few hundred remarkable chess books and a few thousand worthy non-chess volumes. However, it is by no means a mere compilation of ideas, facts and recommendations. Most of the eye-opening tips in this manuscript come from my reflections on discussions with some of the world's best chess players and coaches. This is why the book is titled Yearbook of Chess Wisdom: it is composed of 366 self-sufficient columns, each of which is dedicated to a certain topic. -

Some Chess-Specific Improvements for Perturbation-Based Saliency Maps

Some Chess-Specific Improvements for Perturbation-Based Saliency Maps. Jessica Fritz, Johannes Kepler University, Linz, Austria, [email protected]; Johannes Fürnkranz, Johannes Kepler University, Linz, Austria, juffi@faw.jku.at. Abstract: State-of-the-art game playing programs do not provide an explanation for their move choice beyond a numerical score for the best and alternative moves. However, human players want to understand the reasons why a certain move is considered to be the best. Saliency maps are a useful general technique for this purpose, because they allow visualizing relevant aspects of a given input to the produced output. While such saliency maps are commonly used in the field of image classification, their adaptation to chess engines like Stockfish or Leela has not yet seen much work. This paper takes one such approach, Specific and Relevant Feature Attribution (SARFA), which has previously been proposed for a variety of game settings, and analyzes it specifically for the game of chess. In particular, we investigate differences with respect to its use with different chess engines, to different types of positions (tactical vs. positional as well as middle-game vs. endgame), and point out some of the approach's down-sides. Ultimately, we also suggest and evaluate a few improvements of the basic algorithm that are able to address some of the found shortcomings. Index Terms: Explainable AI, Chess, Machine learning. Fig. 1: SARFA Example. I. INTRODUCTION [...] neural networks [19], there is also great demand for explainable AI models in other areas of AI, including game playing. An approach that has recently been proposed specifically for games and related agent architectures is specific and relevant feature attribution (SARFA) [15]. It has been shown that it -
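The abstract above refers to perturbation-based saliency without showing the mechanics. The sketch below illustrates only the generic perturbation step, under loud assumptions: each non-king piece is removed in turn and the change in an evaluation score is recorded. SARFA's own scoring is not reproduced here, and a toy material count (`material_eval`, a hypothetical stand-in) replaces an engine such as Stockfish or Leela. The board encoding and the function names are likewise invented for illustration.

```python
from typing import Callable, Dict

# Board encoding assumed for this sketch: square name -> piece letter,
# uppercase for White and lowercase for Black, e.g. {"e1": "K", "d8": "q"}.
Board = Dict[str, str]

PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 0}


def material_eval(board: Board) -> float:
    """Toy evaluator: material balance from White's point of view."""
    score = 0
    for piece in board.values():
        value = PIECE_VALUES[piece.upper()]
        score += value if piece.isupper() else -value
    return float(score)


def perturbation_saliency(
    board: Board, evaluate: Callable[[Board], float]
) -> Dict[str, float]:
    """Map each occupied non-king square to |eval(board) - eval(board without it)|."""
    base = evaluate(board)
    saliency: Dict[str, float] = {}
    for square, piece in board.items():
        if piece.upper() == "K":
            continue  # removing a king would leave an illegal position
        perturbed = {sq: p for sq, p in board.items() if sq != square}
        saliency[square] = abs(base - evaluate(perturbed))
    return saliency


if __name__ == "__main__":
    position = {"e1": "K", "e8": "k", "d1": "Q", "a7": "p"}
    print(perturbation_saliency(position, material_eval))
```

With a material-only evaluator the saliency of a piece is simply its value; passing an engine-backed `evaluate` function instead is what would make the map informative about a specific move choice.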

Chess Composer Werner 2

Studies with Stephenson. I was delighted recently to receive another new book by German chess composer Werner Keym. It is written in English and entitled Anything but Average, with the subtitle 'Chess Classics and Off-beat Problems'. The book starts with some classic games and combinations, but soon moves into the world of chess composition, covering endgame studies, directmates, helpmates, selfmates, and retroanalysis. Werner has made a careful selection from many sources and I was happy to meet again many old friends, but also delighted to encounter some new ones. In the world of chess composition, separate from the struggle that is the game, many remarkable things can happen and you will find a lot of them in this book. Among the most remarkable are those that can occur in problems involving retroanalysis. [...] would happen if it were Black to move. Here 1...Kg2 2 Rxf2+ Kh1 3 B any#; 2...Kg3/Kxh3 3 Rb3# is clear enough, but after 1...f1Q! there is no mate in a further two moves as 2 Ba4? is refuted by 2...Qxb1 3 Bc6+ Qe4+!. So, how to provide for this good defence? [...] 1...Kg2 2 Rxf2+ Kh1 3 B any#, or 2...Kg3/Kxh3 3 Rb3#. Now, for you to solve, is a selfmate by one of the best contemporary composers in the genre, Andrei Selivanov of Russia. In a selfmate White is to play and force a reluctant Black to mate him. In this case the mate has to happen on or before Black's fifth move. Andrei Selivanov, 1st/2nd Prize, Uralski Problemist, 2000.