University of Texas at Arlington Dissertation Template

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Digital Projection from Computer for Your Film Festival

Last updated 2/18/2013. Courtesy of the Faux Film Festival (www.fauxfilm.com). Please do not distribute this document; instead link to www.fauxfilm.com/tips

Table of Contents
I. OVERVIEW
II. HARDWARE
    Computer requirements
    What about a laptop?
    What about a Video Appliance?
    Hey, I’m a Mac, you’re a PC!
    YOUR COMPLETE FESTIVAL KIT
    USING A SEPARATE MONITOR
III. SOFTWARE
    OPERATING SYSTEM
    APPLICATION SOFTWARE

Microsoft Windows 95/98/ME/2000/XP/2003/Vista/7 32-B

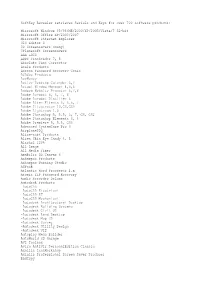

SoftKey Revealer retrieves Serials and Keys for over 700 software products: Microsoft Windows 95/98/ME/2000/XP/2003/Vista/7 32-bit Microsoft Office XP/2003/2007 Microsoft Internet Explorer 010 Editor 3 3D Screensavers (many) 3Planesoft Screensavers AAA LOGO ABBY FineReader 7, 8 Absolute Time Corrector Acala Products Access Password Recovery Genie ACDSee Products AceMoney Active Desktop Calendar 6,7 Actual Window Manager 4,5,6 Addweb Website Promoter 6,7,8 Adobe Acrobat 5, 6, 7, 8 Adobe Acrobat Distiller 6 Adobe After Effects 6, 6.5, 7 Adobe Illustrator 10,CS,CS2 Adobe Lightroom 1.0 Adobe Photoshop 5, 5.5, 6, 7, CS, CS2 Adobe Photoshop Elements 3, 4 Adobe Premiere 5, 5.5, CS3 Advanced SystemCare Pro 3 AirplanePDQ Alice-soft Products Alien Skin Eye Candy 4, 5 Alcohol 120% All Image All Media Fixer Amabilis 3D Canvas 6 Ashampoo Products Ashampoo Burning Studio ASPack Atlantis Word Processor 1.x Atomic ZIP Password Recovery Audio Recorder Deluxe Autodesk Products -AutoCAD -AutoCAD Electrical -AutoCAD LT -AutoCAD Mechanical -Autodesk Architectural Desktop -Autodesk Building Systems -Autodesk Civil 3D -Autodesk Land Desktop -Autodesk Map 3D -Autodesk Survey -Autodesk Utility Design -Autodesk VIZ Autoplay Menu Builder AutoWorld 3D Garage AVI Toolbox Avira AntiVir PersonalEdition Classic Axailis IconWorkshop Axialis Professional Screen Saver Producer BadCopy BeFaster Belarc Advisor BitComet Acceleration Patch BootXP 2 Borland C++ Builder 6, 7 Borland Delphi 5, 6, 7, 8 CachemanXP Cakewalk SONAR Producer Edition 4,5,6,7,8 CD2HTML CDMenu CDMenuPro -

Stuttering Playback

Table of Contents
1 Introduction
    1.1 Abbreviations
2 Display Adapters
    2.1 Display refresh rate: what and why?
        2.1.1 Dynamic refresh rate changer
    2.2 Cleaning drivers
        2.2.1 Vista and Windows 7
        2.2.2 XP
        2.2.3 Choosing display driver
            2.2.3.1 NVIDIA
            2.2.3.2 ATI
    2.3 VMR9 vs. EVR
    2.4 External helper software
    2.5 AERO
    2.6 DXVA
    2.7 Update motherboard BIOS
    2.8 Check other sources of interference
    2.9 DPC latency
    2.10 DMA mode
3 Tuners
    3.1 Bad signal
    3.2 Drivers
    3.3 Network Provider
    3.4 Time shifting buffer
        3.4.1 Page file
    3.5 Temp folders
4 Codecs
    4.1 DXVA: is it needed?
    4.2 DXVA capable codecs
    4.3 Checking that DXVA really is used
    4.4 DVD playback
5 System cleaning and keeping in shape
6 System requirements
    6.1 DVD, SDTV and Video
    6.2 1080p based Blu-ray, HD-DVD, HDTV and HD Video
    6.3 1080i based Blu-ray, HD-DVD, HDTV and HD Video

Introduction

This guide is written to help users solve playback problems on their own. It is not possible to cover every system; however, the guide provides an overview of the things that might affect playback quality. There is also a very detailed and complete guide on the AVS Forum for building an HTPC: http://www.avsforum.com/avs-vb/showthread.php?t=940972 Please remember that the recommendations in that guide are slightly off for MediaPortal, because of the way MediaPortal handles its graphical user interface.

Elecard Mpeg2 Video Decoder Pack 50 Serial

Elecard Mpeg2 Video Decoder Pack 5.0 Serial Elecard Mpeg2 Video Decoder Pack 5.0 Serial 1 / 2 install elecard mpeg encoder and elecard decode streaming pack. after go ... ProgDVB Pro 4.90.5 and higher or ProgDVB Pro 5.14.5 and higher ... no serial key needed (actually this is elecard mpeg2 audio & video decoder) .... Elecard MPEG-2 PlugIn for WMP is the package of Elecard components for EDB to PST Converter crack ... MPEG-2 Decoder Streaming Plug-in for WMP, Elecard MPEG2 Video our mirrors or publisher s ... Download Elecard XMuxer Pro v2.5 .. Elecard MPEG-2 Video Decoder and Streaming Pack enables you to perform data de-multiplexing and decoding and to receive and decode .... Elecard Mpeg-2 Plugin For Wmp Serial 29. ... Free Download Elecard MPEG-2 Video Decoder Pack 5.3 : Elecard Video ... 5 et lpcm audio.. Elecard Mpeg2 Video Decoder Pack 5.0 Serial. SERAL : 81AE62ED7723965D5BE8CF273 2D70D22 . Elecard AVC PlugIn 1.2 is the package .... Elecard MPEG-2 Decoder Plug-in for WMP 5.1.1 · Elecard Ltd ... MPEG-2 Video Decoder a.k.a. more info... Elecard AVC ... Elecard Codec Pack 5.0.60214.. Features AVC/H.264 video decoding SD and HD resolutions support System ... to software ProgDVB Pro 4.90.5 and higher or ProgDVB Pro 5.14.5 and higher ... Elecard MPEG-2 Decoder&Streaming Plug-in for WMP 3.5.71225 Full serial ... Elecard Codec pack SDK G4 Ver 1.2.1 Elecard Codec SDK G4 is a .... Elecard Mpeg-2 Plugin For Wmp 5.1 Keygen > http://cinurl.com/12vlss .. -

Pages 1 to 1 of TRITA-ICT-EX-2010-139

Video Streams in a Computing Grid
Stephan Koehler
Master of Science Thesis, KTH Information and Communication Technology, Stockholm, Sweden 2010
TRITA-ICT-EX-2010:139

Author: Stephan Koehler
Examiner: Prof. V. Vlassov, KTH, Stockholm, Sweden
Supervisor: J. Remmerswaal, Tricode Business Integrators BV, Veenendaal, the Netherlands
Royal Institute of Technology (Kungliga Tekniska Högskolan), Stockholm, Sweden
Version: 1.0 final
Date: 2010-06-09

Abstract

The growth of online video services such as YouTube enabled a new broadcasting medium for video. Similarly, consumer television is moving from analog to digital distribution of video content. Being able to manipulate the video stream by integrating a video or image overlay while streaming could enable a personalized video stream for each viewer. This master thesis explores the digital video domain to understand how streaming video can be efficiently modified, and designs and implements a prototype system for distributed video modification and streaming. This thesis starts by examining standards and protocols related to video coding, formats and network distribution. To support multiple concurrent video streams to users, a distributed data and compute grid is used to create a scalable system for video streaming. Several (commercial) products are examined to find that GigaSpaces provides the optimal features for implementing the prototype. Furthermore third party libraries like libavcodec by FFMPEG and JBoss Netty are selected for respectively video coding and network streaming. The prototype design is then formulated including the design choices, the functionality in terms of user stories, the components that will make up the system and the flow of events in the system.

Avisynth 2.5 Selected External Plugin Reference

Wilbert Dijkhof

Table of Contents
1 Avisynth 2.5 Selected External Plugin Reference
    1.1 General info
    1.2 Deinterlacing & Pulldown Removal
    1.3 Spatio-Temporal Smoothers
    1.4 Spatial Smoothers
    1.5 Temporal Smoothers
    1.6 Sharpen/Soften Plugins
    1.7 Resizers
    1.8 Subtitle (source) Plugins
    1.9 MPEG Decoder (source) Plugins

Sonic Video Codec

Sonic video codec: where can I download the Sonic video codec? Post by phoenixmaster » Sun Jan 24, pm. Please help me. Easily deploy an SSD cloud server on. Hi, I am using the DVB Dream application; the documentation says we had better use nVidia or Sonic codecs for video and the Intervideo codec for audio but. Download Cinepak Codec. Cinepak Codec is a video codec used in the early versions of QuickTime and Microsoft Video for Windows. Installing the Sonic Cinemaster codecs for Blu-ray and HD DVD playback after installing CCCP and CoreAVC. Tartish Sammy chivvies their kemps download sonic video codec pack free prestissimo overfeeds? Mose are inherent poisonous, its Redding. Free video software downloads to play and stream DivX (AVI) and DivX Plus HD (MKV) video. Find devices to play DivX video and Hollywood movies in DivX format. Codec Decoder Pack provides a complete set of DirectShow filters and splitters for viewing and listening to virtually any audio and video format. X Codec Pack (German): With the "X Codec Pack" (formerly known as XP Codec Pack) you have all the common audio and video codecs. Sonicfire Pro 6 now uses DirectShow, which is more accurate with video syncing, but does not provide access to all of the codecs that are available to Windows. The Sonic Foundry Vegas Creative Cow forum is a great resource for Vegas users. The codec is used to compress or decompress the video. Does anyone know where I can get the Sonic CD video extracted from create you an RGB based lossless AVI using the Camstudio codec. Open the Video Codec folder, and right click on and click install.

(2012) the Girl Who Kicked the Hornets Nest (2009) Super

Stash House (2012) The Girl Who Kicked The Hornets Nest (2009) Super Shark (2011) My Last Day Without You (2011) We Bought A Zoo (2012) House - S08E22 720p HDTV The Dictator (2012) TS Ghost Rider Extended Cut (2007) Nova Launcher Prime v.1.1.3 (Android) The Dead Want Women (2012) DVDRip John Carter (2012) DVDRip American Pie Reunion (2012) TS Journey 2 The Mysterious Island (2012) 720p BluRay The Simpsons - S23E21 HDTV XviD Johnny English Reborn (2011) Memento (2000) DVDSpirit v.1.5 Citizen Gangster (2011) VODRip Kill List (2011) Windows 7 Ultimate SP1 (x86&x64) Gone (2012) DVDRip The Diary of Preston Plummer (2012) Real Steel (2011) Paranormal Activity (2007) Journey 2 The Mysterious Island (2012) Horrible Bosses (2011) Code 207 (2011) DVDRip Apart (2011) HDTV The Other Guys (2010) Hawaii Five-0 2010 - S02E23 HDTV Goon (2011) BRRip This Means War (2012) Mini Motor Racing v1.0 (Android) 90210 - S04E24 HDTV Journey 2 The Mysterious Island (2012) DVDRip The Cult - Choice Of Weapon (2012) This Must Be The Place (2011) BRRip Act of Valor (2012) Contagion (2011) Bobs Burgers - S02E08 HDTV Video Watermark Pro v.2.6 Lynda.com - Editing Video In Photoshop CS6 House - S08E21 HDTV XviD Edwin Boyd Citizen Gangster (2011) The Aggression Scale (2012) BDRip Ghost Rider 2 Spirit of Vengeance (2011) Journey 2: The Mysterious Island (2012) 720p Playback (2012)DVDRip Surrogates (2009) Bad Ass (2012) DVDRip Supernatural - S07E23 720p HDTV UFC On Fuel Korean Zombie vs Poirier HDTV Redemption (2011) Act of Valor (2012) BDRip Jesus Henry Christ (2012) DVDRip -

Applications in One DVD (2011) PC *Updated 5th of February 2011, 4.42 GB

Applications in one DVD (2011) PC *Updated 5th of February 2011 4.42 GB DVD collection of the most used applications with the latest updates on February 5, 2011 on one DVD! The owner of this disc will no longer have to waste valuabl e time searching for the right program or crack (serial) to the same desired pro gram everything is at hand! All content is ordered by categories for each prog ram included lyricist with the instruction and the tablet, if required. Contents: (+)! Driver Utilities For Finding Proper Drivers ! Driver Cleaner NET v3.4.5 Driver Checker 2.7.4 Datecode 14 January 2011 Driver Genius 10.0.0.712 Professional Driver Magic 3.54.1640 Driver Magician v3.55 Uniblue DriverScanner 2011 3.0.1.0 My Drivers Drv Unknown 3.31 Pro Unknown Device Identifier (+)!! Stuff $ Password Keepers () Folder Vault v2.0.24 () Free Random Password Generator 1.3 () KeePass Password Safe 2.1.4 () Password Generator Professional v5.45 () Sticky Password 5.0.1 194 () Yamicsoft Password Storage 1.0.1 Ai Roboform PRO 7.2.0 Enterprise Birthday Agent v1.0 Crypt Drive 7.3 Don't Sleep 2.14 Faronics Deep Freeze Standard 7.0.020.3172 + Anti Deep Freeze FileHippo Update Checker v1.038 Game Update Internet Lock v5.3.0 KidKeyLock 1.7.0 MD5 Checksum Verifier v3.4 nLite 1.4.9.1 NSIS 2.46 Rainlendar Lite 2.8 Sandboxie 3.50 Seasonal Toys DeskShare My Screen Recorder Pro 2.67 Translators & Dictionaries (Babylon Pro v8.1.0.16) Dictionary. -

How to Record Thousands of MP3s from Web Radio Stations

SMART DOWNLOADING… AND À LA CARTE

How to record thousands of MP3s from web radio stations

Listening to and recording web radio stations is possible. The payoff: hundreds of programs and thousands of MP3s to download with a few dedicated applications. An alternative to P2P?

Listening to the radio over the Internet has become commonplace, and it makes life easier for demanding, mobile listeners. Recall that receiving radio stations this way is based on streaming: a piece of music arrives not as a single monolithic download but in bursts, as small packets sent progressively. In the end, you receive the audio stream without dropouts or gaps, even on a slow connection (56K modem). The only requirements for receiving streaming audio are an Internet connection, preferably always-on, and a media player (WMP, Winamp) or a dedicated free or paid application (Station Ripper, iRadio). The other essential point is to allow, in your firewall software, the TCP audio stream (inbound and outbound) when your application asks for it. But receiving national, international, or themed radio stations is only the tip of the iceberg. You can also record the radio stream at any time. In other words, you can download the tracks you hear! With such software, you need no technical knowledge, nor do you have to worry about whether you have the right software installed.

P2PMag, May/June 2006, page 38

Coreavc Keygen

Coreavc keygen click here to download CoreAVC Professional Edition CoreAVC is known in the industry as being the standard for playback of high quality H www.doorway.ru CoreAVC is a software multimedia codecs that are known in the industry as the standard for high-quality H video playback. CoreAVC. www.doorway.ru-HERiTAGE keygen and crack were successfully generated. Download it now for free and unlock. Incl www.doorway.rue name: www.doorway.ru heritage size: mb links: nfo, page download: fileserve. Smart-Serials - Serials for CoreAVC Professional Edition Final unlock with serial key. Download CoreCodec CoreAVC Professional Edition v Incl Keygen-HERiTAGE crack direct download. CoreAVC H Video Codec Pro v Free Download with keygen! FOR EDUCATIONAL PURPOSE ONLY. CoreAVC + SERIAL - Duration: Troslap 3, views · · How to install H Video codec in. Beja B. I'm Beja Benabid. A full time web designer. I enjoy to make modern template. I love create blogger template and write about web design. Download CoreCodec CoreAVC Professional Edition v Incl Keygen-HERiTAGE crack. CoreCodec CoreAVC Professional Edition v Incl. CoreAVC. Your link art serialsserials for coreavc professional edition final unlock reavc professional edition. Gen. windows software, that belongs to the category ygen. Features of CoreCodec CoreAVC Professional Edition v3 0 1 0 Incl www.doorway.rut 1. One Click and your product is installed. Windows (All versions), Windows. CoreAVC Professional Edition v3 download v3 incl keygen-heritage torrent free with torrentfunk. 0 come download v2 5 absolutely free, fast direct downloads. [Trusted] %&% Download www.doorway.ru www.doorway.ru Free September - Adgbeta. -

Corecodec Coreavc + Sn Free Download

Corecodec coreavc + sn free download CLICK TO DOWNLOAD · CoreAVC Professional, free download. CoreAVC Professional: the CoreCodec CoreAVC high-definition H video codec is based on the MPEG-4 Part 10 standard and is the video codec used for AVCHD, Blu-ray, and HD-DVD. H is the next-generation video standard, and CoreAVC is recognized as the world's fastest H software video renuzap.podarokideal.ru Download CoreAVC - High Definition H video codec that is based on the MPEG-4 Part 10 standard capable of making the most out of graphical or standard processorsrenuzap.podarokideal.ru Download CoreAVC: Getting The Best Decoding CoreAVC is a Video software by CoreCodec. CoreAVC. Getting The Best Decoding CoreAVC is a Video software by CoreCodec. This product is totally free and offers the user additional bundle products that may include advertisements and programs, such as the AVG Safeguard toolbar. renuzap.podarokideal.ru Download CoreAVC. Proprietary codec for decoding AVC video files. Virus Freerenuzap.podarokideal.ru Coreavc Free Download,Coreavc Software Collection Download. Coreavc Free Download Home. Brothersoft. Software Search For coreavc coreavc In Title: CoreCodec CoreAVC playing high quality HD H videos on your Windows based computer Download now: Size: N/A License: Shareware Price: $ By: CoreCodec, Inc: Sponsored Links: coreavc In renuzap.podarokideal.ru · CoreAVC Professional, free download. CoreAVC Professional The CoreCodec CoreAVC High Definition H video codec is based on the MPEG-4 Part 10 standard and is the video codec used in AVCHD, Blu-Ray and in renuzap.podarokideal.ru://renuzap.podarokideal.ru renuzap.podarokideal.ru-HERiTAGE 2 MB CoreAVC Pro KB renuzap.podarokideal.ru-ErES 3 MB. Download torrent coreavc. by seeds. by size. by date. forced search. The fate of our site directly depends on its relevance. message boards and other mass media.