Why We Need a Public Infrastructure for Data on Open Access

Recommended publications

Piracy of Scientific Papers in Latin America: an Analysis of Sci-Hub Usage Data

Developing Latin America. Juan D. Machin-Mastromatteo, Alejandro Uribe-Tirado, Maria E. Romero-Ortiz. This article was originally published as: Machin-Mastromatteo, J.D., Uribe-Tirado, A., and Romero-Ortiz, M. E. (2016). Piracy of scientific papers in Latin America: An analysis of Sci-Hub usage data. Information Development, 32(5), 1806–1814. http://dx.doi.org/10.1177/0266666916671080

Abstract: Sci-Hub hosts pirated copies of 51 million scientific papers from commercial publishers. This article presents the site's characteristics, criticizes the perception of it as a de facto component of the Open Access movement, replicates an analysis published in Science using the site's available usage data, limited to Latin America, and presents the implications of this site for information professionals, universities and libraries.

Keywords: Sci-Hub, piracy, open access, scientific articles, academic databases, serials crisis

Scientific articles are vital for students, professors and researchers in universities, research centers and other knowledge institutions worldwide. When academic publishing started, academies, institutions and professional associations gathered articles, assessed their quality, collected them in journals, and printed and distributed copies, with the added difficulty of not having digital technologies. Producing journals became unsustainable for some professional societies, so commercial scientific publishers started appearing and assumed printing, sales and distribution on their behalf, while academics retained the intellectual tasks. Elsevier, among the first publishers, emerged to cover operating costs and profit from sales; it is now part of an industry that grew from the process of scientific communication, a 10 billion US dollar business (Murphy, 2016).

Open Access Initiatives and Networking in the Global South Iryna Kuchma

This short study highlights the impact of open access in the Global South. Featuring collaborative open access initiatives in Algeria, Kenya, Myanmar, Nigeria, Nepal, Palestine, Tanzania, Uganda and Latin American countries, it showcases success and describes the challenges that we still face. It also questions a notion of a journal article – perhaps already becoming obsolete – and discusses the growing preprints initiatives to speed up the availability of research results. The value of regional journal and repository networks enhancing open access content in Europe and Latin America is also discussed as well as the impact human networks make in the Global South.

Health research dissemination

Dr. Bessie Mukami is a general physician at Embu General Provincial Hospital, a relatively large teaching hospital in Embu, a town located approximately 120 kilometres northeast of Nairobi towards Mount Kenya. Embu serves as the provincial headquarters of Eastern Province in Kenya and is also the county headquarters of Embu County. “You have, maybe, one doctor to ten thousand people”, says Dr. Mukami. And as she speaks, her fingers click through pages of open access medical journals on a laptop. Subscribing to medical journals is very expensive, and it can be difficult for doctors to consult each other because of the long distances between hospitals. Open access is solving one of the biggest problems Dr. Mukami has: “Instead of calling other doctors for information, the information is open and available, and you search for what you really want”, she says. Dr. Gerald Nderitu, the medical superintendent at the same hospital and an experienced surgeon of 16 years, also relies on open access research output to help his patients.

Open Research Data: SNSF Monitoring Report 2017-2018

Katrin Milzow, Martin von Arx, Cornélia Sommer, Julia Cahenzli, and Lionel Perini. Open Research Data Group, Swiss National Science Foundation (SNSF). E-mail address: [email protected]. February 2020.

Abstract: The Swiss National Science Foundation (SNSF) introduced its policy on Open Research Data (ORD) in 2017. This monitoring report gives an overview of the first experiences and provides an analysis of the adherence of researchers to the ORD policy. Data collected in this report come from the applications funded in the project funding scheme, some career funding schemes, and the Sinergia programme between October 2017 and December 2018. The report provides an analysis of the plausibility of the data management plans (DMPs), the requested costs for granting access to research data and the characteristics of the mentioned data repositories.

Keywords: Open Research Data, Data Management Plan, Data sharing, Data repositories, Science Policy.

Contents
1 Introduction
2 ORD Policy and Processes
2.1 ORD Policy
2.1.1 Core Principles
2.1.2 FAIR Data Principles
2.2 Processes
2.2.1 Costs for Granting Access to Research Data
2.2.2 Assessment of the DMP
3 Data
4 Descriptive Analysis
4.1 Costs for Granting Access to Research Data
4.2 Overall Assessment of the DMPs
4.3 Detailed Assessment of the DMPs
4.4 Data Repositories
5 Conclusion
6 Challenges and Perspectives
7 References

1 Introduction

The Swiss National Science Foundation (SNSF) introduced its policy on Open Research Data (ORD) in 2017.

Strategische Und Operative Handlungsoptionen Für Wissenschaftliche Einrichtungen Zur Gestaltung Der Open-Access-Transformation

Strategische und operative Handlungsoptionen für wissenschaftliche Einrichtungen zur Gestaltung der Open-Access-Transformation (Strategic and operational options for scientific institutions in shaping the open access transformation). Dissertation for the academic degree of Doctor philosophiae (Dr. phil.), submitted to the Faculty of Philosophy of Humboldt-Universität zu Berlin by Heinz Pampel.

President of Humboldt-Universität zu Berlin: Prof. Dr.-Ing. Dr. Sabine Kunst
Dean of the Faculty of Philosophy: Prof. Dr. Gabriele Metzler
First reviewer: Prof. Dr. Peter Schirmbacher
Second reviewer: Prof. Dr. Wolfram Horstmann
Date of defense: May 2021

Table of contents (front matter): Summary (Zusammenfassung), Abstract, Acknowledgements, List of abbreviations, List of tables, List of figures

C-TEDD UTC DMP: The Data Management Plan

C-TEDD UTC DMP: the data management plan created using the DMPTool. Center for Transportation Equity, Decisions and Dollars (C-TEDD). Contact(s): Dr. Shima Hamidi; Robert Edamala, Chief Information Security Officer & Director of Information Security, University of Texas at Arlington.

Copyright information: The above plan creator(s) have agreed that others may use as much of the text of this plan as they would like in their own plans, and customize it as necessary. You do not need to credit the creators as the source of the language used, but using any of their plan's text does not imply that the creator(s) endorse, or have any relationship to, your project or proposal.

Data description

The C-TEDD UTC will require each individual researcher to submit detailed data descriptions for their individual research projects, following the guidelines below:
1. Name the data, the data collection project, or the data-producing program.
2. Describe the purpose of the research.
3. Describe the data that will be generated, in terms of nature and scale (e.g., field measurements, laboratory measurements, modeling, numerical data, image data, text sequences, audio data, video data, database, modeling, archival/textual data, interview, survey, field observation, etc.).
4. Describe methods and procedures for creating the data (e.g., simulated, observed, experimental, software, physical collections, sensors, satellite, enforcement activities, researcher-generated databases, tables, and/or spreadsheets, instrument generated digital data output such as video and images).
5. Discuss the period of time over which the data will be collected, and the frequency of update.
6. If using existing data, describe the relationship between the data you are collecting and previously collected data.

Towards a Plan(HS)S: DARIAH's Position on PlanS

On 4 September 2018, national science funders from 11 EU countries announced the launch of cOAlition S to express the collective will of making full and immediate Open Access to research publications a reality. The key principles to achieve this are articulated in their 10-point Plan S. According to the plan, from 2020, all scientific publications that result from research funded by public grants provided by participating national and European research councils and funding bodies must be published in compliant Open Access journals or on compliant Open Access platforms. To achieve this, research funders will cover the costs of publications as part of research grants. The plan has sparked intense debates from the moment of its release. In addition to the signatories, there is a large group of key stakeholders, both on European and national levels, who have expressed their support and endorsement for the principles even if they still have not formally added their signatures to it.

DARIAH fully endorses the principles of Open Access and is in favour of powerful Open Access policies aiming to accelerate the transition towards full and immediate Open Access to scientific publications within a reasonably short time. As such, we support the main ambitions set out by the plan, namely the elimination of paywalls, copyright retention, and the rejection of hybrid models of Open Access publishing. Committed to rapid realization of these aims, we consider PlanS a powerful step in returning ownership of research to a position where it is working for the good of society.

Southeastern Transportation Research, Innovation, Development & Education Center Data Management Plan

Data description

The STRIDE Center requires that PIs, for all funded projects, submit detailed data descriptions upon completion of the research. This requirement is included in the scope of work of each project. This deliverable should include the following elements:
1. A list of all data obtained, along with the data producing program, if applicable.
2. Describe the purpose of the research.
3. Describe the data your project will generate in terms of nature and scale (e.g., numerical data, image data, text sequences, video, audio, database, modeling data, source code, etc.).
4. Describe methods for creating the data (e.g., simulated; observed; experimental; software; physical collections; sensors; satellite; enforcement activities; researcher-generated databases, tables, and/or spreadsheets; instrument generated digital data output such as images and video; etc.).
5. Discuss the period of time data were collected and the frequency of any updates, if applicable.
6. If the project used existing data, describe the relationship between the data collected under the project and previously collected data.
7. List potential users of the data.
8. Discuss the potential value that the data generated by your project will have over the long-term for the STRIDE Center and for the public.
9. If you request permission not to make data publicly accessible, explain the rationale for lack of public access.
10. Indicate who will be responsible for managing the data at the project level.

The STRIDE Center's research coordinator will monitor all research project final reports to ensure they have addressed all of the above questions.
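The ten elements of the STRIDE data description can be read as a completeness checklist. The following is only a minimal sketch of that idea in Python: the field names and the `missing_elements` helper are hypothetical and do not come from the STRIDE plan, which prescribes no machine-readable format in this excerpt. Elements 6 and 9 are treated as conditional because the text introduces them with "if".

```python
# Hypothetical keys, one per element of the data description (order follows the list).
REQUIRED_ELEMENTS = [
    "data_list",                     # 1. all data obtained
    "research_purpose",              # 2. purpose of the research
    "data_nature_and_scale",         # 3. nature and scale of the data
    "creation_methods",              # 4. methods for creating the data
    "collection_period",             # 5. collection period and update frequency
    "relation_to_existing_data",     # 6. only if existing data were used
    "potential_users",               # 7. potential users
    "long_term_value",               # 8. long-term value
    "access_restriction_rationale",  # 9. only if public access is not granted
    "data_manager",                  # 10. who manages the data
]

# Assumption: these two elements may legitimately be left empty.
CONDITIONAL = {"relation_to_existing_data", "access_restriction_rationale"}


def missing_elements(description):
    """Return the unconditional elements that are absent or blank, in list order."""
    missing = []
    for key in REQUIRED_ELEMENTS:
        if key in CONDITIONAL:
            continue
        value = description.get(key)
        if value is None or not str(value).strip():
            missing.append(key)
    return missing


draft = {"data_list": "traffic counts", "research_purpose": "   "}
print(missing_elements(draft))
```

A coordinator reviewing final reports could run such a check before reading a description in full; whether a conditional element applies still needs human judgment.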

Repository Solutions

Repository Solutions. Technology Watch Report 1 (AP4.5), March 2020.
Version: 1.0
Date: 31.03.2020
Authors: Denis Arnold (IDS) / Bernhard Fisseni (IDS) / Felix Helfer (ULe) / Stefan Buddenbohm (SUB) / Peter Kiraly (GWDG)
Project: CLARIAH-DE
Funder: Bundesministerium für Bildung und Forschung (German Federal Ministry of Education and Research)
Funding codes: 01UG1910A to I
Duration: 01.03.2019 to 31.03.2021

Table of Contents
Introduction
Requirements & Specifications
Comparative List of Repository Systems
3.1 DSpace
3.2 Fedora
3.3 Islandora and other Fedora branchings
3.4 Haplo
3.5 Invenio
3.6 MyCoRe
3.7 EPrints
3.8 Dataverse
Discussion
Sources
Appendix

1. Introduction

This is the first of three reports composing the technology watch, which is in turn the subject of CLARIAH-DE AP4.5. The aim of all three reports is to give a detailed overview of technological developments relevant to the project and its partners, and offer recommendations concerning their adaptation within CLARIAH-DE. CLARIAH-DE is the merger of the two established German research infrastructures CLARIN-D and DARIAH-DE. An important task within this merger is the evaluation and – where possible – integration of infrastructure components or services. In parallel with this report, an evaluation of current PID solutions is being prepared. This report focuses on repository solutions. Digital research infrastructures make up the core of the project, so the storage, management and dissemination of research data form an essential task in this environment, and digital repositories provide the tools to fulfill it.

Self-Archiving in Open Access Institutional Repositories: Whose Court Is the Ball In?

International Journal of Information Research, Vol. 1 No. 1, September 2011. SELF-ARCHIVING IN OPEN ACCESS INSTITUTIONAL REPOSITORIES: WHOSE COURT IS THE BALL IN? Rupak Chakravarty, Assistant Professor, Department of Library and Information Science, Panjab University, Chandigarh, India.

ABSTRACT: States that Open Access (OA) is powerful. As the open access movement gathers momentum, it is becoming more and more powerful. It has the potential to liberate knowledge from the monopoly of the publishers to a great extent. But are we serious in our efforts to support this movement? What efforts are we making to make knowledge available to all and sundry without any financial obligation? Aims to raise such issues and provide facts which can guide institutional authorities to adopt author-friendly policies and provide a suitable platform to make authors better contributors to the open access movement. Provides a true picture of the current status of archiving in repositories and establishes the fact that the ball is now very much in the author's court.

KEY WORDS: Open Access, Self-Archiving, Institutional Repositories, SHERPA/RoMEO

Open Access

Open access calls for the free availability of scholarly literature on the Internet. It has been defined by various organizations and individuals whose writings and efforts have a great impact on the open access movement. Peter Suber (2007) has defined open access as “immediate, free and unrestricted online access to digital scholarly material primarily peer-reviewed research articles in journals”. According to Swan (2005), the Open Access research literature is composed of free, online copies of peer-reviewed journal articles and conference papers as well as technical reports, theses and working papers.

Open Access: What We Can Learn from Articles Published in Geochemistry Journals in 2018 and 2019

Short communication to be submitted to Results in Geochemistry (not peer reviewed). Open Access: what we can learn from articles published in geochemistry journals in 2018 and 2019.

Olivier Pourret (1, corresponding author: [email protected]), Dasapta Erwin Irawan (2), Jonathan P. Tennant (3), Andrew Hursthouse (4), Eric D. van Hullebusch (5)
1. UniLaSalle, AGHYLE, 19 rue Pierre Waguet, Beauvais, France
2. Faculty of Earth Sciences and Technology, Institut Teknologi Bandung, Bandung, Indonesia
3. Institute for Globally Distributed Open Research and Education, UK
4. School of Computing, Engineering & Physical Sciences, University of the West of Scotland, Paisley PA1 2BE, UK
5. Université de Paris, Institut de Physique du Globe de Paris, CNRS, Paris, France

Abstract: In this short communication, we look at Open Access (OA) practices in geochemistry based on articles published in 2018 and 2019 in a list of 56 journals and whether Article Processing Charge (APC) and Journal Impact Factor (JIF) drive publication or not. More than 40% of articles in 2018-2019 were published OA, and about 70% of them in fully OA journals with a mean APC of US$ 900, whereas the remainder were published in historical hybrid journals with a higher APC of more than US$ 1,800. A good correlation is observed between the number of OA articles published in hybrid journals and JIF, whereas there is a positive relationship between the number of OA articles published in fully OA journals and APC. For OA articles published in hybrid journals (i.e. the majority of historical journals in geochemistry), it seems that the proportion of OA articles tends to increase in journals with higher JIF.
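The percentages in this abstract combine multiplicatively, which is easy to misread. The short Python sketch below works through the arithmetic under stated assumptions: "more than 40%" and "about 70%" are treated as exact point values, and US$ 1,800 is used as the hybrid APC although the abstract says "more than". The resulting figures are therefore rough approximations derived here, not results reported by the authors.

```python
from fractions import Fraction

# Point values assumed from the abstract's approximate wording.
oa_share = Fraction(40, 100)            # share of all articles published OA
fully_oa_within_oa = Fraction(70, 100)  # share of OA articles in fully OA journals
apc_fully_oa = 900                      # mean APC in US$, fully OA journals
apc_hybrid = 1800                       # APC in US$, hybrid journals (a floor)

# Shares expressed relative to ALL articles, not just OA articles.
fully_oa_share = oa_share * fully_oa_within_oa
hybrid_oa_share = oa_share - fully_oa_share

# APC averaged over OA articles, weighted by where they were published.
blended_apc = (fully_oa_within_oa * apc_fully_oa
               + (1 - fully_oa_within_oa) * apc_hybrid)

print(f"fully OA journals: {float(fully_oa_share):.0%} of all articles")
print(f"OA in hybrid journals: {float(hybrid_oa_share):.0%} of all articles")
print(f"blended APC per OA article: about US$ {float(blended_apc):.0f}")
```

Exact fractions are used instead of floats so that the intermediate shares (7/25 and 3/25) carry no rounding error before formatting.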

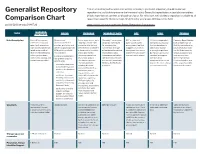

Generalist Repository Comparison Chart

This chart is designed to assist researchers in finding a generalist repository should no domain repository be available to preserve their research data. Generalist repositories accept data regardless of data type, format, content, or disciplinary focus. For this chart, we included a repository available to all researchers specific to clinical trials (Vivli) to bring awareness to those in this field. doi: 10.5281/zenodo.3946720 https://fairsharing.org/collection/GeneralRepositoryComparison

Topic: Brief Description
Harvard Dataverse: Harvard Dataverse is a free data repository open to all researchers from any discipline, both inside and outside of the Harvard community, where you can share, archive, cite, access, and explore research data.
Dryad: Open-source, community-led data curation, publishing, and preservation platform for CC0 publicly available research data. Dryad is an independent non-profit that works ...
Figshare: A free, open access, data repository where users can make all outputs of their research available in a discoverable, reusable, and citable manner. Users can upload files of any type and are ...
Mendeley Data: Mendeley Data is a free repository specialized for research data. Search more than 20+ million datasets indexed from 1000s of data repositories and collect and share datasets with ...
OSF: OSF is a free and open source project management tool that supports researchers throughout their entire project lifecycle in open science best practices.
Vivli: Vivli is an independent, non-profit organization that has developed a global data-sharing and analytics platform. Our focus is on sharing individual participant-level data from ...
Zenodo: Powering Open Science, built on Open Source. Built by researchers for researchers. Run from the CERN data centre, whose purpose is long term preservation for the High Energy ...

The Content of Sci-Hub and Its Usage [Version 1; Peer Review: 2

F1000Research 2017, 6:541. Last updated: 27 SEP 2021. RESEARCH ARTICLE. Looking into Pandora's Box: The Content of Sci-Hub and its Usage [version 1; peer review: 2 approved, 2 approved with reservations]. Bastian Greshake, Institute of Cell Biology and Neuroscience, Goethe University Frankfurt, Frankfurt, Germany.

First published: 21 Apr 2017, 6:541, https://doi.org/10.12688/f1000research.11366.1
Latest published: 21 Apr 2017, 6:541, https://doi.org/10.12688/f1000research.11366.1

Abstract: Despite the growth of Open Access, potentially illegally circumventing paywalls to access scholarly publications is becoming a more mainstream phenomenon. The web service Sci-Hub is amongst the biggest facilitators of this, offering free access to around 62 million publications. So far it is not well studied how and why its users are accessing publications through Sci-Hub. By utilizing the recently released corpus of Sci-Hub and comparing it to the data of ~28 million downloads done through the service, this study tries to address some of these questions. The comparative analysis shows that both the usage and complete corpus is largely made up of recently published articles, with users disproportionately favoring newer articles and 35% of downloaded articles being published after 2013. These results hint that embargo periods before publications become Open Access are frequently circumnavigated using Guerilla Open Access approaches like Sci-Hub.

Open Peer Review, invited reviewers:
1. April Hathcock, New York University, New York, USA
2. Gabriel Gardner, California State University, Long Beach, USA
Stephen McLaughlin, University of Texas at Austin, Austin, USA