Some Contributions to Smart Assistive Technologies

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications



Dreambox ONE UltraHD

Dreambox ONE UltraHD user manual. Digital satellite receiver for receiving free-to-air and Dreamcrypt™-encrypted DVB programmes. Features: 1x smartcard reader slot, 1x USB 2.0, 1x USB 3.0, twin DVB-S2x tuner, HDMI® 2.0 out, Gigabit network interface, WiFi 2.4/5 GHz, Bluetooth, Dreambox OS, Dreambox API. Foreword: Dear customer, congratulations on the purchase of your Dreambox ONE UltraHD. This manual is intended to help you connect your Dreambox correctly, learn its basic operation and get to know its many functions. Please note that the range of functions of your Dreambox is continually extended through software updates. If you have updated the software of your Dreambox or find errors in this manual, please check the download area of our homepage, www.leontechltd.com, to see whether an updated manual is available. We hope you enjoy your Dreambox ONE UltraHD. The Dreambox bears the CE mark and complies with all required EU standards. DiSEqC™ is a trademark of EUTELSAT. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. HDMI®, the HDMI logo and "High Definition Multimedia Interface" are trademarks or registered trademarks of HDMI Licensing LLC. Subject to change; errors and omissions excepted. Contents: 1 Before starting up the receiver; 1.1 Safety instructions ...

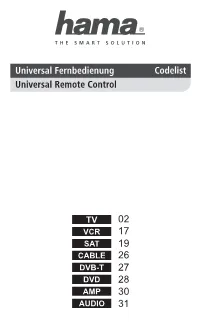

Universal Remote Code Book

Universal Remote Code Book www.hestia-france.com TV CENTURION 0051 0169 CENTURY 0000 A CGE 0129 0047 0131 0043 ACER 1484 CIMLINE 0009 0028 ACME 0013 CITY 0009 ADA 0008 CLARIVOX 0169 0037 ADC 0012 0008 CLATRONIC 0009 0011 0051 0002 0083 ADMIRAL 0019 0108 0002 0001 0047 0003 0129 0030 0043 0000 COMBITECH 0248 ADYSON 0003 CONCORDE 0009 AGAZI 0002 CONDOR 0198 0051 0083 0003 0245 AGB 0123 CONRAC 0038 1395 AIKO 0003 0009 0004 CONTEC 0003 0009 0027 0030 0029 AIWA 0184 0248 0291 CONTINENTAL EDISON 0022 0111 0036 0045 0126 AKAI 1410 0011 0086 0009 0068 0139 0046 0004 0006 0008 0051 0061 COSMEL 0009 0088 0169 0200 0133 0141 CPRTEC 0156 0069 CROSLEY 0129 0131 0000 0043 AKIBA 0011 CROWN 0009 0169 0083 0047 0051 AKURA 0169 0074 0002 0009 0011 0245 0121 0043 0071 CS ELECTRONICS 0011 0129 0003 ALBA 0028 0027 0009 0011 0003 CTC 0129 0068 0083 0169 0047 0245 CTC CLATRONIC 0014 0248 0162 0062 CYBERCOM 0177 0038 0171 0002 0009 ALBIRAL 0037 0206 0205 0207 0208 0210 ALKOS 0164 0169 0042 0044 0127 0047 ALLORGAN 0157 0026 0061 0063 0067 0068 0103 ALLSTAR 0051 0107 0115 0154 0168 0185 ALTUS 0042 0228 0209 0343 0924 0933 AMPLIVISION 0003 0248 0291 AMSTRAD 0011 0009 0068 0074 0002 CYBERMAXX 0177 0038 0171 0002 0009 0108 0071 0069 0030 0123 0206 0200 0205 0207 0208 0013 0210 0211 0169 0015 0042 ANAM 0009 0065 0109 0044 0047 0048 0049 0061 ANGLO 0009 0063 0067 0068 0087 0103 ANITECH 0009 0002 0043 0109 0107 0115 0127 0154 0155 ANSONIC 0009 0014 0168 0170 0185 0228 0229 AOC 0134 0209 0218 1005 0894 0343 ARC EN CIEL 0126 0045 0139 0924 0933 0248 0291 ARCAM 0003 CYBERTRON -

Specialized Wholesaler From: 25

Retail price list valid from 25 February 2013. Specialized wholesaler of digital satellite and terrestrial reception equipment: IMPESAT spol. s r.o., Zahradní 742/III, 339 01 KLATOVY; tel: 376360911~13, 602359996; fax: 376360914; [email protected], [email protected].

Digital satellite receivers

CS box mini HD

| ID | Model | Brief description of parameters | Price excl. VAT | Price incl. VAT |
|---|---|---|---|---|
| 114 | CS box midi HD | HD, USB PVR ready, HDMI, USB2.0, 1xCA, Fast Scan, display, USB backup (NEW) | 1 890 Kč | 2 287 Kč |
| 114a | CS box mini HD | HD, USB PVR ready, HDMI, USB2.0, 1xCA, Fast Scan, Irdeto, USB backup of channel list | 1 750 Kč | 2 118 Kč |
| 182 | CS box midi HD-Sky | Value bundle: receiver + Skylink ICE card, exchange | 2 220 Kč | 2 686 Kč |
| 182a | CS box mini HD Skylink | Value bundle: receiver + Skylink ICE card, exchange | 2 090 Kč | 2 529 Kč |
| 184 | CS box midi HD-M7 | Value bundle: receiver + M7 Standard card | 2 290 Kč | 2 771 Kč |
| 184 | CS box mini HD Skylink | Value bundle: receiver + M7 Standard card | 2 090 Kč | 2 529 Kč |

Humax SAT-HD

| ID | Model | Brief description of parameters | Price excl. VAT | Price incl. VAT |
|---|---|---|---|---|
| 1 | Humax IRHD 5100S | Satellite HD, USB PVR, HDMI, 2xUSB2, 1xCA Irdeto, 1xCI; 36-month warranty on the receiver | 2 630 Kč | 3 182 Kč |
| 247 | Humax W-Lan Stick | USB adapter 150N, WiFi dongle for the Humax 5100 IRHD receiver, data rate up to 150MBp | 490 Kč | 593 Kč |
| 538 | Humax IRHD 5100 Sky | Value bundle: receiver + Skylink card, exchange; 36-month warranty on the receiver | 3 050 Kč | 3 691 Kč |
| 647 | Humax IRHD 5100 Sky | Value bundle: receiver + M7 Standard card; 36-month warranty on the receiver | ... | ... |

Universidade Federal De Campina Grande

Universidade Federal de Campina Grande, Centro de Ciências e Tecnologia, Coordenação de Pós-Graduação em Informática. Master's dissertation: "Proposta de Suporte Computacional ao MCI" (Proposal for Computational Support for the MCI), by Daniel Scherer. Campina Grande, February 2004. Dissertation submitted to the Coordenação de Pós-Graduação em Informática of the Centro de Ciências e Tecnologia, Universidade Federal de Campina Grande, in partial fulfilment of the requirements for the degree of Master in Informatics (MSc). Advisors: Prof. Maria de Fátima Queiroz Vieira Turnell, PhD, and Prof. Francilene Procópio Garcia, DSc. Area of concentration: Computer Science. Research line: Software Engineering. Campina Grande, Paraíba, February 2004. Catalogue record: SCHERER, Daniel. S326P. Proposta de Suporte Computacional ao MCI. Dissertation (master's), Universidade Federal de Campina Grande, Centro de Ciências e Tecnologia, Coordenação de Pós-Graduação em Informática, Campina Grande, Paraíba, March 2004. 176 p., ill. Keywords: 1. Human-Machine Interface; 2. Interface Design Methods; 3. Method for Interface Design (Método para Concepção de Interfaces, MCI); 4. Formalisms; 5. Tools. CDU 519.683B. Dissertation approved on 26.02.2004 by: Prof. Maria de Fátima Q. V. Turnell, Ph.D. (advisor); Prof. Francilene Procópio Garcia, D.Sc. (advisor); Prof. Jo[illegible] Rangel Queiroz, D.Sc. (examiner); Prof. Fernando da Fonseca de Souza, Ph.D. (examiner). Campina Grande, PB. Dedication: To my father and my mother. Acknowledgements: To my parents, Rudi and Tecla Scherer, for their encouragement and support throughout this period.

German Standardization Roadmap AAL (Ambient Assisted Living)

VDE Roadmap, Version 2. German Standardization Roadmap AAL (Ambient Assisted Living): Status, Trends and Prospects for Standardization in the AAL Environment. Publisher: VDE Association for Electrical, Electronic & Information Technologies, responsible for the daily operations of the DKE German Commission for Electrical, Electronic & Information Technologies of DIN and VDE, Stresemannallee 15, 60596 Frankfurt. Phone: +49 69 6308-0. Fax: +49 69 6308-9863. E-Mail: [email protected]. Internet: www.dke.de. As of January 2014. Contents: 1 Preliminary remarks; 2 Abstract; 2.1 Framework conditions; 2.2 Specifications and standards for AAL; 2.3 Recommendations from the first German Standardization Roadmap AAL; 2.4 Recommendations from the second German Standardization Roadmap AAL; 3 Introduction and background; 3.1 Framework conditions; 3.1.1 Social framework conditions: population projections; 3.2 Legal requirements; 3.2.1 Data protection and informational self-determination; 3.2.2 Medical Devices Law (MPG); 3.3 Standardization; 3.3.1 Structure of the standardization landscape; 3.3.2 DIN, CEN and ISO; 3.3.3 DKE, CENELEC and IEC; 3.3.4 IEC/SMB/SG 5 "Ambient Assisted Living"; 3.3.5 Producing standards; 3.3.6 Preconceived ideas about standardization; 3.3.7 Using innovative AAL technology and corresponding standardization; 3.3.7.1 Standardization and specification create markets; 3.3.7.2 Developing the AAL standardization landscape; 4 Specifications and standards for AAL; 4.1 Sensors/actuators and electrical installation buses ...

Data Sheet Cheetah 600.Cdr

CHEETAH 600 USB 2.0 Wireless Adapter: 802.11b/g/n wireless USB adapter. Key features: ◆ Complies with the IEEE 802.11n, IEEE 802.11g and IEEE 802.11b standards ◆ The USB interface provides two working modes: centralized control (Infrastructure) and peer-to-peer (Ad-Hoc) ◆ Uses MIMO technology to create multiple parallel spatial channels, addressing the bandwidth-sharing problem, with a wireless transmission rate of up to 300 Mbps ◆ Uses CCA technology to avoid channel interference automatically and take full advantage of channel bundling, so that neighbours do not affect use of the wireless network ◆ Supports 64/128/152-bit WEP encryption, WPA/WPA2, WPA-PSK/WPA2-PSK and other advanced encryption and security mechanisms ◆ Provides a simple configuration and monitoring program ◆ Supports wireless roaming to ensure an efficient wireless connection ◆ Applications: Raspberry Pi, Mag250/254 IPTV STB, Skybox, TV box, DVB, Dreambox, desktop, laptop, 3D printer, navigation systems. Short features: ◆ MIMO technology ◆ 2.4 GHz frequency ◆ DVR support ◆ Gold-plated connector ◆ Stable 300 Mbps speed ◆ Secure wireless connection ◆ Works with Raspberry Pi, IPTV STB, DVB, desktop, laptop, 3D printer, navigation systems. www.ivoomi.co. Version: 1.0. Introduction: The iVOOMi CHEETAH 600 wireless USB adapter enables notebook and desktop computers with a USB interface to connect wirelessly with other clients in the network. It complies with the IEEE 802.11n standard and is backward compatible with the IEEE 802.11b/g standards. The adapter can achieve a wireless data transmission rate of up to 300 Mbps, which improves file, photo, audio and video sharing and the gaming experience over a wireless network.

Download (4MB)

Establishing trusted Machine-to-Machine communications in the Internet of Things through the use of behavioural tests. Thesis submitted in accordance with the requirements of the University of Liverpool for the degree of Doctor in Philosophy by Valerio Selis, April 2018. "Be less curious about people and more curious about ideas." (Marie Curie) Abstract: Today, the Internet of Things (IoT) is one of the most important emerging technologies. Applicable to several fields, it has the potential to strongly influence people's lives. "Things" are mostly embedded machines, and Machine-to-Machine (M2M) communications are used to exchange information. The main aspect of this type of communication is that a "thing" needs a mechanism to uniquely identify other "things" without human intervention. For this purpose, trust plays a key role. Trust can be incorporated in the smartness of "things" by using mobile "agents". From the study of the IoT ecosystem, a new threat against M2M communications has been identified. This relates to the opportunity for an attacker to employ several forged IoT-embedded machines that can be used to launch attacks. Two "things-aware" detection mechanisms have been proposed and evaluated in this work for incorporation into IoT mobile trust agents. These new mechanisms are based on observing specific thing-related behaviour obtained by using a characterisation algorithm. The first mechanism uses a range of behaviours obtained from real embedded machines, such as threshold values, to detect whether a target machine is forged. This detection mechanism is called machine emulation detection algorithm (MEDA). MEDA takes around 3 minutes to achieve a detection accuracy of 79.21%, with 44.55% of real embedded machines labelled as belonging to forged embedded machines.

Dreambook Ver 2

Dreambook for beginners with the DreamBox. For educational use only. You can find more help on the chat, IRC server: skysnolimit-irc.net #ssf #digsat. DreamBox DM7000. WWW.SSFTEAM.COM. The content was partly written by me and others at ssfteam.com/digsat.net; the rest was found on the internet and translated into Danish. If you think something here should not be included, send me (Alpha) a PM on our forum, www.ssfteam.com. Contents: Technical specifications of the DreamBox; Installing the first image on the DreamBox; Setting up networking on the DreamBox; Setting up a motorized dish on the DreamBox; Satellite configuration with more than four LNBs; Signal meter with Dreambox, wireless laptop, web interface and DreamSet; A safe way to change images; Flashwizard ver 5.2; EMU and which folders the files go in; The various ...

Virtualization Techniques to Enable Transparent Access to Peripheral Devices Across Networks

Virtualization Techniques to Enable Transparent Access to Peripheral Devices Across Networks, by Ninad Hari Ghodke. A thesis presented to the Graduate School of the University of Florida in partial fulfillment of the requirements for the degree of Master of Science. University of Florida, 2004. Copyright 2004 by Ninad Hari Ghodke. Acknowledgments: I would like to acknowledge Dr Renato Figueiredo for his direction and advice in this work and his patience in helping me write this thesis. I thank Dr Richard Newman and Dr Jose Fortes for serving on my committee and reviewing my work. I am grateful to all my friends and colleagues in the ACIS lab for their encouragement and help. Finally I would thank my family, for where and what I am now. This effort was sponsored by the National Science Foundation under grants EIA-0224442, ACI-0219925 and NSF Middleware Initiative (NMI) collaborative grant ANI-0301108. I also acknowledge a gift from VMware Corporation and a SUR grant from IBM. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of NSF, IBM, or VMware. I would like to thank Peter Dinda at Northwestern University for providing access to resources. Table of contents: Acknowledgments; List of Tables; List of Figures; Abstract; Chapter 1 Introduction; 2 Applications; 2.1 Network Computing in Grid and Utility Computing Environments; 2.2 Pervasive Computing; 3 System Call Modification; 3.1 Introduction; 3.2 Implementation; 3.2.1 Architecture ...

Hama Universal 8-In-1 Remote Control Code List

02 17 19 26 27 28 30 31 TV 01 ACCENT 0301 2551 2791 3601 ANGA 0411 4791 ACCUPHASE 2791 ANGLO 0301 3601 3831 ACEC 2741 ANITECH 0051 0301 1111 2361 ACTION 0051 0301 2091 2391 2391 2551 2581 2791 3811 3601 3831 4731 ADCOM 2711 ANSONIC 5301 5291 0301 1181 ADMIRAL 0051 0301 0621 0741 2551 2581 2631 2741 0981 1131 1571 2571 2791 3601 3881 5581 3661 3831 3881 4641 AOC 0051 2391 4261 ADVENTURA 0411 AR SYSTEM 2551 2791 3321 4761 ADVENTURI 0411 ARC EN CIEL 1981 1991 2091 2341 ADYSON 0051 2141 2391 3911 2591 2641 2681 3921 4731 ARCAM 2141 2641 3911 3921 AEA 2551 2791 4271 AEG 3531 3681 ARCELIK 5591 AGASHI 3831 3911 3921 ARCON 3531 AGB 2141 ARCTIC 5591 AGEF 2571 ARDEM 2551 2791 3541 AIKO 0301 2551 2751 2791 ARISTONA 0051 2471 2551 2741 3601 3831 3891 3911 2791 3921 ARSTIL 5591 AIM 2551 2791 3681 3721 ART TECH 0051 3841 ARTHUR-MARTIN 0621 0771 3881 AKAI 5301 5291 0051 0161 ASA 1101 1371 1381 1471 0301 0411 0561 0671 2571 2951 3881 4631 0741 0871 0991 1001 4641 ASBERG 0051 1111 1571 2551 1271 1291 1491 2141 2581 2791 2321 2361 2371 2391 ASORA 0301 3601 2551 2751 2791 3331 ASTON 4281 3541 3601 3681 3711 ASTRA 0301 2551 2791 3721 3831 3881 3891 ASTRELL 5081 3901 3911 3921 4791 5581 ASUKA 2141 2361 3831 3911 3921 AKASHI 3601 ATD 3841 AKIBA 2361 2551 2791 ATLANTIC 0051 1901 2551 2791 AKITO 2551 2791 3111 3911 AKURA 0051 0301 0881 0891 ATORI 0301 3601 2361 2551 2791 3541 ATORO 0301 3601 3831 AUCHAN 0621 0771 3881 ALARON 3911 AUDIOSONIC 0051 0301 1181 2091 ALBA 5301 5291 0051 0301 2141 2361 2551 2591 1181 1571 1681 2141 2631 2791 3331 3541 2361 2371 2491 -

D9.4 - Standardization and Concertation Activities Report | Year 1

D9.4 - Standardization and Concertation Activities Report | Year 1

Project title: Smart Age-friendly Living and Working Environment. Document ID and type: D9.4 (Report). Deliverable leader: Caritas Coimbra (CDC). Grant agreement no.: 826343 (H2020-SC1-DTH-2018-1). Due date: 31/12/2019. Contract start date: 1 January 2019. Delivery date: 07/01/2020. Contract duration: 36 months. Dissemination level: Public (PU). Project coordinator: BYTE S.A. Status and version: Final, v1.0.

Authors and contributors

| Name | Organization |
|---|---|
| Carina Dantas | CDC |
| Sofia Ortet | CDC |
| Charalampos Vassiliou | BYTE |
| Ignacio Peinado | RtF-I |
| Sonja Hansen | CAT |
| Dimitrios Amaxilatis | SPARKS |
| Paula Dougan | ECHAlliance |

Peer reviewers

| Name | Organization |
|---|---|
| Charalampos Vassiliou | BYTE |
| Rita Kovordanyi | COIN |

Revision history

| Version | Date | Author/Organisation | Modifications |
|---|---|---|---|
| 0.1 | 19.09.2019 | Carina Dantas (CDC) | ToC 1st draft |
| 0.2 | 03.10.2019 | Sofia Ortet / Carina Dantas (CDC) | ToC modifications based on partners' feedback |
| 0.3 | 24.10.2019 | Sofia Ortet / Carina Dantas (CDC) | 1st draft modifications based on partners' feedback |
| 0.4 | 08.11.2019 | All | 2nd draft of all sections |
| 0.5 | 15.11.2019 | Sofia Ortet / Carina Dantas (CDC) | Technical partners' input revision |
| 0.6 | 22.11.2019 | Charalampos Vassiliou (BYTE) | Standardisation section |
| 0.7 | 27.11.2019 | Carina Dantas (CDC) | Overall contributions and revision |
| 0.8 | 02.12.2019 | Ignacio Peinado (RtF) | Contributions to standards |
| 0.9 | 04.12.2019 | Sofia Ortet / Carina Dantas (CDC) | Final version to peer review |
| 0.10 | 12.12.2019 | Charalampos Vassiliou (BYTE) | Peer review comments and suggestions |
| 0.11 | 17.12.2019 | Sofia Ortet / Carina Dantas (CDC) | Updated version based on peer review comments |
| 1.0 | 07.01.2020 | Charalampos Vassiliou (BYTE) | Additional contributions and final adjustments |