Ultimate Tic-Tac-Toe

Recommended publications

Paper and Pencils for Everyone

(CM^2) Math Circle Lesson: Game Theory of Gomoku and m,n,k-games

Overview: Learning Objectives/Goals: to expose students to m,n,k-games and to the general history of these games throughout Asian cultures. SWBAT… play variations of m,n,k-games of varying degrees of difficulty and complexity, and identify strategies of play for each variation through pattern recognition and experience.

Materials: Paper and pencils for everyone

Vocabulary:
Game – we will create a working definition for this….
Objective – the goal or point of the game, how to win
Win – to do (achieve) what a certain game requires, beat an opponent
Diplomacy – working with other players in a game
Luck/Chance – using dice or cards or something else “random”
Strategy – techniques for winning a game

Agenda: Check in (10-15 min.), Warm-up (10-15 min.), Lesson and game (30-45 min.), Wrap-up and chill time (10 min.)

Lesson: Warm-up questions: Ask the youth these questions in small groups after the warm-up. They may discuss the answers in their groups and report back to you as the instructor. Write down the answers and compile a working definition. Try to lead the youth so that they do not name a specific game, but instead keep in mind the various games they know and use specific attributes of them to make generalizations.
· What is a game?
· Are there different types of games?
· What makes something a game and something else not a game?
· What is a board game?
· How is it different from other types of games?
· Do you always know what your opponent (other player) is doing during the game, or can they be sneaky?
· Do all games have the same qualities as the definition of a game that we just made? Why or why not?

Game history: The earliest known board games are thought to be either ‘Go’ from China (which we are about to learn a variation of), Senet and Mehen from Egypt (a country in Africa), or Mancala.

Ai12-General-Game-Playing-Pre-Handout

Introduction GDL GGP Alpha Zero Conclusion References

Artificial Intelligence 12. General Game Playing: One AI to Play All Games and Win Them All
Jana Koehler, Álvaro Torralba. Summer Term 2019. Thanks to Dr. Peter Kissmann for slide sources.
Koehler and Torralba, Artificial Intelligence, Chapter 12: GGP (53 slides)

Agenda: 1 Introduction 2 The Game Description Language (GDL) 3 Playing General Games 4 Learning Evaluation Functions: Alpha Zero 5 Conclusion

Deep Blue Versus Garry Kasparov (1997)

Games That Deep Blue Can Play: 1 Chess

Chinook Versus Marion Tinsley (1992)

Games That Chinook Can Play: 1 Checkers

Games That a General Game Player Can Play: 1 Chess 2 Checkers 3 Chinese Checkers 4 Connect Four 5 Tic-Tac-Toe 6 ...

Games That a General Game Player Can Play (Ctd.): 5 ... 6 Othello 7 Nine Men's Morris 8 15-Puzzle 9 Nim 10 Sudoku 11 Pentago 12 Blocker 13 Breakthrough 14 Lights Out 15 Amazons 16 Knightazons 17 Blocksworld 18 Zhadu 19 Pancakes 20 Quarto 21 Knight's Tour 22 n-Queens 23 Blob Wars 24 Bomberman (simplified) 25 Catch a Mouse 26 Chomp 27 Gomoku 28 Hex 29 Cubicup 30 ..

A Scalable Neural Network Architecture for Board Games

A Scalable Neural Network Architecture for Board Games
Tom Schaul, Jürgen Schmidhuber

Abstract: This paper proposes to use Multi-dimensional Recurrent Neural Networks (MDRNNs) as a way to overcome one of the key problems in flexible-size board games: scalability. We show why this architecture is well suited to the domain and how it can be successfully trained to play those games, even without any domain-specific knowledge. We find that performance on small boards correlates well with performance on large ones, and that this property holds for networks trained by either evolution or coevolution.

I. INTRODUCTION

Games are a particularly interesting domain for studies of machine learning techniques. They form a class of clean and elegant environments, usually described by a small set of formal rules and clear success criteria, and yet they often involve highly complex strategies.

II. BACKGROUND

A. Flexible-size board games

There is a large variety of board games, many of which either have flexible board dimensions, or have rules that can be trivially adjusted to make them flexible. The most prominent of them is the game of Go, research on which has been considering board sizes between the minimum of 5x5 and the regular 19x19. The rules are simple[5], but the strategies deriving from them are highly complex. Players alternately place stones onto any of the intersections of the board, with the goal of conquering maximal territory. A player can capture a single stone or a connected group of his opponent’s stones by completely surrounding them with his own stones. A move is not legal if it leads to a

A Scalable Neural Network Architecture for Board Games

A Scalable Neural Network Architecture for Board Games
Tom Schaul, Jürgen Schmidhuber

Abstract: This paper proposes to use Multi-dimensional Recurrent Neural Networks (MDRNNs) as a way to overcome one of the key problems in flexible-size board games: scalability. We show why this architecture is well suited to the domain and how it can be successfully trained to play those games, even without any domain-specific knowledge. We find that performance on small boards correlates well with performance on large ones, and that this property holds for networks trained by either evolution or coevolution.

I. INTRODUCTION

Games are a particularly interesting domain for studies of machine learning techniques. They form a class of clean and elegant environments, usually described by a small set of formal rules, have very clear success criteria, and yet they often involve highly complex strategies.

II. BACKGROUND

A. Flexible-size board games

There is a large variety of board games, many of which either have flexible board dimensions, or have rules that can be trivially adjusted to make them flexible. The most prominent of them is the game of Go, research on which has been considering board sizes between the minimum of 5x5 and the regular 19x19. The rules are simple[4], but the strategies deriving from them are highly complex. Players alternately place stones onto any of the intersections of the board, with the goal of conquering maximal territory. A player can capture a single stone or a connected group of his opponent’s stones by completely surrounding them with his own stones. A move is not legal if it leads to a

Chapter 6 Two-Player Games

Introduction to Using Games in Education: A Guide for Teachers and Parents

Chapter 6: Two-Player Games

There are many different kinds of two-person games. You may have played a variety of these games, such as chess, checkers, backgammon, and cribbage. While all of these games are competitive, many people play them mainly for social purposes. A two-person game environment is a situation that facilitates communication and companionship.

Two major ideas are illustrated in this chapter:

1. Look ahead: learning to consider what your opponent will do as a response to a move that you are planning.
2. Computer as opponent. In essence, this makes a two-player game into a one-player game.

In addition, we will continue to explore general-purpose, high-road transferable, problem-solving strategies.

Tic-Tac-Toe

To begin, we will look at the game of tic-tac-toe (TTT). TTT is a two-player game, with players taking turns. One player is designated as X and the other as O. A turn consists of marking an unused square of a 3x3 grid with one’s mark (an X or an O). The goal is to get three of one’s mark in a file (vertical, horizontal, or diagonal). Traditionally, X is the first player. A sample game is given below.

[Figure 6.1: a sample game shown as eight boards: before the game begins, X's first move, O's first move, X's second move, O's second move, X's third move, O's third move, and X wins on X's fourth move.]
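The tic-tac-toe rules in the excerpt above (mark an unused square of a 3x3 grid; three of one's mark in a row, column, or diagonal wins) can be sketched in a few lines of Python. This is only an illustration and is not taken from the book: the board encoding (a 9-character string read row by row, with "." for an unused square) and the names `LINES`, `winner`, and `legal_moves` are assumptions made for the example.

```python
# Minimal sketch: the board is a 9-character string read row by row,
# using "X", "O", and "." for an unused square (an assumed encoding).
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winner(board):
    """Return "X" or "O" if that player has three in a line, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


def legal_moves(board):
    """Indices of the unused squares, i.e. the squares a player may mark."""
    return [i for i, mark in enumerate(board) if mark == "."]
```

Listing all eight lines explicitly keeps the check easy to verify by eye; for larger boards a run-counting approach scales better than enumerating every line.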

Gomoku-Narabe" (五 目 並 べ, "Five Points in a Row"), the Game Is Quite Ancient

Gomoku (Japanese for "five points"), sometimes called "gomoku-narabe" (五目並べ, "five points in a row"), is quite an ancient game. Its birthplace is considered to be the Yellow River Delta in China; the exact time of its origin is unknown. Scholars give different dates, the oldest of which is the 20th century BC. All this allows us to trace the history of similar games in Japan to about 100 AD. It was probably around this time that such stone games reached the islands from the mainland, presumably in 270 AD with Chinese emigrants, and there they became known under different names: Nanju, Itsutsu-ishi (old-time "five stones"), Goban, Goren ("five in a row") and Goseki ("five stones"). In the first book about the Japanese version of the game "five-in-a-row", published in Japan in 1858, the game is called Kakugo (Japanese for "five steps"). At the turn of the 17th and 18th centuries, it was already played by everyone, from old people to children.

Players take turns. Black moves first in Renju. On each move, a player places one stone of his color on the board at a point where the lines intersect. The winner is the first player to build a continuous row of five stones of the same color, horizontally, vertically or diagonally. A number of fouls (illegal moves) are defined for the player who plays Black: he may not build 3x3 or 4x4 "forks", a row of six or more stones, or any fork with a multiplicity of more than two.
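The winning condition described above, a continuous row of five stones through some point of the board, and the "row of six or more" foul for Black can be checked by counting stones outward from the last move. The sketch below is an illustration under stated assumptions, not code from the source: stones are kept in a dict mapping `(row, col)` to `"B"` or `"W"`, and the names `run_lengths`, `makes_five`, and `makes_overline` are hypothetical. It does not attempt to detect the 3x3 and 4x4 fork fouls.

```python
# Minimal sketch: stones maps (row, col) -> "B" or "W" (an assumed
# representation; empty intersections are simply absent from the dict).
DIRECTIONS = [(0, 1), (1, 0), (1, 1), (1, -1)]  # horizontal, vertical, two diagonals


def run_lengths(stones, move):
    """Lengths of the unbroken same-color lines through `move`, one per direction."""
    color = stones[move]
    lengths = []
    for dr, dc in DIRECTIONS:
        run = 1  # the stone at `move` itself
        for sign in (1, -1):  # walk both ways along the line
            r, c = move[0] + sign * dr, move[1] + sign * dc
            while stones.get((r, c)) == color:
                run += 1
                r, c = r + sign * dr, c + sign * dc
        lengths.append(run)
    return lengths


def makes_five(stones, move):
    """True if the stone at `move` is part of a row of exactly five."""
    return 5 in run_lengths(stones, move)


def makes_overline(stones, move):
    """True if the stone at `move` is part of a row of six or more."""
    return max(run_lengths(stones, move)) >= 6
```

Only the last stone placed needs to be examined, because any new row must pass through it. No board-size check is needed here, since only placed stones appear in the dict.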

Knots, Molecules, and the Universe: an Introduction to Topology

KNOTS, MOLECULES, AND THE UNIVERSE: AN INTRODUCTION TO TOPOLOGY

ERICA FLAPAN with Maia Averett, Lance Bryant, Shea Burns, Jason Callahan, Jorge Calvo, Marion Moore Campisi, David Clark, Vesta Coufal, Elizabeth Denne, Berit Givens, McKenzie Lamb, Emille Davie Lawrence, Lew Ludwig, Cornelia Van Cott, Leonard Van Wyk, Robin Wilson, Helen Wong, Andrea Young

AMERICAN MATHEMATICAL SOCIETY https://doi.org/10.1090//mbk/096

2010 Mathematics Subject Classification. Primary 57M25, 57M15, 92C40, 92E10, 92D20, 94C15.

For additional information and updates on this book, visit www.ams.org/bookpages/mbk-96

Library of Congress Cataloging-in-Publication Data
Flapan, Erica, 1956–
Knots, molecules, and the universe : an introduction to topology / Erica Flapan ; with Maia Averett [and seventeen others].
pages cm
Includes index.
ISBN 978-1-4704-2535-7 (alk. paper)
1. Topology--Textbooks. 2. Algebraic topology--Textbooks. 3. Knot theory--Textbooks. 4. Geometry--Textbooks. 5. Molecular biology--Textbooks. I. Averett, Maia. II. Title.
QA611.F45 2015
514--dc23
2015031576

Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy select pages for use in teaching or research. Permission is granted to quote brief passages from this publication in reviews, provided the customary acknowledgment of the source is given. Republication, systematic copying, or multiple reproduction of any material in this publication is permitted only under license from the American Mathematical Society. Permissions to reuse portions of AMS publication content are handled by Copyright Clearance Center’s RightsLink service.

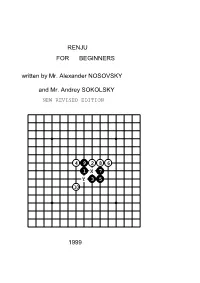

RENJU for BEGINNERS Written by Mr. Alexander NOSOVSKY and Mr

RENJU FOR BEGINNERS, written by Mr. Alexander NOSOVSKY and Mr. Andrey SOKOLSKY. NEW REVISED EDITION, 1999.

[Cover diagram: a board position with moves numbered 1 to 10 and two points marked X and Y.]

PREFACE

This excellent book on Renju for beginners, written by Mr Alexander Nosovsky and Mr Andrey Sokolsky, was for a long time the first reference book for players in the former USSR. As President of the RIF (The Renju International Federation) I am very glad that I can introduce this book to all the players around the world.

Jonkoping, January 1990
Tommy Maltell, President of RIF

PREFACE BY THE AUTHORS

The rules of this game are much simpler than the rules of many other logical games, and even children of kindergarten age can study a simple variation of it. However, Renju does not yield anything to the more popular chess and checkers in the number of combinations, the richness of its tactical and strategic ideas, or the unexpectedness and beauty of its victories. A lot of people know a simple variation of this game (called "five-in-a-row") as a fascinating way to spend their free time. In its modern variation, with simple wins prohibited for Black (the 3x3, 4x4 and overline fouls), Renju changes beyond recognition into a serious game of logic.

Alexander Nosovsky, Vice-president of RIF, former two-time World Champion in Renju by mail.
Andrey Sokolsky, former vice-president of RIF.

CONTENTS

CHAPTER 1. INTRODUCTION
1.1 The Rules of Renju
1.2 From the History and Geography of Renju
CHAPTER 2. TERMS AND DEFINITIONS
2.1 Accessories of the game

3.4 Temporal Difference Learning 6 3.5 Ways of Learning 7 3.6 Features 8 4

Training a Back-Propagation Network with Temporal Difference Learning and a database for the board game Pente

Valentijn Muijrers, 3275183. Supervisor: Gerard Vreeswijk. 7.5 ECTS. 22 January 2011.

Abstract

This paper describes how to build a back-propagation network that can play Pente by learning from database games with temporal difference learning. The relevant aspects of temporal difference learning and neural networks are described, and the method by which a neural network can learn to play Pente is explained. An experiment is described and analyzed, showing how the neural network learned the game of Pente and what kind of behavior the learning strategy produced. The best performance after training on one single game was reached with a learning rate of 0.001. After learning 300000 games from the database, the network did not perform very well. A third experiment with 4000 self-play games was also conducted and showed that the network played more toward the middle of the board than toward the sides, which suggests some form of intelligence.

First, this paper covers the aspects of temporal difference learning in neural networks and the choices made in this project during testing. Then the game of Pente is explained, and the way a neural network can learn this game is justified. At the end of the paper, the experiments of this project are explained and their results evaluated. Finally, the conclusions are discussed and analyzed.

Let's Forget About Guys Packet 2.Pdf

Let’s Forget About Guys
By Eric Chen, Michael Coates, and Jayanth Sundaresan
Packet 2

Note to players: Specific two-word term required.

1. In a 1980 issue of The Globe and Mail, Jacques Favart argued that this activity must die because it is a waste of time and stifles creativity. In the US, tests for this activity were replaced with a type of test called moves in the field. A 41-item list that is central to this activity contains descriptions like “RFO, LFO” and categories like Paragraph Brackets and Change Double Threes. Because this activity was not amenable to TV and drained enormous amounts of practice time, its weight in international competitions was gradually reduced from 60 to 20 percent before it was eliminated in 1990. Trixi Schuba (“SHOO-buh”) won gold at the 1972 Olympics over Karen Magnussen and Janet Lynn due to her mastery of this activity. This activity consists of reproducing a set of diagrams codified by the ISU and tests the athlete’s ability to execute clean turns and draw perfect circles in the ice. For the point, name this once-mandatory activity which lends its name to the sport of figure skating.

ANSWER: compulsory figures [or school figures, prompt on answers like drawing figures; do not accept or prompt on “ice skating” or “figure skating”]

2. A group of students from Prague invented a game in this city that uses a tennis ball with its felt removed. Charles de Gaulle named a soccer team from this city the honorary team of Free France for its resistance efforts during World War II.

EVOLUTIONARY AI in BOARD GAMES an Evaluation of the Performance of an Evolutionary Algorithm in Two Perfect Information Board Games with Low Branching Factor

EVOLUTIONARY AI IN BOARD GAMES
An evaluation of the performance of an evolutionary algorithm in two perfect information board games with low branching factor

Bachelor Degree Project in Informatics, G2E, 22.5 ECTS credits, Spring term 2015
Viktor Öberg. Supervisor: Joe Steinhauer. Examiner: Anders Dahlbom.

Abstract

It is well known that the branching factor of a computer-based board game affects how long a searching AI algorithm takes to search through the game tree. What is less well known is that the branching factor may have an additional effect for certain types of AI algorithms. The aim of this work is to evaluate whether the win rate of an evolutionary AI algorithm is affected by the branching factor of the board game it is applied to. To do that, an experiment is performed in which an evolutionary algorithm known as “Genetic Minimax” is evaluated on the two low-branching-factor board games Othello and Gomoku (also known as 5 in a row). Performance here is defined as how many times the algorithm manages to win against another algorithm. The results of this experiment included both promising data and data that could not be as easily interpreted. For Othello, the hypothesis about this particular evolutionary algorithm appears to be valid, while for Gomoku the results were somewhat inconclusive. For Othello, the performance of the genetic minimax algorithm was comparable to that of the alpha-beta algorithm it played against, up to and including depth 4 in the game tree.

Gomokunet: a Novel Unet-Style Network for Gomoku Zero Learning Via Exploiting Positional Information and Multiscale Features

GomokuNet: A Novel UNet-style Network for Gomoku Zero Learning via Exploiting Positional Information and Multiscale Features

Yifan Gao, Lezhou Wu, Haoyue Li
Northeastern University, Shenyang, China

Abstract: Since the tremendous success of the AlphaGo family (AlphaGo, AlphaGo Zero, and Alpha Zero), zero learning has become the baseline method for many board games, such as Gomoku and Chess. Recent works on zero learning have demonstrated that improving the performance of neural networks used in zero learning programs remains nontrivial and challenging. Considering both positional information and multiscale features, this paper presents a novel positional attention-based UNet-style model (GomokuNet) for Gomoku AI. An encoder-decoder architecture is adopted as the backbone network to guarantee the fusion of multiscale features. Positional information modules are incorporated into our model in order to further capture the location information of the board. Quantitative results obtained

... way to predict the move of Gomoku with CNN. Other works [8]–[10] look into the neural network structures of Gomoku trained by the zero learning method in the absence of expert knowledge. However, the neural network structures in the former researches are relatively simple and cannot be used to train a powerful Gomoku AI model.

Many improvements on CNN have been employed in the game of Go. Residual networks [11] used by AlphaGo contributed the first significant performance improvement. Compared with the typical CNN, AlphaGo Zero’s performance increased by 600 Elo with the help of the residual networks.