COLUMBIA Columbia University DIGITAL KNOWLEDGE VENTURES

Recommended publications

Neuregulin Induces the Expression of Transcription Factors and Myosin Heavy Chains Typical of Muscle Spindles in Cultured Human Muscle

Christian Jacobson*, David Duggan†, and Gerald Fischbach‡§

*Microarray Unit, Genetics and Genomics Section, National Institute of Arthritis and Musculoskeletal and Skin Diseases, National Institutes of Health, Bethesda, MD 20892; †Translational Genomics Research Institute (TGen), Phoenix, AZ 85004; and ‡Department of Pharmacology, Columbia University College of Physicians and Surgeons, New York, NY 10032

Contributed by Gerald Fischbach, June 29, 2004

Neuregulin (NRG) (also known as ARIA, GGF, and other names) is a heparin sulfate proteoglycan secreted into the neuromuscular junction by innervating motor and sensory neurons. An integral part of synapse formation, we have analyzed NRG-induced changes in gene expression over 48 h in primary human myotubes. We show that in addition to increasing the expression of acetylcholine receptors on the myotube surface, NRG treatment results in a transient increase of several members of the early growth response (Egr) family of transcription factors. Three Egrs, Egr1, -2, and -3, are induced within the first hour of NRG treatment, with [...]

[...] (DRG) (28), proprioceptive sensory neurons in particular, express NRG early in development (14, 29, 30). While these experiments were ongoing, reports appeared implicating NRG in the development of muscle spindles. Hippenmeyer et al. (14) showed that NRG induces the expression of early growth response 3 (Egr3), a transcription factor that is critical to the differentiation of muscle spindle fibers (31). Evidence for NRG’s role in spindle formation is re-enforced by the phenotypic similarities between conditional Erb2 knockout animals and Egr3 null mice (13, 15, 24).

Neuregulin and Erbb Receptors Play a Critical Role in Neuronal Migration

Neuron, Vol. 19, 39–50, July, 1997, Copyright 1997 by Cell Press

Carlos Rio,*‡ Heather I Rieff,*†‡ Peimin Qi,* and Gabriel Corfas*†

*Division of Neuroscience and Department of Neurology, Children's Hospital and Harvard Medical School, 300 Longwood Avenue
†Program in Neuroscience, Harvard Medical School, 220 Longwood Avenue
Boston, Massachusetts 02115

Summary

The migration of neuronal precursors along radial glial fibers is a critical step in the formation of the nervous system. In this report, we show that neuregulin–erbB receptor signaling plays a crucial role in the migration of cerebellar granule cells along radial glial fibers.

[...] the external germinal layer (EGL) and migrate inward along Bergmann radial fibers to their final destination, the internal granule cell layer (IGL) (Rakic, 1971). In rodents, this migration takes place during the first 2 postnatal weeks. Granule cell migration has been studied in vitro in both cerebellar slices and in cocultures of dissociated cerebellar granule cells and astroglia. These studies have implicated a number of molecules in steps of the migration process, for example, cell adhesion molecules, i.e., AMOG (Antonicek et al., 1987; Gloor et al. 1990) and astrotactin (Zheng et al., 1996); ion channels, i.e., NMDA receptors (Komuro and Rakic, 1993) and Ca2+ channels (Komuro and Rakic, 1992). In addition, studies of mutant mouse lines with defects in brain development have led to the identification of other molecules, including potassium channels in the case of the weaver mutant (Rakic and Sidman, 1973; Patil et al., 1995); the extracellular matrix protein reelin in the reeler mutant (Caviness and Sidman, 1973; D'Arcangelo et al., [...]

Morphology, Distribution, and Synaptic Relations of Somatostatin- and Neuropeptide Y-Immunoreactive Neurons in Rat and Monkey Neocortex

0270-6474/84/0410-2497$02.00/0
The Journal of Neuroscience, Vol. 4, No. 10, pp. 2497-2517, October 1984
Copyright © Society for Neuroscience. Printed in U.S.A.

MORPHOLOGY, DISTRIBUTION, AND SYNAPTIC RELATIONS OF SOMATOSTATIN- AND NEUROPEPTIDE Y-IMMUNOREACTIVE NEURONS IN RAT AND MONKEY NEOCORTEX

S. H. C. HENDRY,* E. G. JONES,* AND P. C. EMSON‡

*James L. O’Leary Division of Experimental Neurology and Neurological Surgery and McDonnell Center for Studies of Higher Brain Function, Washington University School of Medicine, Saint Louis, Missouri 63110 and ‡MRC Neurochemical Pharmacology Unit, Medical Research Council Centre, Hills Road, Cambridge CB2 2QH, England

Received January 6, 1984; Revised March 23, 1984; Accepted March 30, 1984

Abstract

Neurons in the monkey and rat cerebral cortex immunoreactive for somatostatin tetradecapeptide (SRIF) and for neuropeptide Y (NPY) were examined in the light and electron microscope. Neurons immunoreactive for either peptide are found in all areas of monkey cortex examined as well as throughout the rat cerebral cortex and in the subcortical white matter of both species. In monkey and rat cortex, SRIF-positive neurons are morphologically very similar to NPY-positive neurons. Of the total population of SRIF-positive and NPY-positive neurons in sensory-motor and parietal cortex of monkeys, a minimum of 24% was immunoreactive for both peptides. Most cell bodies are small (8 to 10 μm in diameter) and are present through the depth of the cortex but are densest in layers II-III, in layer VI, and in the subjacent white matter. From the cell bodies several processes commonly emerge, branch two or three times, become beaded, and extend for long distances through the cortex.

Alumni Director Cover Page.Pub

Harvard University Program in Neuroscience
History of Enrollment in The Program in Neuroscience
July 2018 (updated each July)

Nicholas Spitzer, M.D./Ph.D.
B.A., Harvard College
Entered 1966; defended May 14, 1969
Advisor: David Potter
A Physiological and Histological Investigation of the Intercellular Transfer of Small Molecules
Professor of Neurobiology, University of California at San Diego

Eric Frank, Ph.D.
B.A., Reed College
Entered 1967; defended January 17, 1972
Advisor: Edwin J. Furshpan
The Control of Facilitation at the Neuromuscular Junction of the Lobster
Professor Emeritus of Physiology, Tufts University School of Medicine

Albert Hudspeth, M.D./Ph.D.
B.A., Harvard College
Entered 1967; defended April 30, 1973
Advisor: David Potter
Intercellular Junctions in Epithelia
Professor of Neuroscience, The Rockefeller University

David Van Essen, Ph.D.
B.S., California Institute of Technology
Entered 1967; defended October 22, 1971
Advisor: John Nicholls
Effects of an Electronic Pump on Signaling by Leech Sensory Neurons
Professor of Anatomy and Neurobiology, Washington University

[Photo caption] David Van Essen, Eric Frank, and Albert Hudspeth at the 50th Anniversary celebration for the creation of the Harvard Department of Neurobiology, October 7, 2016

Richard Mains, Ph.D.
Sc.B., M.S., Brown University
Entered 1968; defended April 24, 1973
Advisor: David Potter
Tissue Culture of Dissociated Primary Rat Sympathetic Neurons: Studies of Growth, Neurotransmitter Metabolism, and Maturation
Professor of Neuroscience, University of Connecticut Health Center

Peter MacLeish, Ph.D.
B.E.Sc., University of Western Ontario
Entered 1969; defended December 29, 1976
Advisor: David Potter
Synapse Formation in Cultures of Dissociated Rat Sympathetic Neurons Grown on Dissociated Rat Heart Cells
Professor and Director of the Neuroscience Institute, Morehouse School of Medicine

Peter Sargent, Ph.D.

Scientists Discover Master Regulator of Motor Neuron Firing 16 March 2009

16 March 2009

(PhysOrg.com) -- When the Human Genome [...]

[...] neuromuscular junction, where axons of motor neurons meet the muscle. At this junction, these tendril-like extensions synapse specifically onto clusters of receptors, allowing the brain to transmit messages that signal the muscle to either contract or relax. The deterioration of the neuromuscular junction is at the center of Lou Gehrig’s disease. Since the 1980s, the master architect of the neuromuscular junction was thought to be a specific form of a protein known as agrin. Designated Z+ agrin, this form of the protein is found only in neurons and has an extra piece of RNA spliced into the transcript. To many, a key question that remained was which splicing factor is responsible for slipping in this extra piece, the Z+ exon.

In their work, Darnell and Ruggiu not only unmask the splicing factor but also reveal that this factor, not Z+ agrin, is the master regulator that orchestrates the formation of the neuromuscular junction. Past experiments had led scientists to conclude that Z+ agrin might be necessary and sufficient for the neuromuscular junction to form properly, but Darnell and Ruggiu show that while Z+ agrin is necessary, other proteins are also needed.

[Figure caption] All wired up. At the neuromuscular junction, axons of motor neurons (green) synapse onto receptor proteins (red) that form clusters on various muscles (blue) throughout the body. Research now shows that an RNA regulatory protein called Nova is not only the master architect behind this wiring but the gatekeeper behind the function of the motor neuron itself.

Columbia University Medical Center HEAL and HEALTH

ANNUAL REPORT 2004–2005
Columbia University Medical Center

Heal and health. Those interdependent concepts, so closely linked that they are nearly the same word, lie at the heart of the mission of Columbia University’s College of Physicians & Surgeons. One of the earliest facts a young doctor-to-be learns during medical school is that the body is organized into systems (cardiovascular, respiratory, nervous, musculoskeletal, and so on) that work in an integrated way to keep that body healthy and functioning. When the integrated systems of the human body work properly together, we have health. When these systems falter, we must heal.

This year’s annual report focuses on Columbia’s achievements during 2004 and 2005 through the prism of the body’s integrated systems. At the same time, we examine Columbia’s essential role to promote healing and advance health in the “system” of our local community and the wider world.

Contents
Dean’s Letter
Human System
Nervous System
Immune System
Respiratory System
Cardiovascular System
Systems of Education and Care
Dedication
College of Physicians & Surgeons
Development
Generous Donors
In Memoriam
Financial Highlights
Trustees Committee of the Health Sciences
Advisory Council
Administration
Executive Committee of the Faculty Council
Department Chairs
Interdepartmental Centers
Departmental Centers
Affiliated Hospitals

From the Dean

The role of a great medical center is to promote health and healing in the broadest context: from the development of new [...] strategies to prevent cancer from ever occurring. Few institutions have the financial and [...]

Gerald D. [...]

WCBR Program 01

Welcome to the Thirty-Fourth Annual Winter Conference on Brain Research

The Winter Conference on Brain Research (WCBR) was founded in 1968 to promote free exchange of information and ideas within neuroscience. It was the intent of the founders that both formal and informal interactions would occur between clinical and laboratory-based neuroscientists. During the past thirty years neuroscience has grown and expanded to include many new fields and methodologies. This diversity is also reflected by WCBR participants and in our program. A primary goal of the WCBR is to enable participants to learn about the current status of areas of neuroscience other than their own. Another objective is to provide a vehicle for scientists with common interests to discuss current issues in an informal setting. On the other hand, WCBR is not designed for presentations limited to communicating the latest data to a small group of specialists; this is best done at national society meetings.

The program includes panels (reviews for an audience not necessarily familiar with the area presented), workshops (informal discussions of current issues and data), and a number of posters. The annual conference lecture will be presented at breakfast on Sunday, January 21. Our guest speaker will be Dr. Gerald Fischbach, Director of the National Institute for Neurological Disorders and Stroke at the National Institutes of Health.

On Tuesday, January 23, a town meeting will be held for the Steamboat Springs community. Here Dr. Charles O'Brien and WCBR participants will discuss the neurobiological basis of mental illness. Also, participants in the WCBR outreach program will present sessions at local schools throughout the week to pique students' interest in science.

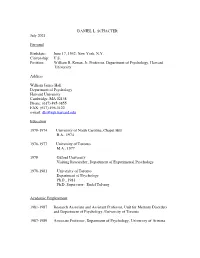

DANIEL L. SCHACTER July 2021 Personal

Birthdate: June 17, 1952; New York, N.Y.
Citizenship: U.S.
Position: William R. Kenan, Jr. Professor, Department of Psychology, Harvard University
Address: William James Hall, Department of Psychology, Harvard University, Cambridge, MA 02138
Phone: (617) 495-3855
FAX: (617) 496-3122
e-mail: [email protected]

Education
1970-1974 University of North Carolina, Chapel Hill, B.A., 1974
1976-1977 University of Toronto, M.A., 1977
1978 Oxford University, Visiting Researcher, Department of Experimental Psychology
1978-1981 University of Toronto, Department of Psychology, Ph.D., 1981 (Ph.D. Supervisor: Endel Tulving)

Academic Employment
1981-1987 Research Associate and Assistant Professor, Unit for Memory Disorders and Department of Psychology, University of Toronto
1987-1989 Associate Professor, Department of Psychology, University of Arizona
1989-1991 Professor, Department of Psychology and Cognitive Science Program, University of Arizona
1991-1995 Professor, Department of Psychology, Harvard University
1995-2005 Chair, Department of Psychology, Harvard University
1999 Visiting Professor, Institute for Cognitive Neuroscience, University College London
2002- William R. Kenan, Jr. Professor of Psychology, Harvard University
2009 (spring) Acting Chair, Department of Psychology, Harvard University

Selected Awards/Honors
Arthur Benton Award, International Neuropsychological Society, 1989
Distinguished Scientific Award for Early Career Contribution to Psychology in Human Learning and Cognition, American Psychological

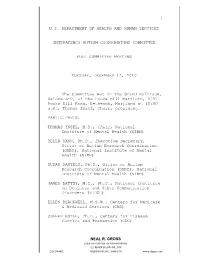

Transcript of the December 14, 2010 IACC Meeting

U.S. DEPARTMENT OF HEALTH AND HUMAN SERVICES
INTERAGENCY AUTISM COORDINATING COMMITTEE
FULL COMMITTEE MEETING
TUESDAY, DECEMBER 14, 2010

The Committee met in The Grand Ballroom, Salons A-D, of the Pooks Hill Marriott, 5151 Pooks Hill Road, Bethesda, Maryland at 10:00 a.m., Thomas Insel, Chair, presiding.

NEAL R. GROSS, COURT REPORTERS AND TRANSCRIBERS, 1323 RHODE ISLAND AVE., N.W., WASHINGTON, D.C. 20005-3701, (202) 234-4433, www.nealrgross.com

PARTICIPANTS:

THOMAS INSEL, M.D., Chair, National Institute of Mental Health (NIMH)
DELLA HANN, Ph.D., Executive Secretary, Office of Autism Research Coordination (OARC), National Institute of Mental Health (NIMH)
SUSAN DANIELS, Ph.D., Office of Autism Research Coordination (OARC), National Institute of Mental Health (NIMH)
JAMES BATTEY, M.D., Ph.D., National Institute on Deafness and Other Communication Disorders (NIDCD)
ELLEN BLACKWELL, M.S.W., Centers for Medicare & Medicaid Services (CMS)
COLEEN BOYLE, Ph.D., Centers for Disease Control and Prevention (CDC)
JOSEPHINE BRIGGS, M.D., National Center for Complementary and Alternative Medicine (NCCAM) (representing Francis Collins, M.D., Ph.D.)
HENRY CLAYPOOL, U.S. Department of Health and Human Services (DHHS), Office on Disability (attended by phone)
JUDITH COOPER, Ph.D., National Institute on Deafness and other Communication Disorders (NIDCD) (representing James Battey, M.D., Ph.D.)
GERALDINE DAWSON, Ph.D., Autism Speaks
GERALD FISCHBACH, M.D., Simons Foundation
LEE GROSSMAN, Autism Society (attended by phone)
ALAN GUTTMACHER, M.D., Eunice Kennedy Shriver National Institute of Child Health and Human Development (NICHD)
GAIL HOULE, Ph.D., U.S. Department of Education
WALTER KOROSHETZ, M.D., National Institute of Neurological Disorders and Stroke (NINDS)
CINDY LAWLER, Ph.D., National Institute of Environmental Health Sciences (NIEHS) (representing Linda Birnbaum, Ph.D.)
SHARON LEWIS, Administration for Children and Families (ACF)
CHRISTINE MCKEE, J.D.

Benefactors of Columbia University Feted in Special Three-Part Event

Columbia University RECORD, June 11, 2004, page 12

Top Neuroscience Researchers Convene at ‘Brain and Mind’ Symposium

BY SUSAN CONOVA

Researchers from around the world gathered to discuss the latest discoveries on the brain and the mind, spanning the entire spectrum of neuroscience, from the atomic level up to higher-order systems that construct consciousness, on May 13-14 in Lerner Hall as part of the Columbia 250 symposium series.

Organized by Thomas Jessell, professor of biochemistry and molecular biophysics, and Joanna Rubinstein, senior associate dean for institutional and global initiatives at Columbia University Medical Center, the day-and-a-half symposium was divided into three half-day sessions: “Brain Structure,” “Brain Function and Disease” and “Biology of Mind.”

In the first session, “Brain Structure,” Gerald Fischbach, executive [...]

Rockefeller University’s Roderick MacKinnon, the 2003 Nobel Prize winner in chemistry, then discussed his own basic research, which has revealed the structure and function of the neurons’ ion channels, or as MacKinnon called them, “the brain’s electrical impulse generators.”

The impulses must be sent through brain’s highly organized circuits to control movement, cognition, and consciousness. Although many circuits are not understood, Jessell said, he outlined his progress on circuits that control movement, showing how the basic circuits are hard-wired by one’s genes, but experiences, for instance, of learning to walk or play the violin refine the circuits.

2000 Nobel Prize winner Eric Kandel, University Professor of Physiology and Psychiatry, showed the audience just exactly how those circuits are refined dur[...]

[...] showed how new techniques in brain imaging are changing the way scientists think about brain [...]

[...] frontal cortex, which is not normally believed to be involved in addiction,” she said.

[...] decisions are helping researchers discover which neural circuits are [...]

[Photo caption] Richard Axel delivered a talk at the “Brain and Mind” conference at Lerner Hall. Photos by Eileen Barroso.

Funding Committee Meeting Minutes March 4, 2010

Empire State Stem Cell Board
Funding Committee Meeting Minutes
March 4, 2010

The Empire State Stem Cell Board Funding Committee held a meeting on Thursday, March 4, 2010, at the Department of Health offices, 90 Church Street, New York, New York. Commissioner Richard F. Daines, M.D., presided as Chairperson.

Funding Committee Members Present:
Dr. Richard F. Daines, Chairperson
Dr. David Hohn, Vice Chair*
Mr. Kenneth Adams
Dr. Bruce Holm*
Dr. Bradford Berk*
Dr. Allen Spiegel
Dr. Richard Dutton
Dr. Michael Stocker
Dr. Gerald Fischbach
Ms. Madelyn Wils
*via videoconference

Funding Committee Members Absent:
Mr. Robin Elliott
Dr. Hilda Hutcherson

Department of Health Staff Present:
Mr. Martin Algaze
Dr. Matthew Kohn
Ms. Bonnie Brautigam
Ms. Beth Roxland
Mr. Thomas Conway
Ms. Lakia Rucker
Ms. Judy Doesschate
Dr. Lawrence Sturman

Observers Present:
Dr. Harold Brem
Dr. Onyedika Chukwuemeka
Ms. Elizabeth Misa

Motion to Convene in Executive Session

Dr. Daines advised members and the public that the Committee needed to go into executive session to discuss the evaluations of the applications submitted in response to the Request for Applications (RFA) for Investigator-Initiated Research Projects (IIRP) and Innovative, Developmental or Exploratory Activities (IDEA) in Stem Cell Research. Dr. Dutton made a motion to go into executive session. Mr. Adams seconded the motion. The motion passed. Dr. Daines then asked members of the public and non-essential staff to leave the room and advised them that the Committee was expected to reconvene in public session at approximately 11:15 a.m.

Executive Session

Dr. Daines noted that a few members of the Committee would need to be recused for the discussion of specific applications, but that Dr.

Synaptic Release of Excitatory Amino Acid Neurotransmitter Mediates Anoxic Neuronal Death

0270-6474/84/0407-1884$02.00/0
The Journal of Neuroscience, Vol. 4, No. 7, pp. 1884-1891, July 1984
Copyright © Society for Neuroscience. Printed in U.S.A.

SYNAPTIC RELEASE OF EXCITATORY AMINO ACID NEUROTRANSMITTER MEDIATES ANOXIC NEURONAL DEATH

STEVEN ROTHMAN

Departments of Pediatrics, Neurology, and Anatomy and Neurobiology, Washington University School of Medicine, St. Louis, Missouri 63110

Received December 27, 1983; Revised February 16, 1984; Accepted February 27, 1984

Abstract

The pathophysiology of hypoxic neuronal death, which is difficult to study in vivo, was further defined in vitro by placing dispersed cultures of rat hippocampal neurons into an anoxic atmosphere. Previous experiments had demonstrated that the addition of high concentrations of magnesium, which blocks transmitter release, protected anoxic neurons. These more recent experiments have shown that γ-D-glutamylglycine (DGG), a postsynaptic blocker of excitatory amino acids, was highly effective in preventing anoxic neuronal death. DGG also completely protected the cultured neurons from the toxicity of exogenous glutamate (GLU) and aspartate (ASP). In parallel physiology experiments, DGG blocked the depolarization produced by GLU and ASP, and dramatically reduced EPSPs in synaptically coupled pairs of neurons. These results provide convincing evidence that the synaptic release of excitatory transmitter, most likely GLU or ASP, mediates the death of anoxic neurons. This result has far-reaching implications regarding the interpretation of the existing literature on cerebral hypoxia. Furthermore, it suggests new strategies that may be effective in preventing the devastating insults produced by cerebral hypoxia and ischemia in man.

Cerebral hypoxia, either as an isolated event, or as a concomitant of occlusive cerebrovascular disease, peri- [...]

[...] duced by hypoxia, unaccompanied by other complicating variables.