The Part of Cognitive Science That Is Philosophy



Recommended publications


Daniel Dennett's Science of the Soul

Daniel Dennett’s Science of the Soul - The New Yorker. PROFILES, MARCH 27, 2017 ISSUE. DANIEL DENNETT’S SCIENCE OF THE SOUL. A philosopher’s lifelong quest to understand the making of the mind. By Joshua Rothman. Daniel Dennett’s naturalistic account of consciousness draws some people in and puts others off. “There ain’t no magic here,” he says. “Just stage magic.” PHOTOGRAPH BY IRINA ROZOVSKY FOR THE NEW YORKER. Four billion years ago, Earth was a lifeless place. Nothing struggled, thought, or wanted. Slowly, that changed. Seawater leached chemicals from rocks; near thermal vents, those chemicals jostled and combined. Some hit upon the trick of making copies of themselves that, in turn, made more copies. The replicating chains were caught in oily bubbles, which protected them and made replication easier; eventually, they began to venture out into the open sea. A new level of order had been achieved on Earth. Life had begun. The tree of life grew, its branches stretching toward complexity. Organisms developed systems, subsystems, and sub-subsystems, layered in ever-deepening regression. They used these systems to anticipate their future and to change it. When they looked within, some found that they had selves: constellations of memories, ideas, and purposes that emerged from the systems inside. They experienced being alive and had thoughts about that experience. They developed language and used it to know themselves; they began to ask how they had been made. This, to a first approximation, is the secular story of our creation.

A Naturalistic Perspective on Intentionality. Interview with Daniel Dennett

Mind & Society, 6, 2002, Vol. 3, pp. 1-12. 2002, Rosenberg & Sellier, Fondazione Rosselli. A naturalistic perspective on intentionality. Interview with Daniel Dennett by Marco Mirolli. Marco Mirolli: You describe yourself as a philosopher of mind and you reject the exhortations to get more involved in the abstract problems of ontology and of philosophy of science in general, even if the problems of the philosophy of mind and those of philosophy of science are deeply mutually dependent. I think this is an important issue because, while you say that your philosophy presupposes only the standard scientific ontology and epistemology, it seems to me that what you really presuppose is rather a Quinean view of ontology and epistemology, which is not so standard, even if it may well be the one we should accept. Daniel Dennett: Well, maybe so, let's see. Certainly I have seen almost no reason to adopt any other ontology than Quine's; and when I look at the work in the philosophy of science and more particularly at the work in science, I do not find any ground yet for abandoning a Quinean view of ontology for something fancier. It could happen, but I haven't seen any reason for doing this; so if you want to say that I am vulnerable on this, that's really true. I have stated that I don't see any of the complexities of science or philosophy of mind raising ontological issues that are more sophisticated than those a Quinean ontology could handle; but I may be wrong.

Psychological Arguments for Free Will DISSERTATION Presented In

Psychological Arguments for Free Will DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Andrew Kissel Graduate Program in Philosophy The Ohio State University 2017 Dissertation Committee: Richard Samuels, Advisor Declan Smithies Abraham Roth Copyrighted by Andrew Kissel 2017 Abstract It is a widespread platitude among many philosophers that, regardless of whether we actually have free will, it certainly appears to us that we are free. Among libertarian philosophers, this platitude is sometimes deployed in the context of psychological arguments for free will. These arguments are united under the idea that widespread claims of the form, “It appears to me that I am free,” on some understanding of appears, justify thinking that we are probably free in the libertarian sense. According to these kinds of arguments, the existence of free will is supposed to, in some sense, “fall out” of widely accessible psychological states. While there is a long history of thinking that widespread psychological states support libertarianism, the arguments are often lurking in the background rather than presented at face value. This dissertation consists of three free-standing papers, each of which is motivated by taking seriously psychological arguments for free will. The dissertation opens with an introduction that presents a framework for mapping extant psychological arguments for free will. In the first paper, I argue that psychological arguments relying on widespread belief in free will, combined with doxastic conservative principles, are likely to fail. In the second paper, I argue that psychological arguments involving an inference to the best explanation of widespread appearances of freedom put pressure on non-libertarians to provide an adequate alternative explanation.

Free Will and Determinism Debate on the Philosophy Forum

Free Will and Determinism debate on the Philosophy Forum. 6/2004 All posts by John Donovan unless noted otherwise. The following link is an interesting and enjoyable interview with Daniel Dennett discussing the ideas in his latest book where he describes how "free will" in humans and physical "determinism" are entirely compatible notions if one goes beyond the traditional philosophical arguments and examines these issues from an evolutionary viewpoint. http://www.reason.com/0305/fe.rb.pulling.shtml A short excerpt: Reason: A response might be that you’re just positing a more complicated form of determinism. A bird may be more "determined" than we are, but we nevertheless are determined. Dennett: So what? Determinism is not a problem. What you want is freedom, and freedom and determinism are entirely compatible. In fact, we have more freedom if determinism is true than if it isn’t. Reason: Why? Dennett: Because if determinism is true, then there’s less randomness. There’s less unpredictability. To have freedom, you need the capacity to make reliable judgments about what’s going to happen next, so you can base your action on it. Imagine that you’ve got to cross a field and there’s lightning about. If it’s deterministic, then there’s some hope of knowing when the lightning’s going to strike. You can get information in advance, and then you can time your run. That’s much better than having to rely on a completely random process. If it’s random, you’re at the mercy of it. A more telling example is when people worry about genetic determinism, which they completely don’t understand.

Synthetic Philosophy

UvA-DARE (Digital Academic Repository). Synthetic Philosophy. Schliesser, E. DOI 10.1007/s10539-019-9673-3. Publication date: 2019. Document version: Final published version. Published in: Biology and Philosophy. License: CC BY. Citation for published version (APA): Schliesser, E. (2019). Synthetic Philosophy. Biology and Philosophy, 34(2), [19]. https://doi.org/10.1007/s10539-019-9673-3 REVIEW ESSAY. Synthetic philosophy. Eric Schliesser. Received: 4 November 2018 / Accepted: 11 February 2019. © The Author(s) 2019. Abstract: In this essay, I discuss Dennett’s From Bacteria to Bach and Back: The Evolution of Minds (hereafter From Bacteria) and Godfrey Smith’s Other Minds: The Octopus and The Evolution of Intelligent Life (hereafter Other Minds) from a methodological perspective.

DANIEL DENNETT and the SCIENCE of RELIGION Richard F

DANIEL DENNETT AND THE SCIENCE OF RELIGION Richard F. Green Department of Mathematics and Statistics University of Minnesota-Duluth Duluth, MN 55812 ABSTRACT Daniel Dennett (2006) recently published a book, “Breaking the Spell: Religion as a Natural Phenomenon,” which advocates the scientific study of religion. Dennett is a philosopher with wide-ranging interests including evolution and cognitive psychology. This talk is basically a review of Dennett’s religion book, but it will include comments about other work about religion, some mentioned by Dennett and some not. I will begin by describing my personal interest in religion and philosophy, including some recent reading. Then I will comment about the study of religion, including the scientific study of religion. Then I will describe some of Dennett’s ideas about religion. I will conclude by trying to outline the ideas that should be considered in order to understand religion. This talk is mostly about ideas, particularly religious beliefs, but not so much churches, creeds or religious practices. These other aspects of religion are important, and are the subject of study by a number of disciplines. I will mention some of the ways that people have studied religion, mainly to provide context for the particular ideas that I want to emphasize. I share Dennett’s reticence about defining religion. I will offer several definitions of religion, but religion is probably better defined by example than by attempting to specify its essence. A particular example of a religion is Christianity, even more particularly, Roman Catholicism. MOTIVATION My interest in philosophy and religion I. Beginnings I read a few books about religion and philosophy when I was in high school and imagined that college would be full of people arguing about religion and philosophy.

New Atheism and the Scientistic Turn in the Atheism Movement MASSIMO PIGLIUCCI

Midwest Studies in Philosophy, XXXVII (2013). New Atheism and the Scientistic Turn in the Atheism Movement. MASSIMO PIGLIUCCI. I. The so-called “New Atheism” is a relatively well-defined, very recent, still unfolding cultural phenomenon with import for public understanding of both science and philosophy. Arguably, the opening salvo of the New Atheists was The End of Faith by Sam Harris, published in 2004, followed in rapid succession by a number of other titles penned by Harris himself, Richard Dawkins, Daniel Dennett, Victor Stenger, and Christopher Hitchens.[1] After this initial burst, which was triggered (according to Harris himself) by the terrorist attacks on September 11, 2001, a number of other authors have been associated with the New Atheism, even though their contributions sometimes were in the form of newspapers and magazine articles or blog posts, perhaps most prominent among them evolutionary biologists and bloggers Jerry Coyne and P.Z. Myers. Still others have published and continue to publish books on atheism, some of which have had reasonable success, probably because of the interest generated by the first wave. This second wave, however, often includes authors that explicitly [1] Sam Harris, The End of Faith: Religion, Terror, and the Future of Reason (New York: W.W. Norton, 2004); Sam Harris, Letter to a Christian Nation (New York: Vintage, 2006); Richard Dawkins, The God Delusion (Boston: Houghton Mifflin Harcourt, 2006); Daniel C. Dennett, Breaking the Spell: Religion as a Natural Phenomenon (New York: Viking Press, 2006); Victor J. Stenger, God: The Failed Hypothesis: How Science Shows That God Does Not Exist (Amherst, NY: Prometheus, 2007); Christopher Hitchens, God Is Not Great: How Religion Poisons Everything (New York: Twelve Books, 2007).

A Pragmatic and Empirical Approach to Free Will Andrea Lavazza(Α)

RIVISTA INTERNAZIONALE DI FILOSOFIA E PSICOLOGIA ISSN 2039-4667; E-ISSN 2239-2629 DOI: 10.4453/rifp.2017.0020 Vol. 8 (2017), n. 3, pp. 247-258 STUDIES A Pragmatic and Empirical Approach to Free Will Andrea Lavazza(α) Received: 10 February 2017; accepted: 24 August 2017. Abstract: The long dispute between incompatibilists (namely, the advocates of the contemporary version of the illusory nature of freedom) and compatibilists is further exemplified in the discussion between Sam Harris and Daniel Dennett. In this article, I try to add to the discussion by outlining a concept of free will linked to five operating conditions and put forward a proposal for its operationalization and quantification. The idea is to empirically and pragmatically define free will as needed for moral blame and legal liability, while separating this from the debate on global determinism, local determinism, automatisms and priming phenomena on a psychological level. This is made possible by weakening the claims of determinisms and psychological automatisms, based on the latest research, and by giving a well-outlined definition of free will as I want to defend it. KEYWORDS: Compatibilism; Daniel Dennett; Free Will Quantification; Global Determinism; Local Determinism. Summary (translated from the Italian): A pragmatic and empirical approach to free will – The long dispute between incompatibilists (that is, the advocates of the contemporary version of the illusory nature of free will) and compatibilists is exemplified in the debate between Sam Harris and Daniel Dennett. In this article I try to contribute to the discussion by outlining a concept of free will linked to five operating conditions and by proposing its operationalization and quantification.

The Organism – Reality Or Fiction?

The organism – reality or fiction? CHARLES T WOLFE SCOUTS THE ANSWERS. What is an “organism”? A state of matter, or a particular type of living being chosen as an experimental object, like the fruit fly or the roundworm c. elegans, which are “model organisms”? Organisms are real, in a trivial sense, since flies and Tasmanian tigers and Portuguese men-o-war are (or were, in the case of the Tasmanian tiger) as real as tables and chairs and planets. But at the same time, they are meaningful constructs, as when we describe Hegel or Whitehead as philosophers of organism in the sense that they insist on the irreducible properties of wholes – sometimes, living wholes in particular. In addition, the idea of organism is sometimes appealed to in a polemical way, as when biologists or philosophers angrily oppose a more “holistic” sense of organism to a seemingly cold-hearted, analytic and dissective attitude associated with “mechanism” and “reductionism”. We murder to dissect, or as the famous physicist Niels Bohr warned, we may kill the organism with our too-detailed measurements. Historically, the word “organism” emerged in the late seventeenth and early eighteenth centuries, in particular, in the debate between the philosopher and polymath Gottfried Wilhelm Leibniz and the chemist and physician Georg-Ernest Stahl, the author of a 1708 essay On the difference between mechanism and organism. Both Leibniz and Stahl agree that organisms are not the same as mere mechanisms, but they differ on how to account for this difference. For Leibniz, it is more of a difference in complexity (for him, organisms are machines which are machines down to their smallest parts), whereas for Stahl, the organism is a type of whole governed by the soul (at all levels of our bodily functioning, from the way I blink if an object

The Science Studio with Daniel Dennett

The Science Studio With Daniel Dennett ROGER BINGHAM: My guest today in the Science Studio is Dan Dennett, who is the professor of philosophy and co-director for the Center For Cognitive Studies at Tufts University, co-founder and co-director of the Curricula Software Studio at Tufts, and he helped design museum exhibits on computers for the Smithsonian, the Museum of Science in Boston, and the Computer Museum in Boston. But more importantly he’s known as the author of many books, including most recently Breaking the Spell: Religion as a Natural Phenomenon. So, Dan Dennett, let me just take you back to an interview, because this is Beyond Belief Two: Enlightenment 2.0. You were supposed to have been at the first meeting last year. There’s an interview that you did some time ago with our old friend, Susan Blackmore, where she asked you “I assume you don’t believe in God; do you think anything of the person survives physical death?” You got very close to physical death about a year ago. DANIEL DENNETT: I did, yeah. BINGHAM: Would you like to recount that wonderful experience? DENNETT: I had had a triple bypass in ‘99, but I was fine; I was in good shape. But then I had a so-called aortic dissection. My aorta, the main pipe from the heart up to the rest of the body, sort of blew out. It was held together by the scar tissue from the old operation; that’s what saved my life, first. And so I had that all replaced - I now have a Dacron aorta and a carbon fiber aortic valve, and I’m fine, as you can see.

Where's the Action? Epiphenomenalism and the Problem of Free Will Shaun Gallagher Department of Philosophy University of Central

Gallagher, S. 2006. Where's the action? Epiphenomenalism and the problem of free will. In W. Banks, S. Pockett, and S. Gallagher. Does Consciousness Cause Behavior? An Investigation of the Nature of Volition (109-124). Cambridge, MA: MIT Press. Where's the action? Epiphenomenalism and the problem of free will Shaun Gallagher Department of Philosophy University of Central Florida Some philosophers argue that Descartes was wrong when he characterized animals as purely physical automata – robots devoid of consciousness. It seems to them obvious that animals (tigers, lions, and bears, as well as chimps, dogs, and dolphins, and so forth) are conscious. There are other philosophers who argue that it is not beyond the realm of possibilities that robots and other artificial agents may someday be conscious – and it is certainly practical to take the intentional stance toward them (the robots as well as the philosophers) even now. I'm not sure that there are philosophers who would deny consciousness to animals but affirm the possibility of consciousness in robots. In any case, and in whatever way these various philosophers define consciousness, the majority of them do attribute consciousness to humans. Amongst this group, however, there are philosophers and scientists who want to reaffirm the idea, explicated by Shadworth Holloway Hodgson in 1870, that in regard to action the presence of consciousness does not matter since it plays no causal role. Hodgson's brain generated the following thought: neural events form an autonomous causal chain that is independent of any accompanying conscious mental states. Consciousness is epiphenomenal, incapable of having any effect on the nervous system.

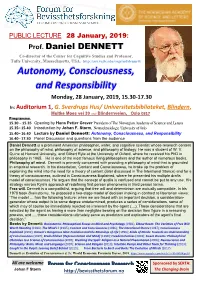

Autonomy, Consciousness, and Responsibility

PUBLIC LECTURE 28 January, 2019: Prof. Daniel DENNETT, Co-director of the Center for Cognitive Studies and Professor, Tufts University, Massachusetts, USA. http://ase.tufts.edu/cogstud/dennett/ Autonomy, Consciousness, and Responsibility. Monday, 28 January, 2019, 15.30–17.30. In: Auditorium 1, G. Sverdrups Hus / Universitetsbiblioteket, Blindern, Moltke Moes vei 39 ved / Blindernveien, Oslo 0317. Programme: 15.30–15.35 Opening by Hans Petter Graver, President of The Norwegian Academy of Science and Letters; 15.35–15.40 Introduction by Johan F. Storm, Neurophysiology, University of Oslo; 15.40–16.40 Lecture by Daniel Dennett: Autonomy, Consciousness, and Responsibility; 16.40–17.30 Panel Discussion and questions from the audience. Daniel Dennett is a prominent American philosopher, writer, and cognitive scientist whose research centers on the philosophy of mind, philosophy of science, and philosophy of biology. He was a student of W. V. Quine at Harvard University, and Gilbert Ryle at the University of Oxford, where he received his PhD in philosophy in 1965. He is one of the most famous living philosophers and the author of numerous books. Philosophy of mind. Dennett is primarily concerned with providing a philosophy of mind that is grounded in empirical research. In his dissertation, Content and Consciousness, he broke up the problem of explaining the mind into the need for a theory of content (later discussed in The Intentional Stance) and for a theory of consciousness, outlined in Consciousness Explained, where he presented his multiple drafts model of consciousness. He argues that the concept of qualia is confused and cannot be put to any use.