Green Information Technology: A Sustainable Approach

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Warkitting: the Drive-By Subversion of Wireless Home Routers

Warkitting: the Drive-by Subversion of Wireless Home Routers. Alex Tsow and Markus Jakobsson (School of Informatics, Indiana University, Bloomington, IN 47408, {atsow, markus}@indiana.edu); Liu Yang and Susanne Wetzel (Department of Computer Science, Stevens Institute of Technology, Hoboken, NJ 07030, {lyang, swetzel}@cs.stevens.edu). Abstract: In this paper we introduce the notion of warkitting as the drive-by subversion of wireless home routers through unauthorized access by mobile WiFi clients. We describe how such attacks can be performed, evaluate the vulnerability of currently deployed wireless routers based on experimental data, and examine the impact of these attacks on Internet fraud. Our analysis shows that it is possible in practice to carry out warkitting attacks with low cost equipment widely available today and that the volume of credential theft possible through warkitting exceeds current estimates of credential theft due to phishing. We discuss how to detect a warkitting attack in progress and show how to analyze warkitted routers for evidence linking them to the attackers. Keywords: Warkit, WAPkit, WAPjack, wireless home routers, phishing, pharming, Internet fraud, intrusion detection. 1 Introduction: The convenience of wireless networking has led to widespread adoption of wireless access points in home and small business settings. While some wireless access points are administered by security conscious users, several recent studies show that a large number of wireless access points run with default settings. The default settings from the most established vendors are optimized for ease of setup instead of secure access. Most commonly, the default settings disable wireless encryption, permit wireless access to router administration, and guard administration with published passwords.

Course Catalog 2021, spcgroup.com.mx: We Offer Customized Solutions for Industry That Seeks to Excel

COURSE CATALOG 2021, spcgroup.com.mx. We offer customized solutions for industry that seeks to excel. We are experts in consulting and training for companies, mainly in the manufacturing and service sectors. Our experience is focused on areas such as Quality Management Systems, Continuous Improvement tools and methodologies, Supply Chain, and Human Potential Development. The demands of today's society have created an urgent need to make human work more efficient; consequently, greater preparation is required in quality, productivity and, above all, business management. Thousands of professionals in Mexico, the U.S.A., the Caribbean, and South America vouch for our educational quality. spcgroup.com.mx, 811 477 7475, 811 477 7476 and 442 258 1587, [email protected] INDEX. QUALITY SYSTEMS: ISO 9001:2015 Awareness of Requirements (8 hours); ISO 9001:2015 Interpretation of Requirements (16 hours); ISO 9001:2015 Risk Management based on ISO 31000:2018 (16 hours); ISO 9001:2015 Internal Auditor based on ISO 19011:2018 (16 hours); IATF 16949:2016 Awareness of Requirements (8 hours)

Special Edition TQM&E No.3 Vol.33 2005

INTERNATIONAL JOURNAL TOTAL QUALITY MANAGEMENT & EXCELLENCE (MENADžMENT TOTALNIM KVALITETOM & IZVRSNOST). Special edition: papers second reviewed and presented at the Third International Working Conference "Total Quality Management - Advanced and Intelligent Approaches", held from 30th May to 1st June, 2005, at Belgrade, Serbia. UDK 658.5, YU ISSN 1452-0680, No. 3, Vol. 33, 2005. YUSQ - QUALITY AND STANDARDIZATION ASSOCIATION OF SERBIA & MONTENEGRO. ASSOCIATION SERBIA & MONTENEGRO FOR QUALITY AND STANDARDS, Kneza Miloša 9, 11000 Beograd, Serbia, Phone/fax: ++ 381 11 323 55 15. INTERNATIONAL JOURNAL "TOTAL QUALITY MANAGEMENT & EXCELLENCE", Vol. XXXIII, No. 3, 2005. FOUNDER AND PUBLISHER: Association Serbia and Montenegro for Quality and Standards (YUSQ), Belgrade. EDITOR IN CHIEF: Prof. Dr Vidosav D. Majstorović, mech. eng., Mechanical Engineering Faculty, University of Belgrade, Serbia. INTERNATIONAL EDITORIAL BOARD: Prof. dr Guenter ARNDT, University of Wollongong, Wollongong, Australia; Prof. dr Gunnar SOHLENIUS, Royal Institute of Technology, Stockholm, Sweden; Prof. dr Daniel BRISAUND, University of Grenoble, Grenoble, France; Prof. dr Dragutin STANIVUKOVIĆ, FTN, Novi Sad, S&M; Prof. dr Barrie DALE, UMIST, Manchester, England; Prof. dr Herbert OSANNA, Technical University, Wien, Austria; Michel DEBENHAM, Institute of QA, London, England; Prof. dr Tilo PFIEFER, RWTH Aachen, Aachen, Germany; Sofija DJURDJEVIC, YUQS, Belgrade, S&M; Prof. dr Hendrik VanBRUSSEL, Katolike University Leuven, Heverlez, Belgium

Quality of Sustainability Reporting Based on Global Reporting Initiative (GRI) Guidelines: The

Quality of Sustainability Reporting Based on Global Reporting Initiative (GRI) Guidelines: The Impact of Company Characteristics, by Wanting He. A Thesis submitted to the Faculty of Graduate Studies of The University of Manitoba in partial fulfillment of the requirement of the degree of MASTER OF SCIENCE, Department of Supply Chain Management, I.H. Asper School of Business, University of Manitoba, Winnipeg, Manitoba, Canada. Copyright © 2018 by Wanting He. Abstract: With the rise in stakeholders' demand for more transparency and information disclosure, companies are increasingly publishing sustainability reports to improve their public image. The purpose of this thesis is to explore characteristics of companies that have high quality sustainability reports. Based on the extant literature, location, organization size, third-party verification, and ISO certification are potential factors that influence a company's reporting performance. A statistical rating method, developed using Global Reporting Initiative (GRI) principles, is used to grade sustainability reports. GRI appears to be the most widely used and dominant sustainability reporting guideline. Hypotheses are tested using ANOVA and regression techniques. The results reveal that firm size and ISO certification influence the quality of sustainability reports. Key words: sustainability report; GRI; firm size; third-party verification; location; ISO. Acknowledgements: I would like to express my deepest and sincere gratitude to my awesome advisor Dr. Paul D. Larson, for his guidance and encouragement all the way during my master study. I would also like to thank my committee members, Dr. Sara Hajmohammad and Prof. Kristina Hunter, for their invaluable time and insightful comments to improve this thesis. Furthermore, I want to thank my family, for their support of any decision I made.

Hacking Roomba®

Hacking Roomba®, by Tod E. Kurt. Published by Wiley Publishing, Inc., 10475 Crosspoint Boulevard, Indianapolis, IN 46256, www.wiley.com. Copyright © 2007 by Wiley Publishing, Inc., Indianapolis, Indiana. Published simultaneously in Canada. ISBN-13: 978-0-470-07271-4, ISBN-10: 0-470-07271-7. Manufactured in the United States of America. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to the Legal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, (317) 572-3447, fax (317) 572-4355, or online at http://www.wiley.com/go/permissions. Limit of Liability/Disclaimer of Warranty: The publisher and the author make no representations or warranties with respect to the accuracy or completeness of the contents of this work and specifically disclaim all warranties, including without limitation warranties of fitness for a particular purpose. No warranty may be created or extended by sales or promotional materials. The advice and strategies contained herein may not be suitable for every situation. This work is sold with the understanding that the publisher is not engaged in rendering legal, accounting, or other professional services.

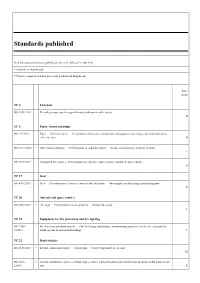

Standards Published

Standards published: New International Standards published between 01 July and 31 July 2013. In the Note column, a single asterisk (*) marks a standard available in English only; a double asterisk (**) marks a French version of a standard previously published in English only.

| Technical committee | Standard | Note | Title | Price group |
|---|---|---|---|---|
| TC 2 Fasteners | ISO 10511:2012 | | Prevailing torque type hexagon thin nuts (with non-metallic insert) | B |
| TC 6 Paper, board and pulps | ISO 217:2013 | | Paper — Untrimmed sizes — Designation and tolerances for primary and supplementary ranges, and indication of machine direction | B |
| TC 6 Paper, board and pulps | ISO 10775:2013 | * | Paper, board and pulps — Determination of cadmium content — Atomic absorption spectrometric method | C |
| TC 6 Paper, board and pulps | ISO 3037:2013 | | Corrugated fibreboard — Determination of edgewise crush resistance (unwaxed edge method) | D |
| TC 17 Steel | ISO 4967:2013 | | Steel — Determination of content of non-metallic inclusions — Micrographic method using standard diagrams | R |
| TC 20 Aircraft and space vehicles | ISO 6517:2013 | * | Air cargo — Certified lower deck containers — Design and testing | L |
| TC 21 Equipment for fire protection and fire fighting | ISO 7240-14:2013 | * | Fire detection and alarm systems — Part 14: Design, installation, commissioning and service of fire detection and fire alarm systems in and around buildings | T |
| TC 22 Road vehicles | ISO 6623:2013 | * | Internal combustion engines — Piston rings — Scraper rings made of cast iron | M |
| TC 22 Road vehicles | ISO 6626-2:2013 | * | Internal combustion engines — Piston rings — Part 2: Coil-spring-loaded oil control rings of narrow width made of cast iron | R |
| TC 22 Road vehicles | ISO 18669-1:2013 | * | Internal combustion engines — Piston pins — Part 1: General specifications | M |
| TC 22 Road vehicles | ISO 15031- | * | Road vehicles | |

Taxonomy of WRT54G(S) Hardware and Custom Firmware

Edith Cowan University, Research Online, ECU Publications Pre. 2011, 2005. Taxonomy of WRT54G(S) Hardware and Custom Firmware, by Marwan Al-Zarouni, Edith Cowan University. Citation: Al-Zarouni, M. (2005). Taxonomy of WRT54G(S) hardware and custom firmware. In Proceedings of 3rd Australian Information Security Management Conference (pp. 1-10). Edith Cowan University. This Conference Proceeding is posted at Research Online: https://ro.ecu.edu.au/ecuworks/2946. Marwan Al-Zarouni, School of Computer and Information Science, Edith Cowan University, E-mail: [email protected] Abstract: This paper discusses the different versions of hardware and firmware currently available for the Linksys WRT54G and WRT54GS router models. It covers the advantages, disadvantages, and compatibility issues of each one of them. The paper goes further to compare firmware added features and associated filesystems and then discusses firmware installation precautions and ways to recover from a failed install. Keywords: WRT54G, Embedded Linux, Wireless Routers, Custom Firmware, Wireless Networking, Firmware Hacking. BACKGROUND INFORMATION: The WRT54G is an 802.11g router that combines the functionality of three different network devices; it can serve as a wireless Access Point (AP), a four-port full-duplex 10/100 switch, and a router that ties it all together (ProductReview, 2005). The WRT54G firmware was based on embedded Linux, which is open source. This led to the creation of several sites and discussion forums that were dedicated to the router, which in turn led to the creation of several variants of its firmware.

ISO 14031:2021

INTERNATIONAL STANDARD ISO 14031, Third edition, 2021-03. Environmental management — Environmental performance evaluation — Guidelines (Management environnemental — Évaluation de la performance environnementale — Lignes directrices). iTeh STANDARD PREVIEW (standards.iteh.ai), https://standards.iteh.ai/catalog/standards/sist/0990790c-4bc0-4417-8081-16065197cf4d/iso-14031-2021. Reference number ISO 14031:2021(E). COPYRIGHT PROTECTED DOCUMENT © ISO 2021. All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO's member body in the country of the requester. ISO copyright office, CP 401 • Ch. de Blandonnet 8, CH-1214 Vernier, Geneva. Phone: +41 22 749 01 11. Email: [email protected] Website: www.iso.org. Published in Switzerland. Contents: Foreword

RevenueSuite from dMarc

www.beradio.com, May 2005. THE RADIO TECHNOLOGY LEADER. RF Engineering: Preparations for AM IBOC. Trends in Technology: Inside automation. RevenueSuite from dMarc: After you've closed the logs, new RevenueSuite starts selling. Tap the riches in your remnant inventory. Ideal for your Scott SS32 or Maestro. Automatically. Effortlessly. Call for more information. DIAGNOSTICS | DATASERVICES | SARBANES-OXLEY | REVENUESUITE. CHECK OUT OUR LATEST! The NEW AUDIOARTS D-75 DIGITAL RADIO CONSOLE. A CLEAN, CLEAR on-air design: straightforward layout, easy tabletop installation, and best of all completely modular. A TRUE plug-and-play radio board from the Wheatstone digital design team! AUDIOARTS ENGINEERING, sales@wheatstone.com / tel 252-638-7000 / www.audioarts.net. Copyright © 2005 by Wheatstone Corporation. We're Reshaping The Future Of Radio From A Solid Foundation Of Leadership. The newest force in radio was forged from a rich heritage of leadership that is decades strong. We're bringing a breath of fresh air and a re-energized spirit to an industry we helped to build. At Team Harris Radio, we've brought together the industry's largest and most comprehensive range of products, services and people dedicated to advancing radio. All working together in perfect harmony and focused on the success of your business. From our innovative products to our forward-looking services, management tools and expert support teams, we're dedicated to our mutual future of pioneering and growth. So whether your audience is around the corner or around the world, Harris Radio is on the air with the resources you need to succeed.

Index Images Download 2006 News Crack Serial Warez Full 12 Contact

index images download 2006 news crack serial warez full 12 contact about search spacer privacy 11 logo blog new 10 cgi-bin faq rss home img default 2005 products sitemap archives 1 09 links 01 08 06 2 07 login articles support 05 keygen article 04 03 help events archive 02 register en forum software downloads 3 security 13 category 4 content 14 main 15 press media templates services icons resources info profile 16 2004 18 docs contactus files features html 20 21 5 22 page 6 misc 19 partners 24 terms 2007 23 17 i 27 top 26 9 legal 30 banners xml 29 28 7 tools projects 25 0 user feed themes linux forums jobs business 8 video email books banner reviews view graphics research feedback pdf print ads modules 2003 company blank pub games copyright common site comments people aboutus product sports logos buttons english story image uploads 31 subscribe blogs atom gallery newsletter stats careers music pages publications technology calendar stories photos papers community data history arrow submit www s web library wiki header education go internet b in advertise spam a nav mail users Images members topics disclaimer store clear feeds c awards 2002 Default general pics dir signup solutions map News public doc de weblog index2 shop contacts fr homepage travel button pixel list viewtopic documents overview tips adclick contact_us movies wp-content catalog us p staff hardware wireless global screenshots apps online version directory mobile other advertising tech welcome admin t policy faqs link 2001 training releases space member static join health

Environmental Benchmarking for IPC Industries

ENVIRONMENTAL BENCHMARKING FOR IPC INDUSTRIES: FINAL REPORT. Prepared for the Environmental Protection Agency by Clean Technology Centre, Cork Institute of Technology. Authors: Noel Duffy, Colman Mc Carthy and Matthias Zoehrer. ENVIRONMENTAL PROTECTION AGENCY (An Ghníomhaireacht um Chaomhnú Comhshaoil), PO Box 3000, Johnstown Castle, Co. Wexford, Ireland. Telephone: +353-53-60600, Fax: +353-53-60699, Email: [email protected] Website: www.epa.ie. © Environmental Protection Agency 2002. ACKNOWLEDGEMENTS: This report has been prepared as part of the Environmental Research Technological Development and Innovation Programme under the Productive Sector Operational Programme 2000-2006. The programme is financed by the Irish Government under the National Development Plan. It is administered on behalf of the Department of the Environment and Local Government by the Environmental Protection Agency, which has the statutory function of co-ordinating and promoting environmental research. DISCLAIMER: Although every effort has been made to ensure the accuracy of the material contained in this publication, complete accuracy cannot be guaranteed. Neither the Environmental Protection Agency nor the author(s) accept any responsibility whatsoever for loss or damage occasioned or claimed to have been occasioned, in part or in full, as a consequence of any person acting, or refraining from acting, as a result of a matter contained in this publication. All or part of this publication may be reproduced without further permission, provided the source is acknowledged. Published by the Environmental Protection Agency, Ireland. PRINTED ON RECYCLED PAPER. ISBN:1-84095- to come 06/01/300. Price: IR£5/€6.35. Contents: Executive Summary; 1 INTRODUCTION

Table of Contents From

Standards, Publications & Software from Global Engineering Documents®. Global Engineering Documents®, the retail arm of IHS, is your single source for individual standards, publications, and integrated collections via hardcopy or electronic delivery. Accessing critical technical information has never been easier! global.ihs.com. Copyright © 2004 by Information Handling Services Inc. All rights reserved. No portion of this material may be reprinted in any form without the expressed written permission of the publisher, Information Handling Services. Global Engineering Documents and logo are trademarks of Information Handling Services Inc. Registered U.S. Patent and Trademark Office. All other trademarks, brands and product names are the property of their respective owners. Table of Contents: Aerospace/Aviation; Annual Book of ASTM Standards; Automotive/Heavy Equipment; Boiler & Pressure Vessel Code; CE Marking; Color Charts & Standards; Construction; Drawing & Drafting; Electrical/Electronics; Electromagnetic Compatibility/Frequency; Environmental; Fire Protection; Gears; Hardware; Hydraulics; Medical; Metals; Occupational Health and Safety; Petroleum; Plastics; Public Health; Quality; Safety; Telecommunications; Transportation Management Systems; U.S. Government & Military; Welding; Timesaving Services (Free Catalogs Available from Global; Reference Materials & Services; Standards Developing Organization Index; Catalog Index). PRIORITY CODE G040. To order or for more information: 800-854-7179 (USA/Canada) • fax: 303-397-2740 • global.ihs.com. Aerospace/Aviation. AV DATA®: With AV-DATA® you have access to critical aviation information to help ensure worldwide regulatory compliance, airworthiness, Aerospace Industries Association: Global is the worldwide distributor of AIA standards and publications.