Broom: Sweeping out Garbage Collection from Big Data Systems


Recommended publications

Memory-Safety Challenge Considered Solved? An In-Depth Study with All Rust CVEs

Memory-Safety Challenge Considered Solved? An In-Depth Study with All Rust CVEs. Hui Xu (School of Computer Science, Fudan University), Zhuangbin Chen (Dept. of CSE, The Chinese University of Hong Kong), Mingshen Sun (Baidu Security), Yangfan Zhou (School of Computer Science, Fudan University), Michael R. Lyu (Dept. of CSE, The Chinese University of Hong Kong). Rust is an emerging programming language that aims at preventing memory-safety bugs without sacrificing much efficiency. The claimed property is very attractive to developers, and many projects have started using the language. However, can Rust achieve the memory-safety promise? This paper studies the question by surveying 186 real-world bug reports collected from several origins, which contain all existing Rust CVEs (Common Vulnerabilities and Exposures) of memory-safety issues by 2020-12-31. We manually analyze each bug and extract its culprit patterns. Our analysis shows that Rust can keep its promise that all memory-safety bugs require unsafe code, and that many memory-safety bugs in our dataset are mild soundness issues that only leave a possibility to write memory-safety bugs without unsafe code. Furthermore, we summarize three typical categories of memory-safety bugs: automatic memory reclaim, unsound function, and unsound generic or trait. While automatic memory reclaim bugs are related to the side effects of Rust's newly adopted ownership-based resource management scheme, unsound function reveals the essential challenge of Rust development for avoiding unsound code, and unsound generic or trait intensifies the risk of introducing unsoundness. Based on these findings, we propose two promising directions towards improving the security of Rust development, including several best practices of using specific APIs and methods to detect particular bugs involving unsafe code.

Project Snowflake: Non-Blocking Safe Manual Memory Management in .NET

Project Snowflake: Non-blocking Safe Manual Memory Management in .NET. Matthew Parkinson, Dimitrios Vytiniotis, Kapil Vaswani, Manuel Costa, Pantazis Deligiannis (Microsoft Research); Dylan McDermott (University of Cambridge); Aaron Blankstein, Jonathan Balkind (Princeton University). July 26, 2017. Abstract: Garbage collection greatly improves programmer productivity and ensures memory safety. Manual memory management on the other hand often delivers better performance but is typically unsafe and can lead to system crashes or security vulnerabilities. We propose integrating safe manual memory management with garbage collection in the .NET runtime to get the best of both worlds. In our design, programmers can choose between allocating objects in the garbage collected heap or the manual heap. All existing applications run unmodified, and without any performance degradation, using the garbage collected heap. Our programming model for manual memory management is flexible: although objects in the manual heap can have a single owning pointer, we allow deallocation at any program point and concurrent sharing of these objects amongst all the threads in the program. Experimental results from our .NET CoreCLR implementation on real-world applications show substantial performance gains especially in multithreaded scenarios: up to 3x savings in peak working sets and 2x improvements in runtime. 1 Introduction: The importance of garbage collection (GC) in modern software cannot be overstated. GC greatly improves programmer productivity because it frees programmers from the burden of thinking about object lifetimes and freeing memory. Even more importantly, GC prevents temporal memory safety errors, i.e., uses of memory after it has been freed, which often lead to security breaches. Modern generational collectors, such as the .NET GC, deliver great throughput through a combination of fast thread-local bump allocation and cheap collection of young objects [63, 18, 61].

MemSafe: Ensuring the Spatial and Temporal Memory Safety of C at Runtime

MemSafe: Ensuring the Spatial and Temporal Memory Safety of C at Runtime. Matthew S. Simpson, Rajeev K. Barua, Department of Electrical & Computer Engineering, University of Maryland, College Park, MD 20742-3256, USA. Abstract: Memory access violations are a leading source of unreliability in C programs. As evidence of this problem, a variety of methods exist that retrofit C with software checks to detect memory errors at runtime. However, these methods generally suffer from one or more drawbacks including the inability to detect all errors, the use of incompatible metadata, the need for manual code modifications, and high runtime overheads. In this paper, we present a compiler analysis and transformation for ensuring the memory safety of C called MemSafe. MemSafe makes several novel contributions that improve upon previous work and lower the cost of safety. These include (1) a method for modeling temporal errors as spatial errors, (2) a compatible metadata representation combining features of object- and … Introduction (excerpt): … dereferencing pointers obtained from invalid pointer arithmetic; and dereferencing uninitialized, NULL or "manufactured" pointers. A temporal error is a violation caused by using a pointer whose referent has been deallocated (e.g. with free) and is no longer a valid object. The most well-known temporal violations include dereferencing "dangling" pointers to dynamically allocated memory and freeing a pointer more than once. Dereferencing pointers to automatically allocated memory (stack variables) is also a concern if the address of the referent "escapes" and is made available outside the function in which it was defined.

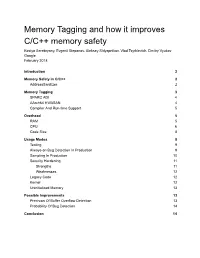

Memory Tagging and How It Improves C/C++ Memory Safety Kostya Serebryany, Evgenii Stepanov, Aleksey Shlyapnikov, Vlad Tsyrklevich, Dmitry Vyukov Google February 2018

Memory Tagging and how it improves C/C++ memory safety. Kostya Serebryany, Evgenii Stepanov, Aleksey Shlyapnikov, Vlad Tsyrklevich, Dmitry Vyukov. Google, February 2018. Contents: Introduction; Memory Safety in C/C++; AddressSanitizer; Memory Tagging; SPARC ADI; AArch64 HWASAN; Compiler and Run-time Support; Overhead; RAM; CPU; Code Size; Usage Modes; Testing; Always-on Bug Detection in Production; Sampling in Production; Security Hardening; Strengths; Weaknesses; Legacy Code; Kernel; Uninitialized Memory; Possible Improvements; Precision of Buffer Overflow Detection; Probability of Bug Detection; Conclusion. Introduction: Memory safety in C and C++ remains largely unresolved. A technique usually called "memory tagging" may dramatically improve the situation if implemented in hardware with reasonable overhead. This paper describes two existing implementations of memory tagging: one is the full hardware implementation in SPARC; the other is a partially hardware-assisted compiler-based tool for AArch64. We describe the basic idea, evaluate the two implementations, and explain how they improve memory safety. This paper is intended to initiate a wider discussion of memory tagging and to motivate the CPU and OS vendors to add support for it in the near future. Memory Safety in C/C++: C and C++ are well known for their performance and flexibility, but perhaps even more for their extreme memory unsafety. This year we are celebrating the 30th anniversary of the Morris Worm, one of the first known exploitations of a memory safety bug, and the problem is still not solved.
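
The excerpt above names memory tagging without showing the mechanism, so here is a minimal Python model of the general idea: each memory granule and each pointer carries a small tag, and an access is allowed only when the two match. The granule size, tag width, and every name below (`TaggedMemory`, `tag_region`, `accessible`, `load`) are assumptions chosen for illustration, not the SPARC ADI or HWASAN interfaces. The model always picks a different tag on retagging, whereas real schemes generally pick tags at random and so detect stale pointers only with some probability.

```python
import random

GRANULE = 16   # assumed granule size in bytes (illustrative)
TAG_BITS = 4   # assumed tag width in bits (illustrative)


class TaggedMemory:
    """Toy model: one tag per granule, one tag per pointer."""

    def __init__(self, size, seed=None):
        self.data = bytearray(size)
        self.tags = [0] * (size // GRANULE)   # tag 0 means "never allocated"
        self.rng = random.Random(seed)

    def tag_region(self, addr, size):
        """Give [addr, addr + size) a fresh tag and return a tagged pointer."""
        first = addr // GRANULE
        last = (addr + size + GRANULE - 1) // GRANULE
        old = self.tags[first]
        # Simplification: never reuse the previous tag and never use tag 0.
        choices = [t for t in range(1, 1 << TAG_BITS) if t != old]
        tag = self.rng.choice(choices)
        for granule in range(first, last):
            self.tags[granule] = tag
        return (tag, addr)

    def accessible(self, ptr, offset=0):
        """True when the pointer tag matches the tag of the target granule."""
        tag, addr = ptr
        return self.tags[(addr + offset) // GRANULE] == tag

    def load(self, ptr, offset=0):
        """Read one byte, raising MemoryError on a tag mismatch."""
        if not self.accessible(ptr, offset):
            raise MemoryError("tag mismatch")
        return self.data[ptr[1] + offset]
```

In this model, a read one byte past a 16-byte object lands in a granule with a different tag and is rejected, and a pointer kept across a free-and-reallocate cycle (modeled by calling `tag_region` again on the same address) no longer matches.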

Memory Management with Explicit Regions by David Edward Gay

Memory Management with Explicit Regions, by David Edward Gay. Engineering Diploma (Ecole Polytechnique Fédérale de Lausanne, Switzerland) 1992; M.S. (University of California, Berkeley) 1997. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California at Berkeley. Committee in charge: Professor Alex Aiken (Chair), Professor Susan L. Graham, Professor Gregory L. Fenves. Fall 2001. Copyright 2001 by David Edward Gay. Abstract: Region-based memory management systems structure memory by grouping objects in regions under program control. Memory is reclaimed by deleting regions, freeing all objects stored therein. Our compiler for C with regions, RC, prevents unsafe region deletions by keeping a count of references to each region. RC's regions have advantages over explicit allocation and deallocation (safety) and traditional garbage collection (better control over memory), and its performance is competitive with both: from 6% slower to 55% faster on a collection of realistic benchmarks. Experience with these benchmarks suggests that modifying many existing programs to use regions is not difficult. An important innovation in RC is the use of type annotations that make the structure of a program's regions more explicit. These annotations also help reduce the overhead of reference counting from a maximum of 25% to a maximum of 12.6% on our benchmarks.
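
The abstract's two mechanisms (freeing every object by deleting its region, and guarding deletion with a per-region reference count) can be sketched in a few lines of Python. This is a toy model under stated assumptions, not RC's API: the names `Region`, `add_ref`, `drop_ref`, and `delete_region` are hypothetical, and returning `False` on an unsafe deletion is a choice made here for illustration.

```python
class Region:
    """A region groups objects so they can all be freed in one step."""

    def __init__(self, name):
        self.name = name
        self.objects = []     # objects allocated in this region
        self.refcount = 0     # references into this region held elsewhere
        self.deleted = False

    def alloc(self, value):
        """Allocate a value in the region and return its handle (an index)."""
        if self.deleted:
            raise RuntimeError("allocation in a deleted region")
        self.objects.append(value)
        return len(self.objects) - 1


def add_ref(region):
    """Record that some pointer outside the region now refers into it."""
    region.refcount += 1


def drop_ref(region):
    """Record that such an outside pointer has gone away."""
    region.refcount -= 1


def delete_region(region):
    """Free every object at once, but only if no references remain."""
    if region.refcount != 0:
        return False          # deletion would leave dangling pointers
    region.objects.clear()
    region.deleted = True
    return True
```

For example, after `add_ref(r)` a call to `delete_region(r)` is refused, and it succeeds once the matching `drop_ref(r)` has run. Counting per region rather than per object is what keeps the bookkeeping cheap in this scheme.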

In This Issue

FALL 2019 IN THIS ISSUE JONATHAN BISS CELEBRATING BEETHOVEN: PART I November 5 DANISH STRING QUARTET November 7 PILOBOLUS COME TO YOUR SENSES November 14–16 GABRIEL KAHANE November 23 MFA IN Fall 2019 | Volume 16, No. 2 ARTS LEADERSHIP FEATURE In This Issue Feature 3 ‘Indecent,’ or What it Means to Create Queer Jewish Theatre in Seattle Dialogue 9 Meet the Host of Tiny Tots Concert Series 13 We’re Celebrating 50 Years Empowering a new wave of Arts, Culture and Community of socially responsible Intermission Brain arts professionals Transmission 12 Test yourself with our Online and in-person trivia quiz! information sessions Upcoming Events seattleu.edu/artsleaderhip/graduate 15 Fall 2019 PAUL HEPPNER President Encore Stages is an Encore arts MIKE HATHAWAY Senior Vice President program that features stories Encore Ad 8-27-19.indd 1 8/27/19 1:42 PM KAJSA PUCKETT Vice President, about our local arts community Sales & Marketing alongside information about GENAY GENEREUX Accounting & performances. Encore Stages is Office Manager a publication of Encore Media Production Group. We also publish specialty SUSAN PETERSON Vice President, Production publications, including the SIFF JENNIFER SUGDEN Assistant Production Guide and Catalog, Official Seattle Manager ANA ALVIRA, STEVIE VANBRONKHORST Pride Guide, and the Seafair Production Artists and Graphic Designers Commemorative Magazine. Learn more at encorespotlight.com. 
Sales MARILYN KALLINS, TERRI REED Encore Stages features the San Francisco/Bay Area Account Executives BRIEANNA HANSEN, AMELIA HEPPNER, following organizations: ANN MANNING Seattle Area Account Executives CAROL YIP Sales Coordinator Marketing SHAUN SWICK Senior Designer & Digital Lead CIARA CAYA Marketing Coordinator Encore Media Group 425 North 85th Street • Seattle, WA 98103 800.308.2898 • 206.443.0445 [email protected] encoremediagroup.com Encore Arts Programs and Encore Stages are published monthly by Encore Media Group to serve musical and theatrical events in the Puget Sound and San Francisco Bay Areas. -

Automated and Modular Refinement Reasoning for Concurrent Programs

Automated and modular refinement reasoning for concurrent programs. Chris Hawblitzel (Microsoft), Erez Petrank (Technion), Shaz Qadeer (Microsoft), Serdar Tasiran (Koç University). Abstract: We present civl, a language and verifier for concurrent programs based on automated and modular refinement reasoning. civl supports reasoning about a concurrent program at many levels of abstraction. Atomic actions in a high-level description are refined to fine-grain and optimized lower-level implementations. A novel combination of automata theoretic and logic-based checks is used to verify refinement. Modular specifications and proof annotations, such as location invariants and procedure pre- and post-conditions, are specified separately, independently at each level in terms of the variables visible at that level. We have implemented civl as an extension to the boogie language and verifier. We have used civl to refine a realistic concurrent garbage collection algorithm from a simple high-level specification down … Introduction (excerpt): … notable successes using the refinement approach include the work of Abrial et al. [2] and the proof of full functional correctness of the seL4 microkernel [37]. This paper presents the first general and automated proof system for refinement verification of shared-memory multithreaded software. We present our verification approach in the context of civl, an idealized concurrent programming language. In civl, a program is described as a collection of procedures whose implementation can use the standard features such as assignment, conditionals, loops, procedure calls, and thread creation. Each procedure accesses shared global variables only through invocations of atomic actions. A subset of the atomic actions may be refined by new procedures and a new program is obtained by replacing the invocation of an atomic action by a call to the corresponding procedure refining the action.

An Evolutionary Study of Linux Memory Management for Fun and Profit Jian Huang, Moinuddin K

An Evolutionary Study of Linux Memory Management for Fun and Profit. Jian Huang, Moinuddin K. Qureshi, and Karsten Schwan, Georgia Institute of Technology. https://www.usenix.org/conference/atc16/technical-sessions/presentation/huang This paper is included in the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16), June 22–24, 2016, Denver, CO, USA. 978-1-931971-30-0. Open access to the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16) is sponsored by USENIX. Abstract: We present a comprehensive and quantitative study on the development of the Linux memory manager. The study examines 4587 committed patches over the last five years (2009-2015) since Linux version 2.6.32. Insights derived from this study concern the development process of the virtual memory system, including its patch distribution and patterns, and techniques for memory optimizations and semantics. Specifically, we find that … Introduction (excerpt): … the patches committed over the last five years from 2009 to 2015. The study covers 4587 patches across Linux versions from 2.6.32.1 to 4.0-rc4. We manually label each patch after carefully checking the patch, its descriptions, and follow-up discussions posted by developers. To further understand patch distribution over memory semantics, we build a tool called MChecker to identify the changes to the key functions in mm. MChecker matches the patches with the source code to track the hot functions that have been updated intensively.

Proceedings, the 57th Annual Meeting, 1981

Proceedings of the 57th Annual Meeting, National Association of Schools of Music, Dallas, Texas, 1981. Number 70, April 1982. Copyright © 1982. ISSN 0190-6615. National Association of Schools of Music, 11250 Roger Bacon Drive, No. 5, Reston, VA 22090. All rights reserved including the right to reproduce this book or parts thereof in any form. Contents, Speeches Presented: Music, Education, and Music Education (Samuel Lipman); The Preparation of Professional Musicians - Articulation between Elementary/Secondary and Postsecondary Training: The Role of Competitions (Robert Freeman); Issues in the Articulation between Elementary/Secondary and Postsecondary Training (Kenneth Wendrich); Trends and Projections in Music Teacher Placement (Charles Lutton); Mechanisms for Assisting Young Professionals to Organize Their Approach to the Job Market: What Techniques Should Be Imparted to Graduating Students? (Brandon Mehrle); The Academy and the Marketplace: Cooperation or Conflict? (Joseph W. Polisi); "Apples and Oranges": Office Applications of the Micro Computer (Lowell M. Creitz); Microcomputers and Music Learning: The Development of the Illinois State University Fine Arts Instruction Center, 1977-1981 (David L. Shrader and David B. Williams); Reaganomics and Arts Legislation (Donald Harris); Reaganomics and Art Education: Realities, Implications, and Strategies for Survival (Frank Tirro); Creative Use of Institutional Resources: Summer Programs, Interim Programs, Preparatory Divisions, Radio and Television Programs, and Recordings (Ray Robinson); Performance Competitions: Relationships to Professional Training and Career Entry (Eileen T. Cline); Teaching Aesthetics of Music Through Performance Group Experiences (David M. Smith); An Array of Course Topics for Music in General Education (Robert R. …

Why Is Memory Safety Still a Concern?

Why is memory safety still a concern? PhD Candidacy Exam. Mohamed (Tarek Ibn Ziad) Hassan, Department of Computer Science, Columbia University. April 9th, 2020, 10:30 A.M. Location: TBD. 1 Candidate Research Area Statement: Languages like C and C++ are the gold standard for implementing a wide range of software systems such as safety critical firmware, operating system kernels, and network protocol stacks for performance and flexibility reasons. As those languages do not guarantee the validity of memory accesses (i.e., enforce memory safety), seemingly benign program bugs can lead to silent memory corruption, difficult-to-diagnose crashes, and, most importantly, security exploitation. Attackers can compromise the security of the whole computing ecosystem by exploiting a memory safety error with a suitably crafted input. Since the spread of the Morris worm in 1988 and despite massive advances in memory safety error mitigations, memory safety errors have risen to be the most exploited vulnerabilities. As part of my research studies at Columbia, I have worked on designing and implementing hardware primitives [1,2] that provide fine-grained memory safety protection with low performance overheads compared to existing work. My ongoing work in this research includes extending the above primitives to system level protection in addition to addressing their current limitations. 2 Faculty Committee Members: The candidate respectfully solicits the guidance and expertise of the following faculty members and welcomes suggestions for other important papers and publications in the exam research area. • Prof. Simha Sethumadhavan • Prof. Steven M. Bellovin • Prof. Suman Jana. 3 Exam Syllabus: The papers have broad coverage in the space of memory safety vulnerabilities and mitigations, which are needed to (1) make a fair assessment of current defensive and offensive techniques and (2) explore new threats and defensive opportunities.

DieHard: Probabilistic Memory Safety for Unsafe Languages

DieHard: Probabilistic Memory Safety for Unsafe Languages. Emery D. Berger (Dept. of Computer Science, University of Massachusetts Amherst, Amherst, MA 01003), Benjamin G. Zorn (Microsoft Research, One Microsoft Way, Redmond, WA 98052). Abstract: Applications written in unsafe languages like C and C++ are vulnerable to memory errors such as buffer overflows, dangling pointers, and reads of uninitialized data. Such errors can lead to program crashes, security vulnerabilities, and unpredictable behavior. We present DieHard, a runtime system that tolerates these errors while probabilistically maintaining soundness. DieHard uses randomization and replication to achieve probabilistic memory safety through the illusion of an infinite-sized heap. DieHard's memory manager randomizes the location of objects in a heap that is at least twice as large as required. This algorithm not only prevents heap corruption but also provides a probabilistic guarantee of avoiding memory errors. Introduction (excerpt): … Invalid frees: Passing illegal addresses to free can corrupt the heap or lead to undefined behavior. Double frees: Repeated calls to free of objects that have already been freed undermine the integrity of freelist-based allocators. Tools like Purify [17] and Valgrind [28, 34] allow programmers to pinpoint the exact location of these memory errors (at the cost of a 2-25X performance penalty), but only reveal those bugs found during testing. Deployed programs thus remain vulnerable to crashes or attack. Conservative garbage collectors can, at the cost of increased runtime and additional memory [10, 20], disable calls to free and so eliminate three of the above errors (invalid frees, dou- …
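
As a rough illustration of the allocation policy the abstract describes (objects placed at random in a heap at least twice as large as required), here is a small Python model. It is a sketch under assumptions, not DieHard's implementation: the class `RandomizedHeap`, its slot-based layout, and its method names are hypothetical, and replication is left out entirely. Ignoring invalid and double frees is a simplification chosen for this model.

```python
import random


class RandomizedHeap:
    """Toy allocator: objects land in random slots of an over-provisioned heap."""

    def __init__(self, max_live, seed=None):
        self.max_live = max_live
        # The heap holds twice as many slots as the program may keep live.
        self.slots = [None] * (2 * max_live)
        self.used = [False] * (2 * max_live)   # allocation bitmap
        self.live = 0
        self.rng = random.Random(seed)

    def malloc(self, value):
        """Store value in a randomly chosen free slot and return its index."""
        if self.live >= self.max_live:
            raise MemoryError("heap is at its configured capacity")
        while True:
            # At least half the slots are free, so this loop ends quickly.
            i = self.rng.randrange(len(self.slots))
            if not self.used[i]:
                self.used[i] = True
                self.slots[i] = value
                self.live += 1
                return i

    def free(self, i):
        """Release a slot; invalid and repeated frees are silently ignored."""
        if 0 <= i < len(self.slots) and self.used[i]:
            self.used[i] = False
            self.slots[i] = None
            self.live -= 1
```

Because at least half of the slots stay empty, a small overflow or a write through a dangling index has a good chance of landing on unused space in this model, which is the intuition behind the probabilistic guarantee mentioned above.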

Simple, Fast and Safe Manual Memory Management

Simple, Fast and Safe Manual Memory Management. Piyus Kedia (Microsoft Research, India); Manuel Costa, Matthew Parkinson, Kapil Vaswani, Dimitrios Vytiniotis (Microsoft Research, UK); Aaron Blankstein (Princeton University, USA). Abstract: Safe programming languages are readily available, but many applications continue to be written in unsafe languages because of efficiency. As a consequence, many applications continue to have exploitable memory safety bugs. Since garbage collection is a major source of inefficiency in the implementation of safe languages, replacing it with safe manual memory management would be an important step towards solving this problem. Previous approaches to safe manual memory management use programming models based on regions, unique pointers, borrowing of references, and ownership types. We propose a much simpler programming model that does not require any of these concepts. Starting from the design of an imperative type safe language (like Java or C#), we just add … Introduction (excerpt): … cause the latter are more efficient. As a consequence, many applications continue to have exploitable memory safety bugs. One of the main reasons behind the higher efficiency of unsafe languages is that they typically use manual memory management, which has been shown to be more efficient than garbage collection [19, 21, 24, 33, 34, 45]. Thus, replacing garbage collection with manual memory management in safe languages would be an important step towards solving this problem. The challenge is how to implement manual memory management efficiently, without compromising safety. Previous approaches to safe manual memory management use programming models based on regions [20, 40], unique pointers [22, 38], borrowing of references [11, 12, 42], and ownership types [10, 12, 13].