The Quadratic Formula: You May Recall the Quadratic Formula for Roots of Quadratic Polynomials $ax^2 + bx + c$

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Solving Cubic Polynomials

Solving Cubic Polynomials

1.1 The general solution to the quadratic equation

There are four steps to finding the zeroes of a quadratic polynomial.

1. First divide by the leading term, making the polynomial monic.
2. Then, given $x^2 + a_1 x + a_0$, substitute $x = y - \frac{a_1}{2}$ to obtain an equation without the linear term. (This is the "depressed" equation.)
3. Solve then for $y$ as a square root. (Remember to use both signs of the square root.)
4. Once this is done, recover $x$ using the fact that $x = y - \frac{a_1}{2}$.

For example, let's solve $2x^2 + 7x - 15 = 0$. First, we divide both sides by 2 to create an equation with leading term equal to one:

$$x^2 + \frac{7}{2}x - \frac{15}{2} = 0.$$

Then replace $x$ by $x = y - \frac{a_1}{2} = y - \frac{7}{4}$ to obtain:

$$y^2 = \frac{169}{16}.$$

Solve for $y$:

$$y = \frac{13}{4} \quad\text{or}\quad -\frac{13}{4}.$$

Then, solving back for $x$, we have

$$x = \frac{3}{2} \quad\text{or}\quad -5.$$

This method is equivalent to "completing the square" and follows the steps taken in developing the much-memorized quadratic formula. For example, if the original equation is our "high school quadratic" $ax^2 + bx + c = 0$, then the first step creates the equation

$$x^2 + \frac{b}{a}x + \frac{c}{a} = 0.$$

We then write $x = y - \frac{b}{2a}$ and obtain, after simplifying,

$$y^2 - \frac{b^2 - 4ac}{4a^2} = 0$$

so that

$$y = \pm\frac{\sqrt{b^2 - 4ac}}{2a}$$

and so

$$x = -\frac{b}{2a} \pm \frac{\sqrt{b^2 - 4ac}}{2a}.$$

The solutions to this quadratic depend heavily on the value of $b^2 - 4ac$. -
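The four steps in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `solve_by_depressing` is hypothetical, and the sketch handles real roots only.

```python
import math

def solve_by_depressing(a, b, c):
    """Solve a*x^2 + b*x + c = 0 over the reals (hypothetical helper)."""
    if a == 0:
        raise ValueError("leading coefficient must be nonzero")
    a1, a0 = b / a, c / a             # step 1: divide by the leading term
    shift = a1 / 2                    # step 2: substitute x = y - a1/2
    y_squared = shift * shift - a0    # depressed equation: y^2 = a1^2/4 - a0
    if y_squared < 0:
        raise ValueError("no real roots")
    y = math.sqrt(y_squared)          # step 3: both signs of the square root
    return (y - shift, -y - shift)    # step 4: recover x = y - a1/2

# The worked example from the excerpt: 2x^2 + 7x - 15 = 0
roots = solve_by_depressing(2, 7, -15)
print(roots)
```

For the worked example, the intermediate value `y_squared` is 169/16, matching the depressed equation in the text.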

Factoring Polynomials

EAP/GWL Rev. 1/2011

Factoring a polynomial is the process of writing it as the product of two or more polynomial factors. Set the factors of a polynomial equation (as opposed to an expression) equal to zero in order to solve for a variable.

The flowchart below illustrates a sequence of steps for factoring polynomials. (The flowchart's formulas and examples did not survive extraction; only its text is kept.)

- First, always factor out the Greatest Common Factor (GCF), if one exists.
- Is the equation a Binomial or a Trinomial?
  - No (four or more terms): Factor by Grouping. 1. Group the terms with common factors and factor out the GCF from each grouping. 2. Continue factoring, by looking for Special Cases, Grouping, etc., until the equation is in simplest form (or all factors are Prime).
  - Yes: check for Special Cases.
    - Binomial (two terms): 1. Difference of Squares; 2. Sum of Squares; 3. Difference of Cubes; 4. Sum of Cubes.
    - Trinomial (three terms): Perfect Square Trinomial.
    - No Special Cases. Choose: 1. Factor by Grouping

Notes:

1. Prime polynomials cannot be factored using integers alone. The Sum of Squares and the quadratic factors of the Sum and Difference of Cubes are always Prime.
2. FYI: A Sum of Squares can be factored using imaginary numbers if you rewrite it as a Difference of Squares.
3. Use S.O.A.P to remember the signs for the factors of the Sum and Difference of Cubes.
4. Completing the Square and the Quadratic Formula are primarily methods for solving equations rather than simply factoring expressions. -

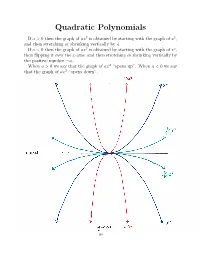

Quadratic Polynomials

Quadratic Polynomials

If $a > 0$ then the graph of $ax^2$ is obtained by starting with the graph of $x^2$, and then stretching or shrinking vertically by $a$. If $a < 0$ then the graph of $ax^2$ is obtained by starting with the graph of $x^2$, then flipping it over the x-axis, and then stretching or shrinking vertically by the positive number $-a$. When $a > 0$ we say that the graph of $ax^2$ "opens up". When $a < 0$ we say that the graph of $ax^2$ "opens down".

If $a, c, d \in \mathbb{R}$ and $a \neq 0$, then the graph of $a(x + c)^2 + d$ is obtained by shifting the graph of $ax^2$ horizontally by $c$, and vertically by $d$. (Remember that $d > 0$ means moving up, $d < 0$ means moving down, $c > 0$ means moving left, and $c < 0$ means moving right.)

If $a \neq 0$, the graph of a function $f(x) = a(x + c)^2 + d$ is called a parabola. The point $(-c, d) \in \mathbb{R}^2$ is called the vertex of the parabola. -
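As a small illustration of the shifting rules in this excerpt, the following Python sketch reads off the vertex and opening direction of $f(x) = a(x + c)^2 + d$. The helper names `describe_parabola` and `f`, and the sample values, are assumptions made for this example.

```python
def describe_parabola(a, c, d):
    """Return the vertex and opening direction of a*(x + c)**2 + d."""
    if a == 0:
        raise ValueError("a must be nonzero for a parabola")
    vertex = (-c, d)                       # c > 0 shifts left, d > 0 shifts up
    direction = "up" if a > 0 else "down"  # sign of a decides the opening
    return vertex, direction

def f(x, a, c, d):
    """Evaluate a*(x + c)**2 + d."""
    return a * (x + c) ** 2 + d

# Sample values chosen only for illustration: f(x) = 2(x + 3)^2 - 1
vertex, direction = describe_parabola(2, 3, -1)
print(vertex, direction)
```

Because this sample parabola opens up, the value at the vertex, `f(-3, 2, 3, -1)`, is the minimum of the function.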

Nature of the Discriminant

Name: ___________________________ Date: ___________ Class Period: _____

Nature of the Discriminant

Quadratic Formula: $x = \dfrac{-b \pm \sqrt{b^2 - 4ac}}{2a}$. Discriminant: $b^2 - 4ac$.

The discriminant predicts the "nature of the roots" of a quadratic equation given that $a$, $b$, and $c$ are rational numbers. It tells you the number of real roots/x-intercepts associated with a quadratic function.

The table has three columns: Value of the Discriminant $b^2 - 4ac$; Example showing nature of roots of $ax^2 + bx + c = 0$; Graph indicating x-intercepts for $y = ax^2 + bx + c$ (graphs not recoverable).

- POSITIVE ($b^2 - 4ac > 0$), not a perfect square. Example: $x^2 - 2x - 7 = 0$.
  $x = \dfrac{-(-2) \pm \sqrt{(-2)^2 - 4(1)(-7)}}{2(1)} = \dfrac{2 \pm \sqrt{32}}{2} = \dfrac{2 \pm 4\sqrt{2}}{2} = 1 \pm 2\sqrt{2}$.
  Discriminant: 32. There are two real roots. These roots are irrational. There are two x-intercepts.
- POSITIVE ($b^2 - 4ac > 0$), perfect square. Example: $x^2 + 6x + 5 = 0$.
  $x = \dfrac{-6 \pm \sqrt{6^2 - 4(1)(5)}}{2(1)} = \dfrac{-6 \pm \sqrt{16}}{2} = \dfrac{-6 \pm 4}{2} = -1, -5$.
  Discriminant: 16. There are two real roots. These roots are rational. There are two x-intercepts.
- ZERO ($b^2 - 4ac = 0$). Example: $x^2 - 2x + 1 = 0$.
  $x = \dfrac{-(-2) \pm \sqrt{(-2)^2 - 4(1)(1)}}{2(1)} = \dfrac{2 \pm \sqrt{0}}{2} = \dfrac{2}{2} = 1$.
  Discriminant: 0. There is one real root (with a multiplicity of 2). This root is rational. There is one x-intercept.
- NEGATIVE ($b^2 - 4ac < 0$). Example: $x^2 - 3x + 10 = 0$.
  $x = \dfrac{-(-3) \pm \sqrt{(-3)^2 - 4(1)(10)}}{2(1)} = \dfrac{3 \pm \sqrt{-31}}{2} = \dfrac{3}{2} \pm \dfrac{\sqrt{31}}{2}\,i$.
  Discriminant: $-31$. There are two complex/imaginary roots. There are no x-intercepts.

Quadratic Formula and Discriminant Practice 1. -
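The four cases in this worksheet can be checked mechanically. The sketch below assumes integer coefficients (so the perfect-square test can use `math.isqrt`); the function name `nature_of_roots` and the wording of the returned labels are made up for this illustration.

```python
import math

def nature_of_roots(a, b, c):
    """Classify the roots of a*x^2 + b*x + c = 0 for integers a, b, c (a != 0)."""
    disc = b * b - 4 * a * c
    if disc < 0:
        return disc, "two complex roots, no x-intercepts"
    if disc == 0:
        return disc, "one rational root of multiplicity 2, one x-intercept"
    if math.isqrt(disc) ** 2 == disc:   # positive perfect square
        return disc, "two rational roots, two x-intercepts"
    return disc, "two irrational roots, two x-intercepts"

# The four worksheet examples
for coefficients in [(1, -2, -7), (1, 6, 5), (1, -2, 1), (1, -3, 10)]:
    print(coefficients, nature_of_roots(*coefficients))
```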

Formula Sheet 1 Factoring Formulas 2 Exponentiation Rules

Formula Sheet

1 Factoring Formulas

For any real numbers $a$ and $b$,

- $(a + b)^2 = a^2 + 2ab + b^2$ (Square of a Sum)
- $(a - b)^2 = a^2 - 2ab + b^2$ (Square of a Difference)
- $a^2 - b^2 = (a - b)(a + b)$ (Difference of Squares)
- $a^3 - b^3 = (a - b)(a^2 + ab + b^2)$ (Difference of Cubes)
- $a^3 + b^3 = (a + b)(a^2 - ab + b^2)$ (Sum of Cubes)

2 Exponentiation Rules

For any real numbers $a$ and $b$, and any rational numbers $\frac{p}{q}$ and $\frac{r}{s}$,

- $a^{p/q}\,a^{r/s} = a^{p/q + r/s} = a^{\frac{ps + qr}{qs}}$ (Product Rule)
- $\dfrac{a^{p/q}}{a^{r/s}} = a^{p/q - r/s} = a^{\frac{ps - qr}{qs}}$ (Quotient Rule)
- $(a^{p/q})^{r/s} = a^{pr/qs}$ (Power of a Power Rule)
- $(ab)^{p/q} = a^{p/q}\,b^{p/q}$ (Power of a Product Rule)
- $\left(\dfrac{a}{b}\right)^{p/q} = \dfrac{a^{p/q}}{b^{p/q}}$ (Power of a Quotient Rule)
- $a^0 = 1$ (Zero Exponent)
- $a^{-p/q} = \dfrac{1}{a^{p/q}}$ (Negative Exponents)
- $\dfrac{1}{a^{-p/q}} = a^{p/q}$ (Negative Exponents)

Remember, there are different notations: $\sqrt[q]{a} = a^{1/q}$ and $\sqrt[q]{a^p} = a^{p/q} = (a^{1/q})^p$.

3 Quadratic Formula

Finally, the quadratic formula: if $a$, $b$ and $c$ are real numbers, then the quadratic polynomial equation

$$ax^2 + bx + c = 0 \qquad (3.1)$$

has (either one or two) solutions

$$x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} \qquad (3.2)$$

4 Points and Lines

Given two points in the plane, $P = (x_1, y_1)$, $Q = (x_2, y_2)$, you can obtain the following information:

1. The distance between them, $d(P, Q) = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$.
2. -
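The distance formula from section 4 of this sheet translates directly into Python. This is a minimal sketch; the function name `distance` and the sample points are assumptions chosen for illustration.

```python
import math

def distance(p, q):
    """Euclidean distance between two points p = (x1, y1) and q = (x2, y2)."""
    (x1, y1), (x2, y2) = p, q
    # math.hypot computes sqrt(dx**2 + dy**2)
    return math.hypot(x2 - x1, y2 - y1)

# Illustrative points forming a 3-4-5 right triangle
d = distance((1, 2), (4, 6))
print(d)
```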

(Trying To) Solve Higher Order Polynomial Equations. Featuring a Recall of Polynomial Long Division

(Trying to) solve higher order polynomial equations. Featuring a recall of polynomial long division.

Some history: The quadratic formula (dating back to antiquity) allows us to solve any quadratic equation $ax^2 + bx + c = 0$.

What about any cubic equation, $ax^3 + bx^2 + cx + d = 0$? In the 1540's Cardano produced a "cubic formula." It is too complicated to actually write down here. See today's extra credit.

What about any quartic equation, $ax^4 + bx^3 + cx^2 + dx + e = 0$? A few decades after Cardano, Ferrari produced a "quartic formula", more complicated still than the cubic formula.

In the 1820's Galois (age ∼19) proved that there is no general algebraic formula for the solutions to a degree 5 polynomial. In fact there is no purely algebraic way to solve $x^5 - x - 1 = 0$. Galois died in a duel at age 21.

This means that, as disheartening as it may feel, we will never get a formulaic solution to a general polynomial equation. The best we can get is tricks that work sometimes. Think of these tricks as analogous to the strategies you use to factor a degree 2 polynomial.

Trick 1: If you find one solution, then you can find a factor and reduce to a simpler polynomial equation.

Example. $2x^3 - x^2 - 1 = 0$ has $x = 1$ as a solution. This means that $x - 1$ MUST divide $2x^3 - x^2 - 1$. Use polynomial long division to write $2x^3 - x^2 - 1$ as $(x - 1) \cdot (\text{something})$. Now find the remaining two roots.

For you: Find all of the solutions to $x^3 + x^2 + x + 1 = 0$ given that $x = -1$ is a solution to this equation. -
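Trick 1 in this excerpt can be sketched in Python. Note the swap: the excerpt asks for polynomial long division, while the sketch uses synthetic division, a shortcut that gives the same quotient and remainder when the divisor has the form $x - r$. The function name `divide_by_linear` is hypothetical.

```python
def divide_by_linear(coeffs, root):
    """Divide a polynomial by (x - root) using synthetic division.

    coeffs lists the coefficients from the highest degree down.
    Returns (quotient coefficients, remainder).
    """
    values = []
    carry = 0
    for coefficient in coeffs:
        carry = carry * root + coefficient
        values.append(carry)
    remainder = values.pop()   # the last value is the remainder
    return values, remainder

# The excerpt's example: 2x^3 - x^2 - 1 with the known solution x = 1
quotient, remainder = divide_by_linear([2, -1, 0, -1], 1)
print(quotient, remainder)
```

A zero remainder confirms that $x - 1$ divides the cubic; the quotient coefficients describe the quadratic whose roots are the remaining two solutions.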

Calculus Method of Solving Quadratic Equations

International Journal of Mathematics Research. ISSN 0976-5840 Volume 9, Number 2 (2017), pp. 155-159 © International Research Publication House http://www.irphouse.com

Calculus Method of Solving Quadratic Equations

Debjyoti Biswadev Sengupta, Smt. Sulochanadevi Singhania School, Jekegram, Thane(W), Maharashtra-400606, India.

Abstract: This paper introduces a new application of differential calculus and integral calculus to solve various forms of quadratic equations.

Keywords: quadratic equations, differential calculus, integral calculus, infinitesimal integral calculus, constant of infinity.

I. INTRODUCTION

This paper is based on the usage of the principles of differential calculus and integral calculus to solve quadratic equations. It is hypothesized that if we use calculus to solve quadratic equations, we will arrive at the same solution that we may get from the quadratic formula method. It is also hypothesized that the quadratic formula can be derived from the quadratic formula. The paper involves the use of differential and integral calculus, which takes the solving of quadratic equations to the next level. The formulas that we will come across or use in the paper are shown in Table 1 under Heading IV. The paper is organized under two main headings. Heading II deals with the use of differential calculus in solving quadratic equations, while Heading III deals with the use of integral calculus in solving quadratic equations. Heading V is concerned with the advantages of using calculus to solve quadratic equations.

II. USE OF DIFFERENTIAL CALCULUS TO SOLVE QUADRATIC EQUATIONS

To solve quadratic equations, we shall require the use of first order differential calculus only. -

IVC Factsheet Quadratics

Imperial Valley College Math Lab

QUADRATICS SUMMARY SHEET (EQUATIONS, FUNCTIONS, AND GRAPHS)

The two basic forms of quadratic equations are: $y = ax^2 + bx + c$ and $y = a(x - h)^2 + k$.

The two basic forms of quadratic functions are: $f(x) = ax^2 + bx + c$ and $f(x) = a(x - h)^2 + k$.

SQUARE ROOT PROPERTY

If $X^2 = k$, then $X = \pm\sqrt{k}$. [$X$ can be any algebraic expression, and $k$ is any real number.]

Ex: $(x - 4)^2 = 5 \;\rightarrow\; x - 4 = \pm\sqrt{5} \;\rightarrow\; x = 4 \pm \sqrt{5}$

QUADRATIC FORMULA

If $ax^2 + bx + c = 0$, then $x = \dfrac{-b \pm \sqrt{b^2 - 4ac}}{2a}$.

Remark: Notice that the equation must be set equal to zero first!

Ex: $4x^2 + 5x - 3 = 0$. First note that $a = 4$, $b = 5$, and $c = -3$. Substitute carefully:

$$x = \frac{-5 \pm \sqrt{5^2 - 4(4)(-3)}}{2(4)} = \frac{-5 \pm \sqrt{25 - (-48)}}{8} = \frac{-5 \pm \sqrt{73}}{8}.$$

Watch the signs! Especially when $a$ or $c$ is negative.

THE DISCRIMINANT

The type of solution to a quadratic equation depends upon what happens under the radical sign ($\sqrt{\ }$). Therefore, if you look at the Quadratic Formula above, you'll see that the "$b^2 - 4ac$" part is important.

Definition: For the quadratic equation $ax^2 + bx + c = 0$, the discriminant is $b^2 - 4ac$.

- If $b^2 - 4ac > 0$ and is a perfect square, then $ax^2 + bx + c = 0$ has two rational solutions.
- If $b^2 - 4ac > 0$ but is not a perfect square, then $ax^2 + bx + c = 0$ has two irrational solutions.
- If $b^2 - 4ac = 0$, then $ax^2 + bx + c = 0$ has one rational solution.
- If $b^2 - 4ac < 0$, then $ax^2 + bx + c = 0$ has two complex solutions (involving the imaginary number $i$). -
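The "substitute carefully" step in this factsheet can be mirrored in Python. This is a sketch, not part of the factsheet: the function name `quadratic_roots` is an assumption, and `cmath.sqrt` is used so that a negative discriminant yields complex solutions instead of an error.

```python
import cmath

def quadratic_roots(a, b, c):
    """Return both solutions of a*x^2 + b*x + c = 0 as complex numbers."""
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic equation")
    sqrt_disc = cmath.sqrt(b * b - 4 * a * c)   # works for negative values too
    return (-b + sqrt_disc) / (2 * a), (-b - sqrt_disc) / (2 * a)

# The factsheet example: 4x^2 + 5x - 3 = 0, so a = 4, b = 5, c = -3
r1, r2 = quadratic_roots(4, 5, -3)
print(r1, r2)
```

Both results carry a zero imaginary part here, since the discriminant 73 is positive.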

Discriminant & Solutions

DISCRIMINANT & SOLUTIONS

Quadratic equations can be written in the form $ax^2 + bx + c = 0$. (To use discriminants, if you have a quadratic (parabolic) equation in the vertex form, $y = a(x - h)^2 + k$, you need to set $y = 0$; multiply through and convert to standard form as above.)

The quadratic formula gives you the roots (where the function becomes zero): $x = \dfrac{-b \pm \sqrt{b^2 - 4ac}}{2a}$.

The part under the square root sign is called the discriminant, because it tells you where these roots appear on a graph. There are only three possibilities: the discriminant is positive, zero, or negative.

1: Positive Discriminant. This gives 2 unequal solutions that are real; the graph passes through the x-axis at each of them. They may be rational (when $a$, $b$, and $c$ are all rational and the discriminant is a perfect square) or irrational. Examples:

- Upward graph: $x^2 - x - 2$; $a = 1$, $b = -1$, $c = -2$. $b^2 - 4ac = 9 > 0$. So we have two real roots: $\{-1, 2\}$.
- Downward graph: $-x^2 - x + 2$; $a = -1$, $b = -1$, $c = 2$. $b^2 - 4ac = 9 > 0$. So we have two real roots: $\{-2, 1\}$; they are different from the above equation because $a$ and $c$ are different.

2: Discriminant = Zero. This means there will be one repeated solution that is a real number. Graphically, the parabola touches the x-axis but doesn't cross it; although it touches in only one point, there are still two roots at that x value. Examples:

- Upward graph: $x^2 - 2x + 1$; $a = 1$, $b = -2$, $c = 1$. $b^2 - 4ac = 0$. So we have a repeated root: $\{1, 1\}$.
- Downward graph: $-x^2 - 2x - 1$; $a = -1$, $b = -2$, $c = -1$. $b^2 - 4ac = 0$. So we have a repeated root: $\{-1, -1\}$; it's different from the above equation because $a$ and $c$ are different.

3: Negative Discriminant. There are NO real solutions (because the graph doesn't cross the x-axis), but there are two imaginary roots that are complex conjugates. -
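The parenthetical about vertex form can be made concrete with a short Python sketch. Expanding $a(x - h)^2 + k$ gives $ax^2 - 2ahx + (ah^2 + k)$, from which the discriminant follows. The function names and the sample values $h = 0.5$, $k = -2.25$ are assumptions chosen so that the result matches the first example above.

```python
def vertex_to_standard(a, h, k):
    """Expand a*(x - h)**2 + k into standard-form coefficients (a, b, c)."""
    return a, -2 * a * h, a * h * h + k

def discriminant(a, b, c):
    """Return b^2 - 4ac for a*x^2 + b*x + c."""
    return b * b - 4 * a * c

# (x - 0.5)^2 - 2.25 expands to x^2 - x - 2
a, b, c = vertex_to_standard(1, 0.5, -2.25)
disc = discriminant(a, b, c)
print((a, b, c), disc)
```

Algebraically the discriminant of the expanded form simplifies to $-4ak$, so in vertex form the signs of $a$ and $k$ alone decide which of the three cases applies.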

Derivation of the Quadratic Formula

Name___________________

Derivation of the Quadratic Formula

General form of a quadratic equation: $ax^2 + bx + c = 0$, $\forall a, b, c \in \Re$, $a \neq 0$

Algebraic Representations (the Directions column is to be filled in):

Step 1: $x^2 + \frac{b}{a}x + \frac{c}{a} = 0$, $a \neq 0$

Step 2: $x^2 + \frac{b}{a}x = -\frac{c}{a}$

Step 3: $x^2 + \frac{b}{a}x + \left(\frac{b}{2a}\right)^2 = -\frac{c}{a} + \left(\frac{b}{2a}\right)^2$

Step 4: $\left(x + \frac{b}{2a}\right)^2 = -\frac{c}{a} + \left(\frac{b}{2a}\right)^2$

Step 5: $\left(x + \frac{b}{2a}\right)^2 = -\frac{c}{a} + \frac{b^2}{4a^2}$

Step 6: $\left(x + \frac{b}{2a}\right)^2 = -\frac{c}{a} \cdot \frac{4a}{4a} + \frac{b^2}{4a^2}$

Step 7: $\left(x + \frac{b}{2a}\right)^2 = \frac{b^2 - 4ac}{4a^2}$

Step 8: $\sqrt{\left(x + \frac{b}{2a}\right)^2} = \pm\sqrt{\frac{b^2 - 4ac}{4a^2}}$

Step 9: $\sqrt{\left(x + \frac{b}{2a}\right)^2} = \pm\frac{\sqrt{b^2 - 4ac}}{\sqrt{4a^2}}$

Step 10: $x + \frac{b}{2a} = \pm\frac{\sqrt{b^2 - 4ac}}{\sqrt{4a^2}}$

Step 11: $x + \frac{b}{2a} = \pm\frac{\sqrt{b^2 - 4ac}}{2a}$

Step 12: $x = -\frac{b}{2a} \pm \frac{\sqrt{b^2 - 4ac}}{2a}$

Step 13: $x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$

MCC@WCCUSD 12/02/11

The steps below are in mixed order. Your task is to arrange them in the correct order.

1) Cut out the 13 steps below.
2) Use the algebraic expressions on the first page to help arrange the steps in the correct order.
3) Paste them in the correct order in the 2nd column.

- Simplify $\sqrt{4a^2}$ on the right side of the equation.
- Subtract $\frac{b}{2a}$ from both sides of the equation.
- Divide the general form of a quadratic equation by $a$.
- Factor the trinomial on the left side of the equation.
- Combine the fractions on the right side of the equation. -

Quadratic Functions, Parabolas, Problem Solving

2.5 Quadratic Functions, Parabolas, and Problem Solving

58. If $f(x) = 2x + 3$ and the domain $D$ of $f$ is given by $D = \{x \mid x^2 + x - 2 \le 0\}$, then (a) draw a graph of $f$, and (b) find the range of $f$. (Hint: What values of $y$ occur on the graph?)

59. The length $L$ of a metal rod is a linear function of its temperature $T$, where $L$ is measured in centimeters and $T$ in degrees Celsius. The following measurements have been made: $L = 124.91$ when $T = 0$, and $L = 125.11$ when $T = 100$. (a) Find a formula that gives $L$ as a function of $T$. (b) What is the length of the rod when its temperature -

63. Area: Given the three lines $y = m(x + 4)$, $y = -m(x + 4)$, and $x = 2$, for what value of $m$ will the three lines form a triangle with an area of 24?

64. (a) Is the point $(-1, 3)$ on the circle $x^2 + y^2 - 4x + 2y - 20 = 0$? (b) If the answer is yes, find an equation for the line that is tangent to the circle at $(-1, 3)$; if the answer is no, find the distance between the x-intercept points of the circle.

65. (a) Show that points $A(1, \sqrt{3})$ and $B(-\sqrt{3}, 1)$ are on the circle $x^2 + y^2 = 4$. (b) Find the area of the shaded region in the diagram. -

MATH 135 Calculus 1, Spring 2016 1.2 Linear and Quadratic Functions

MATH 135 Calculus 1, Spring 2016

Worksheet for Sections 1.2 and 1.3

1.2 Linear and Quadratic Functions

Linear Functions

A linear function is one of the form $f(x) = mx + b$, where $m$ and $b$ are arbitrary constants. It is "linear" in $x$ (no exponents, fractions, trig, etc.). The graph of a linear function is a line. The constant $m$ is the slope of the line and this number has the same value everywhere on the line. If $(x_1, y_1)$ and $(x_2, y_2)$ are any two points on the line, then the slope is given by

$$m = \frac{y_2 - y_1}{x_2 - x_1} = \frac{\Delta y}{\Delta x} \qquad (\Delta = \text{"Delta," means change}).$$

This number will be the same no matter which two points on the line are chosen.

Two important equations for a line are:

1. Slope-intercept form: $y = mx + b$ ($m$ is the slope and $b$ is the y-intercept.)
2. Point-slope form: $y - y_0 = m(x - x_0)$ ($m$ is the slope and $(x_0, y_0)$ is any point on the line.)

If $m > 0$, then the line is increasing, while if $m < 0$, the line is decreasing. When $m = 0$, the line has zero slope and is horizontal (a constant function). Two lines are parallel when they have the same slope, while two lines are perpendicular if the product of their slopes is $-1$.

Exercise 0.1 Find the equation of the line with the given information.

(a) The line passing through the points $(-2, 3)$ and $(4, 1)$.
(b) The line parallel to $2y + 5x = 0$, passing through $(2, 5)$.
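The slope formula and the slope-intercept form can be combined in a short Python sketch. The function name `line_through` and the sample points are assumptions for illustration (they are deliberately not the exercise's points); `Fraction` keeps the slope exact.

```python
from fractions import Fraction

def line_through(p1, p2):
    """Return (m, b) for the line y = m*x + b through two points."""
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2:
        raise ValueError("a vertical line has no slope-intercept form")
    m = Fraction(y2 - y1, x2 - x1)   # slope: change in y over change in x
    b = y1 - m * x1                  # from point-slope form with x = 0
    return m, b

# Illustrative points (1, 2) and (3, 8)
m, b = line_through((1, 2), (3, 8))
print(f"y = {m}x + {b}")
```

Swapping the two points leaves the result unchanged, which reflects the worksheet's remark that the slope does not depend on which points are chosen.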