Pattern Theory: the Mathematics of Perception

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A MATHEMATICIAN's SURVIVAL GUIDE 1. an Algebra Teacher I

A MATHEMATICIAN’S SURVIVAL GUIDE PETER G. CASAZZA 1. An Algebra Teacher I could Understand Emmy award-winning journalist and bestselling author Cokie Roberts once said: As long as algebra is taught in school, there will be prayer in school. 1.1. An Object of Pride. Mathematician’s relationship with the general public most closely resembles “bipolar” disorder - at the same time they admire us and hate us. Almost everyone has had at least one bad experience with mathematics during some part of their education. Get into any taxi and tell the driver you are a mathematician and the response is predictable. First, there is silence while the driver relives his greatest nightmare - taking algebra. Next, you will hear the immortal words: “I was never any good at mathematics.” My response is: “I was never any good at being a taxi driver so I went into mathematics.” You can learn a lot from taxi drivers if you just don’t tell them you are a mathematician. Why get started on the wrong foot? The mathematician David Mumford put it: “I am accustomed, as a professional mathematician, to living in a sort of vacuum, surrounded by people who declare with an odd sort of pride that they are mathematically illiterate.” 1.2. A Balancing Act. The other most common response we get from the public is: “I can’t even balance my checkbook.” This reflects the fact that the public thinks that mathematics is basically just adding numbers. They have no idea what we really do. Because of the textbooks they studied, they think that all needed mathematics has already been discovered. -

2012-13 Annual Report of Private Giving

MAKING THE EXTRAORDINARY POSSIBLE 2012–13 ANNUAL REPORT OF PRIVATE GIVING 2 0 1 2–13 ANNUAL REPORT OF PRIVATE GIVING “Whether you’ve been a donor to UMaine for years or CONTENTS have just made your first gift, I thank you for your Letter from President Paul Ferguson 2 Fundraising Partners 4 thoughtfulness and invite you to join us in a journey Letter from Jeffery Mills and Eric Rolfson 4 that promises ‘Blue Skies ahead.’ ” President Paul W. Ferguson M A K I N G T H E Campaign Maine at a Glance 6 EXTRAORDINARY 2013 Endowments/Holdings 8 Ways of Giving 38 POSSIBLE Giving Societies 40 2013 Donors 42 BLUE SKIES AHEAD SINCE GRACE, JENNY AND I a common theme: making life better student access, it is donors like you arrived at UMaine just over two years for others — specifically for our who hold the real keys to the ago, we have truly enjoyed our students and the state we serve. While University of Maine’s future level interactions with many alumni and I’ve enjoyed many high points in my of excellence. friends who genuinely care about this personal and professional life, nothing remarkable university. Events like the surpasses the sense of reward and Unrestricted gifts that provide us the Stillwater Society dinner and the accomplishment that accompanies maximum flexibility to move forward Charles F. Allen Legacy Society assisting others to fulfill their are one of these keys. We also are luncheon have allowed us to meet and potential. counting on benefactors to champion thank hundreds of donors. -

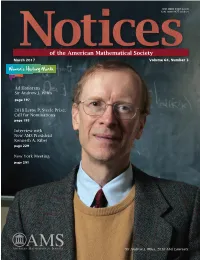

Sir Andrew J. Wiles

ISSN 0002-9920 (print) ISSN 1088-9477 (online) of the American Mathematical Society March 2017 Volume 64, Number 3 Women's History Month Ad Honorem Sir Andrew J. Wiles page 197 2018 Leroy P. Steele Prize: Call for Nominations page 195 Interview with New AMS President Kenneth A. Ribet page 229 New York Meeting page 291 Sir Andrew J. Wiles, 2016 Abel Laureate. “The definition of a good mathematical problem is the mathematics it generates rather Notices than the problem itself.” of the American Mathematical Society March 2017 FEATURES 197 239229 26239 Ad Honorem Sir Andrew J. Interview with New The Graduate Student Wiles AMS President Kenneth Section Interview with Abel Laureate Sir A. Ribet Interview with Ryan Haskett Andrew J. Wiles by Martin Raussen and by Alexander Diaz-Lopez Allyn Jackson Christian Skau WHAT IS...an Elliptic Curve? Andrew Wiles's Marvelous Proof by by Harris B. Daniels and Álvaro Henri Darmon Lozano-Robledo The Mathematical Works of Andrew Wiles by Christopher Skinner In this issue we honor Sir Andrew J. Wiles, prover of Fermat's Last Theorem, recipient of the 2016 Abel Prize, and star of the NOVA video The Proof. We've got the official interview, reprinted from the newsletter of our friends in the European Mathematical Society; "Andrew Wiles's Marvelous Proof" by Henri Darmon; and a collection of articles on "The Mathematical Works of Andrew Wiles" assembled by guest editor Christopher Skinner. We welcome the new AMS president, Ken Ribet (another star of The Proof). Marcelo Viana, Director of IMPA in Rio, describes "Math in Brazil" on the eve of the upcoming IMO and ICM. -

PRESENTAZIONE E LAUDATIO DI DAVID MUMFOD by ALBERTO

PRESENTAZIONE E LAUDATIO DI DAVID MUMFOD by ALBERTO CONTE David Mumford was born in 1937 in Worth (West Sussex, UK) in an old English farm house. His father, William Mumford, was British, ... a visionary with an international perspective, who started an experimental school in Tanzania based on the idea of appropriate technology... Mumford's father worked for the United Nations from its foundations in 1945 and this was his job while Mumford was growing up. Mumford's mother was American and the family lived on Long Island Sound in the United States, a semi-enclosed arm of the North Atlantic Ocean with the New York- Connecticut shore on the north and Long Island to the south. After attending Exeter School, Mumford entered Harvard University. After graduating from Harvard, Mumford was appointed to the staff there. He was appointed professor of mathematics in 1967 and, ten years later, he became Higgins Professor. He was chairman of the Mathematics Department at Harvard from 1981 to 1984 and MacArthur Fellow from 1987 to 1992. In 1996 Mumford moved to the Division of Applied Mathematics of Brown University where he is now Professor Emeritus. Mumford has received many honours for his scientific work. First of all, the Fields Medal (1974), the highest distinction for a mathematician. He was awarded the Shaw Prize in 2006, the Steele Prize for Mathematical Exposition by the American Mathematical Society in 2007, and the Wolf Prize in 2008. Upon receiving this award from the hands of Israeli President Shimon Peres he announced that he will donate the money to Bir Zeit University, near Ramallah, and to Gisha, an Israeli organization that advocates for Palestinian freedom of movement, by saying: I decided to donate my share of the Wolf Prize to enable the academic community in occupied Palestine to survive and thrive. -

Density of Algebraic Points on Noetherian Varieties 3

DENSITY OF ALGEBRAIC POINTS ON NOETHERIAN VARIETIES GAL BINYAMINI Abstract. Let Ω ⊂ Rn be a relatively compact domain. A finite collection of real-valued functions on Ω is called a Noetherian chain if the partial derivatives of each function are expressible as polynomials in the functions. A Noether- ian function is a polynomial combination of elements of a Noetherian chain. We introduce Noetherian parameters (degrees, size of the coefficients) which measure the complexity of a Noetherian chain. Our main result is an explicit form of the Pila-Wilkie theorem for sets defined using Noetherian equalities and inequalities: for any ε> 0, the number of points of height H in the tran- scendental part of the set is at most C ·Hε where C can be explicitly estimated from the Noetherian parameters and ε. We show that many functions of interest in arithmetic geometry fall within the Noetherian class, including elliptic and abelian functions, modular func- tions and universal covers of compact Riemann surfaces, Jacobi theta func- tions, periods of algebraic integrals, and the uniformizing map of the Siegel modular variety Ag . We thus effectivize the (geometric side of) Pila-Zannier strategy for unlikely intersections in those instances that involve only compact domains. 1. Introduction 1.1. The (real) Noetherian class. Let ΩR ⊂ Rn be a bounded domain, and n denote by x := (x1,...,xn) a system of coordinates on R . A collection of analytic ℓ functions φ := (φ1,...,φℓ): Ω¯ R → R is called a (complex) real Noetherian chain if it satisfies an overdetermined system of algebraic partial differential equations, i =1,...,ℓ ∂φi = Pi,j (x, φ), (1) ∂xj j =1,...,n where P are polynomials. -

Download PDF of Summer 2016 Colloquy

Nonprofit Organization summer 2016 US Postage HONORING EXCELLENCE p.20 ONE DAY IN MAY p.24 PAID North Reading, MA Permit No.8 What’s the BUZZ? Bees, behavior & pollination p.12 What’s the Buzz? 12 Bees, Behavior, and Pollination ONE GRADUATE STUDENT’S INVESTIGATION INTO BUMBLEBEE BEHAVIOR The 2016 Centennial Medalists 20 HONORING FRANCIS FUKUYAMA, DAVID MUMFORD, JOHN O’MALLEY, AND CECILIA ROUSE Intellectual Assembly 22 ALUMNI DAY 2016 One Day in May 24 COMMENCEMENT 2016 summer/16 An alumni publication of Harvard University’s Graduate School of Arts and Sciences 3 FROM UNIVERSITY HALL 4 NEWS & NOTES Harvard Horizons, Health Policy turns 25, new Alumni Council leadership. 8 Q&A WITH COLLEEN CAVANAUGH A path-breaking biologist provides new evolutionary insights. 10 SHELF LIFE Elephants, Manchuria, the Uyghur nation and more. 26 NOTED News from our alumni. 28 ALUMNI CONNECTIONS Dudley 25th, Life Lab launches, and recent graduates gathering. summer Cover Image: Patrick Hruby Facing Image: Commencement Begins /16 Photograph by Tony Rinaldo CONTRIBUTORS Xiao-Li Meng dean, PhD ’90 Jon Petitt director of alumni relations and publications Patrick Hruby is a Los Angeles–based Ann Hall editor freelance illustrator and designer with Visual Dialogue design an insatiable appetite for color. His work Colloquy is published three times a year by the Graduate School Alumni has appeared in The New York Times, Association (GSAA). Governed by its Alumni Council, the GSAA represents Fortune Magazine, and WIRED, among and advances the interests of alumni of the Graduate School of Arts and Sciences through alumni events and publications. others. -

Statistical Pattern Recognition: a Review

4 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 22, NO. 1, JANUARY 2000 Statistical Pattern Recognition: A Review Anil K. Jain, Fellow, IEEE, Robert P.W. Duin, and Jianchang Mao, Senior Member, IEEE AbstractÐThe primary goal of pattern recognition is supervised or unsupervised classification. Among the various frameworks in which pattern recognition has been traditionally formulated, the statistical approach has been most intensively studied and used in practice. More recently, neural network techniques and methods imported from statistical learning theory have been receiving increasing attention. The design of a recognition system requires careful attention to the following issues: definition of pattern classes, sensing environment, pattern representation, feature extraction and selection, cluster analysis, classifier design and learning, selection of training and test samples, and performance evaluation. In spite of almost 50 years of research and development in this field, the general problem of recognizing complex patterns with arbitrary orientation, location, and scale remains unsolved. New and emerging applications, such as data mining, web searching, retrieval of multimedia data, face recognition, and cursive handwriting recognition, require robust and efficient pattern recognition techniques. The objective of this review paper is to summarize and compare some of the well-known methods used in various stages of a pattern recognition system and identify research topics and applications which are at the forefront of this exciting and challenging field. Index TermsÐStatistical pattern recognition, classification, clustering, feature extraction, feature selection, error estimation, classifier combination, neural networks. æ 1INTRODUCTION Y the time they are five years old, most children can depend in some way on pattern recognition.. -

1. GIT and Μ-GIT

1. GIT and µ-GIT Valentina Georgoulas Joel W. Robbin Dietmar A. Salamon ETH Z¨urich UW Madison ETH Z¨urich Dietmar did the heavy lifting; Valentina and I made him explain it to us. • A key idea comes from Xiuxiong Chen and Song Sun; they were doing an • analogous infinite dimensional problem. I learned a lot from Sean. • The 1994 edition of Mumford’s book lists 926 items in the bibliography; I • have read fewer than 900 of them. Dietmar will talk in the Geometric Analysis Seminar next Monday. • Follow the talk on your cell phone. • Calc II example of GIT: conics, eccentricity, major axis. • Many important problems in geometry can be reduced to a partial differential equation of the form µ(x)=0, where x ranges over a complexified group orbit in an infinite dimensional sym- plectic manifold X and µ : X g is an associated moment map. Here we study the finite dimensional version.→ Because we want to gain intuition for the infinite dimensional problems, our treatment avoids the structure theory of compact groups. We also generalize from projective manifolds (GIT) to K¨ahler manifolds (µ-GIT). In GIT you start with (X, J, G) and try to find Y with R(Y ) R(X)G. • ≃ In µ-GIT you start with (X,ω,G) and try to solve µ(x) = 0. • GIT = µ-GIT + rationality. • The idea is to find analogs of the GIT definitions for K¨ahler manifolds, show that the µ-GIT definitions and the GIT definitions agree for projective manifolds, and prove the analogs of the GIT theorems in the K¨ahler case. -

The Legacy of Norbert Wiener: a Centennial Symposium

http://dx.doi.org/10.1090/pspum/060 Selected Titles in This Series 60 David Jerison, I. M. Singer, and Daniel W. Stroock, Editors, The legacy of Norbert Wiener: A centennial symposium (Massachusetts Institute of Technology, Cambridge, October 1994) 59 William Arveson, Thomas Branson, and Irving Segal, Editors, Quantization, nonlinear partial differential equations, and operator algebra (Massachusetts Institute of Technology, Cambridge, June 1994) 58 Bill Jacob and Alex Rosenberg, Editors, K-theory and algebraic geometry: Connections with quadratic forms and division algebras (University of California, Santa Barbara, July 1992) 57 Michael C. Cranston and Mark A. Pinsky, Editors, Stochastic analysis (Cornell University, Ithaca, July 1993) 56 William J. Haboush and Brian J. Parshall, Editors, Algebraic groups and their generalizations (Pennsylvania State University, University Park, July 1991) 55 Uwe Jannsen, Steven L. Kleiman, and Jean-Pierre Serre, Editors, Motives (University of Washington, Seattle, July/August 1991) 54 Robert Greene and S. T. Yau, Editors, Differential geometry (University of California, Los Angeles, July 1990) 53 James A. Carlson, C. Herbert Clemens, and David R. Morrison, Editors, Complex geometry and Lie theory (Sundance, Utah, May 1989) 52 Eric Bedford, John P. D'Angelo, Robert E. Greene, and Steven G. Krantz, Editors, Several complex variables and complex geometry (University of California, Santa Cruz, July 1989) 51 William B. Arveson and Ronald G. Douglas, Editors, Operator theory/operator algebras and applications (University of New Hampshire, July 1988) 50 James Glimm, John Impagliazzo, and Isadore Singer, Editors, The legacy of John von Neumann (Hofstra University, Hempstead, New York, May/June 1988) 49 Robert C. Gunning and Leon Ehrenpreis, Editors, Theta functions - Bowdoin 1987 (Bowdoin College, Brunswick, Maine, July 1987) 48 R. -

Calculus Redux

THE NEWSLETTER OF THE MATHEMATICAL ASSOCIATION OF AMERICA VOLUME 6 NUMBER 2 MARCH-APRIL 1986 Calculus Redux Paul Zorn hould calculus be taught differently? Can it? Common labus to match, little or no feedback on regular assignments, wisdom says "no"-which topics are taught, and when, and worst of all, a rich and powerful subject reduced to Sare dictated by the logic of the subject and by client mechanical drills. departments. The surprising answer from a four-day Sloan Client department's demands are sometimes blamed for Foundation-sponsored conference on calculus instruction, calculus's overcrowded and rigid syllabus. The conference's chaired by Ronald Douglas, SUNY at Stony Brook, is that first surprise was a general agreement that there is room for significant change is possible, desirable, and necessary. change. What is needed, for further mathematics as well as Meeting at Tulane University in New Orleans in January, a for client disciplines, is a deep and sure understanding of diverse and sometimes contentious group of twenty-five fac the central ideas and uses of calculus. Mac Van Valkenberg, ulty, university and foundation administrators, and scientists Dean of Engineering at the University of Illinois, James Ste from client departments, put aside their differences to call venson, a physicist from Georgia Tech, and Robert van der for a leaner, livelier, more contemporary course, more sharply Vaart, in biomathematics at North Carolina State, all stressed focused on calculus's central ideas and on its role as the that while their departments want to be consulted, they are language of science. less concerned that all the standard topics be covered than That calculus instruction was found to be ailing came as that students learn to use concepts to attack problems in a no surprise. -

Two Hundred and Twenty-Second Commencement for the Conferring of Degrees

UNIVERSITY of PENNSYLVANIA Two Hundred and Twenty-Second Commencement for the Conferring of Degrees PHILADELPHIA CIVIC CENTER CONVENTION HALL Monday, May 22, 1978 10:00 A.M. Guests will find this diagram helpful in locating in the Contents on the opposite page under Degrees the approximate seating of the degree candidates. in Course. Reference to the paragraph on page The seating roughly corresponds to the order by seven describing the colors of the candidates school in which the candidates for degrees are hoods according to their fields of study may further presented, beginning at top left with the Faculty of assist guests in placing the locations of the various Arts and Sciences. The actual sequence is shown schools. Contents Page Seating Diagram of the Graduating Students 2 The Commencement Ceremony 4 Commencement Notes 6 Degrees in Course 8 The Faculty of Arts and Sciences 8 The College of General Studies 16 The College of Engineering and Applied Science 17 The Wharton School 23 The Wharton Evening School 27 The Wharton Graduate Division 28 The School of Nursing 33 The School of Allied Medical Professions 35 The Graduate Faculties 36 The School of Medicine 41 The Law School 42 The Graduate School of Fine Arts 44 The School of Dental Medicine 47 The School of Veterinary Medicine 48 The Graduate School of Education 49 The School of Social Work 51 The Annenberg School of Communications 52 Certificates 53 General Honors Program 53 Medical Technology 53 Occupational Therapy 54 Physical Therapy 56 Dental Hygiene 57 Advanced Dental Education 57 Social Work 58 Commissions 59 Army 59 Navy 60 Principal Undergraduate Academic Honor Societies 61 Prizes and Awards 64 Class of 1928 69 Events Following Commencement 71 The Commencement Marshals 72 Academic Honors Insert The Commencement Ceremony MUSIC Valley Forge Military Academy and Junior College Band CAPTAIN JAMES M. -

Crash Prediction and Collision Avoidance Using Hidden

CRASH PREDICTION AND COLLISION AVOIDANCE USING HIDDEN MARKOV MODEL A Thesis Submitted to the Faculty of Purdue University by Avinash Prabu In Partial Fulfillment of the Requirements for the Degree of Master of Science in Electrical and Computer Engineering August 2019 Purdue University Indianapolis, Indiana ii THE PURDUE UNIVERSITY GRADUATE SCHOOL STATEMENT OF THESIS APPROVAL Dr. Lingxi Li, Chair Department of Electrical and Computer Engineering Dr. Yaobin Chen Department of Electrical and Computer Engineering Dr. Brian King Department of Electrical and Computer Engineering Approved by: Dr. Brian King Head of Graduate Program iii Dedicated to my family, friends and my Professors, for their support and encouragement. iv ACKNOWLEDGMENTS Firstly, I would like to thank Dr. Lingxi Li for his continuous support and en- couragement. He has been a wonderful advisor and an exceptional mentor, without whom, my academic journey will not be where it is now. I would also like to to thank Dr. Yaobin Chen, whose course on Control theory has had a great impact in shaping this thesis. I would like to extend my appreciation to Dr. Brian King, who has been a great resource of guidance throughout the course of my Master's degree. A special thanks to Dr. Renran Tian, who is my principle investigator at TASI, IUPUI, for his guidance and encouragement. I also wish to thank Sherrie Tucker, who has always been available to answer my questions, despite her busy schedule. I would like to acknowledge my parents, Mr. & Mrs. Prabhu and my sister Chathurma for their continuous belief and endless love towards me.