SIMD Programming and What You Must Know About CPU Peak FLOPS

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

IEEE Paper Template in A4

Vasantha.N.S. et al, International Journal of Computer Science and Mobile Computing (IJCSMC), A Monthly Journal of Computer Science and Information Technology, Vol. 6, Issue 6, June 2017, pg. 302–306. ISSN 2320–088X. Impact factor: 6.017. Available online at www.ijcsmc.com

Enhancing Performance in Multiple Execution Unit Architecture using Tomasulo Algorithm

Vasantha.N.S.¹, Meghana Kulkarni²
¹Department of VLSI and Embedded Systems, Centre for PG Studies, VTU Belagavi, India
²Associate Professor, Department of VLSI and Embedded Systems, Centre for PG Studies, VTU Belagavi, India

Abstract: Tomasulo's algorithm is a computer architecture hardware algorithm for dynamic scheduling of instructions that allows out-of-order execution, designed to efficiently utilize multiple execution units. It was developed by Robert Tomasulo at IBM. The major innovations of Tomasulo's algorithm include register renaming in hardware. It also uses the concept of reservation stations for all execution units. A common data bus (CDB), on which computed values are broadcast to all reservation stations that may need them, is also present. The algorithm allows for improved parallel execution of instructions that would otherwise stall under earlier algorithms such as scoreboarding.

Keywords: Reservation Station, Register renaming, common data bus, multiple execution unit, register file

I. INTRODUCTION

The instructions in any program may be executed in one of two ways: sequential order or data-flow order. In sequential order the instructions are executed one after the other, but in reality this flow is very rare in programs.

Computer Science 246 Computer Architecture Spring 2010 Harvard University

Computer Science 246: Computer Architecture, Spring 2010, Harvard University. Instructor: Prof. David Brooks

Dynamic Branch Prediction, Speculation, and Multiple Issue

Lecture Outline
• Tomasulo's Algorithm Review (3.1-3.3)
• Pointer-Based Renaming (MIPS R10000)
• Dynamic Branch Prediction (3.4)
• Other Front-end Optimizations (3.5)
  – Branch Target Buffers/Return Address Stack

Tomasulo Review
• Reservation Stations
  – Distribute RAW hazard detection
  – Renaming eliminates WAW hazards
  – Buffering values in Reservation Stations removes WARs
  – Tag match in CDB requires many associative compares
• Common Data Bus
  – Achilles heel of Tomasulo
  – Multiple writebacks (multiple CDBs) expensive
• Load/Store reordering
  – Load address compared with store address in store buffer

Tomasulo Organization
[Figure: Tomasulo organization. From memory: load buffers (Load1–Load6); FP op queue; FP registers; store buffers (to memory); reservation stations (Add1–Add3, Mult1–Mult2); FP adders; FP multipliers; Common Data Bus (CDB).]

Tomasulo Review (cycles 1–20; stages each instruction passes through, in order)
LD F0, 0(R1): Iss M1 M2 M3 M4 M5 M6 M7 M8 Wb
MUL F4, F0, F2: Iss Iss Iss Iss Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb
SD 0(R1), F0: Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2 M3 Wb
SUBI R1, R1, 8: Iss Ex Wb
BNEZ R1, Loop: Iss Ex Wb
LD F0, 0(R1): Iss Iss Iss Iss M Wb
MUL F4, F0, F2: Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb
SD 0(R1), F0: Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2
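The claim that renaming eliminates WAW hazards can be illustrated with a minimal sketch. It uses a simple map-table scheme (closer in spirit to the pointer-based renaming named in the outline than to Tomasulo's reservation-station tags), and it assumes an unlimited supply of physical registers and that every instruction has one destination. The function name `rename_registers`, the `P0, P1, ...` naming, and the two-iteration instruction list are hypothetical, chosen only for illustration.

```python
def rename_registers(program):
    """Give every destination a fresh physical register (P0, P1, ...).

    Assumption: unlimited physical registers, one destination per instruction.
    """
    latest = {}    # architectural register -> physical register with its newest value
    renamed = []
    for op, dest, sources in program:
        # Sources read whichever physical register currently holds the value;
        # registers never written in this fragment keep their original name.
        new_sources = tuple(latest.get(reg, reg) for reg in sources)
        # A fresh destination means a later write cannot clobber an earlier one.
        phys = f"P{len(renamed)}"
        latest[dest] = phys
        renamed.append((op, phys, new_sources))
    return renamed


# Two iterations of a load/multiply pair: F0 and F4 are each written twice (WAW).
loop_body = [
    ("LD", "F0", ("R1",)),
    ("MUL", "F4", ("F0", "F2")),
    ("LD", "F0", ("R1",)),
    ("MUL", "F4", ("F0", "F2")),
]

for instruction in rename_registers(loop_body):
    print(instruction)
```

After renaming, the two loads write P0 and P2 and the two multiplies write P1 and P3, so only the true (RAW) dependences between each load and its multiply remain.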

1 Introduction

Cambridge University Press, 978-0-521-76992-1. Microprocessor Architecture: From Simple Pipelines to Chip Multiprocessors. Jean-Loup Baer. Excerpt.

1 Introduction

Modern computer systems built from the most sophisticated microprocessors and extensive memory hierarchies achieve their high performance through a combination of dramatic improvements in technology and advances in computer architecture. Advances in technology have resulted in exponential growth rates in raw speed (i.e., clock frequency) and in the amount of logic (number of transistors) that can be put on a chip. Computer architects have exploited these factors in order to further enhance performance using architectural techniques, which are the main subject of this book.

Microprocessors are over 30 years old: the Intel 4004 was introduced in 1971. The functionality of the 4004 compared to that of the mainframes of that period (for example, the IBM System/370) was minuscule. Today, just over thirty years later, workstations powered by engines such as (in alphabetical order and without specific processor numbers) the AMD Athlon, IBM PowerPC, Intel Pentium, and Sun UltraSPARC can rival or surpass in both performance and functionality the few remaining mainframes and at a much lower cost. Servers and supercomputers are more often than not made up of collections of microprocessor systems.

It would be wrong to assume, though, that the three tenets that computer architects have followed, namely pipelining, parallelism, and the principle of locality, were discovered with the birth of microprocessors. They were all at the basis of the design of previous (super)computers. The advances in technology made their implementations more practical and spurred further refinements.

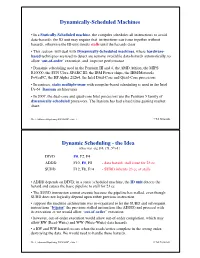

Dynamic Scheduling

Dynamically-Scheduled Machines
• In a statically-scheduled machine, the compiler schedules all instructions to avoid data hazards: the ID unit may require that instructions can issue together without hazards, otherwise the ID unit inserts stalls until the hazards clear
• This section will deal with dynamically-scheduled machines, where hardware-based techniques are used to detect and remove avoidable data hazards automatically, to allow 'out-of-order' execution, and improve performance
• Dynamic scheduling is used in the Pentium III and 4, the AMD Athlon, the MIPS R10000, the SUN Ultra-SPARC III; the IBM Power chips, the IBM/Motorola PowerPC, the HP Alpha 21264, the Intel Dual-Core and Quad-Core processors
• In contrast, static multiple-issue with compiler-based scheduling is used in the Intel IA-64 Itanium architectures
• In 2007, the dual-core and quad-core Intel processors use the Pentium 5 family of dynamically-scheduled processors. The Itanium has had a hard time gaining market share.
Ch. 6, Advanced Pipelining-DYNAMIC, slide 1. © Ted Szymanski

Dynamic Scheduling - the Idea (class text - pg 168, 171, 5th ed.)

DIVD  F0, F2, F4
ADDD  F10, F0, F8     - data hazard, stall issue for 23 cc
SUBD  F12, F8, F14    - SUBD inherits 23 cc of stalls

• ADDD depends on DIVD; in a statically-scheduled machine, the ID unit detects the hazard and causes the basic pipeline to stall for 23 cc
• The SUBD instruction cannot execute because the pipeline has stalled, even though SUBD does not logically depend upon either previous instruction
• Suppose the machine architecture was re-organized to let the SUBD and subsequent instructions "bypass" the previous stalled instruction (the ADDD) and proceed with execution: we would then allow "out-of-order" execution
• However, out-of-order execution would allow out-of-order completion, which may allow RW (Read-Write) and WW (Write-Write) data hazards
• RW and WW hazards occur when the reads/writes complete in the wrong order, destroying the data.
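The DIVD/ADDD/SUBD example can be replayed with a toy timing model. This is only a sketch under stated assumptions: one instruction is fetched per cycle, an instruction starts once its source operands are ready, and the latencies (24 cycles for DIVD, 2 for ADDD and SUBD) are hypothetical values chosen so that ADDD waits 23 cycles as in the slide. The model ignores RW and WW hazards, which do not arise here because the three destinations are distinct. The names `PROGRAM` and `start_cycles` are illustrative.

```python
# (name, destination register, source registers, assumed latency in cycles)
PROGRAM = [
    ("DIVD", "F0", ("F2", "F4"), 24),
    ("ADDD", "F10", ("F0", "F8"), 2),
    ("SUBD", "F12", ("F8", "F14"), 2),
]


def start_cycles(program, in_order):
    """Return the cycle at which each instruction begins execution."""
    ready = {}        # register -> cycle at which its new value is available
    starts = {}
    prev_start = -1
    for slot, (name, dest, sources, latency) in enumerate(program):
        # Registers not written by an earlier instruction are ready at cycle 0.
        operands_ready = max(ready.get(reg, 0) for reg in sources)
        earliest = slot                      # one instruction fetched per cycle
        if in_order:
            # A stalled instruction blocks everything behind it.
            earliest = max(earliest, prev_start + 1)
        start = max(earliest, operands_ready)
        starts[name] = start
        ready[dest] = start + latency
        prev_start = start
    return starts


print("in-order:    ", start_cycles(PROGRAM, in_order=True))
print("out-of-order:", start_cycles(PROGRAM, in_order=False))
```

In both runs ADDD starts at cycle 24 instead of cycle 1, a 23-cycle stall. In the in-order run SUBD inherits that stall and starts at cycle 25; in the out-of-order run it starts at cycle 2, as soon as it has been fetched.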

2.5 Classification of Parallel Computers

// Architectures

2.5 Classification of Parallel Computers

2.5.1 Granularity

In parallel computing, granularity means the amount of computation in relation to communication or synchronisation. Periods of computation are typically separated from periods of communication by synchronization events.

• fine level (same operations with different data)
  ◦ vector processors
  ◦ instruction level parallelism
  ◦ fine-grain parallelism:
    – Relatively small amounts of computational work are done between communication events
    – Low computation to communication ratio
    – Facilitates load balancing
    – Implies high communication overhead and less opportunity for performance enhancement
    – If granularity is too fine it is possible that the overhead required for communications and synchronization between tasks takes longer than the computation.
• operation level (different operations simultaneously)
• problem level (independent subtasks)
  ◦ coarse-grain parallelism:
    – Relatively large amounts of computational work are done between communication/synchronization events
    – High computation to communication ratio
    – Implies more opportunity for performance increase
    – Harder to load balance efficiently

2.5.2 Hardware: Pipelining (was used in supercomputers, e.g. Cray-1)

With N elements in the pipeline and L clock cycles per element, the calculation takes L + N cycles; without the pipeline it takes L * N cycles.

Example of good code for pipelining:

    do i = 1, k
      z(i) = x(i) + y(i)
    end do

Vector processors: fast vector operations (operations on arrays). The previous example is also good for a vector processor (vector addition), but, e.g., recursion is hard to optimise for vector processors.

Example: Intel MMX, a simple vector processor.
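The pipelining estimate above can be turned into a small calculation. This is a minimal sketch that uses the excerpt's own figures, L + N cycles with a pipeline and L * N without; for an ideal pipeline the exact count is L + N - 1, and the excerpt's L + N is a close approximation of it. The function names and the sample values N = 1000, L = 8 are hypothetical.

```python
def cycles_without_pipeline(n_elements, stage_cycles):
    """Each element occupies the whole unit for L cycles: L * N in total."""
    return stage_cycles * n_elements


def cycles_with_pipeline(n_elements, stage_cycles):
    """The excerpt's estimate: fill the pipeline, then one result per cycle."""
    return stage_cycles + n_elements


n, l = 1000, 8   # hypothetical sizes, for illustration only
slow = cycles_without_pipeline(n, l)
fast = cycles_with_pipeline(n, l)
print(f"without pipeline: {slow} cycles")
print(f"with pipeline:    {fast} cycles")
print(f"speedup:          {slow / fast:.2f}x")
```

As N grows the speedup approaches L, which is why long independent loops such as the vector addition in the excerpt suit pipelined hardware.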

Instruction Latencies and Throughput for AMD and Intel X86 Processors

Instruction latencies and throughput for AMD and Intel x86 processors. Torbjörn Granlund. 2019-08-02 09:05Z

Copyright Torbjörn Granlund 2005–2019. Verbatim copying and distribution of this entire article is permitted in any medium, provided this notice is preserved.

This report is work-in-progress. A newer version might be available here: https://gmplib.org/~tege/x86-timing.pdf

In this short report we present latency and throughput data for various x86 processors. We only present data on integer operations. The data on integer MMX and SSE2 instructions is currently limited. We might present more complete data in the future, if there is enough interest.

There are several reasons for presenting this report:
1. Intel's published data were in the past incomplete and full of errors.
2. Intel did not publish any data for 64-bit operations.
3. To allow straightforward comparison of an important aspect of AMD and Intel pipelines.

The here presented data is the result of extensive timing tests. While we have made an effort to make sure the data is accurate, the reader is cautioned that some errors might have crept in.

1 Nomenclature and notation

LNN means latency for NN-bit operation. TNN means throughput for NN-bit operation. The term throughput is used to mean number of instructions per cycle of this type that can be sustained. That implies that more throughput is better, which is consistent with how most people understand the term. Intel use that same term in the exact opposite meaning in their manuals. The notation "P6 0-E", "P4 F0", etc, are used to save table header space.
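The distinction between latency and throughput, as the report defines them, can be made concrete with a rough cost model. This is a sketch only: the figures of 3 cycles latency and 2 instructions per cycle are hypothetical and not taken from the report's tables, and the model ignores every other pipeline limit.

```python
def dependent_chain_cycles(n_ops, latency):
    """Each operation waits for the previous result, so latency dominates."""
    return n_ops * latency


def independent_ops_cycles(n_ops, throughput):
    """Independent operations are bounded by sustained instructions per cycle."""
    return n_ops / throughput


# Hypothetical figures for illustration only.
LATENCY = 3       # cycles from issue until the result is usable
THROUGHPUT = 2    # instructions of this type sustained per cycle

print(dependent_chain_cycles(1000, LATENCY))       # serial dependency chain
print(independent_ops_cycles(1000, THROUGHPUT))    # fully independent operations
```

Under these assumed figures the same 1000 operations cost six times more when each one depends on the previous result, which is why throughput alone does not predict the speed of a dependent computation.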

United States Patent (19) 11 Patent Number: 5,680,565 Glew Et Al

United States Patent [19]. Glew et al.
[11] Patent Number: 5,680,565
[45] Date of Patent: Oct. 21, 1997

[54] METHOD AND APPARATUS FOR PERFORMING PAGE TABLE WALKS IN A MICROPROCESSOR CAPABLE OF PROCESSING SPECULATIVE INSTRUCTIONS
[75] Inventors: Andy Glew, Hillsboro; Glenn Hinton; Haitham Akkary, both of Portland, all of Oreg.
[73] Assignee: Intel Corporation, Santa Clara, Calif.
[21] Appl. No.: 176,363
[22] Filed: Dec. 30, 1993
[51] Int. Cl.: G06F 12/12
[52] U.S. Cl.: 395/415; 395/800; 395/383
[58] Field of Search: 395/400, 375, 395/800, 414, 415, 421.03

[56] References Cited

U.S. PATENT DOCUMENTS
5,136,697  8/1992  Johnson  395/586
5,226,126  7/1993  McFarland et al.  395/394
5,230,068  7/1993  Van Dyke et al.

Other publications
Diefendorff, "Organization of the Motorola 88110 Superscalar RISC Microprocessor," IEEE Micro, Apr. 1996, pp. 40-63.
Yeager, Kenneth C., "The MIPS R10000 Superscalar Microprocessor," IEEE Micro, Apr. 1996, pp. 28-40.
Smotherman et al., "Instruction Scheduling for the Motorola 88110," Microarchitecture 1993 International Symposium, pp. 257-262.
Circello et al., "The Motorola 68060 Microprocessor," COMPCON IEEE Comp. Soc. Int'l Conf., Spring 1993, pp. 73-78.
"Superscalar Microprocessor Design" by Mike Johnson, Advanced Micro Devices, Prentice Hall, 1991.
Popescu, et al., "The Metaflow Architecture," IEEE Micro, pp. 10-13 and 63-73, Jun. 1991.

Primary Examiner: David K. Moore
Assistant Examiner: Kevin Verbrugge
Attorney, Agent, or Firm: Blakely, Sokoloff, Taylor & Zafman

[57] ABSTRACT
A page table walk is performed in response to a data translation lookaside buffer miss based on a speculative

Identifying Bottlenecks in a Multithreaded Superscalar

Identifying Bottlenecks in a Multithreaded Superscalar Microprocessor

Ulrich Sigmund and Theo Ungerer
VIONA Development GmbH, Karlstr. […], D-[…] Karlsruhe, Germany
University of Karlsruhe, Dept. of Computer Design and Fault Tolerance, D-[…] Karlsruhe, Germany

Abstract. This paper presents a multithreaded superscalar processor that permits several threads to issue instructions to the execution units of a wide superscalar processor in a single cycle. Instructions can simultaneously be issued from up to […] threads with a total issue bandwidth of […] instructions per cycle. Our results show that the […]-threaded […]-issue processor reaches a throughput of […] instructions per cycle.

Introduction

Current microprocessors utilize instruction-level parallelism by a deep processor pipeline and by the superscalar technique that issues up to four instructions per cycle from a single thread. VLSI technology will allow future generations of microprocessors to exploit instruction-level parallelism up to […] instructions per cycle or more. However, the instruction-level parallelism found in a conventional instruction stream is limited. The solution is the additional utilization of more coarse-grained parallelism. The main approaches are the multiprocessor chip and the multithreaded processor. The multiprocessor chip integrates two or more complete processors on a single chip. Therefore every unit of a processor is duplicated and used independently of its copies on the chip.

SIMD Extensions

SIMD Extensions

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 12 May 2012 17:14:46 UTC

Contents
Articles: SIMD; MMX (instruction set); 3DNow!; Streaming SIMD Extensions; SSE2; SSE3; SSSE3; SSE4; SSE5; Advanced Vector Extensions; CVT16 instruction set; XOP instruction set
References: Article Sources and Contributors; Image Sources, Licenses and Contributors
Article Licenses: License

SIMD

                 Single instruction   Multiple instruction
Single data      SISD                 MISD
Multiple data    SIMD                 MIMD

Single instruction, multiple data (SIMD), is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously. Thus, such machines exploit data level parallelism.

History

The first use of SIMD instructions was in vector supercomputers of the early 1970s such as the CDC Star-100 and the Texas Instruments ASC, which could operate on a vector of data with a single instruction. Vector processing was especially popularized by Cray in the 1970s and 1980s. Vector-processing architectures are now considered separate from SIMD machines, based on the fact that vector machines processed the vectors one word at a time through pipelined processors (though still based on a single instruction), whereas modern SIMD machines process all elements of the vector simultaneously.[1]

The first era of modern SIMD machines was characterized by massively parallel processing-style supercomputers such as the Thinking Machines CM-1 and CM-2. These machines had many limited-functionality processors that would work in parallel.

UNIT 8B a Full Adder

UNIT 8B Computer Organization: Levels of Abstraction
15110 Principles of Computing, Carnegie Mellon University - CORTINA

A Full Adder
[Figure: full adder circuit with inputs A, B, Cin and outputs S, Cout.]

A  B  Cin | Cout  S
0  0  0   | 0     0
0  0  1   | 0     1
0  1  0   | 0     1
0  1  1   | 1     0
1  0  0   | 0     1
1  0  1   | 1     0
1  1  0   | 1     0
1  1  1   | 1     1

S = A ⊕ B ⊕ Cin
Cout = ((A ⊕ B) ∧ C) ∨ (A ∧ B)

Full Adder (FA)
[Figure: 1-bit full adder block with inputs A, B, Cin and outputs Cout, S.]

Another Full Adder (FA)
http://students.cs.tamu.edu/wanglei/csce350/handout/lab6.html
[Figure: 1-bit full adder block with inputs A, B, Cin and outputs Cout, S.]

8-bit Full Adder
[Figure: eight 1-bit full adders chained from Cin to Cout, taking inputs A0..A7 and B0..B7 and producing S0..S7; drawn as a single 8-bit FA block with 8-bit inputs A and B, carry in, carry out, and 8-bit output S.]

Multiplexer (MUX)
• A multiplexer chooses between a set of inputs.
[Figure: MUX with data inputs D1..D4, select inputs A and B, and output F.]

A  B | F
0  0 | D1
0  1 | D2
1  0 | D3
1  1 | D4

http://www.cise.ufl.edu/~mssz/CompOrg/CDAintro.html

Arithmetic Logic Unit (ALU)
[Figure: ALU with inputs OP1, OP0 and Carry In.]

OP0  OP1 | F
0    0   | A ∧ B
0    1   | A ∨ B
1    0   | A
1    1   | A + B

http://cs-alb-pc3.massey.ac.nz/notes/59304/l4.html

Flip Flop
• A flip flop is a sequential circuit that is able to maintain (save) a state.
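The full-adder equations and the 8-bit chain of 1-bit adders can be checked in a few lines. This is a minimal sketch: the function names `full_adder` and `ripple_add8` are hypothetical, and the bit-by-bit loop simply mirrors the chained-adder slide (carry out of one stage feeding carry in of the next) rather than any particular hardware implementation.

```python
def full_adder(a, b, c_in):
    """One-bit full adder; returns (c_out, s) using the two slide equations."""
    s = a ^ b ^ c_in                      # S = A xor B xor Cin
    c_out = ((a ^ b) & c_in) | (a & b)    # Cout = ((A xor B) and Cin) or (A and B)
    return c_out, s


def ripple_add8(x, y, c_in=0):
    """Chain eight 1-bit full adders; returns (carry_out, 8-bit sum)."""
    total = 0
    carry = c_in
    for i in range(8):                    # bit 0 is the least significant
        carry, s = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        total |= s << i
    return carry, total


# Print the truth table in the same column order as the slide: A B Cin | Cout S
for a in (0, 1):
    for b in (0, 1):
        for c in (0, 1):
            c_out, s = full_adder(a, b, c)
            print(a, b, c, "|", c_out, s)

print(ripple_add8(200, 100))   # 300 does not fit in 8 bits, so carry_out is set
```

The carry out of the last stage signals overflow of the 8-bit result: 200 + 100 yields carry 1 and sum 44, that is 300 - 256.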

An Introduction to Gpus, CUDA and Opencl

An Introduction to GPUs, CUDA and OpenCL
Bryan Catanzaro, NVIDIA Research

Overview
• Heterogeneous parallel computing
• The CUDA and OpenCL programming models
• Writing efficient CUDA code
• Thrust: making CUDA C++ productive

Heterogeneous Parallel Computing
• Latency-optimized CPU: fast serial processing
• Throughput-optimized GPU: scalable parallel processing

Why do we need heterogeneity?
• Why not just use latency optimized processors?
  – Once you decide to go parallel, why not go all the way
  – And reap more benefits
• For many applications, throughput optimized processors are more efficient: faster and use less power
  – Advantages can be fairly significant

Why Heterogeneity?
• Different goals produce different designs
  – Throughput optimized: assume work load is highly parallel
  – Latency optimized: assume work load is mostly sequential
• To minimize latency experienced by 1 thread:
  – lots of big on-chip caches
  – sophisticated control
• To maximize throughput of all threads:
  – multithreading can hide latency … so skip the big caches
  – simpler control, cost amortized over ALUs via SIMD

Latency vs. Throughput

Specifications                   | Westmere-EP (32nm)                                 | Fermi (Tesla C2050)
Processing Elements              | 6 cores, 2 issue, 4 way SIMD @3.46 GHz             | 14 SMs, 2 issue, 16 way SIMD @1.15 GHz
Resident Strands/Threads (max)   | 6 cores, 2 threads, 4 way SIMD: 48 strands         | 14 SMs, 48 SIMD vectors, 32 way SIMD: 21504 threads
SP GFLOP/s                       | 166                                                | 1030
Memory Bandwidth                 | 32 GB/s                                            | 144 GB/s
Register File                    | ~6 kB                                              | 1.75 MB
Local Store/L1 Cache             | 192 kB                                             | 896 kB
L2 Cache                         | 1.5 MB                                             | 0.75 MB
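The SP GFLOP/s figures in the comparison follow from the "Processing Elements" figures, and the arithmetic can be sketched as a peak-FLOPS calculation. The function `peak_gflops` and its parameter names are hypothetical. Two readings are assumptions not stated in the slides: that Westmere-EP's "2 issue" means one SIMD add plus one SIMD multiply per cycle (one floating-point operation per lane per issued instruction), and that each Fermi lane performs a fused multiply-add counted as two floating-point operations.

```python
def peak_gflops(units, issue_width, simd_lanes, clock_ghz, flops_per_lane=1):
    """Peak single-precision GFLOP/s.

    units          -- cores (CPU) or SMs (GPU)
    issue_width    -- SIMD instructions issued per unit per cycle
    simd_lanes     -- single-precision lanes per SIMD instruction
    clock_ghz      -- clock frequency in GHz
    flops_per_lane -- 1 for a plain add or multiply, 2 for a fused multiply-add
                      (the FMA factor is an assumption, not taken from the slides)
    """
    return units * issue_width * simd_lanes * flops_per_lane * clock_ghz


westmere_ep = peak_gflops(units=6, issue_width=2, simd_lanes=4, clock_ghz=3.46)
fermi_c2050 = peak_gflops(units=14, issue_width=2, simd_lanes=16, clock_ghz=1.15,
                          flops_per_lane=2)

print(f"Westmere-EP: {westmere_ep:.0f} GFLOP/s")
print(f"Fermi C2050: {fermi_c2050:.0f} GFLOP/s")
```

Under those assumptions the products come to about 166 and 1030 GFLOP/s, matching the slide. Peak figures of this kind are only reached when every unit issues a full-width SIMD instruction on every cycle.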

Cuda C Programming Guide

CUDA C PROGRAMMING GUIDE
PG-02829-001_v10.0 | October 2018
Design Guide
www.nvidia.com

CHANGES FROM VERSION 9.0
‣ Documented restriction that operator-overloads cannot be __global__ functions in Operator Function.
‣ Removed guidance to break 8-byte shuffles into two 4-byte instructions. 8-byte shuffle variants are provided since CUDA 9.0. See Warp Shuffle Functions.
‣ Passing __restrict__ references to __global__ functions is now supported. Updated comment in __global__ functions and function templates.
‣ Documented CUDA_ENABLE_CRC_CHECK in CUDA Environment Variables.
‣ Warp matrix functions now support matrix products with m=32, n=8, k=16 and m=8, n=32, k=16 in addition to m=n=k=16.

TABLE OF CONTENTS
Chapter 1. Introduction
1.1. From Graphics Processing to General Purpose Parallel Computing
1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
1.3. A Scalable Programming Model
1.4. Document Structure
Chapter 2. Programming Model
2.1. Kernels
2.2. Thread Hierarchy