Programming with Multiple Virtual Address Spaces

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Virtual Memory

Chapter 4 Virtual Memory Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 232 − 1or0xffffffff. On a 64-bit platform, such as IA-64, this is 264 − 1or0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge ad- dress space, there are really three distinct, but equally important, reasons for using virtual memory. 1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory). 2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another pro- cess and, e.g., steal a password. 3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process trig- gering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down. -

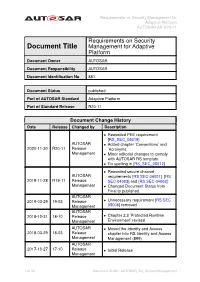

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11 Requirements on Security Document Title Management for Adaptive Platform Document Owner AUTOSAR Document Responsibility AUTOSAR Document Identification No 881 Document Status published Part of AUTOSAR Standard Adaptive Platform Part of Standard Release R20-11 Document Change History Date Release Changed by Description • Reworded PEE requirement [RS_SEC_05019] AUTOSAR • Added chapter ’Conventions’ and 2020-11-30 R20-11 Release ’Acronyms’ Management • Minor editorial changes to comply with AUTOSAR RS template • Fix spelling in [RS_SEC_05012] • Reworded secure channel AUTOSAR requirements [RS SEC 04001], [RS 2019-11-28 R19-11 Release SEC 04003] and [RS SEC 04003] Management • Changed Document Status from Final to published AUTOSAR 2019-03-29 19-03 Release • Unnecessary requirement [RS SEC Management 05006] removed AUTOSAR 2018-10-31 18-10 Release • Chapter 2.3 ’Protected Runtime Management Environment’ revised AUTOSAR • Moved the Identity and Access 2018-03-29 18-03 Release chapter into RS Identity and Access Management Management (899) AUTOSAR 2017-10-27 17-10 Release • Initial Release Management 1 of 32 Document ID 881: AUTOSAR_RS_SecurityManagement Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11 Disclaimer This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intel- lectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights. -

Hardware Configuration with Dynamically-Queried Formal Models

Master’s Thesis Nr. 180 Systems Group, Department of Computer Science, ETH Zurich Hardware Configuration With Dynamically-Queried Formal Models by Daniel Schwyn Supervised by Reto Acherman Dr. David Cock Prof. Dr. Timothy Roscoe April 2017 – October 2017 Abstract Hardware is getting increasingly complex and heterogeneous. With different compo- nents having different views of the system, the traditional assumption of unique phys- ical addresses has become an illusion. To adapt to such hardware, an operating system (OS) needs to understand the complex address translation chains and be able to handle the presence of multiple address spaces. This thesis takes a recently proposed model that formally captures these aspects and applies it to hardware configuration in the Barrelfish OS. To this end, I present Sockeye, a domain specific language that uses the model to de- scribe hardware. I then show, that code relying on knowledge about the address spaces in a system can be statically generated from these specifications. Furthermore, the model is successfully applied to device management, showing that it can also be used to configure hardware at runtime. The implementation presented here does not rely on any platform specific code and it reduced the amount of such code in Barrelfish’s device manager by over 30%. Applying the model to further hardware configuration tasks is expected to have similar effects. i Acknowledgements First of all, I want to thank my advisers Timothy Roscoe, David Cock and Reto Acher- mann for their guidance and support. Their feedback and critical questions were a valuable contribution to this thesis. I’d also like to thank the rest of the Barrelfish team and other members of the Systems Group at ETH for the interesting discussions during meetings and coffee breaks. -

Application Performance and Flexibility on Exokernel Systems

Application Performance and Flexibility On Exokernel Systems –M. F. Kaashoek, D.R. Engler, G.R. Ganger et. al. Presented by Henry Monti Exokernel ● What is an Exokernel? ● What are its advantages? ● What are its disadvantages? ● Performance ● Conclusions 2 The Problem ● Traditional operating systems provide both protection and resource management ● Will protect processes address space, and manages the systems virtual memory ● Provides abstractions such as processes, files, pipes, sockets,etc ● Problems with this? 3 Exokernel's Solution ● The problem is that OS designers must try and accommodate every possible use of abstractions, and choose general solutions for resource management ● This causes the performance of applications to suffer, since they are restricted to general abstractions, interfaces, and management ● Research has shown that more application control of resources yields better performance 4 (Separation vs. Protection) ● An exokernel solves these problems by separating protection from management ● Creates idea of LibOS, which is like a virtual machine with out isolation ● Moves abstractions (processes, files, virtual memory, filesystems), to the user space inside of LibOSes ● Provides a low level interface as close to the hardware as possible 5 General Design Principles ● Exposes as much as possible of the hardware, most of the time using hardware names, allowing libOSes access to things like individual data blocks ● Provides methods to securely bind to resources, revoke resources, and an abort protocol that the kernel itself -

Message Passing for Programming Languages and Operating Systems

Master’s Thesis Nr. 141 Systems Group, Department of Computer Science, ETH Zurich Message Passing for Programming Languages and Operating Systems by Martynas Pumputis Supervised by Prof. Dr. Timothy Roscoe, Dr. Antonios Kornilios Kourtis, Dr. David Cock October 7, 2015 Abstract Message passing as a mean of communication has been gaining popu- larity within domains of concurrent programming languages and oper- ating systems. In this thesis, we discuss how message passing languages can be ap- plied in the context of operating systems which are heavily based on this form of communication. In particular, we port the Go program- ming language to the Barrelfish OS and integrate the Go communi- cation channels with the messaging infrastructure of Barrelfish. We show that the outcome of the porting and the integration allows us to implement OS services that can take advantage of the easy-to-use concurrency model of Go. Our evaluation based on LevelDB benchmarks shows comparable per- formance to the Linux port. Meanwhile, the overhead of the messag- ing integration causes the poor performance when compared to the native messaging of Barrelfish, but exposes an easier to use interface, as shown by the example code. i Acknowledgments First of all, I would like to thank Timothy Roscoe, Antonios Kornilios Kourtis and David Cock for giving the opportunity to work on the Barrelfish OS project, their supervision, inspirational thoughts and critique. Next, I would like to thank the Barrelfish team for the discussions and the help. In addition, I would like to thank Sebastian Wicki for the conversations we had during the entire period of my Master’s studies. -

Virtual Memory - Paging

Virtual memory - Paging Johan Montelius KTH 2020 1 / 32 The process code heap (.text) data stack kernel 0x00000000 0xC0000000 0xffffffff Memory layout for a 32-bit Linux process 2 / 32 Segments - a could be solution Processes in virtual space Address translation by MMU (base and bounds) Physical memory 3 / 32 one problem Physical memory External fragmentation: free areas of free space that is hard to utilize. Solution: allocate larger segments ... internal fragmentation. 4 / 32 another problem virtual space used code We’re reserving physical memory that is not used. physical memory not used? 5 / 32 Let’s try again It’s easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset. 6 / 32 Remember the segmented MMU MMU exception no virtual addr. offset yes // < within bounds index + physical address segment table 7 / 32 The paging MMU MMU exception virtual addr. offset // VPN available ? + physical address page table 8 / 32 the MMU exception exception virtual address within bounds page available Segmentation Paging linear address physical address 9 / 32 a note on the x86 architecture The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use use segmentation to separate code, data nor stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation. -

Advanced X86

Advanced x86: BIOS and System Management Mode Internals Input/Output Xeno Kovah && Corey Kallenberg LegbaCore, LLC All materials are licensed under a Creative Commons “Share Alike” license. http://creativecommons.org/licenses/by-sa/3.0/ ABribuEon condiEon: You must indicate that derivave work "Is derived from John BuBerworth & Xeno Kovah’s ’Advanced Intel x86: BIOS and SMM’ class posted at hBp://opensecuritytraining.info/IntroBIOS.html” 2 Input/Output (I/O) I/O, I/O, it’s off to work we go… 2 Types of I/O 1. Memory-Mapped I/O (MMIO) 2. Port I/O (PIO) – Also called Isolated I/O or port-mapped IO (PMIO) • X86 systems employ both-types of I/O • Both methods map peripheral devices • Address space of each is accessed using instructions – typically requires Ring 0 privileges – Real-Addressing mode has no implementation of rings, so no privilege escalation needed • I/O ports can be mapped so that they appear in the I/O address space or the physical-memory address space (memory mapped I/O) or both – Example: PCI configuration space in a PCIe system – both memory-mapped and accessible via port I/O. We’ll learn about that in the next section • The I/O Controller Hub contains the registers that are located in both the I/O Address Space and the Memory-Mapped address space 4 Memory-Mapped I/O • Devices can also be mapped to the physical address space instead of (or in addition to) the I/O address space • Even though it is a hardware device on the other end of that access request, you can operate on it like it's memory: – Any of the processor’s instructions -

CS 414/415 Systems Programming and Operating Systems

1 MODERN SYSTEMS: EXTENSIBLE KERNELS AND CONTAINERS CS6410 Hakim Weatherspoon Motivation 2 Monolithic Kernels just aren't good enough? Conventional virtual memory isn't what userspace programs need (Appel + Li '91) Application-level control of caching gives 45% speedup (Cao et al '94) Application-specific VM increases performance (Krueger '93, Harty + Cheriton '92) Filesystems for databases (Stonebraker '81) And more... Motivation 3 Lots of problems… Motivation 4 Lots of problems…Lots of design opportunities! Motivation 5 Extensibility Security Performance Can we have all 3 in a single OS? From Stefan Savage’s SOSP 95 presentation Context for these papers 1990’s Researchers (mostly) were doing special purpose OS hacks Commercial market complaining that OS imposed big overheads on them OS research community began to ask what the best way to facilitate customization might be. In the spirit of the Flux OS toolkit… 2010’s containers: single-purpose appliances Unikernels: (“sealable”) single-address space Compile time specialized Motivation 7 1988-1995: lots of innovation in OS development Mach 3, the first “true” microkernel SPIN, Exokernel, Nemesis, Scout, SPACE, Chorus, Vino, Amoeba, etc... And even more design papers Motivation 8 Exploring new spaces Distributed computing Secure computing Extensible kernels (exokernel, unikernel) Virtual machines (exokernel) New languages (spin) New memory management (exokernel, unikernel) Exokernel Dawson R. Engler, M. Frans Kaashoek and James O’Toole Jr. Engler’s Master’s -

Virtual Memory in X86

Fall 2017 :: CSE 306 Virtual Memory in x86 Nima Honarmand Fall 2017 :: CSE 306 x86 Processor Modes • Real mode – walks and talks like a really old x86 chip • State at boot • 20-bit address space, direct physical memory access • 1 MB of usable memory • No paging • No user mode; processor has only one protection level • Protected mode – Standard 32-bit x86 mode • Combination of segmentation and paging • Privilege levels (separate user and kernel) • 32-bit virtual address • 32-bit physical address • 36-bit if Physical Address Extension (PAE) feature enabled Fall 2017 :: CSE 306 x86 Processor Modes • Long mode – 64-bit mode (aka amd64, x86_64, etc.) • Very similar to 32-bit mode (protected mode), but bigger address space • 48-bit virtual address space • 52-bit physical address space • Restricted segmentation use • Even more obscure modes we won’t discuss today xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses) Fall 2017 :: CSE 306 Virt. & Phys. Addr. Spaces in x86 Processor • Both RAM hand hardware devices (disk, Core NIC, etc.) connected to system bus • Mapped to different parts of the physical Virtual Addr address space by the BIOS MMU Data • You can talk to a device by performing Physical Addr read/write operations on its physical addresses Cache • Devices are free to interpret reads/writes in any way they want (driver knows) System Interconnect (Bus) : all addrs virtual DRAM Network … Disk (Memory) Card : all addrs physical Fall 2017 :: CSE 306 Virt-to-Phys Translation in x86 0xdeadbeef Segmentation 0x0eadbeef Paging 0x6eadbeef Virtual Address Linear Address Physical Address Protected/Long mode only • Segmentation cannot be disabled! • But can be made a no-op (a.k.a. -

Protection in the Think Exokernel Christophe Rippert, Jean-Bernard Stefani

Protection in the Think exokernel Christophe Rippert, Jean-Bernard Stefani To cite this version: Christophe Rippert, Jean-Bernard Stefani. Protection in the Think exokernel. 4th CaberNet European Research Seminar on Advances in Distributed Systems, May 2001, Bertinoro, Italy. hal-00308882 HAL Id: hal-00308882 https://hal.archives-ouvertes.fr/hal-00308882 Submitted on 4 Aug 2008 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Protection in the Think exokernel Christophe Rippert,∗ Jean-Bernard Stefani† [email protected], [email protected] Introduction In this paper, we present our preliminary ideas concerning the adaptation of security and protection techniques in the Think exokernel. Think is our proposition of a distributed adaptable kernel, designed according to the exokernel architecture. After summing up the main motivations for using the exokernel architecture, we describe the Think exokernel as it has been implemented on a PowerPC machine. We then present the major protection and security techniques that we plan to adapt to the Think environment, and give an example of how some of these techniques can be combined with the Think model to provide fair and protected resource management. -

OS Extensibility: Spin, Exo-Kernel and L4

OS Extensibility: Spin, Exo-kernel and L4 Extensibility Problem: How? Add code to OS how to preserve isolation? … without killing performance? What abstractions? General principle: mechanisms in OS, policies through the extensions What mechanisms to expose? 2 Spin Approach to extensibility Co-location of kernel and extension Avoid border crossings But what about protection? Language/compiler forced protection Strongly typed language Protection by compiler and run-time Cannot cheat using pointers Logical protection domains No longer rely on hardware address spaces to enforce protection – no boarder crossings Dynamic call binding for extensibility 3 ExoKernel 4 Motivation for Exokernels Traditional centralized resource management cannot be specialized, extended or replaced Privileged software must be used by all applications Fixed high level abstractions too costly for good efficiency Exo-kernel as an end-to-end argument 5 Exokernel Philosophy Expose hardware to libraryOS Not even mechanisms are implemented by exo-kernel They argue that mechanism is policy Exo-kernel worried only about protection not resource management 6 Design Principles Track resource ownership Ensure protection by guarding resource usage Revoke access to resources Expose hardware, allocation, names and revocation Basically validate binding, then let library manage the resource 7 Exokernel Architecture 8 Separating Security from Management Secure bindings – securely bind machine resources Visible revocation – allow libOSes to participate in resource revocation Abort protocol -

Composing Abstractions Using the Null-Kernel

Composing Abstractions using the null-Kernel James Litton Deepak Garg University of Maryland Max Planck Institute for Software Systems Max Planck Institute for Software Systems [email protected] [email protected] Peter Druschel Bobby Bhattacharjee Max Planck Institute for Software Systems University of Maryland [email protected] [email protected] CCS CONCEPTS hardware, such as GPUs, crypto/AI accelerators, smart NICs/- • Software and its engineering → Operating systems; storage devices, NVRAM etc., and to efficiently support new Layered systems; • Security and privacy → Access control. application requirements for functionality such as transac- tional memory, fast snapshots, fine-grained isolation, etc., KEYWORDS that demand new OS abstractions that can exploit existing hardware in novel and more efficient ways. Operating Systems, Capability Systems At its core, the null-Kernel derives its novelty from being ACM Reference Format: able to support and compose across components, called Ab- James Litton, Deepak Garg, Peter Druschel, and Bobby Bhattachar- stract Machines (AMs) that provide programming interfaces jee. 2019. Composing Abstractions using the null-Kernel. In Work- at different levels of abstraction. The null-Kernel uses an ex- shop on Hot Topics in Operating Systems (HotOS ’19), May 13–15, 2019, Bertinoro, Italy. ACM, New York, NY, USA, 6 pages. https: tensible capability mechanism to control resource allocation //doi.org/10.1145/3317550.3321450 and use at runtime. The ability of the null-Kernel to support interfaces at different levels of abstraction accrues several 1 INTRODUCTION benefits: New hardware can be rapidly deployed using rela- tively low-level interfaces; high-level interfaces can easily Abstractions imply choice, one that OS designers must con- be built using low-level ones; and applications can benefit front when designing a programming interface to expose.