cvpaper.challenge in 2016: Futuristic Computer Vision Through 1600

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Kerouac, Jack

Jack Kerouac Comentario [LT1]: En El Camino Título original ON THE ROAD JACK KEROUAC Nació en Lowell (Massachusetts) en 1922, estudió en escuelas católicas y posteriormente en la Universidad de Columbia. Más tarde se enroló en la marina mercante y se dedicó a recorrer los Estados Unidos. Influenciado por las lecturas de London, Hemingway, Saroyan, Wolfe y Joyce, publicó su primera novela, La ciudad y el campo, en 1950, convirtiéndose en uno de los patriarcas de la generación beat, junto a Burroughs y Ginsberg. Entre sus obras más importantes están: El ángel subterráneo (1958), Doctor Sax (1959), Big Sur (1962), Visiones de Cody (1963). En el camino (1957) es la historia de una aventura moral, de una experiencia mística y de un largo vagabundear por los Estados Unidos, con un fuerte contenido autobiográfico. ÍNDICE PRIMERA PARTE ....................................................................................................................................... 6 SEGUNDA PARTE ................................................................................................................................... 75 TERCERA PARTE .................................................................................................................................. 123 CUARTA PARTE .................................................................................................................................... 167 QUINTA PARTE .................................................................................................................................... -

Nancy Wilson Ross

Nancy Wilson Ross: An Inventory of Her Papers at the Harry Ransom Center Descriptive Summary Creator: Ross, Nancy Wilson, 1901-1986 Title: Nancy Wilson Ross Papers Dates: 1913-1986 Extent: 261.5 document boxes, 12 flat boxes, 18 card boxes, 7 galley folders (138 linear feet) Abstract: The papers of this American writer encompass her entire literary career and include manuscript drafts, extensive correspondence, and subject files reflecting her interest in Eastern cultures. Call Number: Manuscript Collection MS-03616 Language: English Access Open for research Administrative Information Acquisition Purchase, 1972 (R5717) Provenance Ross's first shipment of materials to the Ransom Center accompanied her husband Stanley Young's papers, and consisted of Ross's literary output to 1975, including manuscripts, publications, and research materials. The second, posthumous shipment contained manuscripts created since 1974, and all her correspondence, personal, and financial files, as well as files concerning the estate of Stanley Young. Processed by Rufus Lund, 1992-93; completed by Joan Sibley, 1994 Processing note: Materials from the 1975 and 1986 shipments are grouped following Ross's original order, with the exception of pre-1970, special, and current correspondence which were interfiled during processing. An index of selected correspondents follows at the end of this inventory. Repository: Harry Ransom Center, The University of Texas at Austin Ross, Nancy Wilson, 1901-1986 Manuscript Collection MS-03616 2 Ross, Nancy Wilson, 1901-1986 Manuscript Collection MS-03616 Biographical Sketch Nancy Wilson was born in Olympia, Washington, on November 22, 1901. She graduated from the University of Oregon in 1924, and married Charles W. -

Shallow Crustal Composition of Mercury As Revealed by Spectral Properties and Geological Units of Two Impact Craters

Planetary and Space Science 119 (2015) 250–263 Contents lists available at ScienceDirect Planetary and Space Science journal homepage: www.elsevier.com/locate/pss Shallow crustal composition of Mercury as revealed by spectral properties and geological units of two impact craters Piero D’Incecco a,n, Jörn Helbert a, Mario D’Amore a, Alessandro Maturilli a, James W. Head b, Rachel L. Klima c, Noam R. Izenberg c, William E. McClintock d, Harald Hiesinger e, Sabrina Ferrari a a Institute of Planetary Research, German Aerospace Center, Rutherfordstrasse 2, D-12489 Berlin, Germany b Department of Geological Sciences, Brown University, Providence, RI 02912, USA c The Johns Hopkins University Applied Physics Laboratory, Laurel, MD 20723, USA d Laboratory for Atmospheric and Space Physics, University of Colorado, Boulder, CO 80303, USA e Westfälische Wilhelms-Universität Münster, Institut für Planetologie, Wilhelm-Klemm Str. 10, D-48149 Münster, Germany article info abstract Article history: We have performed a combined geological and spectral analysis of two impact craters on Mercury: the Received 5 March 2015 15 km diameter Waters crater (106°W; 9°S) and the 62.3 km diameter Kuiper crater (30°W; 11°S). Using Received in revised form the Mercury Dual Imaging System (MDIS) Narrow Angle Camera (NAC) dataset we defined and mapped 9 October 2015 several units for each crater and for an external reference area far from any impact related deposits. For Accepted 12 October 2015 each of these units we extracted all spectra from the MESSENGER Atmosphere and Surface Composition Available online 24 October 2015 Spectrometer (MASCS) Visible-InfraRed Spectrograph (VIRS) applying a first order photometric correc- Keywords: tion. -

The Parliament of Poets: an Epic Poem

The Parliament of Poets “Like a story around a campfire.” —From the Audience “A great epic poem of startling originality and universal significance, in every way partaking of the nature of world literature.” —Dr. Hans-George Ruprecht, CKCU Literary News, Carleton University, Ottawa, Canada “A remarkable poem by a uniquely inspired poet, taking us out of time into a new and unspoken consciousness...” —Kevin McGrath, Lowell House, South Asian Studies, Harvard University “Mr. Glaysher has written an epic poem of major importance... Truly a major accomplishment and contribution to American Letters... A landmark achievement.” —ML Liebler, Department of English, Wayne State University, Detroit, Michigan “Glaysher is really an epic poet and this is an epic poem! Glaysher has written a masterpiece...” —James Sale (UK), The Society of Classical Poets “And a fine major work it is.” —Arthur McMaster, Contributing Editor, Poets’ Quarterly; Department of English, Converse College, Spartanburg, South Carolina “This Great Poem promises to be the defining Epic of the Age and will be certain to endure for many Centuries. Frederick Glaysher uses his great Poetic and Literary Skills in an artistic way that is unique for our Era and the Years to come. I strongly recommend this book to all those who enjoy the finest Poetry. A profound spiritual message for humanity.” —Alan Jacobs, Poet Writer Author, Amazon UK Review, London “Very readable and intriguingly enjoyable. A masterpiece that will stand the test of time.” —Poetry Cornwall, No. 36, England, UK “Bravo to the Poet for this toilsome but brilliant endeavour.” —Umme Salma, Transnational Literature, Flinders University, Adelaide, Australia “Am in awe of its brilliance.. -

Space Weathering on Mercury

Advances in Space Research 33 (2004) 2152–2155 www.elsevier.com/locate/asr Space weathering on Mercury S. Sasaki *, E. Kurahashi Department of Earth and Planetary Science, The University of Tokyo, Tokyo 113 0033, Japan Received 16 January 2003; received in revised form 15 April 2003; accepted 16 April 2003 Abstract Space weathering is a process where formation of nanophase iron particles causes darkening of overall reflectance, spectral reddening, and weakening of absorption bands on atmosphereless bodies such as the moon and asteroids. Using pulse laser irra- diation, formation of nanophase iron particles by micrometeorite impact heating is simulated. Although Mercurian surface is poor in iron and rich in anorthite, microscopic process of nanophase iron particle formation can take place on Mercury. On the other hand, growth of nanophase iron particles through Ostwald ripening or repetitive dust impacts would moderate the weathering degree. Future MESSENGER and BepiColombo mission will unveil space weathering on Mercury through multispectral imaging observations. Ó 2003 COSPAR. Published by Elsevier Ltd. All rights reserved. 1. Introduction irradiation should change the optical properties of the uppermost regolith surface of atmosphereless bodies. Space weathering is a proposed process to explain Although Hapke et al. (1975) proposed that formation spectral mismatch between lunar soils and rocks, and of iron particles with sizes from a few to tens nanome- between asteroids (S-type) and ordinary chondrites. ters should be responsible for the optical property Most of lunar surface and asteroidal surface exhibit changes, impact-induced formation of glassy materials darkening of overall reflectance, spectral reddening had been considered as a primary cause for space (darkening of UV–Vis relative to IR), and weakening of weathering. -

THE 53Rd INTERNATIONAL CONFERENCE on ELECTRON, ION, and PHOTON BEAM TECHNOLOGY & NANOFABRICATION

THE 53rd INTERNATIONAL CONFERENCE on ELECTRON, ION, and PHOTON BEAM TECHNOLOGY & NANOFABRICATION Marco Island Marriott Resort, Golf Club and Spa Marco Island, Florida May 26 – May 29, 2009 Co-sponsored by: The American Vacuum Society in cooperation with: The Electron Devices Society of the Institute of Electrical and Electronic Engineers and The Optical Society of America Conference at a Glance Conference at a Glance CONFERENCE ORGANIZATION CONFERENCE CHAIR Stephen Chou, Princeton University PROGRAM CHAIR Elizabeth Dobisz, Hitachi Global Storage Technologies MEETING PLANNER Melissa Widerkehr, Widerkehr and Associates STEERING COMMITTEE R. Blakie, University of Canterbury A. Brodie, KLA-Tencor S. Brueck, University of New Mexico S. Chou, Princeton University E. Dobisz, Hitachi Global Storage Technologies M. Feldman, Louisiana State University C. Hanson, SPAWAR J.A. Liddle, NIST F. Schellenberg, Mentor Graphics G. Wallraff, IBM ADVISORY COMMITTEE Ilesanmi Adesida, Robert Bakish, Alec N. Broers, John H. Bruning, Franco Cerrina, Harold Craighead, K. Cummings, N. Economou, D. Ehrlich, R. Englestad, T. Everhart, M. Gesley, T. Groves, L. Harriott, M. Hatzakis, F. Hohn, R. Howard, E. Hu, J. Kelly, D. Kern, R. Kubena, R. Kunz, N. MacDonald, C. Marrian, S. Matsui, M. McCord, D. Meisburger, J. Melngailis, A. Neureuther, T. Novembre, J. Orloff, G. Owen, S. Pang, R.F. Pease, M. Peckerar, H. Pfeifer, J. Randall, D. Resnick, M. Schattenburg, H. Smith, L. Swanson, D. Tennant*, L. Thompson, G. Varnell, R. Viswanathan, A. Wagner, J. Wiesner, C. Wilkinson, A. Wilson, Shalom Wind, E. Wolfe *Financial Trustee COMMERCIAL SESSION Alan Brodie, KLA Tencor Rob Illic, Cornell University Reginald Farrow, New Jersey Institute of Technology Brian Whitehead, Raith PROGRAM COMMITTEE & SECTION HEADS Lithography E- Beam Optical Lithography A. -

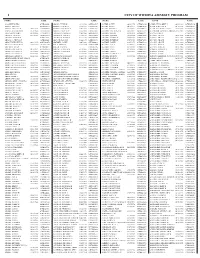

2013 Amnesty List

1 CITY OF WICHITA AMNESTY PROGRAM NAME CASE NAME CASE NAME CASE NAME CASE AAA RENTAL INC, 07PK002542 ADAMS, TYLER R 03/19/1990 08DV002737 AGUIRE, CATHY 04/30/1972 10TM051054 ALBRIGHT, ROBERT J 02/09/1969 06TM007994 AARON, CHELSYE 08PK020790 ADAMS, TYRONE R 01/16/1981 12TM012057 AGUIRE, CATHY 04/30/1972 12TM024836 ALCALA, MIGUEL A 05/12/1975 12CM001823 AARON, CHELSYE R 09/01/1988 12CM000065 ADAMS, VERONICA A 10/07/1993 12TM053071 AGUIRE, RANDY S 02/15/1967 08DU000620 ALCALA-GONZALEZ, JOSE 10/15/1983 08TM052279 AARON, ELIZABETH N 12/24/1980 12TM055461 ADAMS, VINCENT P 02/16/1984 10TM043981 AGUIRRE, CATALINA M 04/30/1972 09CM002133 ALCANTAR-SANCHEZ, ADRIAN07/10/1983 11TM064524 ABALOS, RICARDO 03/29/1966 11TM053312 ADAMS, YOLUNDA M 12/07/1966 08PK026942 AGUIRRE, DAVID 08/18/1973 08TM004772 ALCON, LENA M 07PK014916 ABARCA, LAURA O 04/10/1989 10TM002892 ADAMSON, JASON R 02/23/1972 09TM070757 AGUIRRE, DAVID R 09/26/1986 12TM048738 ALCORN, CHAZ E 08/01/1988 07DR001472 ABARCA, VICTOR M 06PK024766 ADAMSON, ROGER C 10/16/1950 07CM004738 AGUIRRE, ESTHER N 07/07/1989 08CM004521 ALCORN, CHAZ E 08/01/1988 07DV002172 ABASOLO, CECILIA P 12PK009648 ADCOCK, JASON J 09/24/1977 12DR000991 AGUIRRE, ESTHER N 07/07/1989 09TM072570 ALCORN, CHAZ E 08/01/1988 07DV002970 ABBOTT, KENNETH D 08/11/1950 08DU000470 ADEDRES, LOPEZ 03/07/1981 07TM004213 AGUIRRE, JAMIE L 11/05/1975 09TM065312 ALCORN, CHAZ E 08/01/1988 08TM028698 ABBOTT, NICHOLAS D 01/05/1980 10TM057545 ADER, ADAM M 05/10/1984 09DV002244 AGUIRRE, JESUS 01/14/1973 08TM014097 ALCORN, JOHN D 07/23/1964 09PK008906 -

Kaae, Leonard Kuuleinamoku, July 19, 2012 Leonard Kuuleinamoku Kaae, 84, of Honolulu, a Retired Hawaiian Tug & Barge Seaman and an Army Veteran, Died

Kaae, Leonard Kuuleinamoku, July 19, 2012 Leonard Kuuleinamoku Kaae, 84, of Honolulu, a retired Hawaiian Tug & Barge seaman and an Army veteran, died. He was born in Honolulu. He is survived by wife Ruth H. and sisters Ethel Hardley and Rose Giltner. Private services. [Honolulu Star-Advertiser 11 August 2012] Kaahanui, Agnes Lily Kahihiulaokalani, 77, of Honolulu, Hawaii, passed away June 14, 2012 at Kuakini Medical Center. Born July 10, 1934 in Honolulu, Hawaii. She was retired Maintenance Housekeeping Personel at Iolani Palace. She is survived by sons, Clifford Kalani (Marylyn) Kaahanui, Clyde Haumea Kaahanui, Cyrus Kamea Aloha Kaahanui, Hiromi (Jeanette) Fukuzawa; daughters, Katherine Ku’ulei Kaahanui, Kathleen Kuuipo (Arthur) Sing, Karen Kehaulani Kaahanui; 14 grandchildren; 10 great-grandchildren; sister, Rebecca Leimomi Naha. Visitation 10:00 a.m. Thursday (7/19) at Mililani Downtown Mortuary, Funeral Service 11:00 a.m., Burial 2:00 p.m. at Hawaiian Memorial Park Cemetery. Casual Attire. Flowers Welcome. [Honolulu Star-Advertiser 17 July 2012] Kaahanui, Agnes Lily Kahihiulaokalani, June 14, 2012 Agnes Lily Kahihiulaokalani Kaahanui, 77, of Honolulu, a retired Iolani Palace maintenance housekeeping worker, died in Kuakini Medical Center. She was born in Honolulu. She is survived by sons Clifford K., Clyde H. and Cyrus K. Kaahanui, and Hiromi Fukuzawa; daughters Katherine K. and Karen K. Kaahanui, and Kathleen K. Sing; sister Rebecca L. Naha; 14 grandchildren; and 10 great- grandchildren. Visitation: 10 a.m. Thursday at Mililani Downtown Mortuary. Services: 11 a.m. Burial: 2 p.m. at Hawaiian Memorial Park. Casual attire. Flowers welcome. [Honolulu Star- Advertiser 17 July 2012] Kaahanui, Carolyn Luana, July 21, 2012 Carolyn Luana Kaahanui, 59, of Kahului, a Makena Surf housekeeping department employee, died in Maui Memorial Medical Center. -

At NALC's Doorstep

Volume 134/Number 2 February 2021 In this issue President’s Message 1 Branch Election Notices 81 Special issue LETTER CARRIER POLITICAL FUND The monthly journal of the NATIONAL ASSOCIATION OF LETTER CARRIERS ANARCHY at NALC’s doorstep— PAGE 1 { InstallInstall thethe freefree NALCNALC MemberMember AppApp forfor youryour iPhoneiPhone oror AndroidAndroid smartphonesmartphone As technology increases our ability to communicate, NALC must stay ahead of the curve. We’ve now taken the next step with the NALC Member App for iPhone and Android smartphones. The app was de- veloped with the needs of letter carriers in mind. The app’s features include: • Workplace resources, including the National • Instantaneous NALC news with Agreement, JCAM, MRS and CCA resources personalized push notifications • Interactive Non-Scheduled Days calendar and social media access • Legislative tools, including bill tracker, • Much more individualized congressional representatives and PAC information GoGo to to the the App App Store Store oror GoogleGoogle Play Play and and search search forfor “NALC “NALC Member Member App”App” toto install install for for free free President’s Message Anarchy on NALC’s doorstep have always taken great These developments have left our nation shaken. Our polit- pride in the NALC’s head- ical divisions are raw, and there now is great uncertainty about quarters, the Vincent R. the future. This will certainly complicate our efforts to advance Sombrotto Building. It sits our legislative agenda in the now-restored U.S. Capitol. But kitty-corner to the United there is reason for hope. IStates Capitol, a magnificent First, we should take solace in the fact that the attack on our and inspiring structure that has democracy utterly failed. -

She Smiles Sadly*.•

Number 5 Volume XXVIII. SEATTLE, WASHINGTON, FEBRUARY 4, 1933 SHE SMILES SADLY*.• • Kwan - Yin, Chinese Goddess of Mercy, some times called the Goddess of Peace, has reason these days for that sardonic expression, although the mocking smile is by no means a new one; she has worn it since the Wei Dynasty, Fifth Century A. D. The Goddess is the property of the Bos ton Museum of Art.— Courtesy The Art Digest. Featured This Week: Stuffed Zoos, by Dr. Herbert H. Gowen "Two Can Play"—, by Mack Mathews Editorials: (Up Hill and Down, Amateur Orchestra In Dissent, by George Pampel Starts, C's and R's, France Buys American) A Woman's Span (A Lyrical Sequence), by Helen Maring two THE TOWN CRIER FEBRUARY 4, 1933 By John Locke Worcester. Illus Stage trated with lantern slides. Puget "In Abraham's Bosom'' (Repertory Sound Academy of Science. Gug Playhouse)—Paul Green's Pulit AROUND THE TOWN genheim Hall. Wednesday, Febru zer prize drama produced by Rep ary 22, 8:15 p. m. ertory Company, with cast of Se attle negro actors. Direction Flor By MARGARET CALLAHAN Radio Highlights . , ence Bean James. A negro chorus sings spirituals. Wednesdays and Young People's Symphony Concert Fridays for limited run. 8:30 p.m. "Camille" (Repertory Playhouse) — Spanish ballroom, The Olympic. —New York Philharmonic, under direction of Bruno Walter. 8:30- "Funny Man" (Repertory Play All-University drama. February February 7, 8:30 p. m. 16 and 18, 8:30 p. m. Violin, piano trio—Jean Margaret 9:15 a. m. Saturday. KOL. house)—Comedy of old time Blue Danube—Viennese music un vaudeville life by Felix von Bres- Crow and Nora Crow Winkler, violinists, and Helen Louise Oles, der direction Dr. -

A Topic Model Approach to Represent and Classify American Football Plays

A Topic Model Approach to Represent and Classify American Football Plays Jagannadan Varadarajan1 1 Advanced Digital Sciences Center of Illinois, Singapore [email protected] 2 King Abdullah University of Science and Technology, Saudi Indriyati Atmosukarto1 Arabia [email protected] 3 University of Illinois at Urbana-Champaign, IL USA Shaunak Ahuja1 [email protected] Bernard Ghanem12 [email protected] Narendra Ahuja13 [email protected] Automated understanding of group activities is an important area of re- a Input ba Feature extraction ae Output motion angle, search with applications in many domains such as surveillance, retail, Play type templates and labels time & health care, and sports. Despite active research in automated activity anal- plays player role run left pass left ysis, the sports domain is extremely under-served. One particularly diffi- WR WR QB RB QB abc Documents RB cult but significant sports application is the automated labeling of plays in ] WR WR . American Football (‘football’), a sport in which multiple players interact W W ....W 1 2 D run right pass right . WR WR on a playing field during structured time segments called plays. Football . RB y y y ] QB QB 1 2 D RB plays vary according to the initial formation and trajectories of players - w i - documents, yi - labels WR Trajectories WR making the task of play classification quite challenging. da MedLDA modeling pass midrun mid pass midrun η βk K WR We define a play as a unique combination of offense players’ trajec- WR y QB d RB QB RB tories as shown in Fig. -

LOOK for the STARS... Faulty Hatch Keeps Teacher, Crew on Hold

V . , f. t.;. *..? * - U — MANCHESTER HERALD. S a tu rd a y , Jan. 85, 1986 LOOK FOR THE STARS... ★ MANCHESTER U S WORLD CONNECTICUT Look tor the CLASSIFIED ADS with STARS; stars help you get Charter move Britain’s Thatcher Moffett charges faces her critics frustrate O’Neill better results. Put a star on your ad and see what a draws support .. page 3 ... page 5 ... page 7 difference it makes. Telephone 643-2711, AAonday-Friday, 8:30 a.m. to 5:00 p.m. 4- KIT ‘N’ CARLYLE ®by Larry Wright I MISCELLANEOUS ITTI c a r s /t r u c k s I FOB SALE. LLiJ FOR SALE Hay for Sale by the Bale. 85 Ford 1-10 Dump 10ml; Cash and carry. Pella 84 Caprice Classic loaded anrliFBtrr^ ManchHSter - A City o( VillagG Charm Hrralft Brothers. Bldwell Street. 15ml; 84 Ltd. Cr. Vic. 4 dr. 643-7405. loaded 20ml; 84 Van Vo- nogon Wgn. loaded 9ml; 84 Cavalier Wgn. ot/ac 2 Matching Bridesmaids si* 25 Cents Gowns. Buroondy with 12ml; 83 Mustang convert Dumas Eleetrie— Having^ Monday, Jan. 27,1986 ecru lace. Excellent con loaded 6ml; 83 Chew OdNd ioba, TrucKrng. 'Nome your own price'— I Floarsandlng dition. Sizes 6 & e. 643-4962. window von loaded 22ml; Homa repairs. Yov twine Fatherland son. Fast, Electrlcol Prablemsfr- lik e new. Special 84 GMC V, ton PU 20ml; 84 H,. we do If. Free esti dependable service.; Need a longe or a snwilj older floors. natuf$d'< mates. Insuretf. 643-0304. Fainting, Paperhanglng Repair? We Ipedollie * stained.