Rapid Brain Responses to Familiar Vs. Unfamiliar Music – an EEG and Pupillometry Study



Recommended publications


Can You Predict a Hit?

Can You Predict a Hit? Finding Potential Hit Songs Through Last.fm Predictors. Bachelor thesis, Marvin Smit (0720798), Department of Artificial Intelligence, Radboud Universiteit Nijmegen. Supervisors: Dr. L.G. Vuurpijl, Dr. F. Grootjen.
Abstract: In the field of Music Information Retrieval, studies on Hit Song Science are becoming more and more popular. Predicting whether a song has the potential to become a hit is an interesting challenge. This paper describes my research on how hit song predictors can be used to predict hit songs. Three subject groups of predictors were gathered from the Last.fm service, based on a selection of past hit songs from the record charts. By gathering new data from these predictors and applying a term frequency-inverse document frequency algorithm to their listened tracks, I was able to determine which songs had the potential to become a hit. The results showed that the predictors were better at listening to potential hit songs than their corresponding control groups.
Table of contents: 1 Introduction; 2 Background; 2.1 Music Information Retrieval; 2.2 Hit Song Science -

Full Nets, Empty Hearts Empty Nets, Full

Folly Beach's Newspaper, June 2016, Issue 6, Volume 7
Full Nets, Empty Hearts: Backman's Seafood Enters First Shrimp Season Without Its Skipper at the Helm
By Bill Davis | Staff Writer (Photo by Bill Davis)
There will be something missing from Battery Island during shrimp season, and it won't be the shrimp. According to state Department of Natural Resources spokesperson Erin Weeks, this will be a big season for shrimp. Weeks, who works out of the Fort Johnson Road DNR facility at the tip of James Island, said that scientists are reporting a variety of international factors that point toward a bumper crop. And that could mean big sales as the economy continues to recover, boats come back laden with shrimp, and SUVs arrive at Lowcountry restaurants full of tourists. The current season, the second of the year, will end later this fall. What will be missing is Thomas "Junior" Backman, who died late last year at the age of 76. As the namesake of a company that ran as many as six shrimp boats from Backman's Seafood Company on Sol
Mariner Returns to the Sea: A 441-Pound Leatherback Is Rescued on Folly
Special to The Current
Thanks to the timely appearance and quick thinking of Folly Beach City Councilmember and Folly Beach Turtle Watch (FBTW) volunteer Teresa Marshall and her neighbors at Marshview Villas, a 441-pound leatherback sea turtle was rescued from the marsh after being stranded by King Tides early Friday, May 6. High tide was at 8 a.m., the stranded turtle was sighted about 9:30 a.m., and it was on its way to the hospital by 11 a.m. -

Reykjavík Unesco City of Literature

Reykjavík UNESCO City of Literature Reykjavík City of Steering Committee Fridbjörg Ingimarsdóttir Submission writers: Literature submission Svanhildur Konrádsdóttir Director Audur Rán Thorgeirsdóttir, (Committee Chair) Hagthenkir – Kristín Vidarsdóttir Audur Rán Thorgeirsdóttir Director Association of Writers (point person) Reykjavík City of Non-Fiction and Literature Trail: Project Manager Department of Culture Educational Material Reykjavík City Library; Reykjavík City and Tourism Kristín Vidarsdóttir and Department of Culture Esther Ýr Thorvaldsdóttir Úlfhildur Dagsdóttir and Tourism Signý Pálsdóttir Executive Director Tel: (354) 590 1524 Head of Cultural Office Nýhil Publishing Project Coordinator: [email protected] Reykjavík City Svanhildur Konradsdóttir audur.ran.thorgeirsdottir Department of Culture Gudrún Dís Jónatansdóttir @reykjavík.is and Tourism Director Translator: Gerduberg Culture Centre Helga Soffía Einarsdóttir Kristín Vidarsdóttir Anna Torfadóttir (point person) City Librarian Gudrún Nordal Date of submission: Project Manager/Editor Reykjavík City Library Director January 2011 Reykjavík City The Árni Magnússon Institute Department of Culture and Audur Árný Stefánsdóttir for Icelandic Studies Photography: Tourism/Reykjavík City Library Head of Primary and Lower Cover and chapter dividers Tel: (354) 411 6123/ (354) 590 1524 Secondary Schools Halldór Gudmundsson Raphael Pinho [email protected] Reykjavík City Director [email protected] -

Elizabeth Romero, Elected President of ARRT's Board of Directors

ARRT REPORT: Elizabeth Romero, Elected President of ARRT's Board of Directors
The American Registry of Radiologic Technologists (ARRT) was founded in 1922 by the Radiological Society of North America, American Roentgen Ray Society, and the American Society of X-ray Technicians. In 1936, ARRT was incorporated, which meant there was a board of trustees. In all of the years that a board has existed, there has never been a president who was a nuclear medicine technologist and who was officially nominated by the SNMMI-TS to sit on the ARRT's board. That was, until now. To celebrate this momentous occasion, we decided to ask the soon-to-be president and current vice-president Elizabeth Romero 84 questions. And just so you don't have to do the math, 84 is how many years the ARRT has had a board. I called Liz on a Saturday and rapid-fired these
13. What does being president look like? The daily includes running board meetings, committee meetings, weekly discussions with the CEO, attending meetings and successfully relaying the ARRT's mission to technologists.
14. Tell me one of your goals outside of ARRT? Run another marathon.
15. What does this mean for the future of NMTs and the board? Having a nuclear medicine technologist becoming president gives the feeling that nuclear medicine is better represented. It shows that ARRT has always respected the technologists and what we do. This is a definite recognition.
16. Who inspires you? Shalane Flanagan.
17. What does it mean to you to be the first president? It is a really huge honor. -

President Hutchinson

The Bagpipe, Friday, April 14, 2016, Volume 62.13
14049 Scenic Highway, Lookout Mountain, GA 30750
Diversity Worship Night: Still holding out for the day Lillian finally realizes her love for me and croons a ballad. (Page 2)
MLB Predictions: Kobe will be so much better at baseball than MJ ever was. (Page 4)
Junk Review: Chad loves these guys. Also Louis. (Page 5)
Hottest Drinks on Campus: 10/10 would recommend drinking the grilled tilapia juice runoff. (Page 7)
Embodied: GRACE'S LAST ARTICLE, KIDDOS. BUCKLE UP. (Page 8)
President Hutchinson
by Austin Cantrell
With the year coming to a close and students busy with term papers, projects and final exams, student body president Travis Hutchinson is proud of the things he has accomplished, the senate he has led, and the future he sees for our student body. "I am so proud of this year's senate because they rose to the challenges that I . and circumstances presented to them." Hutchinson feels that his proudest achievements have been working with senate to add student
Covenant Student and Basketball Coach Wrestle With Paralyzing Autoimmune Disorder
by Molly Hulsey
This Valentine's Day, Covenant College's Basketball Coach, Peter Wilkerson, exchanged vows with fiancée Samantha Walton before a crowd of 200-250 visitors. About half were sitting; fifty to 100 others spilled into the hallway. All listened intently to Wilkerson's father as he conducted the ceremony, smiled as the couple traded rings, and filed into
grams the body's defense system to ravage its own nervous system.
that doesn't recover." However, after weeks of purée
The Wilkersons' story has been much -

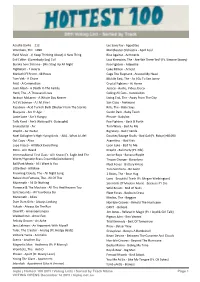

Triple J Hottest 100 2011 | Voting Lists | Sorted by Track Name Page 1 VOTING OPENS December 14 2011 | Triplej.Net.Au

Azealia Banks - 212
Les Savy Fav - Appetites
Wombats, The - 1996
Manchester Orchestra - April Fool
Field Music - (I Keep Thinking About) A New Thing
Rise Against - Architects
Evil Eddie - (Somebody Say) Evil
Last Kinection, The - Are We There Yet? {Ft. Simone Stacey}
Buraka Som Sistema - (We Stay) Up All Night
Foo Fighters - Arlandria
Digitalism - 2 Hearts
Luke Million - Arnold
Mariachi El Bronx - 48 Roses
Cage The Elephant - Around My Head
Tom Vek - A Chore
Middle East, The - As I Go To See Janey
Feist - A Commotion
Crystal Fighters - At Home
Juan Alban - A Death In The Family
Justice - Audio, Video, Disco
Herd, The - A Thousand Lives
Calling All Cars - Autobiotics
Jackson McLaren - A Whole Day Nearer
Living End, The - Away From The City
Art Vs Science - A.I.M. Fire!
San Cisco - Awkward
Kasabian - Acid Turkish Bath (Shelter From The Storm)
Kills, The - Baby Says
Bluejuice - Act Yr Age
Caitlin Park - Baby Teeth
Lanie Lane - Ain't Hungry
Phrase - Babylon
Talib Kweli - Ain't Waiting {Ft. Outasight}
Foo Fighters - Back & Forth
Snakadaktal - Air
Tom Waits - Bad As Me
Drapht - Air Guitar
Big Scary - Bad Friends
Noel Gallagher's High Flying Birds - AKA…What A Life!
Douster/Savage Skulls - Bad Gal {Ft. Robyn}
Cut Copy - Alisa
Argentina - Bad Kids
Lupe Fiasco - All Black Everything
Loon Lake - Bad To Me
Mitzi - All I Heard
Drapht - Bali Party {Ft. Nfa}
Intermashional First Class - All I Know {Ft. Eagle And The Worm/Hypnotic Brass Ensemble/Juiceboxxx}
Junior Boys - Banana Ripple
Tinpan Orange - Barcelona
Ball Park Music - All I Want Is You
Fleet Foxes - Battery Kinzie
Little Red - All Mine
Tara Simmons - Be Gone
Frowning Clouds, The - All Night Long
2 Bears, The - Bear Hug
Naked And Famous, The - All Of This
Lanu - Beautiful Trash {Ft. -

M83 Midnight City 320 Kbps Download

M83 Midnight City 320 Kbps Download. Download the Drums Backing Track of Midnight City as made famous by M83. Minus Drums MP3, HD Version. Designed for ... Track MP3. HD MP3 (320 Kbps) ... ... on SoundCloud Music Downloads 320kbps Tracks DJ Trax Songs mp3 remix, mix rmx, ... City Nights 1 Hour Version. ... M83 'Midnight City' wingy - City nights.. M83 Midnight City 320 Kbps Download DOWNLOAD: http://fancli.com/1dzwwd download midnight city m83 320 kbps 40f0e43ec1 Listen and download ... Macmillan Dictionary 7500 Words List Download midnight city songs for free in excellent-quality mp3 (320 kbps) to your mobile phone or PC, and listen to them online on the site ... Call.of.Duty.Black.Ops.II.MULTi5-PLAZA Without Human Verification Food Microbiology,frazierl unanghakbangsapagbasapdf29 M83 — Midnight City (Eric Prydz Private Remix)] • (6:37) [listen online] in good quality, 320 kbps. Download the 15.13 MB mp3 song for free. Download without registration in 1 click - «Mp3 .... Check out Midnight City by M83 on Amazon Music. ... Buy MP3 Album $4.99 ... they sell out. beautiful art and this song and the remixes are just a taste of M83.. TEST PRESSING: Stream Trentemøller's Remix of M83's “Midnight City” Single, Alongside a Free MP3 From Big Black Delta. M83's Midnight City Remixes EP ... Oy! [2009 – FLAC] 3planesoft Aio 80 Torrent Description: UvYaM.jpg. M83 - Hurry Up We're Dreaming 2011 Alternative 320kbps CBR MP3 [VX] [P2PDL] ... M83 - Midnight City (4:03) 3.. M83 Midnight City MP3 Download To start Download you just need to click on below [Download MP3] Button & select Bitrate 320kbps, 256kbps, 192kbps, ... -

Spring 2020 | April to June Chemical World: Science in Our Daily Lives

Orca Book Publishers, Spring 2020 | April to June
Board Books: Big Whales Small World; The Sun is A Peach; Bath Time!; I Want to Be...; Nibi is Water; I Am Violet
Picture Books: La lune est un étang d’argent; Le soleil est une pêche; J’ai le cœur rempli de bonheur; Mi corazón se llena de alegría; Tout petit toi; Tú eres tú
Orca Origins: Powwow: A Celebration of Song and Dance
Orca Wild: Bird’s-Eye View: Keeping Wild Birds in Flight; Sea Otters: A Survival Story
YA Nonfiction: One Earth: People of Color Protecting Our Planet; Maison Rouge
Orca Issues: Heads Up: Changing Minds on Mental Health
YA Graphic Novels: Good Boys -

Northstar Repertoire

NORTHSTAR REPERTOIRE LOUNGE/REMIX A Beautiful Mine - Rjd2 Spanish Grease - Verve Remixed (Willie Latch - Disclosure ft Sam Smith Bobo) Future Sex Love Sound - Justin Timberlake Summertime UFO - Verve Remixed (Sarah VauGhn) Is You Is - Verve Remixed Dinah WashinGton The MatinG Game - Bitter Sweet Let's Never Stop FallinG In Love - Pink Whatever Lola Wants - Gotan Project Martini Wait Till You See Him - Verve Remixed De Porcelain - Moby Phazz (Ella Fitzgerald) Return To Paradise - Mark De Clive- Lowe We No Speak Americano - Yolanda Be Cool Remix (Shirley Horn) & DCUP Santa Maria - Gotan Project Where Do I BeGin - Shirley Bassey, Away Team Mix JAZZ / STANDARDS A Sunday Kind Of Love - Etta James Do I Love You - Ella Fitzgerald 1925 Charleston - Paul Dandy Don't Know Why - Norah Jones Ain't Misbehavin' - Fats Waller Elmer’s Tune - “Glen Miller” Ain't That A Kick In The Head - Dean Martin Everybody Loves The Sunshine - Roy Ayers All Of Me - Billie Holiday EverythinG - Michael Buble American Patrol - “Glen Miller” Fly Me To The Moon - Frank Sinatra BeGin The BeGuine - Ella FitzGerald Fly Me To The Moon - Dinah WashinGton Besame Mucho - Glenn Miller Medley - Glenn Miller Beyond The Sea - Bobby Darin Human Nature (Gtr) - Michael Jackson Blue Bossa I Wish You Love - Frank Sinatra/Jack Foster Blue Moon - Elvis I Can't Give You AnythinG But Love - Dean Blue Moon - Frank Sinatra Martin Blue Train - John Coltrane In The Mood - Glen Miller BrinG It On Home To Me - Roy HarGrove It Don't Mean A ThinG - Jazz Standard ChatanooGa Choo Choo - “Glenn Miller” Jump Jive an’ Wail - Brian Setzer Cheek To Cheek - Ella FitzGerald/Louis Just In Time - Frank Sinatra ArmstronG Kalamazoo - “Glen Miller” Daddy’s Little Girl - Michael Buble/Michael L.O.V.E. -

Official Site | Facebook | Twitter “On the Last Album, There Was Too Much

Official Site | Facebook | Twitter “On the last album, there was too much of me.” That’s how Anthony Gonzalez – the sonic auteur behind the sublime sound of M83 – describes the primary inspiration for his forthcoming album JUNK, to be released on April 8, 2016 by Mute. Highly anticipated, JUNK is not just M83’s first studio album in half a decade; it’s also the follow-up to Hurry Up, We’re Dreaming – which, upon release in 2011, placed M83 in the direct current of the mainstream. Hurry Up, We’re Dreaming gained acclaim as Gonzalez’s masterpiece summation of all the elements and influences of his epic space-age future pop. It would also become the record that cemented M83’s mainstream breakout, driven by the global hit single “Midnight City.” Other standout tracks like “Outro” and “Wait” from Hurry Up… also became pop-culturally ubiquitous, taking on a life of their own as musical accompaniment for numerous TV shows and Hollywood blockbuster trailers. So why would Gonzalez try to remove himself from the follow-up to such a creative and commercial success? And furthermore supplant himself on JUNK with the surprising likes of Beck and Steve Vai. Wait, Steve Vai? The legendary virtuoso guitar hero who defined an era? On an M83 album? In 2016?! With Beck, too? Read on… On both Hurry Up… and the two years of triumphant world touring that followed it, Gonzalez served as M83’s musical architect and songwriter, but also its front man and primary vocalist – a role which he grew to find limiting. -

M83 Releases New Album "Junk", Out April 8: Hear First Single “Do It, Try It”

01-03-2016 20:58 CET M83 RELEASES NEW ALBUM "JUNK", OUT APRIL 8: HEAR FIRST SINGLE “DO IT, TRY IT” HERE. M83, aka Anthony Gonzalez, today shares the news and details of his long-awaited new album, JUNK, out April 8 via Naïve. It is the follow-up to the Grammy-nominated ”Hurry Up, We’re Dreaming” and the platinum single “Midnight City”, as well as two years of intense touring. Gonzalez, who serves as M83’s musical architect, songwriter, frontman and vocalist, returns with what could be called an artistic evolution. As he puts it: “I want to show different sides of me on this album. I want to come back with something more intimate, yet somehow with…less me!” Having rediscovered his early inspirations such as Tangerine Dream and Aphex Twin, and genre-expanding visionaries such as Brian Wilson and Kevin Shields, Gonzalez both returns to his roots and takes a logical giant leap forward. On JUNK, M83 experiments further with sounds and styles he has never attempted before. “All my albums have layers of eclecticism to them, but with this album I wanted to take that even further,” he says. Listen to the album's opening track, “Do It, Try It,” which contains everything from a catchy old-school house piano, synth vocals and prog opulence to an artsy pop ”bubblegum hook”, HERE. On the album's title, “JUNK,” Gonzalez says: "Anything we create today is going to end up being space junk at one point anyway, and I find it really fascinating and scary at the same time - beautiful too in a way. -

Porter Robinson Worlds Porter Robinson Worlds Album Download ZIP NEW Download Porter Robinson – Worlds Remixed Album 2015

Porter Robinson, Worlds Remixed (2015). Track list:
1. Divinity (ODESZA Remix) (feat. Amy Millan) (5:26)
2. Sad Machine (Dean Custom Remix) (5:06)
3. Years Of War (Rob Mayth Remix) (feat. Breanne Düren & Sean Caskey) (3:55)
4. Flicker (Mat Zo Remix) (4:55)
5. Fresh Static Snow (Last Island Remix) (3:12)
6. Polygon Dust (Sleepy Tom Remix) (feat. Lemaitre) (4:07)
7. Hear The Bells (Electric Mantis Remix) (feat. Imaginary Cities) (4:45)
8. Natural Light (San Holo Remix) (2:55)
9. Lionhearted (Point Point Remix) (feat. Urban Cone) (3:25)
10. Sea Of Voices (Galimatias Remix) (3:01)
11. Fellow Feeling (Slumberjack Remix) (4:51)
12. Goodbye To A World (Chrome Sparks Remix) (6:13)
Worlds (album)
Worlds is the debut studio album by the American electronic music producer Porter Robinson, released on August 12, 2014 by Astralwerks in the United States and by Virgin EMI Records internationally. The album exhibits a shift in Robinson's music style from the heavy, bass-fueled complextro of his previous work to a more alternative form of electronic music.
Background
During 2012 and 2013, while touring extensively on his Language tour, the then 19-year-old producer began to grow increasingly tired of the current commercial EDM scene, feeling that it was inhibiting his creativity by being too formulaic, with the production process being centered around making "DJ friendly" tracks. He decided instead to create an album that was true to himself and that channeled his own feelings of nostalgia: particularly his interest in Japanese culture such as video games, anime and Vocaloids.