Deep Learning and Image Processing for Handwritten Style Recognition

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Old Cyrillic in Unicode*

Old Cyrillic in Unicode* Ivan A Derzhanski Institute for Mathematics and Computer Science, Bulgarian Academy of Sciences [email protected] The current version of the Unicode Standard acknowledges the existence of a pre- modern version of the Cyrillic script, but its support thereof is limited to assigning code points to several obsolete letters. Meanwhile mediæval Cyrillic manuscripts and some early printed books feature a plethora of letter shapes, ligatures, diacritic and punctuation marks that want proper representation. (In addition, contemporary editions of mediæval texts employ a variety of annotation signs.) As generally with scripts that predate printing, an obvious problem is the abundance of functional, chronological, regional and decorative variant shapes, the precise details of whose distribution are often unknown. The present contents of the block will need to be interpreted with Old Cyrillic in mind, and decisions to be made as to which remaining characters should be implemented via Unicode’s mechanism of variation selection, as ligatures in the typeface, or as code points in the Private space or the standard Cyrillic block. I discuss the initial stage of this work. The Unicode Standard (Unicode 4.0.1) makes a controversial statement: The historical form of the Cyrillic alphabet is treated as a font style variation of modern Cyrillic because the historical forms are relatively close to the modern appearance, and because some of them are still in modern use in languages other than Russian (for example, U+0406 “I” CYRILLIC CAPITAL LETTER I is used in modern Ukrainian and Byelorussian). Some of the letters in this range were used in modern typefaces in Russian and Bulgarian. -

Carolingian Uncial: a Context for the Lothar Psalter

Rosamond McKitterick. In his famous identification and dating of the Morgan Golden Gospels published in the Festschrift for Belle da Costa Greene, E. A. Lowe was quite explicit in his categorizing of Carolingian uncial as the 'invention of a display artist'. He went on to define it as an artificial script beginning to be found in manuscripts of the ninth century and even of the late eighth century. These uncials were reserved for special display purposes, for headings, titles, colophons, opening lines and, exceptionally, as in the case of the Morgan Gospels Lowe was discussing, for an entire codex. Lowe acknowledged that uncial had been used in these ways before the end of the eighth century, but then it was 'natural' not 'artificial' uncial. One of the problems I wish to address is the degree to which Frankish uncial in the late eighth and the ninth centuries is indeed 'artificial' rather than 'natural'. Can it be regarded as a deliberate recreation of a script type, or is it a refinement and elevation in status of an existing book script? Secondly, to what degree is a particular script type used for a particular text type in the early Middle Ages? The third problem, related at least to the first, if not to the second, is whether Frankish uncial, be it natural or artificial, is sufficiently distinctive when used by a particular scriptorium to enable us to locate a manuscript or fragment to one atelier rather than another. This problem needs, of course, to be set within the context of later Carolingian book production, the notions of 'house' style as opposed to 'regional' style and the criteria for locating manuscript production to particular scriptoria in the Frankish kingdoms under the Carolingians that I have discussed elsewhere. It is also of particular importance when considering the Hofschule ateliers of the mid-ninth century associated with the Emperor Lothar and with King Charles the Bald.

Part 1: Introduction to The

Preview of the IPA Handbook. Handbook of the International Phonetic Association: A guide to the use of the International Phonetic Alphabet. Part I: Introduction to the IPA. 1. What is the International Phonetic Alphabet? The aim of the International Phonetic Association is to promote the scientific study of phonetics and the various practical applications of that science. For both these it is necessary to have a consistent way of representing the sounds of language in written form. From its foundation in 1886 the Association has been concerned to develop a system of notation which would be convenient to use, but comprehensive enough to cope with the wide variety of sounds found in the languages of the world; and to encourage the use of this notation as widely as possible among those concerned with language. The system is generally known as the International Phonetic Alphabet. Both the Association and its Alphabet are widely referred to by the abbreviation IPA, but here 'IPA' will be used only for the Alphabet. The IPA is based on the Roman alphabet, which has the advantage of being widely familiar, but also includes letters and additional symbols from a variety of other sources. These additions are necessary because the variety of sounds in languages is much greater than the number of letters in the Roman alphabet. The use of sequences of phonetic symbols to represent speech is known as transcription. The IPA can be used for many different purposes. For instance, it can be used as a way to show pronunciation in a dictionary, to record a language in linguistic fieldwork, to form the basis of a writing system for a language, or to annotate acoustic and other displays in the analysis of speech.

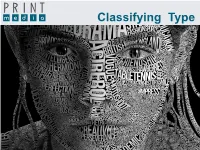

Classifying Type Thunder Graphics Training • Type Workshop Typeface Groups

The typefaces you choose can make or break a layout or design because they set the tone of the message. Choosing the right font for the job is an important design decision. The more you know about type, the better your type choices will be. There are so many different fonts available for the computer that it would be almost impossible to learn the names of every one. However, many typefaces share similar qualities. Typographers classify type into groups to help remember the different kinds. Often, a font from within one group can be substituted for another when not available to achieve the same effect. Different typographers use different groupings. The classification system used by Thunder Graphics includes seven major groups.

Detecting Forgery: Forensic Investigation of Documents

University of Kentucky, UKnowledge. Legal Studies, Social and Behavioral Studies, 1996. Detecting Forgery: Forensic Investigation of Documents. Joe Nickell, University of Kentucky. Thanks to the University of Kentucky Libraries and the University Press of Kentucky, this book is freely available to current faculty, students, and staff at the University of Kentucky. Find other University of Kentucky Books at uknowledge.uky.edu/upk. For more information, please contact UKnowledge at [email protected]. Recommended Citation: Nickell, Joe, "Detecting Forgery: Forensic Investigation of Documents" (1996). Legal Studies. 1. https://uknowledge.uky.edu/upk_legal_studies/1 Detecting Forgery: Forensic Investigation of Documents. Joe Nickell. The University Press of Kentucky. Publication of this volume was made possible in part by a grant from the National Endowment for the Humanities. Copyright © 1996 by The University Press of Kentucky. Paperback edition 2005. The University Press of Kentucky: Scholarly publisher for the Commonwealth, serving Bellarmine University, Berea College, Centre College of Kentucky, Eastern Kentucky University, The Filson Historical Society, Georgetown College, Kentucky Historical Society, Kentucky State University, Morehead State University, Transylvania University, University of Kentucky, University of Louisville, and Western Kentucky University. All rights reserved. Editorial and Sales Offices: The University Press of Kentucky, 663 South Limestone Street, Lexington, Kentucky 40508-4008, www.kentuckypress.com. The Library of Congress has cataloged the hardcover edition as follows: Nickell, Joe. Detecting forgery : forensic investigation of documents / Joe Nickell. p. cm. ISBN 0-8131-1953-7 (alk. paper) 1. Writing-Identification. 2. Signatures (Writing). 3.

JAF Herb Specimen © Just Another Foundry, 2010 Page 1 of 9

Designer: Tim Ahrens. Format: Cross platform OpenType. Styles & weights: Regular, Bold, Condensed & Bold Condensed. Purchase options: OpenType complete family €79; single font €29; JAF Herb Webfont subscription €19 per year. "Tradition is the passing on of the fire, not the worship of the ashes." Gustav Mahler. www.justanotherfoundry.com. Making of Herb: Herb is based on 16th century cursive broken scripts and printing types. Originally designed by Tim Ahrens in the MA Typeface Design course at the University of Reading, it was further refined and extended in 2010. The idea for Herb was to develop a typeface that has the positive properties of blackletter but does not evoke the same negative connotations: a type that has the complex, humane character of fraktur without looking conservative, aggressive or intolerant. As Rudolf Koch illustrated, roman type appears as timeless, noble and sophisticated. Fraktur, on the other hand, has different qualities: it is displayed as unpretentious, […] Introducing qualities of blackletter into roman typefaces has become popular in recent years. The sources of inspiration range from rotunda to textura and fraktur. In order to achieve a unique style, other kinds of blackletter were used as a source for Herb. One class of broken script that has never been implemented as printing fonts is the gothic cursive. Since fraktur type hardly ever has an ‘italic’ companion like roman types, few people even know that cursive blackletter exists. The only type of cursive broken script that has gained a certain awareness level is civilité, which was a popular printing type in the 16th century, especially in the Netherlands.

The Story of Handwriting Is Handwriting As a Practice Still Used in Swedish Schools?

Independent Project: Final Written Report. The Story of Handwriting: Is handwriting as a practice still used in Swedish schools? Author: Elsa Karlsson. Supervisor: Helga Steppan, Cassandra Troyan. Examiner: Ola Ståhl. Term: VT18. Subject: Visual Communication + Change. Level: Bachelor. Course code: 2DI68E. Abstract: This design project will map, look at and give answers regarding: The story of handwriting from a pedagogical perspective, within a Swedish context. It is primarily based on a great interest in writing by hand, and the effects and benefits it has on its practitioners. Handwriting today compared to before is getting less space in the digitized society, but is handwriting as a practice still used in Swedish schools? The predicted meaning is that children in school cannot write properly by hand anymore, due to all technologies such as smartphones, tablets and computers. The question is complex and the answer is more than just a simple yes or no, and therefore this investigation in handwriting has been done. […] technological tools and advancements, children still enjoy and value writing by hand, and then it is my task as a change agent to break the norm that handwriting as a practice is disappearing in Swedish schools and give children the tools they need to continue writing new chapters in the story of handwriting. To stimulate learning with joy, work with fine motor skills and strengthen the ability to concentrate amongst children through a handwriting workshop is what the investigation has led to. The answers in this thesis will not change the world, but the handwriting workshop, designed as a pedagogical tool, will hopefully inspire and motivate children to write by hand for a long time to come.

Handwriting Toward a Minuscule Alphabet, It Is Written Upright and Is Considered a Majuscule Form

An essay by Jerri-Jo Idarius. Handwriting is a craft in which everyone participates, yet few people know much about its tradition or evolution. From the view of a calligrapher* who has studied and mastered traditional forms of handwriting, this lack of education is a sign of cultural loss. Most elementary school teachers feel inadequate to teach penmanship, and cannot explain the relationship between the cursive handwriting they have to teach and the printed letters they see in books. Since handwriting is so intimately connected to self-image, and since most people are unhappy with the results of their learning, it is common to hear, “I hate my writing!” or “I never learned to write.” They don’t know what to do about it. There is much to say about the history of writing. To encapsulate the highlights in this short essay, it is important to note that the dialectic between formal and informal styles of writing led to periods of degeneration and periods of reform and also to the differentiation between what we refer to as caps and small letters, known technically as majuscules and minuscules. The Roman formal majuscule scripts follow: Although the ascenders and descenders of the half-uncial represent the movement toward a minuscule alphabet, it is written upright and is considered a majuscule form. After the fall of Rome, various regional styles developed in Europe but in the 8th century King Charlemagne instituted one script throughout the monasteries of Europe to help unite his empire. This style, known as Carolingian, related to the Roman half uncial and Roman cursive, is the first truly minuscule alphabet. Its beautiful letters can be written straight or at an angle. A simply drawn form of caps called versals appeared in manuscripts of this era. Specimen labels on the page: Roman Square Caps (Capitalis Quadrata); Rustic; Uncial (used for Bibles and sacred texts); versals; Carolingian minuscule &. Medieval scripts are popularly described as blackletter, due to the predomi-

Why Cursive Writing Is Important

Parents: why is cursive (joined-up) handwriting important? Research has shown that the use of a continuous cursive handwriting style plays a significant role, not only in developing fine motor skills but also in learning spelling patterns. This is particularly important for children who struggle with spelling and find decoding writing patterns challenging. Once this skill has developed, the child should be able to recall spelling patterns with automaticity. The child can then focus on the content and structure of writing rather than the disconnected process of letter recollection. The brain thinks more rapidly and fluently in whole words than in single letters where the pen is lifted off the page much more frequently. Cursive handwriting therefore encourages fluidity of thought processes when writing and is also much quicker. This will be useful for any student in exams where time is limited. Cursive handwriting also develops hand/eye co-ordination and motor skills which can help develop skills in other areas of life and work. How can I help my daughter? Encourage your daughter to keep trying; sometimes the writing is worse before it gets better! With continuous practice using materials and guidance provided by the teacher or Literacy Co-ordinator, all pupils can learn to write cursively. Start small, with 2- or 3-letter words. Join up the letters in words like ‘in’, ‘off’, ‘and’ and then progress to longer words which are well known and used frequently, like ‘then’, ‘where’ and ‘went’. Try the website www.teachhandwriting.co.uk for tips and animated examples of cursive writing. After your daughter has got used to these, encourage her to extend the style of cursive writing to all of her writing in all subject areas.

Calligraphy Specimens Following Writing

Calligraphy Specimens following Writing Manual by ADOLPH ZUNNER[?] printed by JOHANN CHRISTOPH WEIGEL or CHRISTOPH WEIGEL THE ELDER. In German and Latin, manuscript on paper. Germany (Nuremberg), c. 1713. 20 folios on paper, complete [collation i20], unidentified watermark (bisected with center lacking, crest holding 3? bezants with ornate frame, initials M and F at bottom), foliation in modern pencil in upper recto corners, text written in various calligraphic scripts in black ink on recto only, no visible ruling and varied justification, sketched decorative evergreen boughs on f. 1 and calligraphic scrollwork throughout, minor flecking and staining, some original ink blots. CONTEMPORARY BINDING, brown (once red?) brocade paper with elegant mixed floral design and traces of gold embossing, pasted spine, abrasion and discoloration but wholly intact. Dimensions 150 x 190 mm. Calligraphic sample books from the Renaissance, such as this manuscript, are far less common than their printed exemplars; this charming booklet, designed for teaching writing to the young, appears to be one of a kind. This volume in its fine contemporary binding includes texts that display a scribe’s skill in writing different types of scripts. It is partially copied from writing master Adolph Zunner’s 1709 Kunstrichtige Schreib-Art printed in Nuremberg by famous publisher and engraver [Johann] Christoph Weigel. PROVENANCE 1. Written in Germany, in Nuremberg, in 1713 or shortly thereafter, with a title page reading Gründliche Unterweisung zu Fraktur – Canzley – und Current Schrifften der lieben Jugend zum Anfang des Schreibens und sondern Nuzen gestellet durch A. <A. or Z.?> in Nürnberg Zufinden bey Johann Christoph Weigel (A Thorough Instruction in Fraktur, Chancery, and Cursive Scripts, prepared for the especial utility of dear Youth in beginning to write by A.

Introduction to Palaeography

Irene Ceccherini / Henrike Lähnemann, Oxford, MT 2015/16. Introduction to Palaeography: Bibliographical references

Online bibliography: http://www.theleme.enc.sorbonne.fr

Codicology
- Jacques Lemaire, Introduction à la codicologie, Louvain-la-Neuve 1989.
- Colin H. Roberts, T. C. Skeat, The birth of the codex, London 1983.
- Maria Luisa Agati, Il libro manoscritto da Oriente a Occidente: per una codicologia comparata, Roma 2009.
- Elisa Ruiz, Introducción a la codicología, Madrid 2002.
- Marilena Maniaci, Archeologia del manoscritto. Metodi, problemi, bibliografia recente, Roma 2002.
- Denis Muzerelle, Vocabulaire codicologique. Répertoire méthodique des termes français relatifs aux manuscrits, Paris 1985.
  o Italian translation: Marilena Maniaci, Terminologia del libro manoscritto, Roma 1996.
  o Spanish translation: Pilar Ostos, Maria Luisa Pardo, Elena E. Rodríguez, Vocabulario de codicología, Madrid 1997.
  o Online version (with English provisional translation): http://www.vocabulaire.irht.cnrs.fr
- Cf. Bischoff (palaeography).

Palaeography
- Bernhard Bischoff, Paläographie des römischen Altertums und des abendländischen Mittelalters, Berlin 1986 (2nd ed.).
  o French translation: Paléographie de l’antiquité romaine et du Moyen Age occidental, éd. Hartmut Atsma et Jean Vezin, Paris 1985.
  o English translation: Latin Palaeography. Antiquity and the Middle Ages, eds Dáibhí Ó Cróinín and David Ganz, Cambridge 1990.
  o Italian translation: Paleografia latina. Antichità e Medioevo, a cura di Gilda Mantovani e Stefano Zamponi, Padova 1992.
- Albert Derolez, The palaeography of Gothic manuscript books. From the twelfth to the early sixteenth century, Cambridge 2003.
  o Cf. review by Marc H. Smith in “Scriptorium” 58/2 (2004), pp. 274-279.

Catalogues of dated and datable manuscripts: Austria, Belgium, France, Germany, Italy, Netherlands, Sweden, Switzerland, United Kingdom, Vatican.

Abbreviations
- Adriano Cappelli, Lexicon abbreviaturarum.

Five Centuries of German Fraktur by Walden Font

Johannes Gutenberg, 1455. German Fraktur represents one of the most interesting families of typefaces in the history of printing. Few types have had such a turbulent history, and even fewer have been alternately praised and despised throughout their history. Only recently has Fraktur been rediscovered for what it is: a beautiful way of putting words into written form. Walden Font is proud to present, for the first time, an edition of 18 classic Fraktur and German Script fonts from five centuries for use on your home computer. This booklet describes the history of each font and provides you with samples for its use. Also included are the standard typesetting instructions for Fraktur ligatures and the special characters of the Gutenberg Bibelschrift. We hope you find the Gutenberg Press to be an entertaining and educational publishing tool. We certainly welcome your comments and suggestions. You will find information on how to contact us at the end of this booklet. Dear friend of Fraktur! With our “Gutenberg Press” we hope to contribute to the revival of Fraktur typefaces, without any political ulterior motive. Unfortunately, high production costs prevent us from publishing a German version of this user booklet, but you will find the German text on the program disks. Please read the “liesmich” file for further information. We also welcome your comments and suggestions. Contact information is given at the end of this booklet. A brief history of Fraktur: At the end of the 15th century, most Latin books in Germany were printed in a dark, barely legible gothic type style known as Textura.