Detecting Textual Reuse in News Stories, at Scale

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

The Perceived Credibility of Professional Photojournalism Compared to User-Generated Content Among American News Media Audiences

Syracuse University SURFACE Dissertations - ALL SURFACE August 2020 THE PERCEIVED CREDIBILITY OF PROFESSIONAL PHOTOJOURNALISM COMPARED TO USER-GENERATED CONTENT AMONG AMERICAN NEWS MEDIA AUDIENCES Gina Gayle Syracuse University Follow this and additional works at: https://surface.syr.edu/etd Part of the Social and Behavioral Sciences Commons Recommended Citation Gayle, Gina, "THE PERCEIVED CREDIBILITY OF PROFESSIONAL PHOTOJOURNALISM COMPARED TO USER-GENERATED CONTENT AMONG AMERICAN NEWS MEDIA AUDIENCES" (2020). Dissertations - ALL. 1212. https://surface.syr.edu/etd/1212 This Dissertation is brought to you for free and open access by the SURFACE at SURFACE. It has been accepted for inclusion in Dissertations - ALL by an authorized administrator of SURFACE. For more information, please contact [email protected]. ABSTRACT This study examines the perceived credibility of professional photojournalism in context to the usage of User-Generated Content (UGC) when compared across digital news and social media platforms, by individual news consumers in the United States employing a Q methodology experiment. The literature review studies source credibility as the theoretical framework through which to begin; however, using an inductive design, the data may indicate additional patterns and themes. Credibility as a news concept has been studied in terms of print media, broadcast and cable television, social media, and online news, both individually and between genres. Very few studies involve audience perceptions of credibility, and even fewer are concerned with visual images. Using online Q methodology software, this experiment was given to 100 random participants who sorted a total of 40 images labeled with photographer and platform information. The data revealed that audiences do discern the source of the image, in both the platform and the photographer, but also take into consideration the category of news image in their perception of the credibility of an image.

FOLLOWING a COLUMNIST AP English Language Originated by Jim Veal; Modified by V

WAYS TO IMPROVE MEMORY: FOLLOWING A COLUMNIST AP English Language Originated by Jim Veal; modified by V. Stevenson 3/30/2009; reprint: 5/24/2012

Some of the most prominent practitioners of stylish written rhetoric in our culture are newspaper columnists. Sometimes they are called pundits, that is, sources of opinion, or critics. On the reverse side find a list of well-known newspaper columnists. Select one (or another one that I approve of) and complete the tasks below. Please start a new page and label it as TASK # each time you start a new task.

TASK 1: Brief Biography. Write a brief (100-200 word) biography of the columnist. Make sure you cite your source(s) at the bottom of the page. I suggest you import a picture of the author if possible.

TASK 2: Five Annotated Columns. Make copies from newspapers or magazines or download them from the internet. I suggest cutting and pasting the columns into Microsoft Word and double-spacing them because it makes them easier to annotate and work with. Your annotations should emphasize such things as:
- the central idea of the column
- appeals to logos, pathos, or ethos (by what means does the columnist seek to convince readers of the truth of his central idea?)
- the chief rhetorical and stylistic devices at work in the column
- the tone (or tones) of the column
- errors of logic (if any) that appear in the column
- the way the author uses sources, the type of sources the author uses (Be sure to pay attention to this one!)
- the apparent audience the author is writing for

Add a few final comments to each column that summarize your general response to the piece; do not summarize the column! This task is hand-written.

Thesis Old Media, New Media

THESIS OLD MEDIA, NEW MEDIA: IS THE NEWS RELEASE DEAD YET? HOW SOCIAL MEDIA ARE CHANGING THE WAY WILDFIRE INFORMATION IS BEING SHARED Submitted By Mary Ann Chambers Department of Journalism and Technical Communication In partial fulfillment of the requirements For the Degree of Master of Science Colorado State University Fort Collins, Colorado Spring 2015 Master’s Committee: Advisor: Joseph Champ Peter Seel Tony Cheng Copyright by Mary Ann Chambers 2015 All Rights Reserved ABSTRACT OLD MEDIA, NEW MEDIA: IS THE NEWS RELEASE DEAD YET? HOW SOCIAL MEDIA ARE CHANGING THE WAY WILDFIRE INFORMATION IS BEING SHARED This qualitative study examines the use of news releases and social media by public information officers (PIO) who work on wildfire responses, and journalists who cover wildfires. It also checks in with firefighters who may be (unknowingly or knowingly) contributing content to the media through their use of social media sites such as Twitter and Facebook. Though social media is extremely popular and used by all groups interviewed, some of its content is unverifiable (Ma, Lee, & Goh, 2014). More conventional ways of doing business, such as the news release, are filling in the gaps created by the lack of trust on the internet and social media sites and could be why the news release is not dead yet. The roles training, friends, and colleagues play in the adaptation of social media as a source are explored. For the practitioner, there are updates explaining what social media tools are most helpful to each group. For the theoretician, there is news about changes in agenda building and agenda setting theories caused by the use of social media.

Beyond Objectivity and Relativism: a View Of

BEYOND OBJECTIVITY AND RELATIVISM: A VIEW OF JOURNALISM FROM A RHETORICAL PERSPECTIVE A Dissertation Presented in Partial Fulfillment of the Requirements for the degree of Doctor of Philosophy in the Graduate School of The Ohio State University by Catherine Meienberg Gynn, B.A., M.A. The Ohio State University 1995 Dissertation Committee Approved by Josina M. Makau Susan L. Kline Adviser Paul V. Peterson Department of Communication Joseph M. Foley UMI Number: 9533982 UMI Microform 9533982 Copyright 1995, by UMI Company. All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code. UMI 300 North Zeeb Road Ann Arbor, MI 48103 DEDICATION To my husband, Jack D. Gynn, and my son, Matthew M. Gynn. With thanks to my parents, Alyce W. Meienberg and the late John T. Meienberg. This dissertation is in respectful memory of Lauren Rudolph, Michael James Nole, Celina Shribbs, and Riley Detwiler, young victims of the events described herein. ACKNOWLEDGMENTS I express sincere appreciation to Professor Josina M. Makau, Academic Planner, California State University at Monterey Bay, whose faith in this project was unwavering and who continually inspired me throughout my graduate studies, and to Professor Susan Kline, Department of Communication, The Ohio State University, whose guidance, friendship and encouragement made the final steps of this particular journey enjoyable. I wish to thank Professor Emeritus Paul V. Peterson, School of Journalism, The Ohio State University, for guidance that I have relied on since my undergraduate and master's programs, and whose distinguished participation in this project is meaningful to me beyond its significant academic merit.

Should Kids Be Allowed to Buy

DECEMBER 21, 2020 ISSN 1554-2440 Vol. 89 No. 10

BIG DEBATE: Should Kids Be Allowed to Buy

Some places in Mexico have banned kids from buying junk food. Should we do the same in the U.S.?

WATCH THESE VIDEOS! SCHOLASTIC.COM/SN56 Find out what’s hiding in junk food. Explore the Titanic. Become an expert on hurricanes.

BIG DEBATE: Should You Be Banned From Buying

AS YOU READ, THINK ABOUT: What are ways that eating too much junk food can affect your health?

Imagine going to a store and not being allowed to buy a bag of chips. The reason? You’re too young! That’s what may happen in one state in Mexico, which recently banned the sale of unhealthy snacks and sugar-sweetened drinks to kids younger than 18. Several other Mexican states are considering passing similar laws. The goal of these bans is to improve kids’ health. Research shows that eating too much junk food can lead to obesity, the condition of being severely overweight. People with obesity are at higher risk for health problems such as heart disease and type 2 diabetes. But some people argue that a ban on buying junk food isn’t a good way to change kids’ eating habits. Should states in the U.S. also consider banning the sale of junk food to kids?

What exactly is junk food? It’s food and drinks that are high in fat, salt, or sugar and have little nutritional value.

WORDS TO KNOW calories noun, plural. units that measure the amount of energy released by food in the body consequences noun, plural.

Subsidizing the News? Organizational Press Releases' Influence on News Media's Agenda and Content Boumans, J

UvA-DARE (Digital Academic Repository) Subsidizing the news? Organizational press releases' influence on news media's agenda and content Boumans, J. DOI 10.1080/1461670X.2017.1338154 Publication date 2018 Document Version Final published version Published in Journalism Studies License CC BY-NC-ND Link to publication Citation for published version (APA): Boumans, J. (2018). Subsidizing the news? Organizational press releases' influence on news media's agenda and content. Journalism Studies, 19(15), 2264-2282. https://doi.org/10.1080/1461670X.2017.1338154 General rights It is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), other than for strictly personal, individual use, unless the work is under an open content license (like Creative Commons). Disclaimer/Complaints regulations If you believe that digital publication of certain material infringes any of your rights or (privacy) interests, please let the Library know, stating your reasons. In case of a legitimate complaint, the Library will make the material inaccessible and/or remove it from the website. Please Ask the Library: https://uba.uva.nl/en/contact, or a letter to: Library of the University of Amsterdam, Secretariat, Singel 425, 1012 WP Amsterdam, The Netherlands. You will be contacted as soon as possible. UvA-DARE is a service provided by the library of the University of Amsterdam (https://dare.uva.nl) Download date: 27 Sep 2021 SUBSIDIZING THE NEWS? Organizational press releases’ influence on news media’s agenda and content Jelle Boumans The relation between organizational press releases and newspaper content has generated considerable attention.

FACTS Summary

Fast Food Advertising: Billions in spending, continued high exposure by youth JUNE 2021 Fast-food consumption among youth remains a significant public health concern. The findings in this report demonstrate that fast-food advertising spending increased from 2012 to 2019; youth exposure to TV ads declined, but at a lower rate than reductions in TV viewing times; many restaurants continued to disproportionately target advertising to Hispanic and Black youth; and restaurants did not actively promote healthier menu items. Restaurants must do more to reduce harmful fast-food advertising to youth. BACKGROUND Excessive consumption of fast food is linked to poor diet and weight outcomes among children and teens,1 and consumption of fast food has increased over the past decade.2 On a given day, one-third of children and teens eat fast food, and on those days they consume 126 and 310 additional calories, including more sugary drinks, compared to days they do not eat fast food.3 Moreover, fast food consumption is higher for Hispanic and non-Hispanic Black teens, who also face greater risks for obesity and other diet-related diseases, compared to non-Hispanic White teens.4, 5 While many factors likely influence frequent fast-food consumption,6-8 extensive fast-food advertising is a major contributor.9 Frequent and widespread exposure to food marketing increases young people’s preferences, purchase requests, attitudes, and consumption of the primarily nutrient-poor energy-dense products promoted.10-12 Fast food is the most frequently advertised food and beverage category to children and teens, representing 40% of all youth-directed food marketing expenditures13 and more than one-quarter of food and drink TV ads viewed14.

The Protection of Journalistic Sources, a Cornerstone of the Freedom of the Press

Thematic factsheet1 Last update: June 2018 THE PROTECTION OF JOURNALISTIC SOURCES, A CORNERSTONE OF THE FREEDOM OF THE PRESS According to the case-law of the European Court of Human Rights, the right of journalists not to disclose their sources is not a mere privilege to be granted or taken away depending on the lawfulness or unlawfulness of their sources, but is part and parcel of the right to information, to be treated with the utmost caution. Without an effective protection, sources may be deterred from assisting the press in informing the public on matters of public interest. As a result, the vital “public watchdog” role of the press may be undermined. Any interference with the right to protection of journalistic sources (searches at journalists’ workplace or home, seizure of journalistic material, disclosure orders etc.) that could lead to their identification must be backed up by effective legal procedural safeguards commensurate with the importance of the principle at stake. First and foremost among these safeguards is the guarantee of a review by an independent and impartial body to prevent unnecessary access to information capable of disclosing the sources’ identity. Such a review is preventive in nature. The review body has to be in a position to weigh up the potential risks and respective interests prior to any disclosure. Its decision should be governed by clear criteria, including as to whether less intrusive measures would suffice. The disclosure orders placed on journalists have a detrimental impact not only on their sources, whose identity may be revealed, but also on the newspaper against which the order is directed, whose reputation may be negatively affected in the eyes of future potential sources by the disclosure, and on the members of the public, who have an interest in receiving information imparted through anonymous sources and who are also potential sources themselves.

"'Who Shared It?': How Americans Decide What News to Trust

NORC WORKING PAPER SERIES WHO SHARED IT?: HOW AMERICANS DECIDE WHAT NEWS TO TRUST ON SOCIAL MEDIA WP-2018-001 | AUGUST, 2018 PRESENTED BY: NORC at the University of Chicago, 55 East Monroe Street, 30th Floor, Chicago, IL 60603, Ph. (312) 759-4000 AUTHORS: David Sterrett, Dan Malato, Jennifer Benz, Liz Kantor, Trevor Tompson, Tom Rosenstiel, Jeff Sonderman, Kevin Loker, Emily Swanson

AUTHOR INFORMATION David Sterrett, Research Scientist, AP-NORC Center for Public Affairs Research; Dan Malato, Principal Research Analyst, AP-NORC Center for Public Affairs Research; Jennifer Benz, Principal Research Scientist, AP-NORC Center for Public Affairs Research; Liz Kantor, Research Assistant, AP-NORC Center for Public Affairs Research; Trevor Tompson, Vice President for Public Affairs Research, AP-NORC Center for Public Affairs Research; Tom Rosenstiel, Executive Director, American Press Institute; Jeff Sonderman, Deputy Director, American Press Institute; Kevin Loker, Program Manager, American Press Institute; Emily Swanson, Polling Editor, Associated Press

Table of Contents: Introduction; Choosing an analytic approach; I. Inductive coding

Cairncross Review a Sustainable Future for Journalism

THE CAIRNCROSS REVIEW: A SUSTAINABLE FUTURE FOR JOURNALISM, 12TH FEBRUARY 2019

Contents
Executive Summary
Chapter 1 – Why should we care about the future of journalism? Introduction; 1.1 What kinds of journalism matter most?; 1.2 The wider landscape of news provision; 1.3 Investigative journalism; 1.4 Reporting on democracy
Chapter 2 – The changing market for news. Introduction; 2.1 Readers have moved online, and print has declined; 2.2 Online news distribution has changed the ways people consume news; 2.3 What could be done?
Chapter 3 – News publishers’ response to the shift online and falling revenues. Introduction; 3.1 The pursuit of digital advertising revenue; Case Study: A Contemporary Newsroom; 3.2 Direct payment by consumers; 3.3 What could be done
Chapter 4 – The role of the online platforms in the markets for news and advertising. Introduction; 4.1 The online advertising market; 4.2 The distribution of news publishers’ content online; 4.3 What could be done?
Chapter 5 – A future for public interest news. 5.1 The digital transition has undermined the provision of public-interest journalism; 5.2 What are publishers already doing to sustain the provision of public-interest news?; 5.3 The challenges to public-interest journalism are most acute at the local level; 5.4 What could be done?; Conclusion
Chapter 6 – What should be done?
Endnotes
Appendix A: Terms of Reference
Appendix B: Advisory Panel
Appendix C: Review Methodology
Appendix D: List of organisations met during the Review
Appendix E: Review Glossary
Appendix F: Summary of the Call for Evidence. Introduction
Appendix G: Acknowledgements

Executive Summary “The full importance of an epoch-making idea is often not perceived in the generation in which it is made.. But the evidence also showed the difficulties with recommending general measures to support

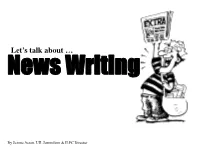

Let's Talk About …

Let’s talk about … News Writing By Jeanne Acton, UIL Journalism & ILPC Director

News Writing … gives the reader information that will have an impact on them in some way. It usually flows from most important to least important. “What is news? It is information only.” - Walter Cronkite, former CBS News anchor

Transition/Quote Formula

Lead: Most important information. Focus on newest information. Focus on the future.

Additional Information: Important information not found in the lead. Sometimes not needed.

Direct Quote (linked): Connects to the additional information or lead. Use more than one sentence.

Transition: Next important fact or opinion for the story. Use transition words to help story flow. Transition can be a fact, indirect quote or partial quote.

Direct Quote (linked): Connects to the first transition. Use more than one sentence. Do not repeat the transition in the quote. DQ should elaborate on the transition. DQ should give details, opinions, etc.

Transition: Next important fact or opinion for the story. Use transition words to help story flow. Transition can be a fact, indirect quote or partial quote.

Direct Quote (linked): Connects to the second transition. Use more than one sentence. Do not repeat the transition in the quote. DQ should elaborate on the transition. DQ should give details, opinions, etc.

and so on!!! until the story is complete

Side Notes: 1) Each box is a new paragraph. 2) Story should flow from most important to least important information.

Let’s start at the beginning with … LEADS. Let’s talk about Leads Lead: Most important information.

21 Types of News

21 Types Of News

In the first several chapters, we saw media systems in flux. Fewer newspaper journalists but more websites, more hours of local TV news but fewer reporters, more “news/talk” radio but less local news radio, national cable news thriving, local cable news stalled. But what matters most is not the health of a particular sector but how these changes net out, and how the pieces fit together. Here we will consider the health of the news media based on the region of coverage, whether neighborhood, city, state, country, or world.

Hyperlocal

The term “hyperlocal” commonly refers to news coverage on a neighborhood or even block-by-block level. The traditional media models, even in their fattest, happiest days could not field enough reporters to cover every neighborhood on a granular level. As in all areas, there are elements of progress and retreat. On one hand, metropolitan newspapers have cut back on regional editions, which in all likelihood means less coverage of neighborhoods in those regions. But the Internet has revolutionized the provision of hyperlocal information. The first wave of technology (LISTSERV® and other email groups) made it far easier for citizens to inform one another of what was happening with the neighborhood crime watch or the new grocery store or the death of a beloved senior who lived on the block for 40 years. More recently, social media tools have enabled citizens to self-organize, and connect in ever more dynamic ways. Citizens can now snap pictures of potholes and send them to city hall, or share with each other via Facebook, Twitter or email.