Free Software

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Docker

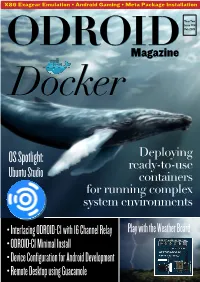

ODROID Magazine, Year Two, Issue #14, Feb 2015

X86 Exagear Emulation • Android Gaming • Meta Package Installation

Docker • OS Spotlight: Deploying ready-to-use Ubuntu Studio containers for running complex system environments • Interfacing ODROID-C1 with 16 Channel Relay • Play with the Weather Board • ODROID-C1 Minimal Install • Device Configuration for Android Development • Remote Desktop using Guacamole

What we stand for. We strive to symbolize the edge of technology, future, youth, humanity, and engineering. Our philosophy is based on developers, and on our efforts to keep close relationships with developers around the world. For that, you can always count on having the quality and sophistication that is the hallmark of our products. Simple, modern and distinctive. So you can have the best to accomplish everything you can dream of.

We are now shipping the ODROID U3 devices to EU countries! Come and visit our online store to shop! Address: Max-Pollin-Straße 1, 85104 Pförring, Germany. Telephone & Fax: +49 (0) 8403 / 920-920. Email: [email protected]. Our ODROID products can be found at http://bit.ly/1tXPXwe

EDITORIAL

Now that ODROID Magazine is in its second year, we've expanded into several social networks in order to make it easier for you to ask questions, suggest topics, send article submissions, and be notified whenever the latest issue has been posted. Check out our Google+ page at http://bit.ly/1D7ds9u, our Reddit forum at http://bit.ly/1DyClsP, and our Hardkernel subforum at http://bit.ly/1E66Tm6. If you've been following the recent Docker trends, you'll be excited to find out about some of the pre-built Docker images available for the ODROID, detailed in the second part of our Docker series that began last month.

First Steps: Beginner-Level Tutorials for Users Dipping Their Toes Into Linux

First Steps: Beginner-level tutorials for users dipping their toes into Linux

First Steps with

Change the way you consume blogs, news sources and loads of other stuff with the best technology you've never heard of, says Andy Channelle...

technology/default.stm), find the RSS icon (a small orange square with a broadcasting-esque symbol) and subscribe to the feed. Every few minutes, our software will check the RSS feed from the Beeb (the feed itself is at http://newsrss.bbc.co.uk/rss/newsonline_uk_edition/technology/rss.xml) and if anything has been added, it will be downloaded to the reader. So we don't have to go to the site to check whether anything has been added; we'll know through the magic of RSS. Smart. And while we're using text in our examples below, RSS is sophisticated enough to cope with other content formats including audio (podcasting), pictures (photocasting) and even video (vodcasting?!), so these instructions could be repurposed quite easily for a range of different tasks.

Setting up Liferea

As you may expect, there are many RSS readers available for Linux and for the main desktops. On Gnome, the 'standard' reader is Liferea, an application with a clumsy name (an abbreviation for LInux FEed REAder) that nonetheless has a powerful and intuitive featureset and is equally at home on a KDE desktop. The latest version of Liferea is 1.4.9 and is available from http://liferea.sourceforge.net. Source and binaries are available for a range of distributions, and we grabbed the latest Ubuntu-specific package via the desktop's Applications > Add/Remove menu.
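The polling behaviour described in this excerpt (check the feed periodically, fetch only what is new) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Liferea itself is implemented: the function name `new_items`, the `seen` set, and the sample feed are hypothetical, and the feed is parsed from an in-memory string rather than fetched over the network.

```python
import xml.etree.ElementTree as ET


def new_items(rss_xml, seen):
    """Return (title, link) pairs for feed items not yet recorded in `seen`."""
    root = ET.fromstring(rss_xml)
    fresh = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        link = item.findtext("link", default="")
        # Prefer the item's <guid> as its identity; fall back to the link.
        key = item.findtext("guid", default=link)
        if key not in seen:
            seen.add(key)
            fresh.append((title, link))
    return fresh


# Hypothetical RSS 2.0 document standing in for a downloaded feed.
SAMPLE_FEED = """<rss version="2.0"><channel>
<title>Example feed</title>
<item><title>First story</title><link>http://example.org/1</link></item>
<item><title>Second story</title><link>http://example.org/2</link></item>
</channel></rss>"""

seen = set()
first_poll = new_items(SAMPLE_FEED, seen)   # both items are new
second_poll = new_items(SAMPLE_FEED, seen)  # nothing new on the next check
```

A real reader would repeat the check on a timer and download the feed before parsing it; keeping a set of already-seen identifiers is what lets each poll report only the additions.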

Community Notebook

Community Notebook: Free Software Projects

Projects on the Move

Are you ready to assess your assets and limit your liabilities? Or maybe you just want to find out where your money goes. This month we look at Grisbi, GnuCash, and HomeBank finance managers. By Rikki Endsley

This time, it's personal. My current system of logging into my bank account to see whether my magical debit card still works isn't working for me, so I've decided to test drive some open source finance managers: Grisbi, GnuCash, and HomeBank.

(Image: nobilior, 123RF)

Grisbi

First released in French in 2000, the Grisbi accounting program is now available in several languages and runs on most operating systems. To download Grisbi, visit the project's SourceForge page [1] or homepage [2]. The install and configuration is intuitive: select your country and currency, the list of "categories" you'll use (either a standard category set or an empty list with no categories defined yet), add bank details, and then create a new account from scratch or import data from an online bank account or accounting software. I opted to create a new bank account. Other options include a cash account, liabilities account, or assets account (Figure 1).

After you set up your account, a window with transactions and properties opens (Figure 2). Here you can enter, view, and reconcile transactions; adjust your bank de-

RIKKI ENDSLEY

Rikki Endsley is a freelance writer and the community manager for USENIX. In addition to Linux Magazine and Linux Pro Magazine, Endsley has been published on Linux.com, NetworkWorld.

UNIVERSITY of CALIFORNIA SANTA CRUZ UNDERSTANDING and SIMULATING SOFTWARE EVOLUTION a Dissertation Submitted in Partial Satisfac

UNIVERSITY OF CALIFORNIA SANTA CRUZ

UNDERSTANDING AND SIMULATING SOFTWARE EVOLUTION

A dissertation submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in COMPUTER SCIENCE by Zhongpeng Lin, December 2015

The Dissertation of Zhongpeng Lin is approved: Prof. E. James Whitehead, Jr., Chair; Asst. Prof. Seshadhri Comandur; Prof. Timothy J. Menzies; Tyrus Miller, Vice Provost and Dean of Graduate Studies

Copyright © by Zhongpeng Lin 2015

Table of Contents

List of Figures
List of Tables
Abstract
Dedication
Acknowledgments
1 Introduction
  1.1 Emergent Phenomena in Software
  1.2 Simulation of Software Evolution
  1.3 Research Outline
2 Power Law and Complex Networks
  2.1 Power Law
  2.2 Complex Networks
  2.3 Empirical Studies of Software Evolution
  2.4 Summary
3 Data Set and AST Differences
  3.1 Data Set
  3.2 ChangeDistiller
  3.3 Data Collection Work Flow
4 Change Size in Four Open Source Software Projects
  4.1 Methodology
  4.2 Commit Size
  4.3 Monthly Change Size
  4.4 Summary
5 Generative Models for Power Law and Complex Networks
  5.1 Generative Models for Power Law
    5.1.1 Preferential Attachment
    5.1.2 Self-organized Criticality
  5.2 Generative Models for Complex Networks
6 Simulating SOC and Preferential Attachment in Software Evolution
  6.1 Preferential Attachment
  6.2 Self-organized Criticality
  6.3 Simulation Model
  6.4 Experiment Setup

'Building' Architects and Use of Open-Source Tools Towards Achievement of Millennium Development Goals

'Building' Architects and Use of Open-source Tools Towards Achievement of Millennium Development Goals.

Oku, Onyeibo Chidozie, Department of Architecture, Faculty of Environmental Sciences, Enugu State University of Science and Technology. Email: [email protected]

ABSTRACT

Millennium Development Goals (MDGs) were established by the United Nations to improve the well-being of humans and their habitat. Whether they are the target beneficiaries or amongst the parties administering services for achieving the MDGs, humans must carry out these activities in a physical environment. Hence, the Seventh Goal of the MDGs has an indirect and far-reaching relationship with the others because it deals with the sustainable development of the built environment. Architects deliver consultancy services that span the design, documentation and construction supervision of the built environment. This study sought to determine the extent to which these professionals can do this, with respect to the Seventh Millennium Development Goal, using mainly open-source tools. The study draws from literature reviews, end-user feedback or reports, interviews with developers of applicable open-source products, and statistics from a survey, launched in 2011, for capturing how architects use ICT in their businesses. Analysis of popular open-source technologies for the Architecture, Engineering and Construction (AEC) industry shows a concentration of resources in favour of the later stages of the Architect's role, rather than the design and contract-drawing stages. Some of the better-implemented tools are either too cryptic for professionals who communicate in graphical terms, or heavily biased towards software engineering practices. The products that promise Building Information Modelling (BIM) capabilities are still at an early developmental stage.

Access to the Virtual Machines in Lab Vela

Access to the Virtual Machines in the Lab

All labs on campus offer two options:

1. A Linux Light Ubuntu 20.04.03 virtual machine that uses the PC's local disk and exposes a single user: studente, with password studente. As a result, all students who log in to a given PC and use that virtual machine share the same home directory and write to the same files, which remain only on that PC. The user CAN use system administrator rights through the sudo command.
2. A Linux Light Ubuntu 20.04.03 virtual machine customized for each student, whose image is saved on a remote storage server. When an authenticated user ([email protected]) starts this LUbuntu virtual machine, a disk image exclusively for that specific user is loaded from central storage. Files modified by the user are saved in that user's image on central storage. The same image is used even if the user works on a different PC. The user inside the VM is studente, with password studente, and HAS system administrator rights through the sudo command.

Both virtual machines currently use the VMware hypervisor.

• At first we will use the LUbuntu virtual machine that saves files on the local disk, so that we can fall back on it if the customized virtual machines fail.
• From the next lesson on, we will use the LUbuntu virtual machine that saves customized images on a remote server.

Starting the LUbuntu VM locally (1)

If the physical machine is off, turn it on. Once Windows has booted, log in to the physical Windows machine with your institutional account [email protected]. On the Windows desktop, open File Explorer and go to the folder C:\VM\LUbuntu. In that directory you will see a file LUbuntu.vmx. The vmx extension is probably not visible, and there are many files with the same name LUbuntu.

Instant Messaging Video Converter, iPhone Converter Application

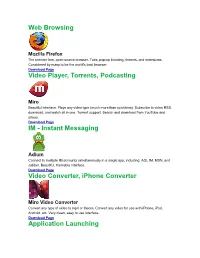

Web Browsing: Mozilla Firefox. The premier free, open-source browser. Tabs, pop-up blocking, themes, and extensions. Considered by many to be the world's best browser.

Video Player, Torrents, Podcasting: Miro. Beautiful interface. Plays any video type (much more than QuickTime). Subscribe to video RSS, download, and watch all in one. Torrent support. Search and download from YouTube and others.

IM - Instant Messaging: Adium. Connect to multiple IM accounts simultaneously in a single app, including AOL IM, MSN, and Jabber. Beautiful, themable interface.

Video Converter, iPhone Converter: Miro Video Converter. Convert any type of video to mp4 or theora. Convert any video for use with iPhone, iPod, Android, etc. Very clean, easy to use interface.

Application Launching: Quicksilver. Quicksilver lets you start applications (and do just about everything) with a few quick taps of your fingers. Warning: start using Quicksilver and you won't be able to imagine using a Mac without it.

Email: Mozilla Thunderbird. Powerful spam filtering, solid interface, and all the features you need.

Utilities: The Unarchiver. Uncompress RAR, 7zip, tar, and bz2 files on your Mac. Many new Mac users will be puzzled the first time they download a RAR file. Do them a favor and download UnRarX for them!

DVD Ripping: Handbrake. DVD ripper and MPEG-4 / H.264 encoding. Very simple to use.

RSS: Vienna. Very nice, native RSS client.

RSS: RSSOwl. Solid cross-platform RSS client.

Peer-to-Peer Filesharing: Cabos. A simple, easy to use filesharing program.

SUSE® Linux Enterprise Desktop 12 and the Workstation Extension: What's New ?

SUSE® Linux Enterprise Desktop 12 and the Workstation Extension: What's New?

Frédéric Crozat <[email protected]>, Enterprise Desktop Release Manager
Scott Reeves <[email protected]>, Enterprise Desktop Development Manager

Agenda
• Design Criteria
• Desktop Environment in SUSE Linux Enterprise 12
• GNOME Shell
• Desktop Features and Applications

Design Criteria

SUSE Linux Enterprise Desktop
• Interoperability
• Ease of Use
• Security
• Ease of Management
• Lower Costs

SUSE Linux Enterprise Desktop 12
• Focus on technical workstation
‒ Developers and System administrators
• One tool for the job
• Main desktop applications will be shipped:
‒ Mail client, Office Suite, Graphical Editors, ...
• SUSE Linux Enterprise Workstation Extension
‒ Extend SUSE Linux Enterprise Server with packages only available on SUSE Linux Enterprise Desktop. (x86-64 only)

Desktop in SUSE Linux Enterprise 12: As Part of the Common Code Base

SUSE Linux Enterprise 12 Desktop Environment
• SUSE Linux Enterprise 12 contains one primary desktop environment
• Additional light-weight environment for special use-cases:
‒ Integrated Systems
• Desktop environment is shared between the server and desktop products

SUSE Linux Enterprise 12 Desktop Environment
• GNOME 3 is the main desktop environment
‒ SLE Classic mode by default
‒ GNOME 3 Classic Mode and GNOME 3 Shell Mode also available
• SUSE Linux Enterprise 12 also ships lightweight IceWM
‒ Targeted at Integrated Systems
• QT fully supported:
‒ QT5 supported for entire SLE12 lifecycle
‒ QT4 supported, will be removed in future

Synthetic Data for English Lexical Normalization: How Close Can We Get to Manually Annotated Data?

Proceedings of the 12th Conference on Language Resources and Evaluation (LREC 2020), pages 6300–6309, Marseille, 11–16 May 2020. © European Language Resources Association (ELRA), licensed under CC-BY-NC

Synthetic Data for English Lexical Normalization: How Close Can We Get to Manually Annotated Data?

Kelly Dekker, Rob van der Goot. University of Groningen, IT University of Copenhagen. [email protected], [email protected]

Abstract

Social media is a valuable data resource for various natural language processing (NLP) tasks. However, standard NLP tools were often designed with standard texts in mind, and their performance decreases heavily when applied to social media data. One solution to this problem is to adapt the input text to a more standard form, a task also referred to as normalization. Automatic approaches to normalization have shown that they can be used to improve performance on a variety of NLP tasks. However, all of these systems are supervised, thereby being heavily dependent on the availability of training data for the correct language and domain. In this work, we attempt to overcome this dependence by automatically generating training data for lexical normalization. Starting with raw tweets, we attempt two directions: to insert non-standardness (noise) and to automatically normalize in an unsupervised setting. Our best results are achieved by automatically inserting noise. We evaluate our approaches by using an existing lexical normalization system; our best scores are achieved by a custom error generation system, which makes use of some manually created datasets. With this system, we score 94.29 accuracy on the test data, compared to 95.22 when it is trained on human-annotated data.
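The "insert noise" direction from this abstract can be illustrated with a toy Python sketch that turns clean tokens into (noisy, clean) training pairs. It is an assumption-laden illustration, not the authors' error generation system: the three corruption rules, the function names `add_noise` and `make_pairs`, and the `noise_rate` parameter are all hypothetical.

```python
import random


def add_noise(token, rng):
    """Return a noisy variant of `token` using one randomly chosen toy rule."""
    # Leave short or non-alphabetic tokens (numbers, punctuation) untouched.
    if len(token) < 4 or not token.isalpha():
        return token
    rule = rng.choice(["drop_vowel", "repeat_last", "swap"])
    if rule == "drop_vowel":
        # Drop the first vowel after the initial character, if there is one.
        for i in range(1, len(token)):
            if token[i] in "aeiou":
                return token[:i] + token[i + 1:]
        return token
    if rule == "repeat_last":
        # Lengthen the word by repeating its final character.
        return token + token[-1] * 2
    # Swap two adjacent inner characters.
    i = rng.randrange(1, len(token) - 2)
    return token[:i] + token[i + 1] + token[i] + token[i + 2:]


def make_pairs(sentence, noise_rate=0.3, seed=0):
    """Build (noisy, clean) token pairs; the clean side is the training target."""
    rng = random.Random(seed)
    pairs = []
    for tok in sentence.split():
        noisy = add_noise(tok, rng) if rng.random() < noise_rate else tok
        pairs.append((noisy, tok))
    return pairs


pairs = make_pairs("this is a clean sentence", noise_rate=1.0)
```

Because the clean token is kept alongside each corrupted one, the pairs can be fed to a supervised normalization system without any manual annotation; a fixed seed keeps the generated data reproducible.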

Portable Apps Fact Sheet

Gerry Kennedy - Fact Sheets: Portable Apps, xx/xx/2009

PORTABLE APPS FACT SHEET

What is a portable program?

A Portable App or program is a piece of software that can be accessed from a portable USB device (USB thumb or pen drive, PDA, iPod or external hard disk) and used on any computer. It could be an email program, a browser, a system recovery tool or a program to achieve a set task: reading, writing, music editing, art and design, magnification, text to speech, OCR, or providing more efficient or equitable access (e.g. Click 'n' Type onscreen keyboard). There are hundreds of programs available. All of a user's data and settings are always stored on the thumb drive, so when a user unplugs the device, none of the personal data is left behind. It provides a way of accessing and using relevant software anywhere, anytime.

Portable Apps are relatively new. Apps do NOT need to be installed on the 'host' computer. No tech support is required on campus. No permissions are required in order to have the software made available to them. Students can work anywhere, as long as they have access to a computer. The majority of programs are free (Freeware or Open Source). Fact sheets, user guides and support are all available on the web or within the Help File in the various applications. Or have students write their own! Be sure to encourage all users to BACK UP their files, as USB drives can be lost, stolen, misplaced, left behind, erased due to strong magnetic fields, or accidentally compromised (left on a parcel shelf in a car, or left in clothes and washed!).

Open Sourcing the iMac (Jan Stedehouder)

Copyright: DOSgg ProgrammaTheek BV, SoftwareBus 2007 3

Open Sourcing the iMac

Jan Stedehouder

Introduction

It doesn't happen often, but now and then I have an hour to spare for some extra tinkering. Preferably you then do something that is both fun and interesting, and in this case my trusty iMac Blue (733 MHz, 768 MB RAM and a 20 GB HD) was begging to be worked on. Over the past few months it has suffered from (1) a lack of time and (2) a series of experiments with Linux for the PowerPC (see text box). The starting point this time was: 'How far can I go installing open source software with Mac OSX as the platform?' The search once again yielded enough inspiration for this article, and this way we can also be of service to our Mac enthusiasts. We begin with Fink, a project to [...] Unix software

So it can be installed relatively harmlessly (relatively, because things can always go wrong; the risks are your own).

In its implementation, Fink follows Debian very closely and uses the package management facilities offered by dpkg, dselect and apt-get. Fink Commander is a graphical interface that somewhat resembles Synaptic. The list of available programs is not bad: we can install KDE and Gnome, for instance. Compared with the complete Debian repositories (software lists) it is limited, though. My Fink installation reports that just over 2500 packages are available, against almost 20,000 for the Debian repositories.

Ebook Ubuntu 18.04

Imprint

Ubuntu 18.04: Installation. Gnome. Configuration. Application programs. Tips and tricks. Programming. Root server configuration. WSL.

© Michael Kofler / ebooks.kofler 2018
Author: Michael Kofler
Proofreading: Markus Hinterreither
ISBN PDF: 978-3-902643-31-5
ISBN EPUB: 978-3-902643-32-2
Publisher: ebooks.kofler, Schönbrunngasse 54c, 8010 Graz, Austria
The PDF and EPUB editions of this eBook are available here: https://kofler.info/ebooks/ubuntu-18-04

Table of Contents

Preface
1 About Ubuntu
  1.1 Distinctive Features
  1.2 Ubuntu Variants
  1.3 New in Ubuntu 18.04
  1.4 Trying Ubuntu Without Installing (Live System)
2 Installation
  2.1 Disk Partitioning Basics
  2.2 EFI Basics
  2.3 Preparing the Ubuntu Installation Medium
  2.4 Shrinking the Windows Partition
  2.5 Installing Ubuntu
  2.6 LVM Installation
  2.7 Installation in a Virtual Machine (VirtualBox)
3 The Ubuntu Desktop
  3.1 Gnome
  3.2 Files and Directories (Nautilus)
  3.3 Performing Updates (Software Updater)
  3.4 Installing New Programs
4 Configuration
  4.1 Configuration Tools
  4.2 Desktop Configuration
  4.3 Gnome Shell Extensions
  4.4 Gnome Shell Themes
  4.5 Using the Gnome Desktop in Its Original State
  4.6 Keyboard
  4.7 Mouse and Touchpad
  4.8 Bluetooth
  4.9 Network Configuration
  4.10 Printer Configuration
  4.11 Graphics System
  4.12 Proprietary Hardware Drivers
  4.13 User Management
5 Application Programs
  5.1 Firefox