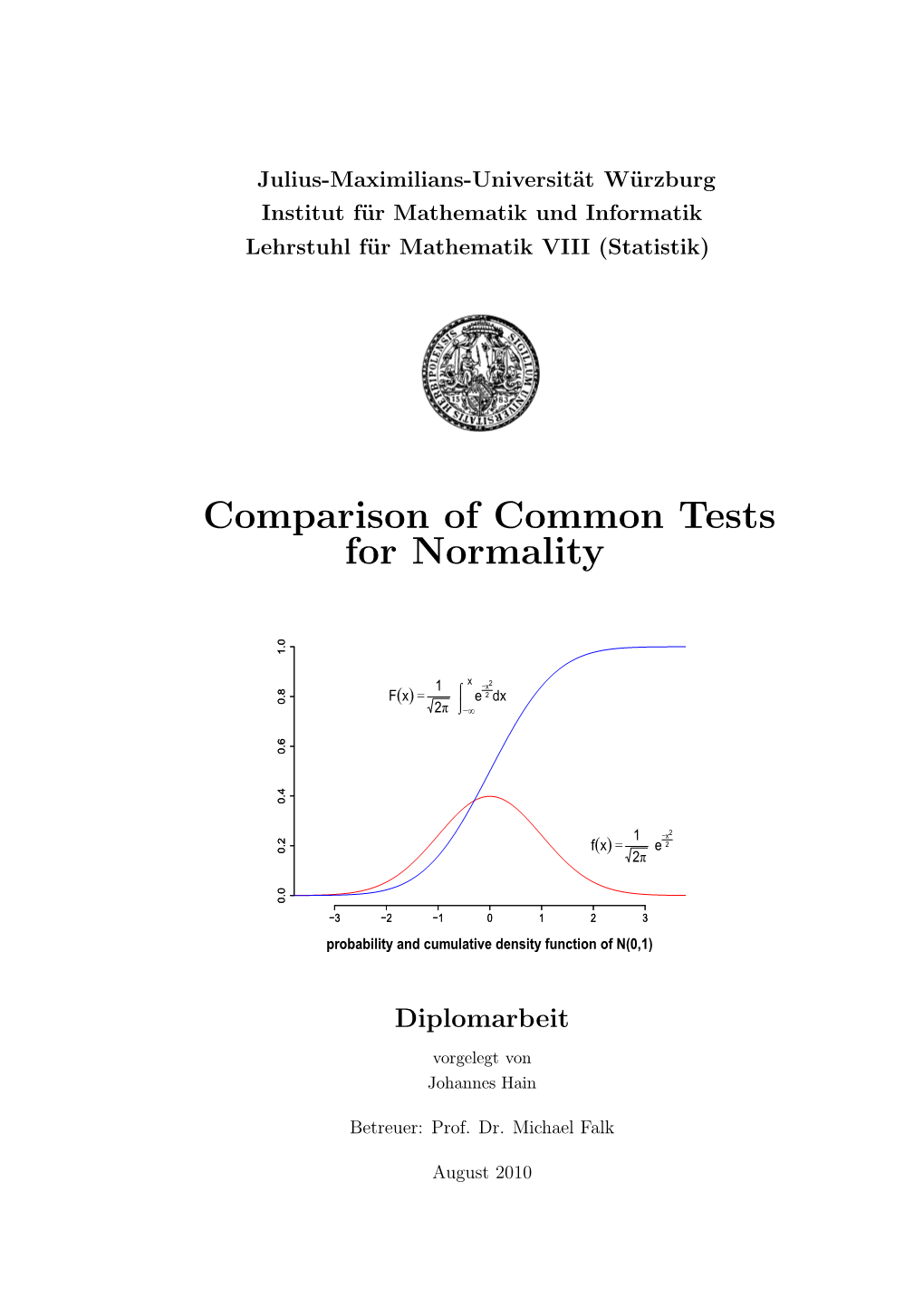

Comparison of Common Tests for Normality

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


MaxSkew and MultiSkew: Two R Packages for Detecting, Measuring and Removing Multivariate Skewness

Symmetry, Article

Cinzia Franceschini 1,† and Nicola Loperfido 2,*,†

1 Dipartimento di Scienze Agrarie e Forestali (DAFNE), Università degli Studi della Tuscia, Via San Camillo de Lellis snc, 01100 Viterbo, Italy

2 Dipartimento di Economia, Società e Politica (DESP), Università degli Studi di Urbino “Carlo Bo”, Via Saffi 42, 61029 Urbino, Italy

\* Correspondence: nicola.loperfi[email protected]

† These authors contributed equally to this work.

Received: 6 July 2019; Accepted: 22 July 2019; Published: 1 August 2019

Abstract: The R packages MaxSkew and MultiSkew measure, test and remove skewness from multivariate data using their third-order standardized moments. Skewness is measured by scalar functions of the third standardized moment matrix. Skewness is tested with either the bootstrap or under normality. Skewness is removed by appropriate linear projections. The packages might be used to recover data features such as clusters and outliers. They are also helpful in improving the performance of statistical methods such as Hotelling’s one-sample test. The Iris dataset illustrates the use of MaxSkew and MultiSkew.

Keywords: asymmetry; bootstrap; projection pursuit; symmetrization; third cumulant

1. Introduction

The skewness of a random variable $X$ satisfying $E|X|^3 < +\infty$ is often measured by its third standardized cumulant

$$\gamma_1(X) = \frac{E\left[(X-\mu)^3\right]}{\sigma^3}, \qquad (1)$$

where $\mu$ and $\sigma$ are the mean and the standard deviation of $X$. The squared third standardized cumulant $\beta_1(X) = \gamma_1^2(X)$, known as Pearson’s skewness, is also used. The numerator of $\gamma_1(X)$, that is

$$\kappa_3(X) = E\left[(X-\mu)^3\right], \qquad (2)$$

is the third cumulant (i.e., the third central moment) of $X$.
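As a rough illustration of the univariate quantities defined in this excerpt, the sketch below estimates the third standardized cumulant and Pearson's skewness from a plain list of numbers. The function name `sample_skewness` and the choice to divide by n (rather than n - 1) are assumptions made for this example; it does not reproduce the MaxSkew or MultiSkew packages, which work with multivariate data.

```python
def sample_skewness(xs):
    """Return (g1, b1): the sample third standardized moment and its square.

    Assumption of this sketch: central moments are computed by dividing by n.
    """
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n  # second central moment (variance)
    m3 = sum((x - mean) ** 3 for x in xs) / n  # third central moment (third cumulant)
    g1 = m3 / m2 ** 1.5                        # m3 / sigma^3
    return g1, g1 ** 2                         # Pearson's skewness is g1 squared


symmetric = [1.0, 2.0, 3.0, 4.0, 5.0]      # symmetric about its mean
right_skewed = [1.0, 1.0, 1.0, 2.0, 10.0]  # long right tail

g1_sym, b1_sym = sample_skewness(symmetric)
g1_skew, b1_skew = sample_skewness(right_skewed)
print(g1_sym, b1_sym)
print(g1_skew, b1_skew)
```

The symmetric sample gives a skewness of zero, while the sample with a long right tail gives a positive value.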

An Honest Approach to Parallel Trends ∗

Ashesh Rambachan† Jonathan Roth‡ (Job market paper)

December 18, 2019

Abstract

Standard approaches for causal inference in difference-in-differences and event-study designs are valid only under the assumption of parallel trends. Researchers are typically unsure whether the parallel trends assumption holds, and therefore gauge its plausibility by testing for pre-treatment differences in trends (“pre-trends”) between the treated and untreated groups. This paper proposes robust inference methods that do not require that the parallel trends assumption holds exactly. Instead, we impose restrictions on the set of possible violations of parallel trends that formalize the logic motivating pre-trends testing: namely, that the pre-trends are informative about what would have happened under the counterfactual. Under a wide class of restrictions on the possible differences in trends, the parameter of interest is set-identified and inference on the treatment effect of interest is equivalent to testing a set of moment inequalities with linear nuisance parameters. We derive computationally tractable confidence sets that are uniformly valid (“honest”) so long as the difference in trends satisfies the imposed restrictions. Our proposed confidence sets are consistent, and have optimal local asymptotic power for many parameter configurations. We also introduce fixed-length confidence intervals, which can offer finite-sample improvements for a subset of the cases we consider. We recommend that researchers conduct sensitivity analyses to show what conclusions can be drawn under various restrictions on the set of possible differences in trends. We conduct a simulation study and illustrate our recommended approach with applications to two recently published papers.

Statistical Evidence of Central Moments As Fault Indicators in Ball Bearing Diagnostics

Marco Cocconcelli 1, Giuseppe Curcurù 2 and Riccardo Rubini 1

1 University of Modena and Reggio Emilia, Via Amendola 2 - Pad. Morselli, 42122 Reggio Emilia, Italy (marco.cocconcelli, [email protected])

2 University of Palermo, Viale delle Scienze 1, 90128 Palermo, Italy ([email protected])

Abstract

This paper deals with the post-processing of vibration data coming from experimental tests. An AC motor running at constant speed is fitted with a faulted ball bearing; tests are done changing the type of fault (outer race, inner race and balls) and the stage of the fault (three levels of severity, from early to late stage). A healthy bearing is also measured for comparison. The post-processing simply consists of computing scalar quantities that are used in condition monitoring of mechanical systems: variance, skewness and kurtosis. These are the second, the third and the fourth central moment of a real-valued function respectively. The variance is the expectation of the squared deviation of a random variable from its mean, the skewness is a measure of the lopsidedness of the distribution, and the kurtosis is a measure of the heaviness of the tails of the distribution compared to a normal distribution of the same variance. Most of the papers in the last decades use them with excellent results. This paper does not propose a new fault detection technique, but focuses on the informative content of those three quantities in ball bearing diagnostics from a statistical point of view.

Chapter 5. Multiple Random Variables, 5.6: Moment Generating Functions

Alex Tsun

Last time, we talked about how to find the distribution of the sum of two independent random variables. Some of the most important use cases are to prove the results we've been using for so long: the sum of independent Binomials is Binomial, the sum of independent Poissons is Poisson (we proved this in 5.5 using convolution), etc. We'll now talk about Moment Generating Functions, which allow us to do these in a different (and arguably easier) way. These will also be used to prove the Central Limit Theorem (next section), probably the most important result in all of statistics, and to derive the Chernoff bound (6.2). The point is, these are used to prove a lot of important results. They might not be as directly applicable to problems, though.

5.6.1 Moments

First, we need to define what a moment is.

Definition 5.6.1: Moments. Let $X$ be a random variable and $c \in \mathbb{R}$ a scalar. Then the $k$th moment of $X$ is

$$E\left[X^k\right]$$

and the $k$th moment of $X$ (about $c$) is

$$E\left[(X - c)^k\right].$$

The first four moments of a distribution/RV are commonly used, though we have only talked about the first two of them. I'll briefly explain each but we won't talk about the latter two much.

1. The first moment of $X$ is the mean of the distribution, $\mu = E[X]$. This describes the center or average value.

Lecture 11: Using the LLN and CLT, Moments of Distributions

Statistics 104 (Colin Rundel), Lecture 11, February 22, 2012. Chapter 3.1, 3.3, 3.4: LLN and CLT

Markov's and Chebyshev's Inequalities

For any random variable $X \ge 0$ and constant $a > 0$:

Markov's inequality:

$$P(X \ge a) \le \frac{E(X)}{a}$$

Chebyshev's inequality:

$$P(|X - E(X)| \ge a) \le \frac{\mathrm{Var}(X)}{a^2}$$

Using Markov's and Chebyshev's Inequalities

Suppose that it is known that the number of items produced in a factory during a week is a random variable $X$ with mean 50. (Note that we don't know anything about the distribution or its pmf.)

(a) What can be said about the probability that this week's production will exceed 75 units?

(b) If the variance of a week's production is known to equal 25, then what can be said about the probability that this week's production will be between 40 and 60 units?

Accuracy of Chebyshev's inequality

How tight the inequality is depends on the distribution, but we can look at the Normal distribution since it is easy to evaluate. Let $X \sim N(\mu, \sigma^2)$; then

$$P(|X - \mu| \ge k\sigma) \le \frac{1}{k^2}$$

$$P\left(\frac{|X - \mu|}{\sigma} \ge k\right) \le \frac{1}{k^2}$$

$$P\left(\frac{X - \mu}{\sigma} \le -k \ \text{ or } \ \frac{X - \mu}{\sigma} \ge k\right) \le \frac{1}{k^2}$$
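The two factory questions and the Normal comparison in this excerpt can be worked through numerically. The sketch below is one possible reading, not the lecture's own solution: the helper names are invented for this example, and part (b) is interpreted as bounding P(40 < X < 60) = 1 - P(|X - 50| >= 10) from below.

```python
import math


def markov_bound(mean, a):
    """Upper bound on P(X >= a) for a nonnegative X with the given mean."""
    return min(1.0, mean / a)


def chebyshev_bound(variance, a):
    """Upper bound on P(|X - E(X)| >= a) for an X with the given variance."""
    return min(1.0, variance / a ** 2)


def normal_two_sided_tail(k):
    """Exact P(|Z| >= k) for a standard normal Z, via the complementary error function."""
    return math.erfc(k / math.sqrt(2))


# (a) Mean 50: P(X > 75) <= P(X >= 75) <= 50 / 75.
bound_a = markov_bound(50, 75)

# (b) Variance 25: P(40 < X < 60) = 1 - P(|X - 50| >= 10) >= 1 - 25 / 100.
bound_b = 1 - chebyshev_bound(25, 10)

print(bound_a, bound_b)

# Tightness for the Normal distribution: exact two-sided tail vs. the 1 / k^2 bound.
for k in (1, 2, 3):
    print(k, normal_two_sided_tail(k), chebyshev_bound(1.0, k))
```

Under this reading, Markov's inequality gives at most 2/3 for (a) and Chebyshev's inequality gives at least 3/4 for (b). For the Normal distribution the exact tail probability at k = 2 is roughly 0.046, far below the Chebyshev bound of 0.25, which shows how loose the bound can be.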

Machine Learning

Quantitative Analyst Program, Session 2, Oct. 14th, 2019

Roy Chen Zhang, in collaboration with McGill Investment Club and Desautels Faculty of Management

Session content at [roychenzhang.com/teaching]

QAP 2019-2020, Roy Chen Zhang (roychenzhang.com)

Outline

1 Another Brief Case Study: 1.1 "John Ioannidis; 1.2 ...and the Cross-Section of Expected Returns"

2 Statistical Foundations: 2.1 Sampling; 2.2 Moments and Cumulants

3 Distributions and Correlations: 3.1 Distributions; 3.2 Correlations

4 Testing and Validation: 4.1 Hypothesis Testing; 4.2 The Mistake Everyone Made

5 Standardization and Normalization: 5.1 Normalization; 5.2 Standardization

Section 1: Another Brief Case Study

Another Brief Case Study: 1 John Ioannidis

In 2005, physician John Ioannidis, then a professor at the University of Ioannina Medical School, made an outrageous assertion: that most research findings in all of science were, in fact, frauds. His paper, titled "Why Most Published Research Findings Are False", quickly gained traction in the scientific community, soon becoming the most downloaded paper in the Public Library of Science. This marked the beginning of a field called 'meta-research', or research on research itself.

Questions:

• Why might researchers be motivated to fake results?

• What do you think he noticed?

A Brief Case Study: 2 ...and the Cross-Section of Expected Returns

Finance Academia's own epiphany in meta-research came in the form of a 2015 paper, titled '...and the Cross-Section of Expected Returns'.

Learning Exponential Families in High-Dimensions

Learning Exponential Families in High-Dimensions: Strong Convexity and Sparsity

arXiv:0911.0054v2 [cs.LG] 16 May 2015

Sham M. Kakade, Department of Statistics, The Wharton School, University of Pennsylvania, USA

Ohad Shamir, School of Computer Science and Engineering, The Hebrew University of Jerusalem, Israel

Karthik Sridharan, Toyota Technological Institute, Chicago, USA

Ambuj Tewari, Toyota Technological Institute, Chicago, USA

Abstract

The versatility of exponential families, along with their attendant convexity properties, make them a popular and effective statistical model. A central issue is learning these models in high-dimensions, such as when there is some sparsity pattern of the optimal parameter. This work characterizes a certain strong convexity property of general exponential families, which allow their generalization ability to be quantified. In particular, we show how this property can be used to analyze generic exponential families under $L_1$ regularization.

1 Introduction

Exponential models are perhaps the most versatile and pragmatic statistical model for a variety of reasons: modelling flexibility (encompassing discrete variables, continuous variables, covariance matrices, time series, graphical models, etc); convexity properties allowing ease of optimization; and robust generalization ability. A principal issue for applicability to large scale problems is estimating these models when the ambient dimension of the parameters, $p$, is much larger than the sample size $n$ (the “$p \gg n$” regime).

Much recent work has focused on this problem in the special case of linear regression in high dimensions, where it is assumed that the optimal parameter vector is sparse (e.g. Zhao and Yu [2006], Candes and Tao [2007], Meinshausen and Yu [2009], Bickel et al.

Moment-Ratio Diagrams for Univariate Distributions

ERIK VARGO, Department of Mathematics, The College of William & Mary, Williamsburg, VA 23187

RAGHU PASUPATHY, The Grado Department of Industrial and Systems Engineering, Virginia Tech, Blacksburg, VA 24061

LAWRENCE LEEMIS, Department of Mathematics, The College of William & Mary, Williamsburg, VA 23187

We present two moment-ratio diagrams along with guidance for their interpretation. The first moment-ratio diagram is a graph of skewness versus kurtosis for common univariate probability distributions. The second moment-ratio diagram is a graph of coefficient of variation versus skewness for common univariate probability distributions. Both of these diagrams, to our knowledge, are the most comprehensive to date. The diagrams serve four purposes: (1) they quantify the proximity between various univariate distributions based on their second, third, and fourth moments; (2) they illustrate the versatility of a particular distribution based on the range of values that the various moments can assume; (3) they can be used to create a short list of potential probability models based on a data set; and (4) they clarify the limiting relationships between various well-known distribution families. The use of the moment-ratio diagrams for choosing a distribution that models given data is illustrated.

Key Words: Coefficient of Variation; Kurtosis; Skewness.

Introduction

The moment-ratio diagram for a distribution refers to the locus of a pair of standardized moments plotted on a single set of coordinate axes (Kotz and Johnson (2006)). By standardized moments we mean the coefficient of variation (CV),

$$\gamma_2 = \frac{\sigma_X}{\mu_X},$$

the skewness (or third standardized moment)

$$\gamma_3 = E\left[\left(\frac{X - \mu_X}{\sigma_X}\right)^3\right],$$

and the kurtosis (or fourth standardized moment)

$$\gamma_4 = E\left[\left(\frac{X - \mu_X}{\sigma_X}\right)^4\right],$$

where $\mu_X$ and $\sigma_X$ are the mean and the standard deviation of the implied (univariate) random variable $X$.
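To make the three ratios concrete, the following sketch converts the first four raw moments of a distribution into the point $(\gamma_2, \gamma_3, \gamma_4)$ that the distribution would occupy on such diagrams. The function name `moment_ratios` is hypothetical, and the raw moments used for the Exponential(1) and Uniform(0, 1) distributions are standard textbook values supplied here as inputs; none of this is taken from the paper itself.

```python
from fractions import Fraction
import math


def moment_ratios(m1, m2, m3, m4):
    """Map raw moments E[X], E[X^2], E[X^3], E[X^4] to (CV, skewness, kurtosis)."""
    # Central moments obtained from raw moments by binomial expansion of (X - m1)^k.
    mu2 = m2 - m1 ** 2
    mu3 = m3 - 3 * m1 * m2 + 2 * m1 ** 3
    mu4 = m4 - 4 * m1 * m3 + 6 * m1 ** 2 * m2 - 3 * m1 ** 4
    sigma = math.sqrt(mu2)
    cv = sigma / float(m1)             # gamma_2 = sigma / mu
    skew = float(mu3) / sigma ** 3     # gamma_3
    kurt = float(mu4) / float(mu2) ** 2  # gamma_4
    return cv, skew, kurt


# Exponential with rate 1: E[X^k] = k!
exponential = moment_ratios(1, 2, 6, 24)

# Uniform on (0, 1): E[X^k] = 1 / (k + 1)
uniform = moment_ratios(Fraction(1, 2), Fraction(1, 3), Fraction(1, 4), Fraction(1, 5))

print(exponential)
print(uniform)
```

The exponential distribution lands at CV 1, skewness 2 and kurtosis 9, while the uniform distribution has zero skewness and kurtosis 1.8, so the two sit far apart on a skewness-versus-kurtosis plot.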

Least Quartic Regression Criterion to Evaluate Systematic Risk in the Presence of Co-Skewness and Co-Kurtosis

Risks, Article

Giuseppe Arbia, Riccardo Bramante and Silvia Facchinetti *

Department of Statistical Sciences, Università Cattolica del Sacro Cuore, Largo Gemelli 1, 20123 Milan, Italy; [email protected] (G.A.); [email protected] (R.B.)

\* Correspondence: [email protected]

Received: 23 July 2020; Accepted: 2 September 2020; Published: 8 September 2020

Abstract: This article proposes a new method for the estimation of the parameters of a simple linear regression model which is based on the minimization of a quartic loss function. The aim is to extend the traditional methodology, based on the normality assumption, to also take into account higher moments and to provide a measure for situations where the phenomenon is characterized by strong non-Gaussian distribution like outliers, multimodality, skewness and kurtosis. Although the proposed method is very general, along with the description of the methodology, we examine its application to finance. In fact, in this field, the contribution of the co-moments in explaining the return-generating process is of paramount importance when evaluating the systematic risk of an asset within the framework of the Capital Asset Pricing Model. We also illustrate a Monte Carlo test of significance on the estimated slope parameter and an application of the method based on the top 300 market capitalization components of the STOXX® Europe 600. A comparison between the slope coefficients evaluated using the ordinary Least Squares (LS) approach and the new Least Quartic (LQ) technique shows that the perception of market risk exposure is best captured by the proposed estimator during market turmoil, and it seems to anticipate the market risk increase typical of these periods.

Lecture 12: Central Limit Theorem and CDFs

Statistics 104 (Colin Rundel), Lecture 12, February 27, 2012. CLT, Moments of Distributions

Moments

Raw moment:

$$\mu'_n = E(X^n)$$

Central moment:

$$\mu_n = E\left[(X - \mu)^n\right]$$

Normalized / standardized moment:

$$\frac{\mu_n}{\sigma^n}$$

Moment Generating Function

The moment generating function of a random variable $X$ is defined for all real values of $t$ by

$$M_X(t) = E\left[e^{tX}\right] = \begin{cases} \sum_x e^{tx} P(X = x) & \text{if } X \text{ is discrete} \\ \int_x e^{tx} f_X(x)\,dx & \text{if } X \text{ is continuous} \end{cases}$$

where $f_X$ is the density of $X$. This is called the moment generating function because we can obtain the raw moments of $X$ by successively differentiating $M_X(t)$ and evaluating at $t = 0$.

$$M_X(0) = E[e^0] = 1 = \mu'_0$$

$$M'_X(t) = \frac{d}{dt} E\left[e^{tX}\right] = E\left[\frac{d}{dt} e^{tX}\right] = E\left[X e^{tX}\right]$$

$$M'_X(0) = E[X e^0] = E[X] = \mu'_1$$

$$M''_X(t) = \frac{d}{dt} M'_X(t) = \frac{d}{dt} E\left[X e^{tX}\right] = E\left[\frac{d}{dt}\left(X e^{tX}\right)\right] = E\left[X^2 e^{tX}\right]$$

$$M''_X(0) = E[X^2 e^0] = E[X^2] = \mu'_2$$

Moment Generating Function - Properties

If $X$ and $Y$ are independent random variables then the moment generating function for the distribution of $X + Y$ is

$$M_{X+Y}(t) = E\left[e^{t(X+Y)}\right] = E\left[e^{tX} e^{tY}\right] = E\left[e^{tX}\right] E\left[e^{tY}\right] = M_X(t) M_Y(t)$$

Similarly, the moment generating function for $S_n$, the sum of iid random variables $X_1, X_2, \ldots, X_n$, is

$$M_{S_n}(t) = \left[M_X(t)\right]^n$$
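The differentiation argument and the product property in this excerpt can be checked numerically on a small discrete example. The sketch below uses a fair six-sided die, which is an example chosen here rather than one from the lecture, and approximates the derivatives at $t = 0$ with central finite differences; the step size `h` is an arbitrary assumption.

```python
import math


def mgf(pmf, t):
    """Moment generating function of a discrete pmf given as {value: probability}."""
    return sum(math.exp(t * x) * p for x, p in pmf.items())


def raw_moments_from_mgf(pmf, h=1e-4):
    """Approximate M(0), M'(0), M''(0) using central finite differences."""
    m0 = mgf(pmf, 0.0)
    first = (mgf(pmf, h) - mgf(pmf, -h)) / (2 * h)
    second = (mgf(pmf, h) - 2 * m0 + mgf(pmf, -h)) / h ** 2
    return m0, first, second


die = {face: 1 / 6 for face in range(1, 7)}
m0, first, second = raw_moments_from_mgf(die)
print(m0, first, second)  # approximately 1, E[X] = 3.5, E[X^2] = 91/6

# pmf of the sum of two independent dice, built by direct convolution.
two_dice = {}
for x, px in die.items():
    for y, py in die.items():
        two_dice[x + y] = two_dice.get(x + y, 0.0) + px * py

# Product property: M_{X+Y}(t) should equal M_X(t) * M_Y(t).
t = 0.3
print(mgf(two_dice, t), mgf(die, t) ** 2)
```

The finite-difference values only approximate the derivatives, so they match E[X] and E[X^2] up to a small numerical error rather than exactly.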

A Simulation Method for Skewness Correction

U.U.D.M. Project Report 2008:22

A simulation method for skewness correction

Måns Eriksson

Degree project in mathematical statistics, 30 credits. Supervisor and examiner: Silvelyn Zwanzig. December 2008. Department of Mathematics, Uppsala University

Abstract

Let $X_1, \ldots, X_n$ be i.i.d. random variables with known variance and skewness. A one-sided confidence interval for the mean with approximate confidence level $\alpha$ can be constructed using normal approximation. For skew distributions the actual confidence level will then be $\alpha + o(1)$. We propose a method for obtaining confidence intervals with confidence level $\alpha + o(n^{-1/2})$ using skewness correcting pseudo-random variables. The method is compared with a known method, Edgeworth correction.

Acknowledgements

I would like to thank my advisor Silvelyn Zwanzig for introducing me to the subject, for mathematical and stylistic guidance and for always encouraging me. I would also like to thank my friends and teachers at and around the department of mathematics for having inspired me to study mathematics, and for continuing to inspire me.

Contents

1 Introduction
1.1 Skewness
1.2 Setting and notation
2 The Edgeworth expansion
2.1 Definition and formal conditions
2.2 Derivations
2.2.1 Edgeworth expansion for $S_n$
2.2.2 Edgeworth expansions for more general statistics
2.2.3 Edgeworth expansion for $T_n$
2.2.4 Some remarks; skewness correction
2.3 Cornish-Fisher expansions for quantiles
3 Methods for skewness correction
3.1 Coverages of confidence intervals
3.2 Edgeworth correction

On the Asymptotic Distribution of an Alternative Measure of Kurtosis

International Journal of Advanced Statistics and Probability, 3 (2) (2015) 161-168, www.sciencepubco.com/index.php/IJASP, © Science Publishing Corporation, doi: 10.14419/ijasp.v3i2.5007, Research Paper

On the asymptotic distribution of an alternative measure of kurtosis

Kagba Suaray, California State University, Long Beach, USA. Email: [email protected]

Copyright © 2015 Kagba Suaray. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Pearson defined the fourth standardized moment of a symmetric distribution as its kurtosis. There has been much discussion in the literature concerning both the meaning of kurtosis, as well as the effectiveness of the classical sample kurtosis as a reliable measure of peakedness and tail weight. In this paper, we consider an alternative measure, developed by Crow and Siddiqui [1], used to describe kurtosis. Its value is calculated for a number of common distributions, and a derivation of its asymptotic distribution is given. Simulations follow, which reveal an interesting connection to the literature on the ratio of normal random variables.

Keywords: Kurtosis; Quantile Estimator; Ratio of Normal Variables; Spread Function.

1. Introduction

Consider $X_1, \ldots, X_n \sim F$, where $F$ is an absolutely continuous cdf with density $f$. In practice, it can be important either to classify data as heavy tailed, or to compare the tail heaviness and/or peakedness of two different samples. The primary descriptor for these purposes is the kurtosis. There has been debate (see [2]) as to whether the population kurtosis (and its variants), essentially defined as the fourth standardized moment

$$\beta_2 = \frac{\mu_4}{\sigma^4} = \frac{E\left[X - E(X)\right]^4}{\left(E\left[X - E(X)\right]^2\right)^2}$$

is a reliable way to describe the movement of probability mass from the “shoulders” to the peak and tails of a density function.