Vnios Deployment on KVM January 2019

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Storage Administration Guide Storage Administration Guide SUSE Linux Enterprise Server 12 SP4

SUSE Linux Enterprise Server 12 SP4 Storage Administration Guide Storage Administration Guide SUSE Linux Enterprise Server 12 SP4 Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006– 2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its aliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its aliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents About This Guide xii 1 Available Documentation xii 2 Giving Feedback xiv 3 Documentation Conventions xiv 4 Product Life Cycle and Support xvi Support Statement for SUSE Linux Enterprise Server xvii • Technology Previews xviii I FILE SYSTEMS AND MOUNTING 1 1 Overview -

A Practical UNIX Capability System

A Practical UNIX Capability System Adam Langley <[email protected]> 22nd June 2005 ii Abstract This report seeks to document the development of a capability security system based on a Linux kernel and to follow through the implications of such a system. After defining terms, several other capability systems are discussed and found to be excellent, but to have too high a barrier to entry. This motivates the development of the above system. The capability system decomposes traditionally monolithic applications into a number of communicating actors, each of which is a separate process. Actors may only communicate using the capabilities given to them and so the impact of a vulnerability in a given actor can be reasoned about. This design pattern is demonstrated to be advantageous in terms of security, comprehensibility and mod- ularity and with an acceptable performance penality. From this, following through a few of the further avenues which present themselves is the two hours traffic of our stage. Acknowledgments I would like to thank my supervisor, Dr Kelly, for all the time he has put into cajoling and persuading me that the rest of the world might have a trick or two worth learning. Also, I’d like to thank Bryce Wilcox-O’Hearn for introducing me to capabilities many years ago. Contents 1 Introduction 1 2 Terms 3 2.1 POSIX ‘Capabilities’ . 3 2.2 Password Capabilities . 4 3 Motivations 7 3.1 Ambient Authority . 7 3.2 Confused Deputy . 8 3.3 Pervasive Testing . 8 3.4 Clear Auditing of Vulnerabilities . 9 3.5 Easy Configurability . -

Security Assurance Requirements for Linux Application Container Deployments

NISTIR 8176 Security Assurance Requirements for Linux Application Container Deployments Ramaswamy Chandramouli This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 NISTIR 8176 Security Assurance Requirements for Linux Application Container Deployments Ramaswamy Chandramouli Computer Security Division Information Technology Laboratory This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 October 2017 U.S. Department of Commerce Wilbur L. Ross, Jr., Secretary National Institute of Standards and Technology Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology NISTIR 8176 SECURITY ASSURANCE FOR LINUX CONTAINERS National Institute of Standards and Technology Internal Report 8176 37 pages (October 2017) This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose. This p There may be references in this publication to other publications currently under development by NIST in accordance with its assigned statutory responsibilities. The information in this publication, including concepts and methodologies, may be used by federal agencies even before the completion of such companion publications. Thus, until each ublication is available free of charge from: http publication is completed, current requirements, guidelines, and procedures, where they exist, remain operative. For planning and transition purposes, federal agencies may wish to closely follow the development of these new publications by NIST. -

Course Outline & Schedule

Course Outline & Schedule Call US 408-759-5074 or UK +44 20 7620 0033 Suse Linux Advanced System Administration Curriculum Linux Course Code SLASA Duration 5 Day Course Price $2,425 Course Description This instructor led SUSE Linux Advanced System Administration training course is designed to teach the advanced administration, security, networking and performance tasks required on a SUSE Linux Enterprise system. Targeted to closely follow the official LPI curriculum (generic Linux), this course together with the SUSE Linux System Administration course will enable the delegate to work towards achieving the LPIC-2 qualification. Exercises and examples are used throughout the course to give practical hands-on experience with the techniques covered. Objectives The delegate will learn and acquire skills as follows: Perform administrative tasks with supplied tools such as YaST Advanced network configuration Network troubleshooting and analysing packets Creating Apache virtual hosts and hosting user web content Sharing Windows and Linux resources with SAMBA Configuring a DNS server and configuring DNS logging Configuring a DHCP server and client Sharing Linux network resources with NFS Creating Unit Files Configuring AutoFS direct and indirect maps Configuring a secure FTP server Configuring a SQUID proxy server Creating Btrfs subvolumes and snapshots Backing-up and restoring XFS filesystems Configuring LVM and managing Logical Volumes Managing software RAID Centralised storage with iSCSI Monitoring disk status and reliability with SMART Perpetual -

How SUSE Helps Pave the Road to S/4HANA

White Paper How SUSE Helps Pave the Road to S/4HANA Sponsored by: SUSE and SAP Peter Rutten Henry D. Morris August 2017 IDC OPINION According to SAP, there are now 6,800 SAP customers that have chosen S/4HANA. In addition, many more of the software company's customers still have to make the decision to move to S/4HANA. These customers have to determine whether they are ready to migrate their database for mission-critical workloads to a new database (SAP HANA) and a new application suite (S/4HANA). Furthermore, SAP HANA runs on Linux only, an operating environment that SAP customers may not have used extensively before. This white paper discusses the choices SAP customers have for migrating to S/4HANA on Linux and looks at how SUSE helps customers along the way. SITUATION OVERVIEW Among SAP customers, migration from traditional databases to the SAP HANA in-memory platform is continuing steadily. IDC's Data Analytics Infrastructure (SAP) Survey, November 2016 (n = 300) found that 49.3% of SAP customers in North America are running SAP HANA today. Among those that are not on SAP HANA, 29.9% will be in two to four years and 27.1% in four to six years. The slower adopters say that they don't feel rushed, stating that there's time until 2025 to make the decision, and that their current databases are performing adequately. While there is no specific order that SAP customers need to follow to get to S/4HANA, historically businesses have often migrated to SAP Business Warehouse (BW) on SAP HANA first as BW is a rewarding starting point for SAP HANA for two reasons: there is an instant performance improvement and migration is relatively easy since BW typically does not contain core enterprise data that is business critical. -

Securing Server Applications Using Apparmor TUT-1179

Securing Server Applications Using AppArmor TUT-1179 Brian Six Sales Engineer – SUSE [email protected] 1 Agenda AppArmor Introduction How to interact and configure Demo with simple script Can AppArmor help with containers Final thoughts 2 Intro to AppArmor 3 What is AppArmor? Bad software does what it shouldn’t do Good software does what it should do Secure software does what it should do, and nothing else AppArmor secures the Good and the Bad software 4 What is AppArmor? Mandatory Access Control (MAC) security system Applies policies to Applications Access to files and paths, network functions, system functions 5 What is AppArmor? Dynamic for existing profiled applications Regardless of the “user” the process is running as, the policy can be enforced. Root is no exception. 6 What is AppArmor? 7 Architecture Logging and OS Component Application Alerting AppArmor AppArmor Interface Application Profile AppArmor Linux Kernel 2.6 and later LSM Interface 8 How To Interact And Configure 9 How To Interact With AppArmor Apparmor-Utils First Install package 10 How To Interact With AppArmor Used more often 11 What Do Some Of These Do aa-status: Display aa-genprof : Create aa-logprof: Update aa-complain: Set a aa-enforce: Set a status of profile a profile an existing profile profile in complain profile in enforce enforcement mode mode 12 Common Permissions r – read w – write k – lock ux - unconstrained execute Ux - unconstrained execute – scrub px – discrete profile execute Px - discrete profile execute – scrub ix - inherit execute cx - local security profile l – link a – append m - mmap 13 Profile At-A-Glance https://doc.opensuse.org/documentation/leap/archive/42.3/security/html/book.security/cha.apparmor.profiles.html # a comment about foo's local (children) profile for #include <tunables/global> /usr/bin/foobar. -

A Multiplatform Pseudo Terminal

A Multi-Platform Pseudo Terminal API Project Report Submitted in Partial Fulfillment for the Masters' Degree in Computer Science By Qutaiba Mahmoud Supervised By Dr. Clinton Jeffery ABSTRACT This project is the construction of a pseudo-terminal API, which will provide a pseudo-terminal interface access to interactive programs. The API is aimed at developing an extension to the Unicon language to allow Unicon programs to easily utilize applications that require user interaction via a terminal. A pseudo-terminal is a pair of virtual devices that provide a bidirectional communication channel. This project was constructed to enable an enhancement to a collaborative virtual environment, because it will allow external tools such to be utilized within the same environment. In general the purpose of this API is to allow the UNICON runtime system to act as the user via the terminal which is provided by the API, the terminal is in turn connected to a client process such as a compiler, debugger, or an editor. It can also be viewed as a way for the UNICON environment to control and customize the input and output of external programs. Table of Contents: 1. Introduction 1.1 Pseudo Terminals 1.2 Other Terminals 1.3 Relation To Other Pseudo Terminal Applications. 2. Methodology 2.1 Pseudo Terminal API Function Description 3. Results 3.1 UNIX Implementation 3.2 Windows Implementation 4. Conclusion 5. Recommendations 6. References Acknowledgments I would like to thank my advisor, Dr. Clinton Jeffery, for his support, patience and understanding. Dr. Jeffery has always been prompt in delivering and sharing his knowledge and in providing his assistance. -

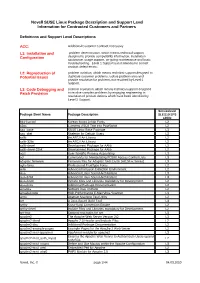

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners Definitions and Support Level Descriptions ACC: Additional Customer Contract necessary L1: Installation and problem determination, which means technical support Configuration designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors. L2: Reproduction of problem isolation, which means technical support designed to Potential Issues duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support. L3: Code Debugging and problem resolution, which means technical support designed Patch Provision to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support. Servicelevel Package Short Name Package Description SLES10 SP3 s390x 844-ksc-pcf Korean 8x4x4 Johab Fonts L2 a2ps Converts ASCII Text into PostScript L2 aaa_base SUSE Linux Base Package L3 aaa_skel Skeleton for Default Users L3 aalib An ASCII Art Library L2 aalib-32bit An ASCII Art Library L2 aalib-devel Development Package for AAlib L2 aalib-devel-32bit Development Package for AAlib L2 acct User-Specific Process Accounting L3 acl Commands for Manipulating POSIX Access Control Lists L3 adaptec-firmware Firmware files for Adaptec SAS Cards (AIC94xx Series) L3 agfa-fonts Professional TrueType Fonts L2 aide Advanced Intrusion Detection Environment L2 alsa Advanced Linux Sound Architecture L3 alsa-32bit Advanced Linux Sound Architecture L3 alsa-devel Include Files and Libraries mandatory for Development. L2 alsa-docs Additional Package Documentation. L2 amanda Network Disk Archiver L2 amavisd-new High-Performance E-Mail Virus Scanner L3 amtu Abstract Machine Test Utility L2 ant A Java-Based Build Tool L3 anthy Kana-Kanji Conversion Engine L2 anthy-devel Include Files and Libraries mandatory for Development. -

Proceedings of the Linux Symposium

Proceedings of the Linux Symposium Volume One June 27th–30th, 2007 Ottawa, Ontario Canada Contents The Price of Safety: Evaluating IOMMU Performance 9 Ben-Yehuda, Xenidis, Mostrows, Rister, Bruemmer, Van Doorn Linux on Cell Broadband Engine status update 21 Arnd Bergmann Linux Kernel Debugging on Google-sized clusters 29 M. Bligh, M. Desnoyers, & R. Schultz Ltrace Internals 41 Rodrigo Rubira Branco Evaluating effects of cache memory compression on embedded systems 53 Anderson Briglia, Allan Bezerra, Leonid Moiseichuk, & Nitin Gupta ACPI in Linux – Myths vs. Reality 65 Len Brown Cool Hand Linux – Handheld Thermal Extensions 75 Len Brown Asynchronous System Calls 81 Zach Brown Frysk 1, Kernel 0? 87 Andrew Cagney Keeping Kernel Performance from Regressions 93 T. Chen, L. Ananiev, and A. Tikhonov Breaking the Chains—Using LinuxBIOS to Liberate Embedded x86 Processors 103 J. Crouse, M. Jones, & R. Minnich GANESHA, a multi-usage with large cache NFSv4 server 113 P. Deniel, T. Leibovici, & J.-C. Lafoucrière Why Virtualization Fragmentation Sucks 125 Justin M. Forbes A New Network File System is Born: Comparison of SMB2, CIFS, and NFS 131 Steven French Supporting the Allocation of Large Contiguous Regions of Memory 141 Mel Gorman Kernel Scalability—Expanding the Horizon Beyond Fine Grain Locks 153 Corey Gough, Suresh Siddha, & Ken Chen Kdump: Smarter, Easier, Trustier 167 Vivek Goyal Using KVM to run Xen guests without Xen 179 R.A. Harper, A.N. Aliguori & M.D. Day Djprobe—Kernel probing with the smallest overhead 189 M. Hiramatsu and S. Oshima Desktop integration of Bluetooth 201 Marcel Holtmann How virtualization makes power management different 205 Yu Ke Ptrace, Utrace, Uprobes: Lightweight, Dynamic Tracing of User Apps 215 J. -

High Velocity Kernel File Systems with Bento

High Velocity Kernel File Systems with Bento Samantha Miller, Kaiyuan Zhang, Mengqi Chen, and Ryan Jennings, University of Washington; Ang Chen, Rice University; Danyang Zhuo, Duke University; Thomas Anderson, University of Washington https://www.usenix.org/conference/fast21/presentation/miller This paper is included in the Proceedings of the 19th USENIX Conference on File and Storage Technologies. February 23–25, 2021 978-1-939133-20-5 Open access to the Proceedings of the 19th USENIX Conference on File and Storage Technologies is sponsored by USENIX. High Velocity Kernel File Systems with Bento Samantha Miller Kaiyuan Zhang Mengqi Chen Ryan Jennings Ang Chen‡ Danyang Zhuo† Thomas Anderson University of Washington †Duke University ‡Rice University Abstract kernel-level debuggers and kernel testing frameworks makes this worse. The restricted and different kernel programming High development velocity is critical for modern systems. environment also limits the number of trained developers. This is especially true for Linux file systems which are seeing Finally, upgrading a kernel module requires either rebooting increased pressure from new storage devices and new demands the machine or restarting the relevant module, either way on storage systems. However, high velocity Linux kernel rendering the machine unavailable during the upgrade. In the development is challenging due to the ease of introducing cloud setting, this forces kernel upgrades to be batched to meet bugs, the difficulty of testing and debugging, and the lack of cloud-level availability goals. support for redeployment without service disruption. Existing Slow development cycles are a particular problem for file approaches to high-velocity development of file systems for systems. -

Efficient Parallel I/O on Multi-Core Architectures

Lecture series title/ lecture title Efficient parallel I/O on multi-core architectures Adrien Devresse CERN IT-SDC-ID Thematic CERN School of Computing 2014 1 Author(s) names – Affiliation Lecture series title/ lecture title How to make I/O bound application scale with multi-core ? What is an IO bound application ? → A server application → A job that accesses big number of files → An application that uses intensively network 2 Author(s) names – Affiliation Lecture series title/ lecture title Stupid example: Simple server monothreaded // create socket socket_desc = socket(AF_INET , SOCK_STREAM , 0); // bind the socket bind(socket_desc,(struct sockaddr *)&server , sizeof(server)); listen(socket_desc , 100); //accept connection from an incoming client while(1){ // declarations client_sock = accept(socket_desc, (struct sockaddr *)&client, &c); //Receive a message from client while( (read_size = recv(client_sock , client_message , 2000 , 0)) > 0{ // Wonderful, we have a client, do some useful work std::string msg("hello bob"); write(client_sock, msg.c_str(), msg.size()); } } 3 Author(s) names – Affiliation Lecture series title/ lecture title Stupid example: Let's make it parallel ! int main(int argc, char** argv){ // creat socket void do_work(int socket){ socket_desc = socket(AF_INET , SOCK_STREAM , 0); //Receive a message while( (read_size = // bind the socket recv(client_sock , bind(socket_desc, server , sizeof(server)); client_message , 2000 , 0)) > 0{ listen(socket_desc , 100); // Wonderful, we have a client // useful works //accept connection -

Container-Based Virtualization for Byte-Addressable NVM Data Storage

2016 IEEE International Conference on Big Data (Big Data) Container-Based Virtualization for Byte-Addressable NVM Data Storage Ellis R. Giles Rice University Houston, Texas [email protected] Abstract—Container based virtualization is rapidly growing Storage Class Memory, or SCM, is an exciting new in popularity for cloud deployments and applications as a memory technology with the potential of replacing hard virtualization alternative due to the ease of deployment cou- drives and SSDs as it offers high-speed, byte-addressable pled with high-performance. Emerging byte-addressable, non- volatile memories, commonly called Storage Class Memory or persistence on the main memory bus. Several technologies SCM, technologies are promising both byte-addressability and are currently under research and development, each with dif- persistence near DRAM speeds operating on the main memory ferent performance, durability, and capacity characteristics. bus. These new memory alternatives open up a new realm of These include a ReRAM by Micron and Sony, a slower, but applications that no longer have to rely on slow, block-based very large capacity Phase Change Memory or PCM by Mi- persistence, but can rather operate directly on persistent data using ordinary loads and stores through the cache hierarchy cron and others, and a fast, smaller spin-torque ST-MRAM coupled with transaction techniques. by Everspin. High-speed, byte-addressable persistence will However, SCM presents a new challenge for container-based give rise to new applications that no longer have to rely on applications, which typically access persistent data through slow, block based storage devices and to serialize data for layers of block based file isolation.