• Central Florida

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Central Florida Future, Vol. 34 No. 14, November 21, 2001

University of Central Florida STARS Central Florida Future University Archives 11-21-2001 Central Florida Future, Vol. 34 No. 14, November 21, 2001 Part of the Mass Communication Commons, Organizational Communication Commons, Publishing Commons, and the Social Influence and Political Communication Commons Find similar works at: https://stars.library.ucf.edu/centralfloridafuture University of Central Florida Libraries http://library.ucf.edu This Newspaper is brought to you for free and open access by the University Archives at STARS. It has been accepted for inclusion in Central Florida Future by an authorized administrator of STARS. For more information, please contact [email protected]. Recommended Citation "Central Florida Future, Vol. 34 No. 14, November 21, 2001" (2001). Central Florida Future. 1606. https://stars.library.ucf.edu/centralfloridafuture/1606

HAPPY THANKSGIVING! from The Central Florida Future • November 21, 2001 • THE STUDENT NEWSPAPER SERVING UCF SINCE 1968 • www.UCFfuture.com

Headlines: International Week offered forums, Study Abroad Fair; Hunger Banquet educates students about poverty. Staff writers: Krista Zilizi, Padra Sanchez.

Students got the chance to experience the different social classes that populate the world at Volunteer UCF's annual Hunger Banquet last week. "This is a small slice of life as it plays out each day in the world," said Nausheen Farooqui, Hunger and

On Nov. 13, UCF held a series of open forums for students, faculty and staff about pertinent international issues. Held in the Student Union's Key West Room, each forum followed a town hall format, with a panel of guest speakers and open microphones

Photo (Joe Kaleita / CFF): lower class students, who were forced to sit on the floor, had to eat with "rats". -

Carnegie Institution of Washington Department of Terrestrial Magnetism Washington, DC 20015-1305

Carnegie Institution of Washington Department of Terrestrial Magnetism Washington, DC 20015-1305

This report covers astronomical research carried out during the period July 1, 1994 – June 30, 1995. Astronomical studies at the Department of Terrestrial Magnetism (DTM) of the Carnegie Institution of Washington encompass observational and theoretical fields of solar system and planet formation and evolution, stars and star formation, galaxy kinematics and evolution, and various areas where these fields intersect.

1. PERSONNEL Staff Members: Sean C. Solomon (Director), Conel M. O'D. Alexander, Alan P. Boss, John A. Graham, Vera C. Rubin, François Schweizer, George W. Wetherill. Postdoctoral Fellows: Harold M. Butner, John E. Chambers, Prudence N. Foster, Munir Humayun, Stacy S. McGaugh, Bryan W. Miller, Elizabeth A. Myhill, David L.

protostellar outflows have this much momentum out to relatively large distances. Planetary nebulae do not, but with their characteristic high post-shock temperatures, collapse again occurs. Hence all three outflows appear to be able to trigger the collapse of molecular clouds to form stars. Foster and Boss are continuing this work and examining the possibility of introducing 26Al into the early solar system in a heterogeneous manner. Some meteoritical inclusions (chondrules) show no evidence for live 26Al at the time of their formation. So either the chondrules formed after the aluminium had decayed away, or the solar nebula was not uniformly populated with the isotope. Foster & Boss are exploring the injection of shock wave particles into the collapsing solar system. Preliminary results are that ~40% of the incident shock particles are swallowed by the collapsing -

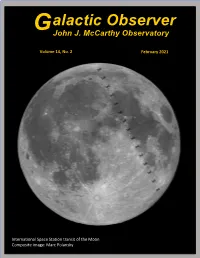

Galactic Observer

Galactic Observer, John J. McCarthy Observatory, Volume 14, No. 2, February 2021. International Space Station transit of the Moon (composite image: Marc Polansky). February Astronomy Calendar and Space Exploration Almanac.

Image labels: Bel'kovich (Long 90° E); Hercules (L) and Atlas (R); Posidonius; Taurus-Littrow; Apollo 17; Tranquility Base; Apollo 11; Mare Nectaris; Altai Scarp.

Six-Day-Old Moon mosaic captured with an antique telescope built by John Benjamin Dancer. Dancer is credited with being the first to photograph the Moon in England in February 1852. Apollo 11 and 17 landing sites are visible in the images, as well as Mare Nectaris, one of the older impact basins on the Moon. Photos: Bill Cloutier

In This Issue: Out the Window on Your Left; Valentine Dome; Rocket Trivia; Mars Time (Landing of Perseverance); Destination: Jezero Crater; Revisiting an Exoplanet Discovery; Moon Rock in the White House; Solar Beaming Project -

The Hubble Space Telescope – 10 Years On

The Hubble Space Telescope – 10 Years On. P. Benvenuti & L. Lindberg Christensen, ESA/ESO Space Telescope European Coordinating Facility, Garching, Germany. Bulletin 104, November 2000.

Introduction: ESA is NASA's partner in the Hubble Space Telescope Project. ESA built the Faint Object Camera (the HST instrument that delivers images with the highest spatial resolution), provided the solar panels that power the spacecraft, and supports a team of 15 scientists at the Space Telescope Science Institute in Baltimore (STScI), USA. In return, a minimum of 15% of the Telescope's observing time is guaranteed for projects and research submitted by European astronomers from ESA's Member States. In reality, the high standard of projects from European astronomers has, so far, won them some 20% of the total observing time.

Last Christmas Eve was a very special one for ESA astronauts Claude Nicollier and Jean-François Clervoy: together with their American colleagues, they spent it aboard the Space Shuttle 'Discovery', after concluding the latest scheduled repair mission to the orbiting Hubble Space Telescope (HST). This third Shuttle refurbishment mission to HST was, like its two predecessors, a resounding success. Only days later, as Hubble entered the new millennium, came the first beautiful images of a complex gravitationally lensing cluster of galaxies.

The initial ESA/NASA Memorandum of Understanding on HST expires 11 years after its launch, i.e. in April 2001. Both ESA and NASA are convinced that the collaboration on HST has been very successful, not merely in the development and initial operation of the -

Astrophysics in 2006

ASTROPHYSICS IN 2006. Virginia Trimble (1), Markus J. Aschwanden (2), and Carl J. Hansen (3). (1) Department of Physics and Astronomy, University of California, Irvine, CA 92697-4575, Las Cumbres Observatory, Santa Barbara, CA: ([email protected]) (2) Lockheed Martin Advanced Technology Center, Solar and Astrophysics Laboratory, Organization ADBS, Building 252, 3251 Hanover Street, Palo Alto, CA 94304: ([email protected]) (3) JILA, Department of Astrophysical and Planetary Sciences, University of Colorado, Boulder CO 80309: ([email protected]) Received ... : accepted ...

Abstract. The fastest pulsar and the slowest nova; the oldest galaxies and the youngest stars; the weirdest life forms and the commonest dwarfs; the highest energy particles and the lowest energy photons. These were some of the extremes of Astrophysics 2006. We attempt also to bring you updates on things of which there is currently only one (habitable planets, the Sun, and the universe) and others of which there are always many, like meteors and molecules, black holes and binaries.

Keywords: cosmology: general, galaxies: general, ISM: general, stars: general, Sun: general, planets and satellites: general, astrobiology

CONTENTS: 1. Introduction (1.1 Up; 1.2 Down; 1.3 Around). 2. Solar Physics: 2.1 The solar interior (2.1.1 From neutrinos to neutralinos; 2.1.2 Global helioseismology; 2.1.3 Local helioseismology; 2.1.4 Tachocline structure; 2.1.5 Dynamo models); 2.2 Photosphere (2.2.1 Solar radius and rotation; 2.2.2 Distribution of magnetic fields; 2.2.3 Magnetic flux emergence rate; 2.2.4 Photospheric motion of magnetic fields; 2.2.5 Faculae production; 2.2.6 The photospheric boundary of magnetic fields; 2.2.7 Flare prediction from photospheric fields)

arXiv:0705.1730v1 [astro-ph] 11 May 2007. © 2008 Springer Science + Business Media. -

Trajectory Design Tools for Libration and Cis-Lunar Environments

Trajectory Design Tools for Libration and Cis-Lunar Environments. David C. Folta, Cassandra M. Webster, Natasha Bosanac, Andrew Cox, Davide Guzzetti, and Kathleen C. Howell. National Aeronautics and Space Administration/ Goddard Space Flight Center, Greenbelt, MD, 20771 [email protected], [email protected] School of Aeronautics and Astronautics, Purdue University, West Lafayette, IN 47907 {nbosanac,cox50,dguzzett,howell}@purdue.edu

ABSTRACT: Innovative trajectory design tools are required to support challenging multi-body regimes with complex dynamics, uncertain perturbations, and the integration of propulsion influences. Two distinctive tools, Adaptive Trajectory Design and the General Mission Analysis Tool have been developed and certified to provide the astrodynamics community with the ability to design multi-body trajectories. In this paper we discuss the multi-body design process and the capabilities of both tools. Demonstrable applications to confirmed missions, the Lunar IceCube Cubesat lunar mission and the Wide-Field Infrared Survey Telescope (WFIRST) Sun-Earth L2 mission, are presented.

science orbit. WFIRST trajectory design is based on an optimal direct-transfer trajectory to a specific Sun-Earth L2 quasi-halo orbit. Trajectory design in support of lunar and libration point missions is becoming more challenging as more complex mission designs are envisioned. To meet these greater challenges, trajectory design software must be developed or enhanced to incorporate improved understanding of the Sun-Earth/Moon dynamical solution space and to encompass new optimal methods. Thus the support community needs to improve the efficiency and expand the capabilities of current trajectory design approaches. For example, invariant manifolds, derived from dynamical systems theory, have been applied to the trajectory design of -

Monthly New Awards – September 2018

Monthly New Awards – September 2018

Abdolvand, Dr. Reza Acoustoelectric Amplification in Composite Piezoelectric-Silicon Cavities: A Circuit-Less Amplification Paradigm for RF Signal Processing and Wireless Sensing National Science Foundation $319,999 College of Engineering and Computer

Ahmed, Kareem A Fuel Injectors for Combustion Instability Reduction Creare, LLC College of Engineering and Computer Science

Amezcua Correa, Rodrigo 19 Fibers Photonics Lantern Harris Corporation $20,000 College of Optics and Photonics

Amezcua Correa, Rodrigo / Li, Guifang / Schulzgen, Axel Multi-Core Fiber True-Time Delays for Ultra-Wideband Analog Signal Processing Systems Harris Corporation $90,000 College of Optics and Photonics

Azevedo, Roger Convergence HTF: Collaborative: Workshop on Convergence Research about Multimodal Human Learning Data during Human Machine Interactions National Science Foundation $12,515 College of Community Innovation and Education

Batarseh, Issa E Engineering Research Center for Energy Storage System Enabled Society National Science Foundation $100,000 College of Engineering and Computer Science

Bridge, Candice M Public Service to the Offices of Jonathan Rose Public Attorney Jonathan Rose Public Attorney $530 College of Sciences – Chemistry

Brisbois, Elizabeth Bioinspired liquid-infused nitric oxide (NO) releasing surfaces for advanced insulin delivery systems JDRF International $373,999 College of Engineering and Computer Science

Brisset, Julie Carine Margaret / Pinilla-Alonso, Noemi Active Asteroids: Investigating Surface -

UCF Postal Services Zip+4 Routing Guide Last Updated 26-August-2021

UCF Postal Services Zip+4 Routing Guide, Last Updated 26-August-2021

| DEPARTMENT NAME | ZIP+4 | PO BOX |
|---|---|---|
| Academic Affairs/Provost/Faculty Affairs | 0065 | 160065 |
| Academic Services | 0125 | 160125 |
| Academic Services for Student Athletes | 3554 | 163554 |
| Accounting, Kenneth G. Dixon School of | 1400 | 161400 |
| Activity & Service fee Business Office | 3230 | 163230 |
| Administration & Finance Division | 0020 | 160020 |
| Advanced Transportation Systems Simulation | 2450 | 162450 |
| Advertising and Public Relations | 1424 | 161424 |
| African American Studies | 1996 | 161996 |
| Air Force ROTC | 2380 | 162380 |
| Alumni Relations/Alumni Association | 0046 | 160046 |
| AMPAC - Advanced Materials Processing & Analysis | 2455 | 162455 |
| Anthropology | 1361 | 161361 |
| Arboretum | 2368 | 162368 |
| Arena, CFE (Global-Spectrum) | 1500 | 161500 |
| Army ROTC | 3377 | 163377 |
| Arts and Humanities Dean's Office | 1990 | 161990 |
| Arts and Humanities Student Advising (CAHSA) | 1993 | 161993 |
| Athletic Training Program | 2205 | 162205 |
| Athletics - Football | 3555 | 163555 |
| Athletics - Mens Basketball | 3555 | 163555 |
| Athletics Department | 3555 | 163555 |
| Autism & Related Disabilities, (CARD) | 2202 | 162202 |
| AVP and LGBT | 3244 | 163244 |
| Biology | 2368 | 162368 |
| Biomedical Sciences, Burnett School of | 2364 | 162364 |
| Biomolecular Research Annex | 3227 | 163227 |
| Burnett Biomedical Sciences - Lake Nona | 7407 | 167407 |
| Burnett Honors College | 1800 | 161800 |
| Business Administration Dean's Office | 1991 | 161991 |
| Business Administration, College of | 1400 | 161400 |
| Business Services | 0055 | 160055 |
| Card Services | 0056 | 160056 |
| Career Services | 0165 | 160165 |

2 Jobs 7 Partnerships 1 National Impact 4 out of This World 6 Unique

SPACE RELATED RESEARCH has been part of UCF's DNA since day one. Today it boasts a team of more than 20 experts focused on exploring our solar system and helping use its resources to better our world. Our experts have decades of experience working with NASA and commercial space companies such as SpaceX, Blue Origin and Virgin Galactic. UCF is also home to centers and institutes recognized for their excellence in space-related research. Our researchers and students are developing ways to mine asteroids, create new rocket fuels, and mitigate the effects of weightlessness on the human body and mind. We even count two astronauts among our graduates: Fernando Caldeiro and Nicole Stott. When it comes to space, UCF is a powerhouse.

1 National Impact: UCF's researchers are part of major missions to asteroids, Mars, Pluto, the moon and beyond. Together UCF has participated in dozens of NASA missions including OSIRIS-REx, Lucy, Mars Curiosity, New Horizons, Lunar Recon Orbiter, Cassini, and Lunar Trailblazer among others.

2 Jobs: UCF is No. 1 for placing graduates in aerospace-related jobs. Our alumni work everywhere from public agencies to commercial companies that have a stake in space. About 30 percent of the employees at Kennedy Space Center have a UCF degree. Some alumni have launched their own companies, such as Made in Space, which has a 3D printer on the International Space Station.

3 Local Impact: The university holds several events during the year on campus and at local schools as a way of encouraging students to continue STEM and also to inspire the public

4 Out of This World: Our connections truly extend into outer space with the existence of a UCF planet and multiple UCF asteroids. -

Trajectory Design Tools for Libration and Cislunar Environments

TRAJECTORY DESIGN TOOLS FOR LIBRATION AND CISLUNAR ENVIRONMENTS. David C. Folta, Cassandra M. Webster, Natasha Bosanac, Andrew D. Cox, Davide Guzzetti, and Kathleen C. Howell. National Aeronautics and Space Administration Goddard Space Flight Center, Greenbelt, MD, 20771 [email protected], [email protected] School of Aeronautics and Astronautics, Purdue University, West Lafayette, IN 47907 {nbosanac,cox50,dguzzett,howell}@purdue.edu

ABSTRACT: Innovative trajectory design tools are required to support challenging multi-body regimes with complex dynamics, uncertain perturbations, and the integration of propulsion influences. Two distinctive tools, Adaptive Trajectory Design and the General Mission Analysis Tool have been developed and certified to provide the astrodynamics community with the ability to design multi-body trajectories. In this paper we discuss the multi-body design process and the capabilities of both tools. Demonstrable applications to confirmed missions, the Lunar IceCube CubeSat mission and the Wide-Field Infrared Survey Telescope Sun-Earth L2 mission, are presented.

supplies insight into the natural dynamics associated with multi-body systems. In fact, such information enables a rapid and robust methodology for libration point orbit and transfer design when used in combination with numerical techniques such as targeting and optimization. Strategies that offer interactive access to a variety of solutions enable a thorough and guided exploration of the trajectory design space. ATD is intended to provide access to well-known solutions that exist within the framework of the Circular Restricted Three-Body Problem (CR3BP) by leveraging both interactive and automated features to facilitate trajectory design in multi-body regimes [8]. In particular, well-known solutions from the CR3BP, such as body-centered, resonant and libration point periodic and -

Get to Know Us

UNIVERSITY PROFILE: UNIVERSITY OF CENTRAL FLORIDA

ABOUT UCF: FOUNDED IN 1963, the University of Central Florida (UCF) is a metropolitan research university built to make a better future for our students and society. We solve tomorrow's greatest challenges through a commitment to academic, inclusive and operational excellence. Leveraging innovative learning, discovery and partnerships, we foster social mobility while developing the skilled talent needed to advance industry for our region, state and beyond. These are some of the reasons why U.S. News & World Report ranks UCF as Florida's most innovative university, and one of the nation's top 20 most innovative. UCF is also ranked as a

UCF isn't just meeting Florida's needs today but helping to fuel future prosperity and discovery. The university confers more than 16,000 degrees each year and benefits from a diverse faculty and staff who create a welcoming environment and opportunities for approximately 72,000 students to grow, learn and succeed.

ACADEMIC, INCLUSIVE AND OPERATIONAL EXCELLENCE: UCF aspires to be one of the nation's leading innovative research universities, with a focus on student success and contributing to the betterment of society. A different kind of university driven by its entrepreneurialism and optimism, UCF will not be defined by its contemporaries, and rather seeks to forge a new path with the potential to be a leading metropolitan research university that will help to define the future of higher education. Following years of growth, the university will now focus on building the critical infrastructure that will support its pursuit of excellence. -

University of Central Florida Interdepartmental Journals Contact List

University of Central Florida Interdepartmental Journals Contact List The following provides contact information for Finance and Accounting (F&A) staff who can assist a journal creator in the processing of Interdepartmental Journals (source codes CON, IDI, or IDS) when issues arise or the status of a journal needs to be known. Appendix A provides additional contact information while Appendix B provides status and error examples from UCF Financials. Contact Issue IDI & IDS source code journals that do not contain a Contracts & Grants (C&G) Project, submitted for approval but not yet posted. See Appendix A F&A General Accounting (GA) Staff below. CON source or IDI source code that contains a construction Project (fund Tuyet Anh Nguyen code = 51XXX) journals, submitted for approval but not yet posted. UCF Financials Service Desk Offline Journal Template Setup or Upload Issues. ID Source Code C&G Project journals pending ORC approval. (These journals are first routed to ORC for approval then to F&A C&G for approval.) ORC ID Transfer See Appendix B below. Primary: Esther D'Silva IDI Source Code C&G Project journals pending F&A C&G approval. See Backup: Maribel Villanueva Appendix B below. Primary: Rashida Pierre-Graham Journal Status is an "E" or Budget Status is an "E". See Appendix B below. Financials Service Desk Journal Status is a "V" and Budget Status is an "N". See Appendix B below. Page 1 of 14 2/23/2021 Appendix A Appendix A applies to IDI and IDS Source code journals that do not contain a Contracts and Grants (C&G) project and that have been submitted for approval but have not yet posted.