Inside an Apple

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

CPU Board Catalog: Supported CPUs: Intel: Core i7, Xeon-E5; Freescale: T4240, P4080, MPC8640D; AMD: Radeon HD 6970M, HD 7970M GPGPU; NVIDIA: Fermi, Kepler Architecture GPGPU

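CPU Board Catalog for Embedded Systems. Supported CPUs: Intel: Core i7, Xeon-E5; Freescale: T4240, P4080, MPC8640D; AMD: Radeon HD 6970M, HD 7970M GPGPU; NVIDIA: Fermi, Kepler Architecture GPGPU. Supported bus standards: OpenVPX, VME/VXS, CompactPCI, PMC/XMC, ATCA/AMC, PCI Express. 403102 Ⓒ MISH International Co., Ltd. MISH International offers the following services to help you adopt CPU boards quickly. CPU board loan service: MISH International lends out a range of evaluation CPU boards so that users can evaluate performance before actually adopting a product. During the loan we provide full technical support for the CPU board, including the real-time OS. CPU board performance evaluation and verification service: our dedicated CPU board support engineers evaluate and verify CPU board performance according to the customer's requirements. For example, we verify and report the data users need for system integration, such as FFT processing speed and board-to-board data transfer speed. (Depending on the request, a separate fee may apply.) CPU board technical support: our dedicated CPU board support engineers provide technical support for both hardware and software, before and after adoption. We give comprehensive backing on topics such as CPU board driver software and application development methods, and we also advise on system integration that includes a real-time OS. Framework software development service for CPU boards: building an embedded system around a CPU board requires technical knowledge and experience of the board's hardware and software, and also seamless high-speed data communication with other I/O boards (A/D, D/A, DIO and so on) and integration using a real-time OS. We provide comprehensive support for multi-CPU-board systems built from several boards and for real-time processing of wireless signals such as radar, sonar and mobile communications. We also help develop "framework software" that supports the overall data path. By outsourcing the development of the "framework software" to us, users can concentrate on developing application software and FPGAs. (Depending on the request, a separate fee may apply.) CPU boards with Intel processors. Table columns: model, board type, processor, CPU speed (max), memory, NVRAM, Ethernet, expansion mezzanine, USB 2.0, environmental specification. CPU boards with Intel Core i7 (Ivy Bridge) and Xeon (8 core) processors: HDS6601, 6U VPX, Xeon E5-2648L x 2 (Sandy Bridge), 1.8GHz, 32GB DDR3-, 8MB NOR, 1000BASE-T x 1, -, 3, Level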

Wind Rose Data Comes in the Form >200,000 Wind Rose Images

Making Wind Speed and Direction Maps. Rich Stromberg, Alaska Energy Authority, [email protected]/907-771-3053. 6/30/2011, Wind Direction Maps. Wind rose data comes in the form of >200,000 wind rose images across Alaska. Wind rose data is quantified in very large Excel™ spreadsheets for each region of the state. Fields: X Y X_1 Y_1 FILE FREQ1 FREQ2 FREQ3 FREQ4 FREQ5 FREQ6 FREQ7 FREQ8 FREQ9 FREQ10 FREQ11 FREQ12 FREQ13 FREQ14 FREQ15 FREQ16 SPEED1 SPEED2 SPEED3 SPEED4 SPEED5 SPEED6 SPEED7 SPEED8 SPEED9 SPEED10 SPEED11 SPEED12 SPEED13 SPEED14 SPEED15 SPEED16 POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 WEIBC1 WEIBC2 WEIBC3 WEIBC4 WEIBC5 WEIBC6 WEIBC7 WEIBC8 WEIBC9 WEIBC10 WEIBC11 WEIBC12 WEIBC13 WEIBC14 WEIBC15 WEIBC16 WEIBK1 WEIBK2 WEIBK3 WEIBK4 WEIBK5 WEIBK6 WEIBK7 WEIBK8 WEIBK9 WEIBK10 WEIBK11 WEIBK12 WEIBK13 WEIBK14 WEIBK15 WEIBK16. Data set is thinned down to wind power density. Fields: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16. POWER1 is the wind power density coming from the north (0 degrees), POWER2 is wind power from 22.5 degrees, and so on; POWER9 is south (180 degrees), etc. Spreadsheet calculations. Columns: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 Max Wind Dir Prim 2nd Wind Dir Sec. First row: -132.7365 54.4833 0.643 0.767 1.911 4.083
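The sector numbering in this excerpt (POWER1 at 0 degrees, POWER2 at 22.5 degrees, POWER9 at 180 degrees) can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and reading the "Prim" and "Sec" spreadsheet columns as the bearings of the strongest and second-strongest sectors is an assumption, not something the slides confirm.

```python
from typing import Sequence, Tuple

SECTOR_WIDTH_DEG = 360.0 / 16  # 16 compass sectors of 22.5 degrees each


def sector_bearing(index: int) -> float:
    """Bearing in degrees for a 1-based POWER column index (POWER1 = north)."""
    if not 1 <= index <= 16:
        raise ValueError("sector index must be in 1..16")
    return (index - 1) * SECTOR_WIDTH_DEG


def primary_and_secondary(powers: Sequence[float]) -> Tuple[float, float]:
    """Bearings of the strongest and second-strongest power sectors.

    Assumption: this is how the 'Prim' and 'Sec' columns are derived.
    """
    if len(powers) != 16:
        raise ValueError("expected 16 POWER values")
    # Rank sector positions by power density, strongest first.
    ranked = sorted(range(16), key=lambda i: powers[i], reverse=True)
    return sector_bearing(ranked[0] + 1), sector_bearing(ranked[1] + 1)


# Hypothetical row (not taken from the Alaska data set).
row = [0.0] * 16
row[3] = 5.0   # POWER4
row[8] = 3.0   # POWER9
print(primary_and_secondary(row))
```

With the hypothetical row above, the strongest sector is POWER4 and the runner-up is POWER9, so the two bearings are 67.5 and 180.0 degrees.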

Characterising the Limits of the OpenFlow Slow-Path

Characterising the Limits of the OpenFlow Slow-Path. Richard Sanger, [email protected]; Brad Cowie, [email protected]; Matthew Luckie, [email protected]; Richard Nelson, [email protected]. University of Waikato, New Zealand. 28 November 2018. © THE UNIVERSITY OF WAIKATO • TE WHARE WANANGA O WAIKATO. The Question: How slow is the slow-path? Contents: • Introduction to the Slow-Path • Motivation • Test Suite • Test Methodology • Results • Conclusions. OpenFlow Packet-in and Packet-out: To move packets between the controller and network, packets are encapsulated in OpenFlow packet-in and packet-out messages and sent via the slow-path. The Fast-Path (diagram labels: ASIC, OpenFlow Agent, Ingress, Egress, OpenFlow Switch, Network). The Slow-Path (Packet In) (diagram labels: ASIC, OpenFlow Agent, Packet in, OpenFlow Switch, Network, Control-Plane Network, OpenFlow Application, NIC, Controller). Motivation: Control Traffic Requirements. Control traffic is sensitive to bandwidth and latency. Latency: • Keep-alives • Flow Establishment (Reactive control). Bandwidth: • Initial route exchange (BGP etc.) • Capture (Network debugging) • DoS (Misconfiguration, ICMP, etc.). Motivation: Control Traffic Requirements. Control traffic requirements must be met simultaneously. Example: consider the requirement of link detection probing. Typical Bidirectional Forwarding Detection (BFD) requirements: • < 50ms • 2,880pps (48 port switch). Motivation: Shared Resource. The slow-path is shared with all other OpenFlow messages.

$300 Rebate on the System That Does Everything You Need for School.*

$300 rebate on the system that does everything you need for school.* Customer Survey: On behalf of Apple, we invite you to participate in the following survey. Your opinion is very important to us. All information that you provide will be kept strictly confidential and used only for market research purposes. Survey results are viewed in aggregate; individual responses are not identified. Which Apple computer did you purchase? iBook / PowerBook. If Apple had not offered this promotion at this time, which of the following best describes what you would have done? Delayed purchasing a Mac / Purchased the Mac anyway / Purchased a Windows PC. Terms and Conditions: The following terms and conditions govern this offer: • Order and take possession of qualifying products from June 29, 2003, through September 27, 2003. Products must be purchased from the Apple Store for Education Individuals or a participating Apple Authorized Campus Reseller located in the 50 United States or District of Columbia. • QUALIFYING PRODUCTS: Any Apple PowerBook or iBook portable computer (EXCEPT: M8758LL/A iBook 800MHz/CD-ROM and Z06U iBook CD-ROM Configure-to-Order), any Apple iPod, and any HP DeskJet printer with an MSRP of $99 or higher, any HP Photosmart printer with an MSRP of $149 or higher, or any HP All-in-One product with an MSRP of $149 or higher. • This offer is not valid with the purchase of Apple education promotional bundles, or used, or refurbished equipment. • You must be a qualified Apple Education Individual end-user purchaser (employee, board member, or attendee of a home school or public or private education institution in the 50 United States or District of Columbia), and not a reseller, to obtain this promotional offer.

Caching Basics

Introduction: Why memory subsystem design is important • CPU speeds increase 25%-30% per year • DRAM speeds increase 2%-11% per year. Autumn 2006, CSE P548 - Memory Hierarchy. Memory Hierarchy: Levels of memory with different sizes & speeds • close to the CPU: small, fast access • close to memory: large, slow access. Memory hierarchies improve performance • caches: demand-driven storage • principle of locality of reference: temporal: a referenced word will be referenced again soon; spatial: words near a referenced word will be referenced soon • speed/size trade-off in technology ⇒ fast access for most references. First Cache: IBM 360/85 in the late '60s. Cache Organization: Block: • # bytes associated with 1 tag • usually the # bytes transferred on a memory request. Set: the blocks that can be accessed with the same index bits. Associativity: the number of blocks in a set • direct mapped • set associative • fully associative. Size: # bytes of data. How do you calculate this? Logical Diagram of a Cache. Logical Diagram of a Set-associative Cache. Accessing a Cache: General formulas • number of index bits = log2(cache size / block size) (for a direct mapped cache) • number of index bits = log2(cache size / (block size * associativity)) (for a set-associative cache). Design Tradeoffs: Cache size: the bigger the cache, + the higher the hit ratio
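The two index-bit formulas in this excerpt are easy to check numerically. The following is a minimal sketch in Python, assuming sizes are given in bytes and that the number of sets is a power of two; the function name and the example cache parameters are illustrative, not from the slides.

```python
def index_bits(cache_size: int, block_size: int, associativity: int = 1) -> int:
    """Number of index bits = log2(cache size / (block size * associativity)).

    associativity=1 gives the direct-mapped case from the excerpt.
    """
    bytes_per_set = block_size * associativity
    if cache_size <= 0 or bytes_per_set <= 0 or cache_size % bytes_per_set != 0:
        raise ValueError("cache size must be a positive multiple of block size * associativity")
    num_sets = cache_size // bytes_per_set
    if num_sets & (num_sets - 1) != 0:
        raise ValueError("number of sets must be a power of two")
    # For a power of two, bit_length() - 1 is an exact integer log2.
    return num_sets.bit_length() - 1


# Illustrative 32 KiB cache with 64-byte blocks.
print(index_bits(32 * 1024, 64))        # direct mapped
print(index_bits(32 * 1024, 64, 4))     # 4-way set associative
print(index_bits(32 * 1024, 64, 512))   # fully associative: a single set
```

For the illustrative cache, the direct-mapped organization has 512 sets (9 index bits), the 4-way organization has 128 sets (7 bits), and the fully associative organization has one set and therefore needs no index bits at all.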

Ray Tracing on the Cell Processor

Ray Tracing on the Cell Processor. Carsten Benthin†, Ingo Wald, Michael Scherbaum†, Heiko Friedrich‡. †inTrace Realtime Ray Tracing GmbH; SCI Institute, University of Utah; ‡Saarland University. {benthin, scherbaum}@intrace.com, [email protected], [email protected]. Abstract: Over the last three decades, higher CPU performance has been achieved almost exclusively by raising the CPU's clock rate. Today, the resulting power consumption and heat dissipation threaten to end this trend, and CPU designers are looking for alternative ways of providing more compute power. In particular, they are looking towards three concepts: a streaming compute model, vector-like SIMD units, and multi-core architectures. One particular example of such an architecture is the Cell Broadband Engine Architecture (CBEA), a multi-core processor that offers a raw compute power of up to 200 GFlops per 3.2 GHz chip. The Cell bears a huge potential for compute-intensive applications like ray tracing, but also requires addressing the challenges caused by this processor's unconventional architecture. [...] band") architectures to hide memory latencies, at exposing parallelism through SIMD units, and at multi-core architectures. At least for specialized tasks such as triangle rasterization, these concepts have been proven as very powerful, and have made modern GPUs as fast as they are; for example, a Nvidia 7800 GTX offers 313 GFlops [16], 35 times more than a 2.2 GHz AMD Opteron CPU. Today, there seems to be a convergence between CPUs and GPUs, with GPUs (already using the streaming compute model, SIMD, and multi-core) becoming increasingly programmable, and CPUs getting equipped with more and more cores and streaming functionalities. Most commodity CPUs already offer 2–8 cores [1, 9, 10, 25], and desktop PCs with more cores can be built by stacking and interconnecting smaller multi-core systems (mostly

45-Year CPU Evolution: One Law and Two Equations

45-year CPU evolution: one law and two equations. Daniel Etiemble, LRI-CNRS, University Paris Sud, Orsay, France. [email protected]. Abstract: Moore's law and two equations allow to explain the main trends of CPU evolution since MOS technologies have been used to implement microprocessors. Keywords: Moore's law, execution time, CMOS power dissipation. I. INTRODUCTION. A new era started when MOS technologies were used to build microprocessors. After pMOS (Intel 4004 in 1971) and nMOS (Intel 8080 in 1974), CMOS quickly became the leading technology, used by Intel since 1985 with the 80386 CPU. MOS technologies obey an empirical law, stated in 1965 and known as Moore's law: the number of transistors integrated on a chip doubles every N months. Fig. 1 presents the evolution for DRAM memories, processors (MPU) and three types of read-only memories [1]. The growth rate decreases with years, from [...] a) IC is the instruction count. b) CPI is the clock cycles per instruction and IPC = 1/CPI is the instruction count per clock cycle. c) Tc is the clock cycle time and F = 1/Tc is the clock frequency. The power dissipation of CMOS circuits is the second equation (2). CMOS power dissipation is decomposed into static and dynamic powers. For dynamic power, Vdd is the power supply, F is the clock frequency, ΣCi is the sum of gate and interconnection capacitances and α is the average percentage of switching capacitances: α is the activity factor of the overall circuit. $P_d = P_{dstatic} + \alpha \times \sum C_i \times V_{dd}^2 \times F$ (2). II. CONSEQUENCES OF MOORE LAW. A.
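The quantities defined in this excerpt (IC, CPI, Tc, and the terms of the power equation (2)) can be combined in a short Python sketch. The excerpt cuts off before the first equation, so the execution-time function below uses the classic CPU performance equation (time = IC × CPI × Tc) as an assumption about what the paper's equation (1) states; the function names and all numeric values are hypothetical.

```python
def execution_time(ic: float, cpi: float, tc: float) -> float:
    """Classic CPU performance equation: time = IC * CPI * Tc.

    Assumed to be the excerpt's (elided) first equation.
    """
    return ic * cpi * tc


def cmos_power(p_static: float, alpha: float, c_total: float,
               vdd: float, freq: float) -> float:
    """Equation (2): Pd = Pdstatic + alpha * sum(Ci) * Vdd^2 * F."""
    return p_static + alpha * c_total * vdd ** 2 * freq


# Hypothetical workload: 1e9 instructions, CPI of 2, 1 GHz clock (Tc = 1 ns).
print(execution_time(1e9, 2.0, 1e-9))

# Hypothetical circuit: halving Vdd cuts the dynamic term by a factor of four.
full = cmos_power(0.0, 0.1, 1e-9, 1.0, 1e9)
half = cmos_power(0.0, 0.1, 1e-9, 0.5, 1e9)
print(full / half)
```

The quadratic dependence on Vdd is the reason supply-voltage scaling has such a strong effect on dynamic power in this model.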

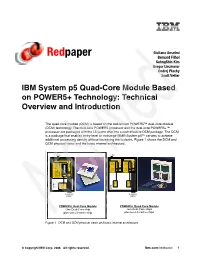

IBM System P5 Quad-Core Module Based on POWER5+ Technology: Technical Overview and Introduction

Redpaper. Giuliano Anselmi, Bernard Filhol, SahngShin Kim, Gregor Linzmeier, Ondrej Plachy, Scott Vetter. IBM System p5 Quad-Core Module Based on POWER5+ Technology: Technical Overview and Introduction. The quad-core module (QCM) is based on the well-known POWER5™ dual-core module (DCM) technology. The dual-core POWER5 processor and the dual-core POWER5+™ processor are packaged with the L3 cache chip into a cost-effective DCM package. The QCM is a package that enables entry-level or midrange IBM® System p5™ servers to achieve additional processing density without increasing the footprint. Figure 1 shows the DCM and QCM physical views and the basic internal architecture. [Figure 1: DCM and QCM physical views and basic internal architecture. Panels: POWER5+ Dual-Core Module (one dual-core chip plus one L3-cache chip); POWER5+ Quad-Core Module (two dual-core chips plus two L3-cache chips).] © Copyright IBM Corp. 2006.

Power4 Focuses on Memory Bandwidth IBM Confronts IA-64, Says ISA Not Important

MICROPROCESSOR REPORT: THE INSIDERS' GUIDE TO MICROPROCESSOR HARDWARE. VOLUME 13, NUMBER 13, OCTOBER 6, 1999. Power4 Focuses on Memory Bandwidth: IBM Confronts IA-64, Says ISA Not Important. By Keith Diefendorff. Not content to wrap sheet metal around Intel microprocessors for its future server business, IBM is developing a processor it hopes will fend off the IA-64 juggernaut. Speaking at this week's Microprocessor Forum, chief architect Jim Kahle described IBM's monster 170-million-transistor Power4 chip, which boasts two 64-bit 1-GHz five-issue superscalar cores, a triple-level cache hierarchy, a 10-GByte/s main-memory interface, and a 45-GByte/s multiprocessor interface, as Figure 1 shows. Kahle said that IBM will see first silicon on Power4 in 1Q00, and systems will begin shipping in 2H01. No Holds Barred: [...] company has decided to make a last-gasp effort to retain control of its high-end server silicon by throwing its considerable financial and technical weight behind Power4. After investing this much effort in Power4, if IBM fails to deliver a server processor with compelling advantages over the best IA-64 processors, it will be left with little alternative but to capitulate. If Power4 fails, it will also be a clear indication to Sun, Compaq, and others that are bucking IA-64, that the days of proprietary CPUs are numbered. But IBM intends to resist mightily, and, based on what the company has disclosed about Power4 so far, it may just succeed. Looking for Parallelism in All the Right Places: With Power4, IBM is targeting the high-reliability servers that will power future e-businesses.

Hierarchical Roofline Analysis for GPUs: Accelerating Performance

Hierarchical Roofline Analysis for GPUs: Accelerating Performance Optimization for the NERSC-9 Perlmutter System. Charlene Yang, Thorsten Kurth, National Energy Research Scientific Computing Center, Lawrence Berkeley National Laboratory, Berkeley, CA 94720, USA; Samuel Williams, Computational Research Division, Lawrence Berkeley National Laboratory, Berkeley, CA 94720, USA. fcjyang, [email protected] [email protected]. Abstract: The Roofline performance model provides an intuitive and insightful approach to identifying performance bottlenecks and guiding performance optimization. In preparation for the next-generation supercomputer Perlmutter at NERSC, this paper presents a methodology to construct a hierarchical Roofline on NVIDIA GPUs and extend it to support reduced precision and Tensor Cores. The hierarchical Roofline incorporates L1, L2, device memory and system memory bandwidths into one single figure, and it offers more profound insights into performance analysis than the traditional DRAM-only Roofline. We use our Roofline methodology to analyze three proxy applications: GPP from BerkeleyGW, HPGMG from AMReX, and conv2d from TensorFlow. In so doing, we demonstrate the ability of our methodology to readily understand various aspects of performance and performance bottlenecks on NVIDIA GPUs and motivate code optimizations. [...] Performance (GFLOP/s) is bound by: $\text{GFLOP/s} \le \min(\text{Peak GFLOP/s},\ \text{Peak GB/s} \times \text{Arithmetic Intensity})$ (1), which produces the traditional Roofline formulation when plotted on a log-log plot. Previously, the Roofline model was expanded to support the full memory hierarchy [2], [3] by adding additional bandwidth "ceilings". Similarly, additional ceilings beneath the Roofline can be added to represent performance bottlenecks arising from lack of vectorization or the failure to exploit fused multiply-add (FMA) instructions.
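The Roofline bound in this excerpt (attainable GFLOP/s is the minimum of peak GFLOP/s and peak GB/s times arithmetic intensity) can be sketched in Python, with one bandwidth ceiling per memory level for the hierarchical variant. The function names are illustrative and every peak figure below is a made-up placeholder, not a measured value for any GPU.

```python
from typing import Dict


def roofline_bound(peak_gflops: float, peak_gbs: float,
                   arithmetic_intensity: float) -> float:
    """Attainable GFLOP/s = min(peak GFLOP/s, peak GB/s * FLOPs per byte)."""
    return min(peak_gflops, peak_gbs * arithmetic_intensity)


def hierarchical_bounds(peak_gflops: float, bandwidths_gbs: Dict[str, float],
                        intensities: Dict[str, float]) -> Dict[str, float]:
    """One Roofline bound per memory level, each level with its own
    bandwidth ceiling and its own arithmetic intensity."""
    return {
        level: roofline_bound(peak_gflops, bw, intensities[level])
        for level, bw in bandwidths_gbs.items()
    }


# Placeholder machine and kernel characteristics (hypothetical numbers).
peak = 7000.0
bandwidths = {"L1": 14000.0, "L2": 3000.0, "HBM": 900.0}
intensities = {"L1": 0.25, "L2": 1.0, "HBM": 4.0}

print(roofline_bound(peak, 900.0, 1.0))    # bandwidth bound
print(roofline_bound(peak, 900.0, 100.0))  # compute bound
print(hierarchical_bounds(peak, bandwidths, intensities))
```

The level with the lowest bound is the one most likely to limit the kernel, which is the kind of reading the hierarchical figure is meant to support.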

iBook, PowerBook & MacBook Keycap Removal & Fitting

iBook, PowerBook & MacBook keycap removal & fitting. Although there are a number of keyboard variants, for illustration purposes we have used the most common type. Please note that certain keys on the keyboard vary in terms of size and orientation. For F-keys and arrow keys, the scissor clip is positioned sideways, i.e. the top in the diagram below is on the left-hand side. This also applies to all keys on MacBook 13" keyboards, where the scissor clips are also transverse. The most important thing to know when removing or replacing a keycap from a laptop keyboard is how the key attaches to the keyboard, which is very difficult to see when the key cap is in place. I know it's boring, but bear with us; it's worth knowing! The key cap is fixed to the aluminium backplate of the keyboard with a two-part, plastic scissor clip. The diagram shows a top view of the scissor clip on the left and a side view of how the key & scissor clip fix to the keyboard backplate on the right. [Diagram labels: Top, Scissor Clip, Key Cap, Keyboard Backplate.] The scissor clip fixes to the backplate at three points. A single tab hooks onto the bottom of the scissor clip at point D and the two lugs on the scissor clip fit into the holes on two hoops on the base plate (see A). The key cap fixes to the scissor clip at four points. Two lugs on the scissor clip slide into hooks at the bottom of the key cap (see B) and the two lugs at the top of the scissor clip snap into a pair of very delicate pincer clips (see C).

POWER® Processor-Based Systems

IBM® Power® Systems RAS Introduction to IBM® Power® Reliability, Availability, and Serviceability for POWER9® processor-based systems using IBM PowerVM™ With Updates covering the latest 4+ Socket Power10 processor-based systems IBM Systems Group Daniel Henderson, Irving Baysah Trademarks, Copyrights, Notices and Acknowledgements Trademarks IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both: Active AIX® POWER® POWER Power Power Systems Memory™ Hypervisor™ Systems™ Software™ Power® POWER POWER7 POWER8™ POWER® PowerLinux™ 7® +™ POWER® PowerHA® POWER6 ® PowerVM System System PowerVC™ POWER Power Architecture™ ® x® z® Hypervisor™ Additional Trademarks may be identified in the body of this document. Other company, product, or service names may be trademarks or service marks of others. Notices The last page of this document contains copyright information, important notices, and other information. Acknowledgements While this whitepaper has two principal authors/editors it is the culmination of the work of a number of different subject matter experts within IBM who contributed ideas, detailed technical information, and the occasional photograph and section of description.