Efficient and Fast Multi-View Face Detection Based on Feature Transformation

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Published 22 February 2019 LKFF 2012

THE LONDON KOREAN FILM FESTIVAL 제7회 런던한국영화제 1-16 NoVEMBER StARTING IN LONDON AND ON TOUR IN BOURNEMOUTH, GlASGOW AND BRISTOL 12�OZE�293 OZ Quadra Smartium(Film Festival).pdf 1 10/18/12 6:14 PM A MESSAGEUR ROM O TOR LONDON-SEOUL, DAILY F TIC DIREC ASIANA AIRLINES ARTIS is redefining It is with great pride and honour that I welcome you to the 7th London Korean Film Festival. Regardless of whether you are a connoisseur of Korean cinema or completely Business Class. new to the country’s film scene, we have created an exciting and varied C Beginning November 17th, all the comforts of programme that will delight, thrill, scare and, most importantly, entertain you. M our new premium business class seat, Y the OZ Quadra Smartium, can be yours. We start off large with our return to the Odeon West End with one of the CM There’s a wonderful new way to get back and biggest Korean films in the last ten years;The Thieves. Our presentation MY forth to Seoul everyday. Announcing Asiana Airlines’ innovative of this exciting crime caper also sees its director, Choi Dong-hoon, and new premium business class seat, the OZ Quadra Smartium, lead actor Kim Yoon-suk gracing London’s Leicester Square. CY offering you both the privacy of your own space CMY and the relaxation of a full-flat bed. From the 2nd of November through to the 10th in London (continuing until the 16th in K Glasgow, Bristol and Bournemouth) we will show everything from the big box office hits to the smallest of independents, ending with the much-lauded Masquerade, as our closing Gala feature. -

Myongji University, Seoul

Myongji University, Seoul Guideline for 2013 Fall Semester Exchange Student Application How to Apply to Myongji University 1. Application Deadline : 31(Fri) May, 2013 2. Send Applications To: Myongji University (Seoul Campus) HaengJeongDong 2F Office of International Affairs (Rm. 5217) ※ To. Ms Young Hye Lee, Officer ① Address: 34 Geobukgol-ro, Seodaemun-gu, Seoul, Korea (Zip Code : 120-728) ② Contact: ☏ (+82) 2 - 300 - 1514 (E-mail: [email protected]) ※Please mail your applications to the above address! 4. Required Documents : **Refer to the Guideline (p. 4) Exchange Student Program - Program Overview / Qualifications / Application Schedule Program Overview A foreign exchange student is a student who studies at Myongji University for one semester or 1 academic year after being selected by his/her home university in accordance with the terms and conditions specified in a valid partnership agreement(MOU) signed with Myongji University. During their study at Myongji University, foreign exchange students: - Are exempt from the tuition of Myongji University after paying the tuition of their home universities. - Attend orientation for a comprehensive briefing on every aspect of life at Myongji University. - Attend regular classes conducted in Korean or English to obtain credits. - Can attend a Korean Language Course depending on their Korean Lv. without tuition payment. - Attend special cultural events including Korean culture field trip. - Make Korean Friends through the International Outreach Student Club(어우라미) and mentors in their department. After the end of their study at Myongji University, Myongji University will send academic transcripts to the home universities for credit transfer. Qualifications You must meet the following requirements to apply for the exchange student program with Myongji University - You need to pass the selection process of your home university for this program. -

2008년도 한국미생물학회연합 국제학술대회 2008 International Meeting of the Federation of Korean Microbiological Societies October 16-17, 2008 Seoul, Korea

2008년도 한국미생물학회연합 국제학술대회 2008 International Meeting of the Federation of Korean Microbiological Societies October 16-17, 2008 Seoul, Korea Contents Timetable / 일정표 1 Floor Plan / 행사장 안내도 3 Scientific Programs / 학술프로그램 Plenary Lectures / 기조강연 5 Hantaan Prize Award Lecture / 한탄상 수상자 강연 6 Symposia / 심포지아 7 Colloquium / 콜로퀴움 15 Workshop / 워크샵 16 Public Hearing on the Future Research and 17 Development of Microbial Technology Poster Sessions / 포스터세션 18 Scientific Programs of the 9th KJISM 78 Poster Sessions / 포스터세션 81 Author Index / 저자색인 90 Exhibition / 전시회 108 Timetable / 일정표 October 16 (Thursday) Geomungo Hall Daegeum Hall Hall A Hall B Hall C 08:00-09:00 09:00-09:15 Opening Ceremony and Ceremony of Awarding Hantaan Prize (Hall C) S1 S2 S3 S4 09:15-11:15 Strategies of Pathogens Microbial Diversity and Viral Pathogenesis Fungal Bioscience for the Survival in Host Application 11:15-12:00 Plenary Lecture 1 (Hall C) 12:00-12:30 Hantaan Prize Award Lecture (Hall C) 12:30-13:30 Lunch Registration 13:30-14:30 MSK GM KSMy GM 14:30-15:15 Plenary Lecture 2 (Hall B) Poster Session 1 & Exhibition S5 S7 S6 S8 15:15-17:15 Emerging Trends Viral Replication and Regulation of Gene Expression Mushroom Science in Enzyme Screening Gene Expression 17:15-18:00 Plenary Lecture 3 (Hall A) 18:10-18:55 Plenary Lecture 4 (Hall A) 19:00-20:30 Welcome Reception (Hall B+C) October 17 (Friday) Geomungo Hall Daegeum Hall Hall A Hall B Hall C 08:00-09:00 S9 KJS1 S11 S10 09:00-11:00 New Insights into Starter Cultures Microbial Genomics and Emergence of New and Immune System -

K O R E a N C in E M a 2 0

KOREAN CINEMA 2006 www.kofic.or.kr/english Korean Cinema 2006 Contents FOREWORD 04 KOREAN FILMS IN 2006 AND 2007 05 Acknowledgements KOREAN FILM COUNCIL 12 PUBLISHER FEATURE FILMS AN Cheong-sook Fiction 22 Chairperson Korean Film Council Documentary 294 206-46, Cheongnyangni-dong, Dongdaemun-gu, Seoul, Korea 130-010 Animation 336 EDITOR-IN-CHIEF Daniel D. H. PARK Director of International Promotion SHORT FILMS Fiction 344 EDITORS Documentary 431 JUNG Hyun-chang, YANG You-jeong Animation 436 COLLABORATORS Darcy Paquet, Earl Jackson, KANG Byung-woon FILMS IN PRODUCTION CONTRIBUTING WRITER Fiction 470 LEE Jong-do Film image, stills and part of film information are provided by directors, producers, production & sales companies, and Film Festivals in Korea including JIFF (Jeonju International Film Festival), PIFF APPENDIX (Pusan International Film Festival), SIFF (Seoul Independent Film Festival), Women’s Film Festival Statistics 494 in Seoul, Puchon International Fantastic Film Festival, Seoul International Youth Film Festival, Index of 2006 films 502 Asiana International Short Film Festival, and Experimental Film and Video Festival in Seoul. KOFIC appreciates their help and cooperation. Contacts 517 © Korean Film Council 2006 Foreword For the Korean film industry, the year 2006 began with LEE Joon-ik's <King and the Clown> - The Korean Film Council is striving to secure the continuous growth of Korean cinema and to released at the end of 2005 - and expanded with BONG Joon-ho's <The Host> in July. First, <King provide steadfast support to Korean filmmakers. This year, new projects of note include new and the Clown> broke the all-time box office record set by <Taegukgi> in 2004, attracting a record international support programs such as the ‘Filmmakers Development Lab’ and the ‘Business R&D breaking 12 million viewers at the box office over a three month run. -

MYONGJI UNIVERSITY, SEOUL Conditions Specified in the Valid Partnership Agreement (MOU) Signed with MJU

Exchange Program - Program Overview / Qualifications / Application Schedule Program Overview An international exchange student is a student who studies at Myongji University (MJU) for one semester or one academic year after being nominated by his/her home university in accordance with the terms and MYONGJI UNIVERSITY, SEOUL conditions specified in the valid partnership agreement (MOU) signed with MJU. Guideline for 2017-2018 Exchange Program During their study at MJU, international exchange students are: - Exempted from paying tuition to MJU after paying the tuition fee to their home universities. - Provided with a comprehensive orientation & closing ceremonies which includes academic details and life in MJU. - Permitted to taking regular classes conducted in Korean or English to obtain credits. - Able to participate in special cultural events including Korean cultural field trips with minimum charge. - Supported by MJU’s International Outreach Student Club (OULAMI) and mentors in their departments to easily adapt to MJU life and culture and make Korean and international friends. - After the study at MJU, official academic transcripts will be sent to the home universities for credit transfer. - Participate in special summer program with tuition discount. - Take intensive Korean language courses at the Korean Language Institute (KLI) with 50% tuition waiver. Qualifications Students must meet the following requirements to apply for the international exchange student program in MJU: - Need to be nominated by the home university to apply for the program. - Need to have finished at least one or more semester at the home university. - Must have a good command of Korean or English or an interest in Korean language and culture. -

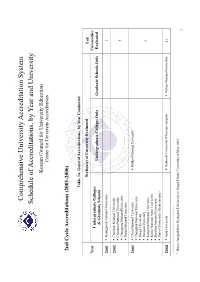

Schedule of Accreditations, by Year and University

Comprehensive University Accreditation System Schedule of Accreditations, by Year and University Korean Council for University Education Center for University Accreditation 2nd Cycle Accreditations (2001-2006) Table 1a: General Accreditations, by Year Conducted Section(s) of University Evaluated # of Year Universities Undergraduate Colleges Undergraduate Colleges Only Graduate Schools Only Evaluated & Graduate Schools 2001 Kyungpook National University 1 2002 Chonbuk National University Chonnam National University 4 Chungnam National University Pusan National University 2003 Cheju National University Mokpo National University Chungbuk National University Daegu University Daejeon University 9 Kangwon National University Korea National Sport University Sunchon National University Yonsei University (Seoul campus) 2004 Ajou University Dankook University (Cheonan campus) Mokpo National University 41 1 Name changed from Kyungsan University to Daegu Haany University in May 2003. 1 Andong National University Hanyang University (Ansan campus) Catholic University of Daegu Yonsei University (Wonju campus) Catholic University of Korea Changwon National University Chosun University Daegu Haany University1 Dankook University (Seoul campus) Dong-A University Dong-eui University Dongseo University Ewha Womans University Gyeongsang National University Hallym University Hanshin University Hansung University Hanyang University Hoseo University Inha University Inje University Jeonju University Konkuk University Korea -

UNDERGRADUATE PROGRAMS of MYONGJI UNIVERSITY Address: 34 Geobukgol-Ro, Seodaemun-Gu, Seoul, Korea (120-728) / Tel

UNDERGRADUATE PROGRAMS OF MYONGJI UNIVERSITY Address: 34 Geobukgol-ro, Seodaemun-gu, Seoul, Korea (120-728) / Tel. (+82) 2 300 1514 / Fax. (+82) 2 300 1516 Campus (Location) College Unit (Division or Department) Major Korean Language and Literature Korean Language and Literature Chinese Language and Literature Chinese Language and Literature Japanese Language and Literature Japanese Language and Literature English Language and Literature English Language and Literature Humanities History History Library and Information Science Library and Information Science Art History Art History Arabic Studies Arabic Studies Philosophy Philosophy Seoul Campus Public Administration Public Administration Economics Economics Political Science and Diplomacy Political Science and Diplomacy Social Science Digital Media Digital Media (Mass Media) Child Development and Education Child Development and Education Youth Education and Leadership Youth Education and Leadership Business Administration Business Administration Business International Business and Trade International Business and Trade Administration Management Information System Management Information System (MIS) Law Law Law Mathematics Mathematics Physics Physics Natural Chemistry Chemistry Science Food and Nutrition Food and Nutrition Division of Bioscience and Bio-Informatics Bioscience / Bio-Informatics Electrical Engineering Electrical Engineering Electronics Engineering Electronics Engineering Information Engineering Information Engineering Communication Engineering Communication Engineering -

Korea and the World Economy Vol

JKE 표지(21-3) 1907.9.20 5:52 PM 페이지1 mac1 Korea and the World Economy Korea and the World Vol. 21, No. 3 December 2020 / ISSN 2234-2346 Korea and the World Economy Articles Vol. 21, No. 3 December 2020 Information Stickiness and Monetary Policy on the Great Moderation BYEONGDEUK JANG Safety Nearby and Financial Welfare: Common Barriers to Safer Neighborhood and Financial Welfare NA YOUNG PARK Real Exchange Rate Dynamics under Alternative Approaches to Expectations YOUNG SE KIMŤJIYUN KIM AKES JKE 표지(21-3) 1907.9.20 5:52 PM 페이지2 mac1 Korea and the World Economy President and Members of Council The Association of Korean Economic Studies The Association of Korean Economic Studies http://www.akes.or.kr http://www.akes.or.kr EDITOR YOUNG SE KIM, Sungkyunkwan University, Korea President EDITORIAL ADVISORY BOARD CHONG-GAK SHIN, Korea Employment Information Service JOSHUA AIZENMAN, University of Southern California, USA ALICE H. AMSDEN, Massachusetts Institute of Technology, USA YONGSUNG CHANG, Seoul National University, Korea Honorary Presidents KYONGWOOK CHOI, University of Seoul, Korea KI TAE KIM, Sungkyunkwan University SUN EAE CHUN, Chung-Ang University, Korea CHARLES HARVIE, University of Wollongong, Australia HEE YHON SONG, Korea Development Institute HYEON-SEUNG HUH, Yonsei University, Korea HIROMITSU ISHI, Hitotsubashi University, Japan JONG WON LEE, Sungkyunkwan University JINILL KIM, Korea University, Korea SEONG TAE RO, Woori Bank FUKUNARI KIMURA, Keio University, Japan JONGWON LEE, Sungkyunkwan University, Korea CHUNG MO KOO, Kangwon National University KEUN LEE, Seoul National University, Korea EUI-SOON SHIN, Yonsei University YEONHO LEE, Chungbuk National University, Korea PETER J. LLOYD, University of Melbourne, Australia JEONG HO HAHM, Incheon National University M. -

Layer-By-Layer Assembled Graphene Multilayers on Multidimensional Surfaces for Highly Durable, Scalable, and Wearable Triboelect

Electronic Supplementary Material (ESI) for Journal of Materials Chemistry A. This journal is © The Royal Society of Chemistry 2018 Supporting Information Layer-by-layer assembled graphene multilayers on multidimensional surfaces for highly durable, scalable, and wearable triboelectric nanogenerators Il Jun Chung,†a Wook Kim,†b Wonjun Jang,a Hyun-Woo Park,c Ahrum Sohn,d Kwun-Bum Chung,c Dong-Wook Kim,d Dukhyun Choi*b and Yong Tae Park*a aDepartment of Mechanical Engineering, Myongji University, 116 Myongji-ro, Cheoin-gu, Yongin, Gyeonggi-do, 17058, Republic of Korea Email: [email protected] bDepartment of Mechanical Engineering, Kyung Hee University, 1732 Deogyeong-daero, Giheung-gu, Yongin, Gyeonggi-do, 17104, Republic of Korea Email: [email protected] cDivision of Physics and Semiconductor Science, Dongguk University, 30, Pildong-ro 1-gil, Jung-gu, Seoul, 04620, Republic of Korea dDepartment of Physics, Ewha Womans University, 52, Ewhayeodae-gil, Seodaemun-gu, Seoul, 03760, Republic of Korea Fig. S1 Power generation mechanism of G-TENGs. a–d) Potential distribution of the G- TENG simulated by the COMSOL multi-physics software. Fig. S2 FE-SEM images of (a) the top surface of a bare (flat) PET substrate, (b) the top surface of an NaOH-etched (undulated) PET substrate, and (c) an NaOH-etched PET substrate tilted about 30 degrees. Fig. S3 (a) Visible light absorbance spectra of the LbL-assembled graphene films (1 to 10 BLs, wavelength = 450-850 nm). (b) Dependence of the light absorbance at 550 nm wavelength of the LbL-assembled graphene films (1 to 10 BLs) on the number of bilayers. -

Freshwater Viral Metagenome Reveals Novel and Functional Phage-Borne Antibiotic Resistance Genes

Freshwater Viral Metagenome Reveals Novel and Functional Phage-borne Antibiotic Resistance Genes Kira Moon Inha University Ilnam Kang Inha University Kwang Seung Park Myongji University Jeong Ho Jeon Myongji University Kihyun lee Chung-Ang University Chang-Jun Cha Chung-Ang University Sang Hee Lee ( [email protected] ) Myongji University Jang-Cheon Cho ( [email protected] ) Inha University https://orcid.org/0000-0002-0666-3791 Research Keywords: Bacteriophage, Viral metagenome, Virome, Antibiotic resistance gene, Minimum inhibitory concentration, β-lactamase, River Posted Date: December 5th, 2019 DOI: https://doi.org/10.21203/rs.2.18207/v1 License: This work is licensed under a Creative Commons Attribution 4.0 International License. Read Full License Version of Record: A version of this preprint was published on June 1st, 2020. See the published version at https://doi.org/10.1186/s40168-020-00863-4. Page 1/25 Abstract Background Antibiotic resistance developed by bacteria is a signicant threat to global health. Antibiotic resistance genes (ARGs) spread across different bacterial populations through multiple dissemination routes, including horizontal gene transfer mediated by bacteriophages. ARGs carried by bacteriophages are considered especially threatening due to their prolonged persistence in the environment, fast replication rates, and ability to infect phylogenetically remote bacterial hosts. Several studies employing qPCR and viral metagenomics have shown that viral fraction and viral sequence reads in clinical and environmental samples carry many ARGs. However, only a few ARGs have been found in viral contigs assembled from metagenome reads, with most of these genes lacking effective antibiotic resistance phenotypes. Owing to the wide application of viral metagenomics, nevertheless, different classes of ARGs are being continuously found in viral metagenomes acquired from diverse environments. -

Study Locally, Succeed Globally

STUDY LOCALLY, SUCCEED GLOBALLY GLOBAL MOBILITY PROGRAMME THAT YOU CAN PARTICIPATE GLOBAL EXCHANGE PARTNERS STUDENT EXCHANGE SHORT-TERM MOBILITY Undergraduates can participate in All students will have the opportunity a student exchange programme and to participate in short mobility spend one semester abroad at partner programmes (2 weeks) at partner 112 PARTNERS IN 66 PARTNERS IN university by paying local tuition fees. universities. 18 COUNTRIES 9 COUNTRIES 9 PARTNERS IN 11 PARTNERS IN 3 COUNTRIES 2 COUNTRIES SOUTH AMERICA 2 PARTNERS IN 2 COUNTRIES Students will have the opportunity to study in one of the 200 universities worldwide TAYLOR’S UNIVERSITY STUDENT EXCHANGE PROGRAMME COST OF LIVING IN COUNTRIES OF OUR PARTNER UNIVERSITIES *Note: Estimated cost only includes accommodation, food and general living expenses for the month. NO. COUNTRY ESTIMATED COST OF LIVING PER MONTH (RM) 1 Argentina 4500 – 6000 2 Australia 5000 – 8000 3 Austria 2500 – 4000 4 Belgium 2500 – 3000 5 Canada 5500 – 8000 6 China 2600 – 5000 7 Czech Republic 2000 – 3500 8 Denmark 3000 – 4000 9 Finland 2700 – 3200 10 France 3000 – 5000 11 Germany 3000 – 5000 12 Hong Kong SAR, China 2500 – 3300 13 Indonesia 2000 – 3500 14 Italy 3000 – 3400 15 Japan 3000 – 4500 16 Korea 2500 – 3000 17 Lithuania 1400 – 3000 18 Mexico 2000 – 2500 Taylor’s University Student Exchange Programme is a vital component to enhance the student’s international 19 Netherlands 3000 – 4500 perspective and provide an opportunity for students to benefit from international ideas, viewpoints and knowledge. 20 New Zealand 6000 – 8000 21 Norway 3500 – 5200 Essentially, Taylor’s University is committed to preparing our students for a future in which they will be global 22 Peru 2500 – 3300 citizens. -

Opportunities, Challenges, and Directions: Celebrating 40 Years of ALAK

1 2018 ALAK International Conference Opportunities, Challenges, and Directions: Celebrating 40 years of ALAK October 13, 2018 Venue: Sogang University, Seoul, KOREA Jeong Hasang Hall Organized by Applied Linguistics Association of Korea Hosted by Sogang University 2 2018 ALAK International Conference Organizing Committee Conference Chair Yo-An Lee (Sogang University) Program Chairs Jae-Eun Park (Kangnam University) Tae Youn Ahn (Korea National Sport University) Proceedings Chair Kwanghyun Park (Myongji University) Site Chair Eun Sung Park (Sogang University) International Affairs Chair Sun-Young Lee (Cyber Hankuk University of Foreign Studies) General Affairs Chair Soondo Baek (Kookmin University) Financial Affairs Chair Seonmin Park (Korea Advanced Institute of Science and Technology) Technology Chair Deayeon Nam (Ulsan National Institute of Science and Technology) Accommodation Chair Jungmin Ko (Sungshin Women's University) Fund Raising Chair Bo Young Lee (Ewha Womans University) Kyungjin Joo (Sungkyunkwan University) 3 About the Applied Linguistics Association of Korea (ALAK) The Applied Linguistics Association of Korea (ALAK) is the national organization for applied linguistics in Korea. ALAK aims to provide leadership in applied linguistics and supports the development of teaching, learning and research in the field. ALAK includes national and international members and is affiliated with the International Association of Applied Linguistics (Association Internationale de Linguistique Appliquée, AILA). Members use a variety of theoretical frameworks and methodological approaches to address a wide range of language-related issues that affect individuals and society. The mission of ALAK is to facilitate the advancement and dissemination of knowledge regarding these language-related issues in order to improve the lives of individuals and conditions in society.