Aalborg Universitet: An Analysis of the GTZAN Music Genre Dataset (Sturm)

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Talking Heads -.:: GEOCITIES.Ws

Talking Heads / David Byrne / A+ A B+ B C D updated: 09/06/2005 Tom Tom Club / Jerry Harrison / ARTISTS = Artists I´m always looking to trade for new shows not on my list :-) The Heads

DVD+R for trade: I´ll trade 1 DVD+R for 2 CDRs if you haven´t a DVD I´m interested in and you would still like to trade for a DVD+R.

Before you contact me, here are the simple rules of my trading policy:

- No trading of officially released CDs, only bootleg recordings
- Please use decent blanks (TDK, Memorex)
- I do not sell any bootlegs or copies of them from my list. Do not ask!!
- I send CDRs only, no tapes. Trading only !!! ;-)
- I don't do blanks & postage or 2 for 1's; fair trading only (CD for a CD or CD for a tape)
- Pack well, ship airmail
- CDs should be burned DAO, without 2-second gaps between the tracks
- Don´t send jewel cases; use plastic or paper pockets instead. I´ll do the same
- For set lists or quality, please ask
- No writing on the disks, please
- Please include a set list or any venue info. I´ll do the same
- Keep contact open during the trade

To work out a trade please contact me: [email protected]

TERRY ALLEN Stubbs, Austin, TX 1999/03/21 Audience Recording (feat. David Byrne) CD Amarillo Highway / Wilderness Of This World / New Delhi Freight Train / Cortez Sail / Buck Naked (w/ David Byrne) / Bloodlines I / Gimme A Ride To Heaven Boy

DAVID BYRNE In The Pink Special --/--/-- TV-broadcast / documentary DVD+R Interviews & music excerpts TV-Show Appearances 1990 - 2004 TV-broadcasts (quality differs on each part) DVD+R I. -

Ally Anticipated, Molly Remained Undisclosed

Hatchet loses $15,000

by Sherri Conyers

Playing to a crowd one third the size originally anticipated, Molly Hatchet and Trigger Happy belted out their visions of rock and roll, in the first Student Government Association (SGA) sponsored concert in four years.

Both SGA Concert Coordinator Jon Pinder and SGA President Terry Nolan were dissatisfied with the relatively low attendance figures. According to Nolan, the "turnstile figure" of the number of paying guests was 956.

Although at press time Molly Hatchet's share of ticket receipts remained undisclosed, reliable figures indicate that the SGA has lost approximately fifteen thousand dollars on the concert. Pinder emphasized that the concert was a social and logistic, if not a financial, success, calling the event "the best put-together concert I've been involved in, in ten years in the business."

Apparently the small turnout was due to the short advertising and sales lead time available to Pinder and his staff. A contract dispute between Molly Hatchet's agents and the

with flashlights, searching back packs and bags for forbidden beverages, and depositing their discoveries in garbage cans which were soon overflowing with contraband.

"Everybody was geared up for problems" with the concert, said Pinder, who felt there was "too much" security: "security should be substantial but not excessive ... you don't need that at a concert nobody was going to do anything." Pinder is hopeful that at future UMBC concerts "security won't be a problem anymore .."

Photo by B. Molly Hatchet brought 956 rock 'n rollers to the field house Saturday night -

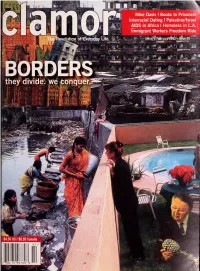

Clamor Magazine Using Our Online Clamor Is Also Available Through These Fine Distribution Outlets: AK Press, Armadillo, Desert Survey At

AIDS in Africa | Homeless in L.A. | Immigrant Workers Freedom Ride. The revolution of everyday life. January/February, Issue 30. They divide, we conquer. $4.50 US / $6.95 Canada

NEW ALBUMS JANUARY 25 I'M WIDE AWAKE, IT'S MORNING DIGITAL ASH IN A DIGITAL URN K. OUT NOW LUA CD SINGLE from I'M WIDE AWAKE, IT'S MORNING TAKE IT EASY (LOVE NOTHING) CD SINGLE from DIGITAL ASH IN A DIGITAL URN SGN.AMBULANCE THE FAINT THE GOOD LIFE BEEP BEEP L KEY WET FROM BIRTH ALBUM OF THE YEAR BUSINESS CASUAL WWW.SADDLE-CREEK.COM | [email protected]

the revolution of everyday life, delivered to your door. subscribe now. $18 for one year at www.clamormagazine.org or at PO Box 20128, Toledo, Ohio 43610

EDITORS/PUBLISHERS Jen Angel & Jason Kucsma CONSULTING EDITOR Jason Kucsma Joshua Breitbart WEB DESIGN CULTURE EDITOR Derek Hogue Eric Zassenhaus VOLUNTEERS ECONOMICS EDITOR Mike McHone, Arthur Stamoulis Jake Whiteman MEDIA EDITOR PROOFREADERS

from your editors

In November after the election, I had the fortune to sit next to an Israeli man named Gadi on a flight from Cleveland to Detroit. He was on his way to Europe and then home, and I was on my way to my sister's house in Wisconsin.

Our conversation, which touched on everything from Bush and Kerry to Iraq to the security wall being built by Israel, gave me the opportunity to reflect on world politics from an outsider's perspective. -

EAST COASTINGS LITTLE FETES - Guests at the Oct

EAST COASTINGS

LITTLE FETES - Guests at the Oct. 1 listening party RCA threw for Diana Ross' anxiously-awaited debut LP for the label, "Why Do Fools Fall In Love," were treated not just to a sneak preview of the disc (which to our ears sounded like her best in years) but to a "surprise" visit from Ms. Ross herself. The stunning songstress emerged during the applause that accompanied the album's completion and after a brief introduction by RCA Records division president Robert Summer, gave an equally pithy thank you speech before mingling with the partygoers. The identity of the album's producer, which had been shrouded in secrecy, was finally revealed to be Ross herself. We feel she's done a fine job in adding a hot, modern, dance music sound to many of the tracks to complement her always-distinctive pop stylings. The hard edge and conspicuous

CBS Closes Santa Maria Plant (continued from page 8)

facility would close was CBS' decision in August to stop offering custom pressing services to numerous small labels. "We were told in August that we were one of the low-level labels being let go because of staff reductions," said Richard Seidel of Contemporary Records. "The closing really seemed imminent."

fect us at all." Ordover added that the division "spent quite a few months examining the situation and carefully considered customer service. In our opinion it won't be affected; we use Terre Haute as our main distribution point."

The 588 workers now employed at Santa Maria will receive extensive job counseling and assistance. -

DAN KELLY's Ipod 80S PLAYLIST It's the End of The

DAN KELLY’S iPOD 80s PLAYLIST It’s The End of the 70s Cherry Bomb…The Runaways (9/76) Anarchy in the UK…Sex Pistols (12/76) X Offender…Blondie (1/77) See No Evil…Television (2/77) Police & Thieves…The Clash (3/77) Dancing the Night Away…Motors (4/77) Sound and Vision…David Bowie (4/77) Solsbury Hill…Peter Gabriel (4/77) Sheena is a Punk Rocker…Ramones (7/77) First Time…The Boys (7/77) Lust for Life…Iggy Pop (9/77) In the Flesh…Blondie (9/77) The Punk…Cherry Vanilla (10/77) Red Hot…Robert Gordon & Link Wray (10/77) 2-4-6-8 Motorway…Tom Robinson (11/77) Rockaway Beach…Ramones (12/77) Statue of Liberty…XTC (1/78) Psycho Killer…Talking Heads (2/78) Fan Mail…Blondie (2/78) This is Pop…XTC (3/78) Who’s Been Sleeping Here…Tuff Darts (4/78) Because the Night…Patti Smith Group (4/78) Ça Plane Pour Moi…Plastic Bertrand (4/78) Do You Wanna Dance?...Ramones (4/78) The Day the World Turned Day-Glo…X-Ray Spex (4/78) The Model…Kraftwerk (5/78) Keep Your Dreams…Suicide (5/78) Miss You…Rolling Stones (5/78) Hot Child in the City…Nick Gilder (6/78) Just What I Needed…The Cars (6/78) Pump It Up…Elvis Costello (6/78) Airport…Motors (7/78) Top of the Pops…The Rezillos (8/78) Another Girl, Another Planet…The Only Ones (8/78) All for the Love of Rock N Roll…Tuff Darts (9/78) Public Image…PIL (10/78) My Best Friend’s Girl…the Cars (10/78) Here Comes the Night…Nick Gilder (11/78) Europe Endless…Kraftwerk (11/78) Slow Motion…Ultravox (12/78) Roxanne…The Police (2/79) Lucky Number (slavic dance version)…Lene Lovich (3/79) Good Times Roll…The Cars (3/79) Dance -

1 7Th Wonder

1 7th Wonder - The Tilt 2 A Taste Of Honey - Boogie Oogie Oogie 3 A Taste Of Honey - Do It Good 4 A Taste Of Honey - Rescue Me 5 Ad Visser And Daniel Sahuleka - Giddyap A Gogo 6 Ago - For You 7 AKB - Stand Up-Sit Down 8 Al Hudson & The Partners - You Can Do It 9 Al Jarreau - Boogie Down 10 Al Jarreau - Breakin' Away 11 Alicia Bridges - I Love The Nightlife 12 Alphaville - Big In Japan 13 Alphaville - Sounds Like A Melody 14 Alton McClain & Destiny - It Must Be Love 15 Alvin Cash - Ali Shuffle 16 Amanda Lear - Queen Of Chinatown 17 Amii Stewart - Friends 18 Amii Stewart - Knock On Wood 19 Amuzement Park - Groove Your Blues Away 20 Aneka - Japanese Boy 21 Animal Nightlife - Native Boy 22 Anita Ward - Don't Drop My Love 23 Anita Ward - Ring My Bell 24 Archie Bell & The Drells - The Soul City Walk 25 Aretha Franklin - Jump To It 26 Aretha Franklin - Who's Zoomin' Who 27 Asha Puthly - The Devil Is Loose 28 Ashford & Simpson - Found A Cure 29 Ashford & Simpson - Street Corner 30 Ashford & Simpsons - Solid 31 Atlantic Starr - Circles 32 Atlantic Starr - Perfect Love 33 Atlantis - Keep On Movin' 34 Aurra - Make Up Your Mind 35 B. T. (Brenda Taylor) - You Can't Have Your Cake And Eat It Too 36 B.T. Express - Does It Feel Good 37 B.T. Express - Let Me Be The One 38 Baccara - Yes Sir, I Can Boogie 39 Baciotti - Black Jack 40 Bandolero - Paris Latino 41 Barrabas - On The Road Again 42 Barry Manilow - Copacabana (At The Copa) 43 Barry White - Change 44 Barry White - Just The Way You Are 45 Barry White - Let The Music Play 46 Barry White - You See The -

Tom Tom Club Live at the Clubhouse Mp3, Flac, Wma

Tom Tom Club Live At The Clubhouse mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Electronic Album: Live At The Clubhouse Country: Europe Released: 2002 Style: Trip Hop, Disco MP3 version RAR size: 1914 mb FLAC version RAR size: 1613 mb WMA version RAR size: 1922 mb Rating: 4.2 Votes: 387 Other Formats: ADX VOC MPC MIDI AHX DMF MP4 Tracklist 1-01 Suboceana 6:57 1-02 Time To Bounce 4:34 1-03 Punk Lolita 5:08 1-04 Soul Fire 3:38 1-05 Who Feelin' It 5:42 1-06 Happiness Can't Buy Money 5:01 1-07 Sand 6:03 1-08 She's Dangerous 4:34 1-09 Man With The 4-Way Hips 10:19 2-01 Genius Of Love 7:17 2-02 Band Introduction 1:58 2-03 You Sexy Thing 5:08 2-04 Holy Water 4:25 2-05 Wordy Rappinghood 8:17 2-06 As Above So Below 6:10 2-07 96 Tears 3:59 2-08 Take Me To The River 4:59 Companies, etc. Phonographic Copyright (p) – Tip Top Music, Inc. Copyright (c) – Tip Top Music, Inc. Distributed By – Pinnacle Distributed By – [pias] Distributed By – Playground Music Scandinavia Duplicated By – Disctronics Credits Agogô [Agogô Bells], Keyboards, Timbales, Vocals – Bruce Martin Artwork By – Frank Veldkamp Bass, Vocals – Tina Weymouth Congas, Cowbell, Timbales, Vocals – Steve Scales Drums [Drum Kit], Vocals – Chris Frantz Featuring, Alto Saxophone – Hope Clayburn Featuring, Tenor Saxophone – Rob Somerville Featuring, Trombone – Bryan Smith Guitar, Vocals – Robby Aceto Percussion, Vocals – Mystic Bowie, Victoria Clamp Producer – Chris Frantz, Tina Weymouth Talking Drum – Abdou M'Boup Notes Performed Live at the Clubhouse Music Studio at Cock Island in Connecticut on Sunday, October 14, 2001. -

Corpus Antville

Epistemological Corpus of the Research: music videos referenced by the Antville community between June 2006 and June 2011 on the blog of the same name, www.videos.antville.org

| Date | Post title |
| --- | --- |
| 01‐06‐2006 | videos at multiple speeds? |
| 01‐06‐2006 | music videos based on cars? |
| 01‐06‐2006 | can anyone tell me videos with machine guns? |
| 01‐06‐2006 | Muse "Supermassive Black Hole" (Dir: Floria Sigismondi) |
| 01‐06‐2006 | Skye ‐ "What's Wrong With Me" |
| 01‐06‐2006 | Madison "Radiate". Directed by Erin Levendorf |
| 01‐06‐2006 | PANASONIC “SHARE THE AIR” VIDEO CONTEST |
| 01‐06‐2006 | Number of times 'panasonic' mentioned in last post |
| 01‐06‐2006 | Please Panasonic |
| 01‐06‐2006 | Paul Oakenfold "FASTER KILL FASTER PUSSYCAT" : Dir. Jake Nava |
| 01‐06‐2006 | Presets "Down Down Down" : Dir. Presets + Kim Greenway |
| 01‐06‐2006 | Lansing‐Dreiden "A Line You Can Cross" : Dir. |
| 01‐06‐2006 | SnowPatrol "You're All I Have" : Dir. |
| 01‐06‐2006 | Wolfmother "White Unicorn" : Dir. Kris Moyes? |
| 01‐06‐2006 | Fiona Apple ‐ Across The Universe ‐ Director ‐ Paul Thomas Anderson. |
| 02‐06‐2006 | Ayumi Hamasaki ‐ Real Me ‐ Director: Ukon Kamimura |
| 02‐06‐2006 | They Might Be Giants ‐ "Dallas" d. Asterisk |
| 02‐06‐2006 | Bersuit Vergarabat "Sencillamente" |
| 02‐06‐2006 | Lily Allen ‐ LDN (epk promo) directed by Ben & Greg |
| 02‐06‐2006 | Jamie T 'Sheila' directed by Nima Nourizadeh |
| 02‐06‐2006 | Farben Lehre ''Terrorystan'', Director: Marek Gluziński |
| 02‐06‐2006 | Chris And The Other Girls ‐ Lullaby (director: Christian Pitschl, camera: Federico Salvalaio) |
| 02‐06‐2006 | Megan Mullins ''Ain't What It Used To Be'' |
| 02‐06‐2006 | Mr. - |

Rhythm & Blues...60 Deutsche

- COUNTRY: 2
- OUTLAWS/SINGER-SONGWRITER: 23
- WESTERN: 27
- WESTERN SWING: 29
- TRUCKS & TRAINS: 31
- C&W SOUNDTRACKS: 31
- COUNTRY AUSTRALIA/NEW ZEALAND: 32
- COUNTRY DEUTSCHLAND/EUROPE: 33
- COUNTRY CHRISTMAS: 34
- BLUEGRASS: 34
- NEWGRASS: 36
- INSTRUMENTAL: 37
- OLDTIME: 38
- HAWAII: 39
- CAJUN/ZYDECO: 39
- FOLK: 39
- WORLD: 42
- ROCK & ROLL: 42
- LABEL R&R: 54
- R&R SOUNDTRACKS: 56
- ELVIS: 57
- RHYTHM & BLUES: 60
- BEAT, 60s/70s: 69
- SURF: 81
- REVIVAL/NEO ROCKABILLY: 84
- BRITISH R&R: 89
- INSTRUMENTAL R&R/BEAT: 91
- POP: 93
- POP INSTRUMENTAL: 106
- LATIN: 109
- JAZZ: 110
- SOUNDTRACKS: 111
- DEUTSCHE OLDIES: 112
- KLEINKUNST / KABARETT: 117
- BOOKS/BÜCHER: 119
- KALENDER/CALENDAR: 122
- DISCOGRAPHIES/LABEL REFERENCES: 122
- POSTER: 123
- METAL SIGNS: 123
- MERCHANDISE: 124
- DVD ARTISTS: 125
- DVD Western: 139
- DVD Special Interest: 140 -

DAN KELLY's Ipod 80S PLAYLIST It's the End of The

DAN KELLY’S iPOD 80s PLAYLIST It’s The End of the 70s Cherry Bomb…The Runaways (9/76) Anarchy in the UK…Sex Pistols (12/76) X Offender…Blondie (1/77) See No Evil…Television (2/77) Police & Thieves…The Clash (3/77) Dancing the Night Away…Motors (4/77) Sound and Vision…David Bowie (4/77) Solsbury Hill…Peter Gabriel (4/77) Sheena is a Punk Rocker…Ramones (7/77) First Time…The Boys (7/77) Lust for Life…Iggy Pop (9/77) In the Flesh…Blondie (9/77) The Punk…Cherry Vanilla (10/77) Red Hot…Robert Gordon & Link Wray (10/77) 2-4-6-8 Motorway…Tom Robinson (11/77) Rockaway Beach…Ramones (12/77) Statue of Liberty…XTC (1/78) Psycho Killer…Talking Heads (2/78) Fan Mail…Blondie (2/78) Who’s Been Sleeping Here…Tuff Darts (4/78) Because the Night…Patti Smith Group (4/78) Touch and Go…Magazine (4/78) Ça Plane Pour Moi…Plastic Bertrand (4/78) Do You Wanna Dance?...Ramones (4/78) The Day the World Turned Day-Glo…X-Ray Spex (4/78) The Model…Kraftwerk (5/78) Keep Your Dreams…Suicide (5/78) Miss You…Rolling Stones (5/78) Hot Child in the City…Nick Gilder (6/78) Just What I Needed…The Cars (6/78) Pump It Up…Elvis Costello (6/78) Sex Master…Squeeze (7/78) Surrender…Cheap Trick (7/78) Top of the Pops…The Rezillos (8/78) Another Girl, Another Planet…The Only Ones (8/78) All for the Love of Rock N Roll…Tuff Darts (9/78) Public Image…PIL (10/78) I Wanna Be Sedated (megamix)…The Ramones (10/78) My Best Friend’s Girl…the Cars (10/78) Here Comes the Night…Nick Gilder (11/78) Europe Endless…Kraftwerk (11/78) Slow Motion…Ultravox (12/78) I See Red…Split Enz (12/78) Roxanne…The -

Experimental German Music Videos 2003-2007: Contents; Experimental Music Clips – Like the Hare and the Hedgehog

To the Rescue of Pop Culture: Experimental German Music Videos 2003-2007

Contents: Experimental Music Clips – Like the Hare and the Hedgehog; In the Underground; On the History and Future of the Music Video; My Mouth / Beautiful Day; Working Girl; Mugen Kyuukou – How To Believe In Jesus; Let’s Push Things Forward; Lightning Bolts & Man Hands; Die Zeit heilt alle Wunder; Swelan; Wordy Rappinghood; Cut; Geht’s noch; Time Is Running Out; Good Morning Stranger; The Lady Moon Turns Sulky

Experimental Music Clips – Like the Hare and the Hedgehog

Oskar Fischinger made the first music clip in 1929 with "Studie 2". Around the same time, Hans Richter created his "Vormittagsspuk" to music by Paul Hindemith. Abstract film and Dadaist montage were understood as "music for the eyes", or painting in time. During the Nazi era, the experimental music clip emigrated. In the 1980s it found a new platform on the music television channels. There, after a few years, the experimental had to give way to the mainstream, only to reappear like a phoenix on internet platforms such as YouTube and MySpace, where short clips have become the most-watched film format of the moment. The viewing habits of young people, and their perception of reality, are changing rapidly, and a shift away from television and toward the platforms of Web 2.0 can be observed. On these platforms long films have a hard time and short ones an easy one. The big Hollywood feature films are going the way of the dinosaurs, while small films are enjoying a renaissance. Wherever the development is heading, the experimental music clip is already there, as in the fairy tale of the hare and the hedgehog. -

Rip “Her” to Shreds

Rip “Her” To Shreds: How the Women of 1970s New York Punk Defied Gender Norms

Rebecca Willa Davis. Senior Thesis in American Studies, Barnard College, Columbia University. Thesis Advisor: Karine Walther. April 18, 2007

Contents: Introduction; Chapter One, Patti Smith: Jesus Died For Somebody’s Sins But Not Mine; Chapter Two, Deborah Harry: I Wanna Be a Platinum Blonde; Chapter Three, Tina Weymouth: Seen and Not Seen; Conclusion; Bibliography

Introduction

Nestled between the height of the second wave of feminism and the impending takeover of government by conservatives in 1980 stood a stretch of time in which Americans grappled with new choices and old stereotypes. It was here, in the mid-to-late 1970s, that punk was born.1 Starting in New York—a city on the verge of bankruptcy—and spreading to Los Angeles and London, women took to the stage, picking punk as their Trojan Horse for entry into the boy bastion of rock’n’roll.2 It wasn’t just the music that these women were looking to change, but also traditionally held notions of gender as well. This thesis focuses on Patti Smith, Deborah Harry, and Tina Weymouth—arguably the first, and most important, female punk musicians—to demonstrate that women in punk used multiple methods to question, re-interpret, and reject gender. On the surface, punk appeared just as sexist as any other previous rock movement; men still controlled the stage, the sound room, the music journals and the record labels.
As writer Carola Dibbell admitted in 1995, “I still have trouble figuring out how women ever won their place in this noise-loving, boy-loving, love-fearing, body-hating music, which at first glance looked like one more case where rock’s little problem, women, would be neutralized by male androgyny.” According to Dibbell, “Punk was the music of the obnoxious, permanently adolescent white boy—skinny, zitty, ugly, loud, stupid, fucked up.”3 Punk music was loud and aggressive, spawning the violent, almost exclusively-male mosh pit at live shows that still exists today.