IoT Inspector: Crowdsourcing Labeled Network Traffic from Smart Home Devices at Scale

Recommended publications

Radio Frequency Identification Based Smart

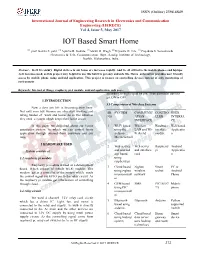

ISSN (Online) 2394-6849. International Journal of Engineering Research in Electronics and Communication Engineering (IJERECE), Vol 4, Issue 5, May 2017.

IOT Based Smart Home

[1] Prof. Kavita S. Patil, [2] Apurva R. Kokate, [3] Shruti R. Wagh, [4] Priyanka D. Tile, [5] Prajakata S. Somavanshi. Electronics & Tele-Communication Dept., Sandip Institute of Technology, Nashik, Maharashtra, India.

Abstract - In the 21st century, the number of digital devices in our homes is increasing rapidly, and we are all attached to our mobile phones and laptops. Because these devices have become a necessity, this project may help turn that habit toward an easier and safer life. Home automation provides user-friendly access from a mobile phone through an Android application. This project focuses on controlling devices rather than only monitoring the environment.

Keywords: Internet of things, Raspberry Pi 3 module, Android application, web page.

1. INTRODUCTION

Nowadays our lives are becoming very busy. Not only men but also women are working and carrying the burden of both work and home. In this situation they need a system that keeps their home secure. In this paper we describe our home automation system, in which we can control the home application through the internet from anywhere and at any time.

2. HARDWARE USED

The system consists of

2.1 Raspberry Pi module

The Raspberry Pi module is used as a development board.

... according to high signal on pin. Then particular devices get ON or OFF.

3.1 Comparison of Wireless Systems

| SR NO | SYSTEM | COMMUNICATION INTERFACE | CONTROLLER | USER INTERFACE |
|---|---|---|---|---|
| 1 | Wi-Fi based using the Arduino microcontroller | Wireless LAN and Wi-Fi shield | Hardware interface module | Web based application |
| 2 | Web service and Android app based using Raspberry Pi | Web server and interface card | Raspberry Pi | Android application |
| 3 | Cloud based | Zigbee | Smart | PC or |

HeartMate: A Competitive and Motivational Fitness Application for iOS Devices

University of Nevada, Reno

HeartMate: A Competitive and Motivational Fitness Application for iOS Devices

A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science and Engineering by Marlon Daniel Chavez. Dr. Frederick C. Harris, Jr., Thesis Advisor. May, 2016

THE GRADUATE SCHOOL

We recommend that the thesis prepared under our supervision by MARLON DANIEL CHAVEZ entitled HeartMate: A Competitive and Motivational Fitness Application for iOS Devices be accepted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE.

Dr. Frederick C. Harris, Jr., Advisor
Dr. Sergiu M. Dascalu, Committee Member
Dr. Yantao Shen, Graduate School Representative
David W. Zeh, Ph.D., Dean, Graduate School
May, 2016

Abstract

Smartphones today are more advanced than they have ever been, with built-in hardware sensors to detect location and motion, as well as ways to communicate with third-party hardware through Bluetooth and with the internet through cellular or Wi-Fi. With the introduction of Apple’s App Store and the Google Play store, smartphones have been given features one would not think possible on a cell phone. One of the target categories for app developers is health: thanks to the sensors provided in current smartphones, an application can be developed to track a user’s health. The apps currently on the market motivate users mostly through goal-based challenges between themselves or their friends. HeartMate is an iOS mobile application that uses the sensors in the iPhone and a Bluetooth LE connected heart rate monitor to create a new competitive, motivational, real-time social experience using heart rates as a performance measure.

ATWINC15x0/ATWINC3400 Wi-Fi Network Controller Software Programming Guide

AN5305 ATWINC15x0/ATWINC3400 Wi-Fi® Network Controller Software Programming Guide

Introduction

This software programming guide describes the use of the ATWINC15x0/ATWINC3400 Wi-Fi Network Controller to build state-of-the-art Internet of Things (IoT) applications. The following topics will be covered:

• How examples are organized
• Target board information
• Instruction for each example

Note: All the listed examples and folder structure/files are similar for both WINC15x0 and ATWINC3400. The diagrams and console logs in this document refer to ATWINC15x0.

Prerequisites

• Hardware Prerequisites:
  – SAM D21 Xplained Pro Evaluation Kit
  – ATWINC15x0 extension (For more details, refer to ATWINC15x0 user guide)
  – ATWINC3400 extension (For more details, refer to ATWINC3400 user guide)
  – IO1 extension
  – Micro-USB Cable (Micro-A/Micro-B)
• Software Prerequisites:
  – Atmel Studio 7.0 (For more details, refer to user guide)
  – Wi-Fi IoT Examples (for more details, refer to application notes)

Figure 1. SAM D21 XSTK Board Demo Setup

© 2019 Microchip Technology Inc. Application Note DS70005305C

Table of Contents

Introduction
Prerequisites
1. How the Examples are Organized

Downloaded from ORCA, Cardiff University's Institutional Repository

This is an Open Access document downloaded from ORCA, Cardiff University's institutional repository: http://orca.cf.ac.uk/113958/

This is the author’s version of a work that was submitted to / accepted for publication.

Citation for final published version: Anthi, Eirini, Ahmad, Shazaib, Rana, Omer, Burnap, Pete and Theodorakopoulos, Georgios 2018. EclipseIoT: A secure and adaptive hub for the Internet of Things. Computers and Security 78, pp. 477-490. 10.1016/j.cose.2018.07.016

Publishers page: https://doi.org/10.1016/j.cose.2018.07.016

Please note: Changes made as a result of publishing processes such as copy-editing, formatting and page numbers may not be reflected in this version. For the definitive version of this publication, please refer to the published source. You are advised to consult the publisher’s version if you wish to cite this paper.

This version is being made available in accordance with publisher policies. See http://orca.cf.ac.uk/policies.html for usage policies. Copyright and moral rights for publications made available in ORCA are retained by the copyright holders.

EclipseIoT: A Secure and Adaptive Hub for the Internet of Things

Eirini Anthi∗, Shazaib Ahmad, Omer Rana, George Theodorakopoulos, Pete Burnap. Cardiff University, School of Computer Science & Informatics, 5 The Parade, Roath, Cardiff, CF24 3AA

Abstract

With the proliferation in the quantity and types of devices that may be included in an Internet of Things (IoT) ecosystem, particularly in the context of a smart home, it is essential to provide mechanisms to deal with the heterogeneity which such devices encompass.

Future-Proofing the Connected World: 13 Steps to Developing Secure IoT Products

Future-proofing the Connected World: 13 Steps to Developing Secure IoT Products

Presented by the IoT Working Group

Table of Contents

Forward
Introduction
Document Scope
The Need for IoT Security
  IoT Products Can Compromise Privacy
  IoT products can lend their computing power to launch DDoS Attacks
  Medical Devices and Medical Standard Protocols are Vulnerable to Attack
  Drones Are Approaching Mainstream Status and Being Used as a Platform for Reconnaissance
  Critical national infrastructure can rely on the IoT ecosystem
  Cars are becoming connected and autonomous
  Moving Forward
Why Development Organizations Should Care About Securing IoT Products
IoT Device Security Challenges
  IoT products may be deployed in insecure or physically exposed environments
  Security is new to many manufacturers and there is limited security planning in development methodologies
  Security is not a business driver and there is limited security sponsorship and management support in development of IoT products
  There is a lack of defined standards and reference architecture for secure IoT development
  There are difficulties recruiting and retaining requisite skills for IoT development teams including architects, secure software engineers, hardware security engineers, and security testing staff
  The low price point increases the potential adversary pool
  Resource constraints in embedded systems limit security options
IoT Security Survey
Guidance for Secure IoT Development
1. Start with a Secure Development Methodology
  Security Requirements
  Security Processes
  Perform Safety Impact Assessment
  Perform Threat Modeling
2. Implement a Secure Development and Integration Environment
  Evaluate Programming Languages
  OWASP Python Security Project Link
  Integrated Development Environments
  Continuous Integration Plugins
  Testing and Code Quality Processes
3. Identify Framework and Platform Security Features
  Selecting an Integration Framework
  Evaluate Platform Security Features
4.

Critical Capabilities for the Enterprise with New Data Introduction - Cover Page - Overview

Critical Capabilities for the Enterprise with New Data Introduction - Cover page - Overview

Critical Capabilities for Deploying Enterprise Analytics and Mobility Applications

Report generated on October 2, 2017. This information represents the opinions of MicroStrategy based on its assessment of the products listed.

Why MicroStrategy

Table columns: Technical Solution, Critical Capability, Critical Capability Description, Why MicroStrategy, and one Score column each for MSTR, Tableau, Qlik Sense, IBM Cognos, SAP BO, MSFT PowerBI and Oracle.

Technical Solution: Enterprise Platform

Critical Capability: Object Oriented Reusability

Critical Capability Description: An object-oriented architecture catalogs every aspect of the business. Data assets, KPIs, dimensions, users, reports, and dashboards are all stored in a central location as unique logical objects to support reusability.

Why MicroStrategy: MicroStrategy offers a centralized metadata repository that is built with and contains objects, as defined by developers, and can be reused across the BI project. Objects can be combined to create higher level objects (e.g., attributes and metrics make up a report; reports, filters, and prompts make up a document; documents, and other reports can be embedded into a document - all of which are stored as "metadata objects"), and this together delivers a single BI project or a data model.

• The reusable model enables objects across the BI deployment to be securely reused hundreds of times by users across the enterprise, without any duplication of effort.
• The model not only includes dimensions and metrics, but also covers filters, reports, prompts, custom groups, reports, dashboards, and documents - making it possible to flexibly reuse all of these objects multiple times across any application and BI project.

Smart Home Automation and Security System Design Based on IoT Applications

SMART HOME AUTOMATION AND SECURITY SYSTEM DESIGN BASED ON IOT APPLICATIONS

Shameem Ahmad1, Arghya Saha2, Leong Wen Chek1, Saad Mekhilef1, Tariful Azam3, Mahrous Ahmed4, 5, Mohamed Orabi5, Sherif Ghoneim4, 6, Mosleh Alharthi4, Farhan Salem4, and Basem Alamri4

1Power Electronics and Renewable Energy Research Laboratory (PEARL), Department of Electrical Engineering, University of Malaya, Kuala Lumpur, Malaysia
2Renewable Energy and Technology, Institute of Energy, University of Dhaka, Dhaka, Bangladesh
3Department of Electrical and Electronic Engineering, American International University-Bangladesh, Dhaka, Bangladesh
4Electrical Engineering Department, College of Engineering, Taif University, Saudi Arabia
5APEARC, Faculty of Engineering, Aswan University, Aswan, Egypt
6Electrical Department, Faculty of Industrial Education, Suez University, Suez, Egypt

Received Date: March 11, 2019; Revised Date: October 19, 2019; Acceptance Date: November 20, 2019

Abstract

Conventional home automation systems lack many of the essential properties of a home automation system, which are now easily accessible with modern technologies like IoT, where every device is connected and can be controlled through the internet. The proposed system presents a standard home automation system with all the requirements such a system has: it is scalable, configurable and DIY, and it serves the most important function, which is plug and play. Firstly, this paper discusses the merits and demerits of mainstream works on home automation systems. Next, key enabling technologies are discussed: Cloud (Pubnub), Android Applications, WiFi Module (ESP 8266), and Raspberry Pi.

A Microchip Technology Inc. Publication Sept/Oct 2016

A MICROCHIP TECHNOLOGY INC. PUBLICATION SEPT/OCT 2016

On the cover: Taking on the World; Jump Start Your Internet of Things Designs; Solving the Security Conundrum

Contents

COVER STORY
Bridging the Gap: Low-Power, Cost-Effective PIC32MM Family of Microcontrollers Offers Compelling 32-bit Solution for Applications with Budget, Power and Size Constraints

NEW PRODUCTS
Taking on the World: Next-Generation Bluetooth® Low Energy Solutions Deliver Easy-to-Use Interface and Embedded Scripting Capability
Now That Was Easy: Add Wi-Fi® Connectivity to Your Design with the ATWINC1500 Wi-Fi Network Controller
Music Minus Wires: Dual-Mode Bluetooth Audio Devices Deliver Superior Sound Quality for High-End Headsets, Speakers and Sound Bars

NEW TOOLS
Get Ready to Innovate: Delivering Over Four Times More Battery Life and Faster Time to Market, New Platform Includes MCU, Bluetooth Low Energy and Sensor Technology
Weighing In: Add Intelligence and Bluetooth Connectivity to Digital Weight Scales with Microchip’s Flexible and Cost-Effective Solutions

DESIGN CORNER
Jump Start Your Internet of Things Designs
Unifying the Internet of Things
Solving the Security Conundrum
Rapid Prototyping, Seamless Integration
Custom Control
What Is the True Cost?
Simplifying Clock Generation
Smarter Programming
Measuring Time

FEATURED VIDEO
Remote Temperature Reading Across Vacant Lot with MCP9902; 1°C Accurate Down to −40°C

The Microchip name and logo, the Microchip logo, dsPIC, FlashFlex, KEELOQ, KEELOQ logo, MOST, MPLAB, mTouch, PIC, PICmicro, PICSTART, PIC32 logo, Quiet-WIRE, rfPIC, SST, SST Logo, SuperFlash and UNI/O are registered trademarks of Microchip Technology Incorporated in the U.S.A.

Android Based Interactive Home Automation System Through Internet of Things

International Journal of Science and Research (IJSR), ISSN (Online): 2319-7064, Index Copernicus Value (2013): 6.14 | Impact Factor (2015): 6.391

Android based Interactive Home Automation System through Internet of Things

Rutuja Ekatpure1, Devendra Ingale2

1Savitribai Phule Pune University, JSPM’s JSCOE, Handewadi Road, Hadapsar, Pune-28, India
2Savitribai Phule Pune University, JSPM’s JSCOE, Handewadi Road, Hadapsar, Pune-28, India

Abstract: Nowadays automated systems are taking over from manual systems. A home automation system using IoT is a system that uses laptops or smartphones to control basic home functions and features over the internet from anywhere in the world. It is used to save electricity and human energy. Home automation is the automatic control and monitoring of household appliances and residential features like TVs, fans, lights, doors, gates and even windows. Events can be programmed to be triggered under specific conditions (for example, depending on sensor data), and this can be used to reduce the total energy consumed by some appliances. In addition, the system can suggest smart task scheduling. In simple installations, domotics may be as straightforward as turning on the lights when a person enters the room. In advanced installations, rooms can sense not only the presence of a person inside but also know who that person is and perhaps set appropriate lighting and temperature, taking into account the day of the week, the time of day, and other factors.

Keywords: Internet of Things (IoT), Raspberry Pi, Arduino, Zigbee, Automation, Image Processing

1. Introduction

1.1.

... automation systems, the use of wireless technologies gives several advantages that could not be achieved using a wired

Winter 2012 Jan/Feb 2015

Jan/Feb 2015

GESTURES, AUDIO, MEMORY, IoT SECURITY, MEDICAL

Contents

SHOWCASE
From Concept to Prototype in Minutes: Free code configuration plug-in for MPLAB® X IDE makes developing firmware on 8- and 16-bit PIC® microcontrollers faster and easier

NEW PRODUCTS
It's a Breeze: New controller with GestIC® technology enables one-step design-in of 3D gesture recognition in embedded devices
Speedy and Reliable: Latest additions to low-power 1.8V Serial Quad I/O™ family of SuperFlash® technology memory devices offer 4-Mbit and 8-Mbit of memory

TECHNOLOGY
Whole Home Audio: Fourth generation JukeBlox® platform delivers the ultimate streaming audio entertainment experience

DESIGN ARTICLES
How Fast Is Your Memory?
Securing Internet of Things Communication
Constant, Off-Time, Buck-Based LED Drivers Using the HV9910B
Designing a Glucose Meter Using an 8-bit Microcontroller
Decoding Windows Media® Audio in PIC32-Based Designs
A Multi-Screen Approach to Your Digital Strategy
Ready, Set, Go!

FEATURES
Product Spotlight
Heartwarming Deals

The Microchip name and logo, the Microchip logo, dsPIC, FlashFlex, KEELOQ, KEELOQ logo, MPLAB, mTouch, PIC, PICmicro, PICSTART, PIC32 logo, rfPIC, SST, SST Logo, SuperFlash and UNI/O are registered trademarks of Microchip Technology Incorporated in the U.S.A. and other countries. FilterLab, Hampshire, HI-TECH C, Linear Active Thermistor, MTP, SEEVAL and The Embedded Control Solutions Company are registered trademarks of Microchip Technology Incorporated in the U.S.A. Silicon Storage Technology is a registered trademark of Microchip Technology Inc. in other countries.

Why Does IoT Need a Data Stream Network?

THE ONLY SECURE DATA STREAM NETWORK FOR THE INTERNET OF THINGS

Why does IoT need a Data Stream Network?

• 2-way secure signaling with 1/4 second latencies
• Syndicate data streams to business partners
• Reliable signaling on unreliable networks (survives cell/wi switching, dropped connections)
• Low power drain and minimal bandwidth usage
• Monitor and manage device status in real-time

• 200 million connected devices
• 14 data centers
• 4 continents
• 1000+ customers

PubNub enables Internet of Things deployments to perform and scale in the real world: Connected Car, Home Automation, Consumer Electronics

Easy-to-use SDKs for 70+ embedded, desktop, browser, and server environments: Atmel SAMA5D3, Microchip PIC32, MPLAB Harmony, Arduino, Texas Instruments (TI CC3200 / TI MSP430 F5529), RaspberryPi, mBed (ARM), Electric Imp, JavaScript, iOS, Android, Python, Node.JS, Java, Objective C, Windows

www.pubnub.com © Copyright 2015, PubNub, Inc. All rights reserved. All trademarks, trade names, service marks and logos referenced herein belong to their respective companies.

Focus on your IoT products instead of infrastructure and accelerate time to market

Secure bi-directional data streams: Data encryption, access control and no open ports ensure secure IoT communication.

Realtime connectivity for the real world: 14 data centers across four continents provides 1/4 second latencies and 99.999% SLAs.

Reliable signaling on unreliable networks: Global replication for redundancy and auto catchup for missed messages.

Monitor & manage device state in realtime: Automatically detect device state as it changes in realtime with PubNub Presence.

Minimize battery and network consumption: 70+ highly optimized SDKs minimize bandwidth and battery consumption.

Fast time to market: Easy-to-use APIs for popular embedded chipsets and

A Practical Approach of an Internet of Robotic Things Platform

DEGREE PROJECT IN MECHANICAL ENGINEERING, SECOND CYCLE, 30 CREDITS
STOCKHOLM, SWEDEN 2018

A Practical Approach of an Internet of Robotic Things Platform

ROBERT YOUSIF

KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF INDUSTRIAL ENGINEERING AND MANAGEMENT

Master of Science Thesis TRITA-ITM-EX 2018:737

Approved
Examiner: Martin Törngren
Supervisor: Jinzhi Lu
Commissioner: ÅF
Contact person: Håkan Roos

Abstract

This thesis aims to design and develop a platform based on a novel concept, the Internet of Robotic Things (IoRT), constructed from a robotic platform, an Internet of Things (IoT) platform and cloud computing services. A robotic platform enables hardware abstraction, facilitating the management of input/output between software, mechanical devices and electronic systems. The IoT platform is a global network enabling a massive number of devices, known as things, to communicate with each other and transfer data over the Internet. Cloud computing is a shared pool of scalable hardware, usually provisioned as cloud services by third-party cloud vendors. The integration of these concepts constitutes the core of the IoRT platform, a global infrastructure that lets robots interconnect over the Internet using common communication technology. Moreover, the pool of cloud resources shared by the connected robots enables scalable storage and processing power. The IoRT platform developed in this study consists firstly of the Amazon Web Service (AWS) IoT core, serving as the IoT platform. Secondly, it incorporates the Robot Operating System (ROS) as the robotic platform, and thirdly the cloud services Amazon DynamoDB and AWS Lambda for data storage and data processing respectively.