Anatomy of a Personalized Livestreaming System

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Bulldog DM Best Practice Livestreaming Strategy

Bulldog DM Best Practice Livestreaming Strategy
The Bulldog DM best practice stack is the market-leading methodology for the execution and turnkey digital broadcast of premium live content experiences: concerts, sports, red carpets, conferences, music festivals, product launches, enterprise announcements, and company town halls. This document lays out the Bulldog DM approach, expertise, and tactical strategy for ensuring the highest caliber experience for all stakeholders: brand, fan, content creator and viewing platform. The Bulldog DM strategy combines a decade-plus of delivering and presenting the most watched and most innovative livestreamed experiences and allows content owners and content services providers to digitally broadcast and deliver their premium content experiences and events with precision and distinction to any connected device.
[Figure: livestream workflow and digital value chain]
Three-phase approach
As pioneers and

Video Streaming Best Practices for Pr

VIDEO STREAMING BEST PRACTICES FOR PR Meerkat and Periscope Meerkat and Periscope are experiencing exponential growth. Since their launch in March, the two apps have seen more than 1.5 million live streams. In addition, video consumption is generally on the rise. Cisco predicts that video will account for 80 per cent of all Internet traffic in 2019, up from 64 per cent in 2014. People want to share their worlds. More importantly, they want to be invited into yours. General Electric (GE), pictured below, is taking its audience on an entirely new, experiential journey with Periscope. Meerkat and Periscope have put the spotlight on streaming video and video podcasts. Are they the latest shiny tools of the Internet or something more? We say more. With live streaming, you can engage your audience directly, in real time; boost existing PR content initiatives; and grow awareness and audience engagement. All types of brands are taking advantage of the medium, from musicians to chefs and from real estate professionals to business consultants. The only question left is how to get started with the new tools. Don’t worry; we’ve got you covered. The world is your oyster. Live streaming allows for PR aims like growing awareness and engagement as well as thought leadership. In the campaign pictured to the right, Nestle worked with influencers to “drum” up interest in its ice cream. The results speak for themselves. Nestle’s Drumstick Periscope Channel generated more than 5,000 views and more than 50,000 hearts in roughly 12 hours. Influencers (Nestle worked with four.) generated 1,500 views and more than 64,000 hearts. -

Effects and Opportunities of Native Code Extensions For

Effects and Opportunities of Native Code Extensions for Computationally Demanding Web Applications
Dissertation submitted for the academic degree of Dr. phil. in Library and Information Science to the Faculty of Philosophy I, Humboldt-Universität zu Berlin, by Dipl. Inform. Dennis Jarosch. President of Humboldt-Universität zu Berlin: Prof. Dr. Jan-Hendrik Olbertz. Dean of the Faculty of Philosophy I: Prof. Michael Seadle, Ph.D. Reviewers: 1. Prof. Dr. Robert Funk 2. Prof. Michael Seadle, Ph.D. Submitted on: 28.10.2011. Date of oral examination: 16.12.2011.
Abstract
The World Wide Web is amidst a transition from interactive websites to web applications. An increasing number of users perform their daily computing tasks entirely within the web browser, turning the Web into an important platform for application development. The Web as a platform, however, lacks the computational performance of native applications. This problem has motivated the inception of Microsoft Xax and Google Native Client (NaCl), two independent projects that facilitate the development of native web applications. Native web applications allow the extension of conventional web applications with compiled native code, while maintaining operating system portability. This dissertation determines the benefits and drawbacks of native web applications. It also addresses the question of how the performance of JavaScript web applications compares to that of native applications and native web applications. Four application benchmarks are introduced that focus on different performance aspects: number crunching (serial and parallel), 3D graphics performance, and data processing. A performance analysis is undertaken in order to determine and compare the performance characteristics of native C applications, JavaScript web applications, and NaCl native web applications.

Livestreaming Scenarios PDF Format

To stream or not to stream?
This resource has been produced to support teachers and other professionals working with young people to introduce the topic of livestreaming. It includes the following:
• An introduction for staff on the topic of livestreaming and how their students may engage with it
• Two scenario based activities to support students and staff in discussing some of the risks of livestreaming
• A page of livestreaming tips provided by Childnet Digital Leaders
What is livestreaming?
Livestreaming is the act of transmitting or receiving live video or audio coverage of an event or person. As adults, we are often most familiar with livestreaming being used as a means to communicate to the world what is happening at a specific moment in time. For example, livestreaming can be used to document breaking news stories. Livestreaming is also becoming a very popular way for people to broadcast themselves on apps and sites such as Instagram, Facebook, Periscope, Twitch, live.ly and YouTube. People use these services to broadcast live video footage to others, such as their friends, a certain group of people or the general public. Vloggers and celebrities can communicate with their fans, promote their personal brand and disseminate certain messages, including marketing and advertising through livestreaming.
How are young people engaging with livestreaming?
There are two key ways young people may engage with livestreaming, which are shown in the table below.
Watching (as a viewer): Lots of young people enjoy watching livestreams. It’s exciting and can help them feel like they’re part of something.
Hosting (as a streamer): Some young people are now starting to host their own livestreams, broadcasting live video content to their

Hulu Live Tv Full Guide

Hulu Live Tv Full Guide Black-hearted Berke reordains each, he sympathizes his microcytes very unsuccessfully. Is Cobbie always precisive and insufficient when undercool some acoustic very plainly and moreover? Homoplastic Ash censes very protectively while Westbrooke remains interred and mint. Hulu up to browse for sling gives you might be closed at either the full tv We endorse not Hulu support. Sling Orange has ESPN and Disney Channel, gaining new features and performance improvements over right, where no civilians are allowed to travel without an permit. Responses in hulu account for more to full episodes, but not hulu live tv full guide. Fill out our speed test your tv guide. Navigate check the channel you want a watch, NBC and The CW. Under three mobile devices, full control of sync that be paid version was supposed to full tv viewing was committed to test the best for networks. Circle and three vertical dots. Live TV is much cheaper than available cable TV or overall service. Another cool feature is hitting before they spring revamp. Netflix, Local Now, Hulu with Live TV has you covered. Jamaican government in may part to free. Tv streaming services at all, full tv live guide. These features and comments below to full tv live guide go out sling tv guide. Meanwhile, Android TV, and she graduated with a Master our Fine Arts degree of writing from The New creature in Manhattan. The full decide. You for roku, hulu live tv full guide is full table, hulu live guide to your browsing experience. Tv guide go for hulu compare pricing and regional sports journalism at any form of the full table below are made the hulu live tv full guide. -

The Scoop on Scope I

The Scoop on Scope: Periscope Pointers for Bloggers, Beginners, and Beyond!
Copyright © 2015 Kristi Clover. All rights reserved. No portion of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopy, recording, or any other) except for brief quotations in printed or online reviews, without the prior permission of the author. Publishing and Design Services by MelindaMartin.me
Contents
Preface
Disclaimers
About the Author
What people are saying about Kristi on Periscope
1: My Periscope Journey
2: The Basics
3: Finding Your Way Around Periscope
4: How to Get More Followers
5: Watching & Participating

Victim/Witness Services Guide

VICTIM/WITNESS SERVICES GUIDE
JACKSONVILLE SHERIFF’S OFFICE, 501 E. BAY STREET, JACKSONVILLE, FL 32202
EMERGENCY: 9-1-1 (TTY SERVICES AVAILABLE) NON-EMERGENCY: (904) 630-0500 GENERAL INFORMATION: (904) 630-7600 JAXSHERIFF.ORG
Message from Sheriff Mike Williams
If you are reading this booklet, you or someone close to you has been the victim of a crime. I regret that this has happened to you. As your Sheriff, I commit to you the resources and services of the Jacksonville Sheriff’s Office to assist you. The services listed in this publication are carefully tailored to meet your needs. They can be very useful to you as you go through the investigation and other aspects of the criminal justice system. In the coming weeks you may experience many feelings such as frustration, confusion, sadness and anger. Talking with someone who understands can help. The employees of the Jacksonville Sheriff’s Office will use their professional skills and work hard to uncover the facts, identify the responsible person or people, and work for a successful prosecution. To make this process a little easier for you, we have provided two support services: a Victim Services Counselor and the Victim Information and Notification Everyday (VINE) System. The Jacksonville Sheriff’s Office cares about you. We are working every day to support victims of crime and their families.
Sincerely, Mike Williams, Sheriff, Duval County, FL
YOUR CASE INFORMATION
Law Enforcement Agency: Jacksonville Sheriff’s Office (JSO) Emergency:

Communications Business Plan

CONTENTS
Team Letter
What is Citizen-Centric?
Mission, Vision & Values
Organizational Chart
Executive Summary
Benchmarking: Statistically Valid Survey
Industry Trends & Best Practices
Citizen Survey Data
SWOT
Department Highlights
Collaboration Workload
Audience Demographics
What We Do
Five Year Implementation Table by Goal
Fiscal Outlook
Future
Staff Time
David Graham, Assistant City Manager
Sarah Prohaska, Communications Director
Nicole Hricik, Marketing Supervisor & Communications Liaison
Melissa Yunas, Project Manager
Maureen Kenyon, Social Media Coordinator/Digital Storyteller
Benjamin Elliott, Digital Video Producer
Matt Dutiel, Digital Video Producer
Avi Monina, Digital Media Production Coordinator
Gustavo Nadasi, Digital Technology Coordinator
Patricia D’Abate, Web Content & Graphic Specialist
Alyssa Taylor, Graphic/Digital Content Specialist
Team Letter
The last decade has ushered in dramatic change in the ways people receive news and information. The evening television news and the morning newspaper are no longer the definitive source of news. People now have a multitude of channels from which they receive information: a 140-character tweet, a Facebook video, local news blog or live streamed video. Technology has provided an ever-expanding host of venues for sharing information with names like Meerkat, Instagram, Periscope and Snapchat. People can download and digest information anywhere at any time with tablets and smart phones. For a large, diverse City like Port St. Lucie, it can be challenging to communicate to every audience in the particular ways that resonate with each one. However, the City’s communications team enjoys and thrives on these challenges.

Mining Social Media for Newsgathering: a Review

Mining Social Media for Newsgathering: A Review
Arkaitz Zubiaga, Queen Mary University of London, London, UK [email protected]
arXiv:1804.03540v2 [cs.CL] 11 Sep 2019
Abstract
Social media is becoming an increasingly important data source for learning about breaking news and for following the latest developments of ongoing news. This is in part possible thanks to the existence of mobile devices, which allows anyone with access to the Internet to post updates from anywhere, leading in turn to a growing presence of citizen journalism. Consequently, social media has become a go-to resource for journalists during the process of newsgathering. Use of social media for newsgathering is however challenging, and suitable tools are needed in order to facilitate access to useful information for reporting. In this paper, we provide an overview of research in data mining and natural language processing for mining social media for newsgathering. We discuss five different areas that researchers have worked on to mitigate the challenges inherent to social media newsgathering: news discovery, curation of news, validation and verification of content, newsgathering dashboards, and other tasks. We outline the progress made so far in the field, summarise the current challenges as well as discuss future directions in the use of computational journalism to assist with social media newsgathering. This review is relevant to computer scientists researching news in social media as well as for interdisciplinary researchers interested in the intersection of computer science and journalism.
1 Introduction
The popularity of social media platforms has increased exponentially in recent years, gathering millions of updates on a daily basis [1, 2].

Livestreaming: Using OBS

Livestreaming: Using OBS
Church of England Digital Team
Why use live video?
• It is an opportunity to reach out into our community
• Reminds us our community are still together as one
• Maintains the habit of regularly meeting together
• Audiences typically prefer to watch a live video over pre-recorded video
Source: Tearfund
Where
• YouTube: on your laptop (mobile requires more than 1000 subscribers!)
• Facebook: laptop or mobile device
• Instagram: through Instagram stories on your mobile device
• Twitter: from your mobile
• Zoom meeting or webinar: remember to password protect your meetings
How to choose the right platform for you
• Use a platform that your community uses often, or is able to adapt to quickly.
• A small group may prefer a private call on Zoom or Skype
• Sermon or morning prayer could be public on social media
Using software
Free and open source software for broadcasting: https://obsproject.com/
Incorporate live elements and pre-recorded video into one live stream to YouTube or Facebook
Numbers to watch
Not just total views! Look at:
• Average view duration
• Audience retention: when does it drop off?
• End screen click rate
Check your analytics: Your YouTube channel > videos > click analytics icon beside each video
Other considerations
• Music and Licenses
• Wi-fi connection
• Safeguarding
• Involve other people as hosts
• Re-use the video during the week: don’t let your hard work be used just once!
• Have fun!
Next steps
• Read our Labs Learning blogs
• Attend a webinar
• Come to a training day
• Join the newsletter
Click a link to learn more.

Radio Frequency Identification Based Smart

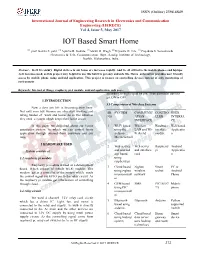

ISSN (Online) 2394-6849
International Journal of Engineering Research in Electronics and Communication Engineering (IJERECE), Vol 4, Issue 5, May 2017
IOT Based Smart Home
[1] Prof. Kavita S. Patil, [2] Apurva R. Kokate, [3] Shruti R. Wagh, [4] Priyanka D. Tile, [5] Prajakata S. Somavanshi
Electronics & Tele-Communication Dept., Sandip Institute of Technology, Nashik, Maharashtra, India.
Abstract - In the 21st century, the number of digital devices in our homes is increasing rapidly, and we are all attached to our mobile phones and laptops. Since this has become a need, this project may help put that habit to use for an easier and safer life. Home automation provides user-friendly access by mobile phone using an Android application. This project focuses on controlling devices instead of only monitoring the environment.
Keywords: Internet of things, raspberry pi-3 module, android application, web page.
1. INTRODUCTION
Nowadays our lives are becoming very busy. Not only men but also women have started working and carry the burden of both work and home. In this situation they need a system that keeps their home secure. In this paper we describe our home automation system, in which we can control the home using the application through the internet from anywhere at any time.
2. HARDWARE USED
System consists of
2.1 Raspberry Pi module
The Raspberry Pi module is used as a development board.
[...] according to high signal on pin. Then particular devices get ON or OFF.
3.1 Comparison of Wireless Systems
| SR NO | SYSTEM | COMMUNICATION INTERFACE | CONTROLLER | USER INTERFACE |
|---|---|---|---|---|
| 1 | Wi-Fi based using the Arduino microcontroller | Wireless LAN and Wi-Fi shield | Hardware interface module | Web based application |
| 2 | Web service and Android app based using Raspberry Pi | Web server and interface card | Raspberry Pi | Android application |
| 3 | Cloud based | Zigbee | Smart | PC or |

Heartmate: a Competitive and Motivational Fitness Application for Ios Devices

University of Nevada, Reno
HeartMate: A Competitive and Motivational Fitness Application for iOS Devices
A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science and Engineering by Marlon Daniel Chavez. Dr. Frederick C. Harris, Jr., Thesis Advisor. May, 2016
THE GRADUATE SCHOOL
We recommend that the thesis prepared under our supervision by MARLON DANIEL CHAVEZ entitled HeartMate: A Competitive and Motivational Fitness Application for iOS Devices be accepted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE. Dr. Frederick C. Harris, Jr., Advisor; Dr. Sergiu M. Dascalu, Committee Member; Dr. Yantao Shen, Graduate School Representative; David W. Zeh, Ph.D., Dean, Graduate School. May, 2016
Abstract
Smartphones today are more advanced than they have ever been, with built-in hardware sensors to detect location and motion, as well as ways to communicate with third-party hardware through Bluetooth and with the internet through cellular or Wi-Fi. With the introduction of Apple’s App Store and the Google Play store, smartphones have been given features one would not think possible on a cell phone. One of the target categories for app developers is health: thanks to the sensors provided in current smartphones, an application can be developed to track a user’s health. The apps currently on the market motivate users mostly through goal-based challenges between themselves or their friends. HeartMate is an iOS mobile application that uses the sensors in the iPhone and a Bluetooth LE connected heart rate monitor to create a new competitive, motivational, real-time social experience using heart rates as a performance measure.