Universal Planning Networks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Channel Lineup

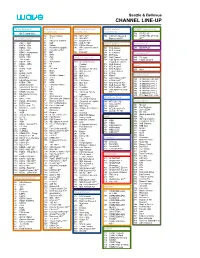

Seattle & Bellevue CHANNEL LINEUP TV On Demand* Expanded Content* Expanded Content* Digital Variety* STARZ* (continued) (continued) (continued) (continued) 1 On Demand Menu 716 STARZ HD** 50 Travel Channel 774 MTV HD** 791 Hallmark Movies & 720 STARZ Kids & Family Local Broadcast* 51 TLC 775 VH1 HD** Mysteries HD** HD** 52 Discovery Channel 777 Oxygen HD** 2 CBUT CBC 53 A&E 778 AXS TV HD** Digital Sports* MOVIEPLEX* 3 KWPX ION 54 History 779 HDNet Movies** 4 KOMO ABC 55 National Geographic 782 NBC Sports Network 501 FCS Atlantic 450 MOVIEPLEX 5 KING NBC 56 Comedy Central HD** 502 FCS Central 6 KONG Independent 57 BET 784 FXX HD** 503 FCS Pacific International* 7 KIRO CBS 58 Spike 505 ESPNews 8 KCTS PBS 59 Syfy Digital Favorites* 507 Golf Channel 335 TV Japan 9 TV Listings 60 TBS 508 CBS Sports Network 339 Filipino Channel 10 KSTW CW 62 Nickelodeon 200 American Heroes Expanded Content 11 KZJO JOEtv 63 FX Channel 511 MLB Network Here!* 12 HSN 64 E! 201 Science 513 NFL Network 65 TV Land 13 KCPQ FOX 203 Destination America 514 NFL RedZone 460 Here! 14 QVC 66 Bravo 205 BBC America 515 Tennis Channel 15 KVOS MeTV 67 TCM 206 MTV2 516 ESPNU 17 EVINE Live 68 Weather Channel 207 BET Jams 517 HRTV PayPerView* 18 KCTS Plus 69 TruTV 208 Tr3s 738 Golf Channel HD** 800 IN DEMAND HD PPV 19 Educational Access 70 GSN 209 CMT Music 743 ESPNU HD** 801 IN DEMAND PPV 1 20 KTBW TBN 71 OWN 210 BET Soul 749 NFL Network HD** 802 IN DEMAND PPV 2 21 Seattle Channel 72 Cooking Channel 211 Nick Jr. -

A Decade of Deceit How TV Content Ratings Have Failed Families EXECUTIVE SUMMARY Major Findings

A Decade of Deceit How TV Content Ratings Have Failed Families EXECUTIVE SUMMARY Major Findings: In its recent report to Congress on the accuracy of • Programs rated TV-PG contained on average the TV ratings and effectiveness of oversight, the 28% more violence and 43.5% more Federal Communications Commission noted that the profanity in 2017-18 than in 2007-08. system has not changed in over 20 years. • Profanity on PG-rated shows included suck/ Indeed, it has not, but content has, and the TV blow, screw, hell/damn, ass/asshole, bitch, ratings fail to reflect “content creep,” (that is, an bastard, piss, bleeped s—t, bleeped f—k. increase in offensive content in programs with The 2017-18 season added “dick” and “prick” a given rating as compared to similarly-rated to the PG-rated lexicon. programs a decade or more ago). Networks are packing substantially more profanity and violence into youth-rated shows than they did a decade ago; • Violence on PG-rated shows included use but that increase in adult-themed content has not of guns and bladed weapons, depictions affected the age-based ratings the networks apply. of fighting, blood and death and scenes We found that on shows rated TV-PG, there was a of decapitation or dismemberment; The 28% increase in violence; and a 44% increase in only form of violence unique to TV-14 rated profanity over a ten-year period. There was also a programming was depictions of torture. more than twice as much violence on shows rated TV-14 in the 2017-18 television season than in the • Programs rated TV-14 contained on average 2007-08 season, both in per-episode averages and 84% more violence per episode in 2017-18 in absolute terms. -

Xfinity Channel Lineup

Channel Lineup 1-800-XFINITY | xfinity.com SARASOTA, MANATEE, VENICE, VENICE SOUTH, AND NORTH PORT Legend Effective: April 1, 2016 LIMITED BASIC 26 A&E 172 UP 183 QUBO 738 SPORTSMAN CHANNEL 1 includes Music Choice 27 HLN 179 GSN 239 JLTV 739 NHL NETWORK 2 ION (WXPX) 29 ESPN 244 INSP 242 TBN 741 NFL REDZONE <2> 3 PBS (WEDU SARASOTA & VENICE) 30 ESPN2 42 BLOOMBERG 245 PIVOT 742 BTN 208 LIVE WELL (WSNN) 31 THE WEATHER CHANNEL 719 HALLMARK MOVIES & MYSTERIES 246 BABYFIRST TV AMERICAS 744 ESPNU 5 HALLMARK CHANNEL 32 CNN 728 FXX (ENGLISH) 746 MAV TV 6 SUNCOAST NEWS (WSNN) 33 MTV 745 SEC NETWORK 247 THE WORD NETWORK 747 WFN 7 ABC (WWSB) 34 USA 768-769 SEC NETWORK (OVERFLOW) 248 DAYSTAR 762 CSN - CHICAGO 8 NBC (WFLA) 35 BET 249 JUCE 764 PAC 12 9 THE CW (WTOG) 36 LIFETIME DIGITAL PREFERRED 250 SMILE OF A CHILD 765 CSN - NEW ENGLAND 10 CBS (WTSP) 37 FOOD NETWORK 1 includes Digital Starter 255 OVATION 766 ESPN GOAL LINE <14> 11 MY NETWORK TV (WTTA) 38 FOX SPORTS SUN 57 SPIKE 257 RLTV 785 SNY 12 IND (WMOR) 39 CNBC 95 POP 261 FAMILYNET 47, 146 CMT 13 FOX (WTVT) 40 DISCOVERY CHANNEL 101 WEATHERSCAN 271 NASA TV 14 QVC 41 HGTV 102, 722 ESPNEWS 279 MLB NETWORK MUSIC CHOICE <3> 15 UNIVISION (WVEA) 44 ANIMAL PLANET 108 NAT GEO WILD 281 FX MOVIE CHANNEL 801-850 MUSIC CHOICE 17 PBS (WEDU VENICE SOUTH) 45 TLC 110 SCIENCE 613 GALAVISION 17 ABC (WFTS SARASOTA) 46 E! 112 AMERICAN HEROES 636 NBC UNIVERSO ON DEMAND TUNE-INS 18 C-SPAN 48 FOX SPORTS ONE 113 DESTINATION AMERICA 667 UNIVISION DEPORTES <5> 19 LOCAL GOVT (SARASOTA VENICE & 49 GOLF CHANNEL 121 DIY NETWORK 721 TV GAMES 1 includes Limited Basic VENICE SOUTH) 50 VH1 122 COOKING CHANNEL 734 NBA TV 1, 199 ON DEMAND (MAIN MENU) 19 LOCAL EDUCATION (MANATEE) 51 FX 127 SMITHSONIAN CHANNEL 735 CBS SPORTS NETWORK 194 MOVIES ON DEMAND 20 LOCAL GOVT (MANATEE) 55 FREEFORM 129 NICKTOONS 738 SPORTSMAN CHANNEL 299 FREE MOVIES ON DEMAND 20 LOCAL EDUCATION (SARASOTA, 56 AMC 130 DISCOVERY FAMILY CHANNEL 739 NHL NETWORK 300 HBO ON DEMAND VENICE & VENICE SOUTH) 58 OWN 131 NICK JR. -

Netflix and the Development of the Internet Television Network

Syracuse University SURFACE Dissertations - ALL SURFACE May 2016 Netflix and the Development of the Internet Television Network Laura Osur Syracuse University Follow this and additional works at: https://surface.syr.edu/etd Part of the Social and Behavioral Sciences Commons Recommended Citation Osur, Laura, "Netflix and the Development of the Internet Television Network" (2016). Dissertations - ALL. 448. https://surface.syr.edu/etd/448 This Dissertation is brought to you for free and open access by the SURFACE at SURFACE. It has been accepted for inclusion in Dissertations - ALL by an authorized administrator of SURFACE. For more information, please contact [email protected]. Abstract When Netflix launched in April 1998, Internet video was in its infancy. Eighteen years later, Netflix has developed into the first truly global Internet TV network. Many books have been written about the five broadcast networks – NBC, CBS, ABC, Fox, and the CW – and many about the major cable networks – HBO, CNN, MTV, Nickelodeon, just to name a few – and this is the fitting time to undertake a detailed analysis of how Netflix, as the preeminent Internet TV networks, has come to be. This book, then, combines historical, industrial, and textual analysis to investigate, contextualize, and historicize Netflix's development as an Internet TV network. The book is split into four chapters. The first explores the ways in which Netflix's development during its early years a DVD-by-mail company – 1998-2007, a period I am calling "Netflix as Rental Company" – lay the foundations for the company's future iterations and successes. During this period, Netflix adapted DVD distribution to the Internet, revolutionizing the way viewers receive, watch, and choose content, and built a brand reputation on consumer-centric innovation. -

Comcast Channel Lineup for WCUPA HD Channels Only When HDMI Cable Used to Connect to Set Top Cable Box

Comcast Channel Lineup for WCUPA HD Channels only when HDMI cable used to connect to Set Top Cable Box CH. NETWORK Standard Def CH. NETWORK Standard Def CH. MUSIC STATIONS 2 MeTV (KJWP, Phila.) 263 RT (WYBE, Phila.) 401 Music Choice Hit List 3 CBS 3 (KYW, Phila.) 264 France 24 (WYBE, Phila.) 402 Music Choice Max 4 WACP 265 NHK World (WYBE, Phila.) 403 Dance / EDM 6 6 ABC (WPVI, Phila.) 266 Create (WLVT, Allentown) 404 Music Choice Indie 7 PHL 17 (WPHL, Phila.) 267 V-me (WLVT, Allentown) 405 Hip-Hop and R&B 8 NBC 8 (WGAL, Phila.) 268 Azteca (WZPA, Phila.) 406 Music Choice Rap 9 Fox 29 (WTXF, Phila.) 278 The Works (WTVE, Phila.) 407 Hip-Hop Classics 10 NBC 10 (WCAU, Phila.) 283 EVINE Live 408 Music Choice Throwback Jamz 11 QVC 287 Daystar 409 Music Choice R&B Classics 12 WHYY (PBS, Phila.) 291 EWTN 410 Music Choice R&B Soul 13 CW Philly 57 (WPSG, Phila.) 294 The Word 411 Music Choice Gospel 14 Animal Planet 294 Inspiration 412 Music Choice Reggae 15 WFMZ 500 On Demand Previews 413 Music Choice Rock 16 Univision (WUVP. Phila.) 550 XFINITY Latino 414 Music Choice Metal 17 MSNBC 556 TeleXitos (WWSI, Phila.) 415 Music Choice Alternative 18 TBN (WGTW, Phila.) 558 V-me (WLVT, Allentown) 416 Music Choice Adult Alternative 19 NJTV 561 Univision (WUVP, Phila.) 417 Music Choice Retro Rock 20 HSN 563 UniMás (WFPA, Phila.) 418 Music Choice Classic Rock 21 WMCN 565 Telemundo (WWSI, Phila.) 419 Music Choice Soft Rock 22 EWTN 568 Azteca (WZPA, Phila.) 420 Music Choice Love Songs 23 39 PBS (WLVT, Allentown) 725 FXX 421 Music Choice Pop Hits 24 Telemundo (WWSI, Phila.) 733 NFL Network 422 Music Choice Party Favorites 25 WTVE 965 Chalfont Borough Gov. -

XFINITY®TV Channel Line Up

xfirnty XFINITY®TV Channel Line up Effective January 2016 King County/Pierce County/ Snohomish County ~k WA-009 COMCAST 677 Disney Channel HD " 50 Bloomberg TV /\ 679 Nickelodeon HD " 53 FX XFINITY®TV 681 Disney XD HD " 54 TNT A Secondary Audio Programming (SAP) available • Channels in bold are HD 696 Science HD 55 TBS 720 Sprout HD 59 Syfy /\ 109 KCTS HD (PBS) Limited Basic 721 Discovery Family 62 VH1 110 KZJO HD (JOETV) Channel HD 63 MTV 111 KSTW HD (CW) 2 NWCN 64 MTV 2 1121126 KUNS HD (Univision) 3 KWPX-TV ION Digital Economy 66 Bravo /\ 113 KCPQ HD (FOX) 4 KOMO (ABC) 68 HGTV 321 Seattle Channel HD Includes Limited Basic 5 KING (NBC) 70 Golf Channel 322 KCTV-HD 35 Food Network 6 KONG 71 Oxygen 325 KIRO Get TV 37 History /\ 7 KIRO (CBS) 118 Sprout 328 KOMO ThisTV (ABC) 41 Disney Channel /\ 8 Discovery Channel 128 WGN 331 Live Well Network 42 Cartoon Network /\ 9 KCTS (PBS) 149 MoviePlex /\ 334 KBTC-MHz 43 Animal Planet 10 KZJO (JOETV) 150 C-SPAN3 336 KCTS-Create 44 CNN 11 KSTW (CW) 152 Crossings TV 337 KCTS Vme 48 Fox News Channel 12 KBTC (PBS) 162 BBC America /\ 340 Antenna TV 49 truTV 12 KVOS Me TV (Marysvillel 173 ESPN HD 343 KVOS Movies! 51 Lifetime /\ Arlington) 174 ESPN 2 HD 346/738 KUNS (MundoFox) 52 A&E /\ 13 KCPQ (FOX) 183 Esquire 349 Azteca America 56 BET 14 KBCB (IND) 271 Investigation Discovery 350 KFFV Antenna TV 58 USA Network /\ 15 KFFV (IND) 273 National Geographic 351 KFFV KBS World 60 Comedy Central 16 QVC Channel 352 CoziTV 65 E!/\ 17 HSN 275 fyi, 353 KSTW-Decades 67 AMC /\ 18 KWDK (Daystar) 276 H2 599 XFINITY Latino -

Channel and Setup Guide

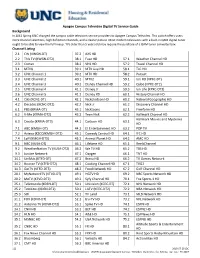

Apogee Campus Televideo Digital TV Service Guide Background In 2013 Spring UNC changed the campus cable television service providers to Apogee Campus Televideo. This switch offers users more channel selections, high definition channels, and a clearer picture. Most modern televisions with a built-in QAM digital tuner ought to be able to view the full lineup. TVs older than 5 years old may require the purchase of a QAM tuner converter box. Channel Listing 2.1 CW (KWGN-DT) 37.2 AXS HD 2.2 This TV (KWGN-DT2) 38.1 Fuse HD 57.1 Weather Channel HD 2.3 Comet 38.2 VH1 HD 57.2 Travel Channel HD 3.1 MTVU 39.1 MTV Live HD 58.1 TLC HD 3.2 UNC Channel 1 39.2 MTV HD 58.2 Pursuit 3.3 UNC Channel 2 40.1 MTV2 59.1 Ion HD (KPXC-DT) 3.4 UNC Channel 3 40.2 Disney Channel HD 59.2 Qubo (KPXC-DT2) 3.5 UNC Channel 4 41.1 Disney Jr 59.3 Ion Life (KPXC-DT3) 3.6 UNC Channel 5 41.2 Disney XD 60.1 History Channel HD 4.1 CBS (KCNC-DT) 42.1 Nickelodeon HD 60.2 National Geographic HD 4.2 Decades (KCNC-DT2) 42.2 Nick Jr 61.2 Discovery Channel HD 6.1 PBS (KRMA-DT) 43.1 Nicktoons 62.1 Freeform HD 6.2 V-Me (KRMA-DT2) 43.2 Teen Nick 62.2 Hallmark Channel HD Hallmark Movies and Mysteries 6.3 Create (KRMA-DT3) 44.1 Cartoon HD 63.1 HD 7.1 ABC (KMGH-DT) 44.2 E! Entertainment HD 63.2 POP TV 7.2 Azteca (KZCO/KMGH-DT2) 45.1 Comedy Central HD 64.1 IFC HD 7.4 Laff (KMGH-DT3) 45.2 Animal Planet HD 64.2 AMC HD 9.1 NBC (KUSA-DT) 46.1 Lifetime HD 65.1 ReelzChannel 9.2 WeatherNation TV (KUSA-DT2) 46.2 We TV HD 65.2 TBS HD 9.3 Justice Network 47.1 Oxygen 66.1 TNT HD 14.1 UniMás (KTFD-DT) -

Standard TV Channel Guide APRIL 2020 HD at Its Best • Video on Demand • TV Everywhere

Standard TV Channel Guide APRIL 2020 HD at its Best • Video On Demand • TV Everywhere www.cincinnatibell.com/fioptics BASIC SD HD• VOD SD HD• SD HD• VOD BASIC PEG CHANNELS (STB Lease Required) Lifetime Real Women . 234• ABC (WCPO) Cincinnati . 9 509 4 Florence . 822 MLB Network . 208• 608 AntennaTV . 257• 4 ICRC . 834, . 838, 845, 847-848 MSNBC . .64 . 564 Bounce . 258. • 4 NKU . 818. MTV . 71 . 571 Bulldog . .246 . • 4 TBNK . 815-817, 819-821 NBC Sports Network . 202• 602 C-SPAN . 21. 4 Waycross . 850-855. Nat Geo Wild . 281• 681 C-SPAN 2 . 22 . National Geographic . 43. 543 4 CBS (WKRC) Cincinnati . 12. 512 4 Newsy . 508 4 Circle . 253. • PREFERRED SD HD• VOD Nickelodeon . 45 . 545 4 Court TV . 8, 271• Includes BASIC TV package OWN . 54 . 554 Oxygen . .53 . 553 4 COZI TV . .290 . • A&E . 36. 536 4 Paramount Network . .70 570 4 Decades . 289• AMC . 33 . 533 4 Pop . 10. 510 4 EWTN . 97,. 264• Animal Planet . 44 . 544 4 RIDE TV . 621. Fioptics TV . 100 . • BBC America . 267 . • 667 Stingray Ambiance . 520. FOX (WXIX) Cincinnati . 3. 503 4 BET . .72 572 4 Sundance TV . 227 . • 627 GEM Shopping . 243• 643 Big Ten Network . 206• 606 Syfy . 38. 538 4 Big Ten Overflow Network . 207• getTV . 292 . • TBS . .41 541 4 Bravo . 56 . 556 4 Grit . 242• TCM . .34 534 4 Cartoon Network . .46 546 4 Heroes & Icons . 270• Tennis Channel . .214 . • 614 CLEO TV . 629 HSN . 4. .504 . TLC . 57. 557 4 CMT . 74 . 574 4 INSP . 506 TNT . .40 540 4 CNBC . -

Comcast and Viacomcbs Announce “Skyshowtime,” a New Streaming Service to Launch in Select European Markets

Comcast and ViacomCBS Announce “SkyShowtime,” a New Streaming Service to Launch in Select European Markets August 18, 2021 Subscription Video on Demand Partnership to Include Premium and Original Content from Sky, NBCUniversal, Universal Pictures, Peacock, Paramount+, SHOWTIME®, Paramount Pictures, and Nickelodeon SkyShowtime to Roll Out in 20+ European Markets Including Spain, Portugal, The Nordics, Netherlands, Central and Eastern Europe starting in 2022 Press Assets Available HERE LONDON & NEW YORK & PHILADELPHIA--(BUSINESS WIRE)--Aug. 18, 2021-- Comcast Corporation (NASDAQ: CMCSA) and ViacomCBS Inc. (NASDAQ: VIAC, VIACA) today announced a partnership to launch a new subscription video on demand (SVOD) service in more than 20 European territories encompassing 90 million homes. SkyShowtime will bring together decades of direct-to-consumer experience and the very best entertainment, movies, and original series from the NBCUniversal, Sky and ViacomCBS portfolio of brands, including titles from SHOWTIME, Nickelodeon, Paramount Pictures, Paramount+ Originals, Sky Studios, Universal Pictures, and Peacock. The service’s vast slate will span all genres and audience categories, including scripted dramas, kids and family, key franchises, premiere movies, local programming, documentaries/factual content, and more. This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20210818005282/en/ The new SVOD service is expected to launch in 2022, subject to regulatory approval. It will ultimately be available to consumers in Albania, Andorra, Bosnia and Herzegovina, Bulgaria, Croatia, Czech Republic, Denmark, Finland, Hungary, Kosovo, Montenegro, Netherlands, North Macedonia, Norway, Poland, Portugal, Romania, Serbia, Slovakia, Slovenia, Spain, and Sweden. SkyShowtime will be home to 10,000+ hours of entertainment from some of the top creators and most beloved content from around the world. -

2021 Channel Lineup

Hispanic MiVisión Lite 800 History en Español 824 Music Choice Pop Tropicales 780 FXX 801 WGBO Univision 825 Discovery Familia 784 De Pelicula Clasico 802 WSNS Telemundo 826 Sorpresa 785 De Pelicula 803 WXFT UniMas 827 Ultra Familia 786 Cine Mexicano 804 Galavision 828 Disney XD (SAP) 787 Cine Latino 806 Fox Deportes 829 Boomerang (SAP) 788 Tr3s 809 TBN Enlace 830 Semillitas 789 Bandamax 810 EWTN en Español 831 Tele El Salvador 790 Telehit 811 Mundo FOX 832 TV Dominicana 791 Ritmoson 813 CentroAmerica TV 833 Pasiones 792 Tele Novela 814 WCHU 793 FOX Life 815 WAPA America MiVisión Plus 794 NBC Univsero 816 Telemicro Internacional Includes ALL MiVisión Lite channels PLUS 795 Discovery en Español 817 Caracol TV 369 (805 HD) ESPN Deportes 796 TVN Chile 818 Ecuavisa Internacional 808 beIN Sport 797 TV Española 821 Music Choice Pop Latino 820 Gran Cine 798 CNN en Español 822 Music Choice Mexicana 834 Viendo Movies 799 Nat Geo Mundo 823 Music Choice Urbana RCN On Demand With RCN On Demand get unlimited access to thousands of hours of popular content whenever you want - included FREE* with your Streaming TV subscription! We’ve added 5x the capacity to RCN On Demand, so you never have to miss a moment. Get thousands of hours of programming including more of your favorites from Fox, NBC, A&E, Disney Jr. and more with over 40 new networks. The best part is it’s all included with your RCN Streaming TV!* It’s easy as 1-2-3: 1. Press the VOD or On Demand button on your RCN remote. -

Channel Lineup November 2020

MyTV CHANNEL LINEUP NOVEMBER 2020 ON ON ON SD HD• DEMAND SD HD• DEMAND SD HD• DEMAND Foundation Pack My64 (WSTR) Cincinnati 11 511 Kids & Family Music Choice Channels 300-349• 4 A&E 36 536 4 Boomerang 284• 4 National Geographic 43 543 4 ABC (WCPO) Cincinnati 9 509 4 Cartoon Network 46 546 4 NBC (WLWT) Cincinnati 5 505 4 AntennaTV 257• Discovery Family 48 548 4 Newsy 508 Big Ten Network 206• 606 Disney 49 549 4 NKU 818+ Big Ten Overflow Network 207• Disney Junior 50 550 4 PBS Dayton/Community Access 16 Boone County 831+ Disney XD 282• 682 4 Quest 298• Bounce 258• Nickelodeon 45 545 4 QVC 15 515 Bulldog 246• Nick Jr. 286• 686 4 QVC2 518 Campbell County 805-807, 810-812+ Nicktoons 285• QVC3 637 CBS (WKRC) Cincinnati 12 512 4 Teen Nick 287• 4 Shop HQ 245• Cincinnati 800-804, 860 TV Land 35 535 4 Shop LC 243• 643 Circle 253• Universal Kids 283• 4 SonLife 265• CLEO TV 629 Stadium Channel 260• CNN 67 567 4 Movies & Series Start TV 299• COZI TV 290• Stingray Ambiance 520 MGM HD 628 C-SPAN 21 4 Sundance TV 227• 627 STARZEncore Family 479 C-SPAN 2 22 4 TBN 18 STARZEncore 482 DayStar 262• 4 TBNK 815-817, 819-821+ STARZEncore West 483 Decades 289• 4 The CW 17 517 STARZEncore Westerns 484 Discovery Channel 32 532 4 4 The Lebanon Channel/WKET2 6 STARZEncore Westerns West 485 ESPN 28 528 4 4 The Word Network 263• STARZEncore Classic 486 ESPN2 29 529 4 This TV 259• STARZEncore Classic West 487 EWTN 264•/97 4 TLC 57 557 4 STARZEncore Suspense 488 FidoTV 688 4 Travel Channel 59 559 4 STARZEncore Suspense West 489 Florence 822+ 4 Waycross 850-855+ STARZEncore Black 490 Food Network 62 562 4 4 WCET (PBS) Cincinnati 13 513 STARZEncore Black West 491 FOX (WXIX) Cincinnati 3 503 4 4 WCET Arts 20 STARZEncore Action 492 FOX Business Network 269• 669 4 WCET Create 7 STARZEncore Action West 493 FOX News 66 566 4 WKET/Community Access 96 596 FLiX 432 FOX Sports 1 25 525 4 WKET1 294• Showtime 435 FOX Sports 2 219• 619 4 WKET2 295• Showtime West 436 FOX Sports Ohio 27 527 4 WPTO (PBS) Oxford 14 Showtime TOO 437 FOX Sports Ohio Alt Feed 601 4 Z Living 636 Showtime TOO West 438 Ft. -

Washington Residential Channel Guide

Fiber TV Washington Residential Channel Lineup Effective Date August 2021 Welcome to Fiber TV Here’s your complete list of available channels to help you decide what to watch. On-Demand With Fiber TV, every night is a movie night. Choose from thousands of On Demand movies, TV shows, concerts and sports. TV On-The-Go For TV on-the-go, check out apps available from our entertainment partners featuring live and on-demand content. Browse the list of participating entertainment partners here: https://ziplyfiber.com/resources/tveverywhere. Simply sign into an entertainment partner’s app with your Ziply Fiber username and password. Have questions? We have answers … When you have a question or need help with your Fiber TV Service, simply visit Help on your TV, or visit www.ziplyfiber.com/helpcenter for a complete library of How Tos. 2 Quick Reference Channels are grouped by programming categories in the following ranges: Local Channels 1–49 SD, 501–549 HD Local Plus Channels 460–499 SD Local Public/Education/Government (varies by location) 15–47 SD Entertainment 50–69 SD, 550–569 HD Sports 70–99 & 300–319 SD, 570–599 HD News 100–119 SD, 600–619 HD Info & Education 120–139 SD, 620–639 HD Home & Leisure/Marketplace 140–179 SD, 640–679 HD Pop Culture 180–199 SD, 680–699 HD Music 210–229 SD, 710–729 HD Movies/Family 230–249 SD, 730–749 HD Kids 250–269 SD, 780–789 HD People & Culture 270–279 SD Religion 280–299 SD Premium Movies 340–449 SD, 840–949 HD Pay Per View/Subscription Sports 1000–1499 Spanish Language 1500-1749 International 1750-1799 Digital Music** 1800–1900 3 Fiber TV Select Kong TV Bounce 466 Included with all Fiber TV KSTW Grit 481 packages.