An Unsupervised Method for the Extraction of Propositional Information from Text

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

THE ROGER FEDERER STORY Quest for Perfection

THE ROGER FEDERER STORY Quest For Perfection RENÉ STAUFFER THE ROGER FEDERER STORY Quest For Perfection RENÉ STAUFFER New Chapter Press Cover and interior design: Emily Brackett, Visible Logic Originally published in Germany under the title “Das Tennis-Genie” by Pendo Verlag. © Pendo Verlag GmbH & Co. KG, Munich and Zurich, 2006 Published across the world in English by New Chapter Press, www.newchapterpressonline.com ISBN 094-2257-391 978-094-2257-397 Printed in the United States of America Contents From The Author . v Prologue: Encounter with a 15-year-old...................ix Introduction: No One Expected Him....................xiv PART I From Kempton Park to Basel . .3 A Boy Discovers Tennis . .8 Homesickness in Ecublens ............................14 The Best of All Juniors . .21 A Newcomer Climbs to the Top ........................30 New Coach, New Ways . 35 Olympic Experiences . 40 No Pain, No Gain . 44 Uproar at the Davis Cup . .49 The Man Who Beat Sampras . 53 The Taxi Driver of Biel . 57 Visit to the Top Ten . .60 Drama in South Africa...............................65 Red Dawn in China .................................70 The Grand Slam Block ...............................74 A Magic Sunday ....................................79 A Cow for the Victor . 86 Reaching for the Stars . .91 Duels in Texas . .95 An Abrupt End ....................................100 The Glittering Crowning . 104 No. 1 . .109 Samson’s Return . 116 New York, New York . .122 Setting Records Around the World.....................125 The Other Australian ...............................130 A True Champion..................................137 Fresh Tracks on Clay . .142 Three Men at the Champions Dinner . 146 An Evening in Flushing Meadows . .150 The Savior of Shanghai..............................155 Chasing Ghosts . .160 A Rivalry Is Born . -

The Championships 2002 Gentlemen's Singles Winner: L

The Championships 2002 Gentlemen's Singles Winner: L. Hewitt [1] 6/1 6/3 6/2 First Round Second Round Third Round Fourth Round Quarter-Finals Semi-Finals Final 1. Lleyton Hewitt [1]................................(AUS) 2. Jonas Bjorkman................................... (SWE) L. Hewitt [1]..................... 6/4 7/5 6/1 L. Hewitt [1] (Q) 3. Gregory Carraz..................................... (FRA) ....................6/4 7/6(5) 6/2 4. Cecil Mamiit.......................................... (USA) G. Carraz................6/2 6/4 6/7(5) 7/5 L. Hewitt [1] 5. Julian Knowle........................................(AUT) .........................6/2 6/1 6/3 6. Michael Llodra.......................................(FRA) J. Knowle.............. 3/6 6/4 6/3 3/6 6/3 J. Knowle (WC) 7. Alan MacKin......................................... (GBR) .........................6/3 6/4 6/3 8. Jarkko Nieminen [32]........................... (FIN) J. Nieminen [32]..........7/6(5) 6/3 6/3 L. Hewitt [1] 9. Gaston Gaudio [24]............................ (ARG) .........................6/3 6/3 7/5 (Q) 10. Juan-Pablo Guzman.............................(ARG) G. Gaudio [24]................. 6/3 6/4 6/3 M. Youzhny (LL) 11. Brian Vahaly......................................... (USA) ........6/0 1/6 7/6(2) 5/7 6/4 12. Mikhail Youzhny................................... (RUS) M. Youzhny.................6/3 1/6 6/3 6/2 M. Youzhny 13. Jose Acasuso....................................... (ARG) ...................6/2 1/6 6/3 6/3 14. Marc Rosset........................................... (SUI) M. Rosset..................... 6/3 7/6(4) 6/1 N. Escude [16] (WC) 15. Alex Bogdanovic...................................(GBR) ...................6/2 5/7 7/5 6/4 16. Nicolas Escude [16]............................ (FRA) N. Escude [16]........... 4/6 6/4 6/4 6/4 17. Juan Carlos Ferrero [9]...................... (ESP) 18. -

Best of N Contests: Implications of Simpson's Paradox in Tennis Benjamin Wright

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2012 Best of N Contests: Implications of Simpson's Paradox in Tennis Benjamin Wright Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY COLLEGE OF EDUCATION BEST OF N CONTESTS: IMPLICATIONS OF SIMPSON’S PARADOX IN TENNIS By BENJAMIN WRIGHT A thesis submitted to the Department of Sport Management in partial fulfillment of the requirements for the degree of Master of Science Degree Awarded: Summer Semester, 2012 Benjamin Wright defended this thesis on June 28, 2012. The members of the supervisory committee were: Ryan Rodenberg Professor Directing Thesis Yu Kyoum Kim Committee Member Michael Mondello Committee Member The Graduate School has verified and approved the above-named committee members, and certifies that the thesis has been approved in accordance with university requirements. ii ACKNOWLEDGEMENTS I would like to thank my parents, Bill and Donna Wright, for their support throughout my life. I also greatly appreciate their unquestioned support in my choice to further my education in obtaining a graduate degree. Both have assisted in making this thesis the best paper it can be throughout the editing process and I am indebted to them for this. Next, I would like to thank my major professor, Dr. Ryan Rodenberg, for his great contributions to not only this thesis but also my time in the Florida State University Sport Management Masters program. Working closely with Dr. Rodenberg on this thesis and other projects has been an excellent experience. -

OLYMPIC GAMES - Men - Singles Date: 23 Jul 1996 - 03 Aug 1996 Prize Money($): Code: OL-ATLA-96 Draw: 64 Surface: H (O) Nation: U.S.A

OLYMPIC GAMES - Men - Singles Date: 23 Jul 1996 - 03 Aug 1996 Prize Money($): Code: OL-ATLA-96 Draw: 64 Surface: H (O) Nation: U.S.A. Category: OL Surface Type: Plexipave Venue: Atlanta, GA Grade: 7.01 1 1 Andre AGASSI (USA) A.AGASSI (1) 2 Jonas BJORKMAN (SWE) 7-6(6) 7-6(5) A.AGASSI (1) 3 Karol KUCERA (SVK) K.KUCERA 6-4 6-4 WC 4 Dmitriy TOMASHEVICH (UZB) 6-3 2-6 6-0 A.AGASSI (1) WC 5 Dinu-Mihai PESCARIU (ROU) O.ORTIZ (A) 2-6 6-4 6-2 A 6 Oscar ORTIZ (MEX) 6-2 6-2 A.GAUDENZI 7 Andrea GAUDENZI (ITA) A.GAUDENZI 6-1 7-6(5) 15 8 Carlos COSTA (ESP) 6-3 6-2 A.AGASSI (1) 9 9 Jan SIEMERINK (NED) T.WOODBRIDGE 7-5 4-6 7-5 10 Todd WOODBRIDGE (AUS) 6-2 6-4 T.WOODBRIDGE 11 Shuzo MATSUOKA (JPN) T.HENMAN 7-6(6) 7-6(5) 12 Tim HENMAN (GBR) 7-6(4) 6-3 W.FERREIRA (5) 13 Byron BLACK (ZIM) B.BLACK 7-6(3) 7-6(5) A 14 Guillaume RAOUX (FRA) 6-3 3-6 6-2 W.FERREIRA (5) WC 15 Gaston ETLIS (ARG) W.FERREIRA (5) 6-2 7-5 5 16 Wayne FERREIRA (RSA) 6-4 6-3 A.AGASSI (1) 3 17 Thomas ENQVIST (SWE) T.ENQVIST (3) 7-6(5) 6-3 A 18 Marc-Kevin GOELLNER (GER) 7-6(4) 4-6 6-4 T.ENQVIST (3) WC 19 Sargis SARGSIAN (ARM) S.SARGSIAN (WC) 4-6 7-6(2) 6-4 WC 20 Daniel NESTOR (CAN) 6-4 6-4 L.PAES (WC) A 21 Nicolas PEREIRA (VEN) N.PEREIRA (A) 7-5 7-6(3) 22 Hernan GUMY (ARG) 6-4 6-0 L.PAES (WC) WC 23 Leander PAES (IND) L.PAES (WC) 6-2 6-3 11A 24 Richey RENEBERG (USA) 6-7(2) 7-6(7) 1-0 RET L.PAES (WC) 14 25 Renzo FURLAN (ITA) R.FURLAN (14) 6-1 7-5 26 Jiri NOVAK (CZE) 4-6 6-4 6-3 R.FURLAN (14) WC 27 Luis MOREJON (ECU) M.FILIPPINI (A) 7-5 6-2 A 28 Marcelo FILIPPINI (URU) 6-7(3) 7-5 6-1 R.FURLAN -

DELRAY BEACH ATP 250 SINGLES SEEDS (Thru 2020)

(DELRAY BEACH ATP 250 SINGLES SEEDS (thru 2020 2020 1. Nick Kyrgios WD (Withdrew before tournament) 2. Milos Raonic SF 3. Taylor Fritz 1R 4. Reilly Opelka W 5. John Millman 1R 6. Ugo Humbert SF 7. Adrian Mannarino 1R 8. Radu Albot 1R 2019 1. Juan Martin del Potro QF (Lost to Mackenzie McDonald) 2. John Isner SF 3. Frances Tiafoe 1R 4. Steve Johnson QF 5. John Millman 1R 6. Andreas Seppi QF 7. Taylor Fritz 1R 8. Adrian Mannarino QF 2018 1. Jack Sock 2R (Lost to Reilly Opelka) 2. Juan Martin del Potro 2R 3. Kevin Anderson WD 4. Sam Querrey 1R 5. Nick Kyrgios WD 6. John Isner 2R 7. Adrian Mannarino WD 8. Hyeon Chung QF 9. Milos Raonic 2R 2017 1. Milos Raonic F (Lost to Jack Sock) 2. Ivo Karlovic 1R 3. Jack Sock W 4. Sam Querrey QF 5. Steve Johnson QF 6. Bernard Tomic 1R 7. Juan Martin del Potro SF 8. Kyle Edmund QF 2016 1. Kevin Anderson 1R (Lost to Austin Krajicek) 2. Bernard Tomic 1R 3. Ivo Karlovic 1R 4. Grigor Dimitrov SF 5. Jeremy Chardy QF 6. Steve Johnson 2R 7. Donald Young 2R 8. Adrian Mannarino QF 2015 (Kevin Anderson 2R (Lost to Yen-Hsun Lu .1 2. John Isner 1R 3. Alexandr Dolgopolov QF 4. Ivo Karlovic W 5. Adrian Mannarino SF 6. Sam Querrey 1R 7. Steve Johnson QF 8. Viktor Troicki 2R 2014 1. Tommy Haas 2R (Lost to Q Steve Johnson) 2. John Isner SF 3. Kei Nishikori 2R 4. Kevin Anderson F 5. -

Una Mirada a La Prensa Deportiva Nacional: El Fenómeno Marcelo Ríos

UNIVERSIDAD DE CHILE FACULTAD DE CIENCIAS SOCIALES ESCUELA DE PERIODISMO Una Mirada a la Prensa Deportiva Nacional: El Fenómeno Marcelo Ríos. Rodrigo Andrés Miranda Sánchez. Memoria Para Optar al título de Periodista. Profesor Guía. Sergio Gilbert. Santiago, Marzo de 1999. I N D I C E Introducción 3 Orígenes Históricos 7 El Circuito ATP 9 Las Superficies del Tenis 12 Los Comienzos en Chile 15 Anita Lizana 18 Aparece Ayala 20 La Final contra Italia 24 La década de los ochenta 25 Zona de Promesas 28 El Repunte 32 Presente del Tenis Nacional 37 González - Massú 38 El Tenis en la Prensa (1993 - 1997) 41 Evolución 48 Una Mirada Revisionista 51 Conclusión: El Fenómeno Ríos 53 Anexos 62 Fuentes 90 pág. 2 I N T R O D U C C I O N Durante los últimos cinco años un nombre ha definido al tenis chileno. Y ese nombre no es otro que el de Marcelo Ríos. Desde sus primeros pasos en el profesionalismo, la figura del zurdo de Vitacura se ha convertido en uno de los ejes fundamentales de la prensa deportiva nacional, reactivando un segmento deportivo que estaba sumido en una de las crisis más grandes de los últimos años. Marcelo Ríos se ha transformado en una de las figuras noticiosas más importantes de nuestro país. Portada de diarios y revistas, ya sea deportivos o no; figura recurrente en los estelares de televisión; foco noticioso recurrente de noticieros; personaje de interés por su juego y personalidad ha contribuido enormemente a la difusión del deporte blanco en nuestro país que, por fin, cuenta con un ídolo que le permita recuperar el rumbo perdido. -

The Championships 2004 Gentlemen's Doubles Winners: J

The Championships 2004 Gentlemen's Doubles Winners: J. Bjorkman & T.A. Woodbridge [1] 6/1 6/4 4/6 6/4 First Round Second Round Third Round Quarter-Finals Semi-Finals Final 1. Jonas Bjorkman (SWE) & Todd Woodbridge (AUS) [1] J. Bjorkman & T.A. Woodbridge [1] J. Bjorkman & 2. Alberto Martin (ESP) & Albert Montanes (ESP).... ................................................6/1 6/2 T.A. Woodbridge [1] 3. Lucas Arnold (ARG) & Martin Garcia (ARG)......... L. Arnold & M. Garcia ...............................6/3 6/2 J. Bjorkman & 4. Jeff Coetzee (RSA) & Chris Haggard (RSA)......... .......................................7/6(3) 7/6(4) T.A. Woodbridge [1] 5. Rick Leach (USA) & Brian MacPhie (USA)........... R. Leach & B. MacPhie .........................3/6 6/4 9/7 R. Leach & (Q) 6. Rik De Voest (RSA) & Nathan Healey (AUS)........ ............................................7/6(4) 6/4 B. MacPhie 7. Tomas Cibulec (CZE) & Petr Pala (CZE).............. X. Malisse & O. Rochus [14] ..........6/7(3) 7/5 4/5 retired 8. Xavier Malisse (BEL) & Olivier Rochus (BEL) [14] ............................................6/1 7/6(2) 9. Gaston Etlis (ARG) & Martin Rodriguez (ARG) [9] G.A. Etlis & M. Rodriguez [9] 7/5 6/2 N. Davydenko & (WC)10. James Auckland (GBR) & Lee Childs (GBR)........ ................................................6/4 6/3 A. Fisher 11. David Ferrer (ESP) & Ruben Ramirez Hidalgo (ESP) N. Davydenko & A. Fisher ...........................7/5 7/6(2) N. Davydenko & 12. Nikolay Davydenko (RUS) & Ashley Fisher (AUS) ................................................6/2 6/1 A. Fisher (LL) 13. Devin Bowen (USA) & Tripp Phillips (USA)........... I. Flanagan & M. Lee ............6/7(4) 7/6(6) 15/13 M. Damm & J. Bjorkman & T.A. Woodbridge [1] (WC)14. -

Year-By-Year Seeds

DELRAY BEACH ATP 250 SINGLES SEEDS (thru 2021) 2021 1. Cristian Garin 2R 2. John Isner QF 3. Adrian Mannarino 2R 4. Hubert Hurkacz W 5. Tommy Paul 2R 6. Sam Querrey 2R 7. Pablo Andujar 1R 8. Frances Tiafoe QF 2020 1. Nick Kyrgios WD 2. Milos Raonic SF 3. Taylor Fritz 1R 4. Reilly Opelka W 5. John Millman 1R 6. Ugo Humbert SF 7. Adrian Mannarino 1R 8. Radu Albot 1R 2019 1. Juan Martin del Potro QF 2. John Isner SF 3. Frances Tiafoe 1R 4. Steve Johnson QF 5. John Millman 1R 6. Andreas Seppi QF 7. Taylor Fritz 1R 8. Adrian Mannarino QF 2018 1. Jack Sock 2R 2. Juan Martin del Potro 2R 3. Kevin Anderson WD 4. Sam Querrey 1R 5. Nick Kyrgios WD 6. John Isner 2R 7. Adrian Mannarino WD 8. Hyeon Chung QF 9. Milos Raonic 2R 2017 1. Milos Raonic F 2. Ivo Karlovic 1R 3. Jack Sock W 4. Sam Querrey QF 5. Steve Johnson QF 6. Bernard Tomic 1R 7. Juan Martin del Potro SF 8. Kyle Edmund QF 2016 1. Kevin Anderson 1R 2. Bernard Tomic 1R 3. Ivo Karlovic 1R 4. Grigor Dimitrov SF 5. Jeremy Chardy QF 6. Steve Johnson 2R 7. Donald Young 2R 8. Adrian Mannarino QF 2015 Kevin Anderson 2R .1 2. John Isner 1R 3. Alexandr Dolgopolov QF 4. Ivo Karlovic W 5. Adrian Mannarino SF 6. Sam Querrey 1R 7. Steve Johnson QF 8. Viktor Troicki 2R 2014 1. Tommy Haas 2R 2. John Isner SF 3. Kei Nishikori 2R 4. -

Evaluating Professional Tennis Players’ Career Performance: a Data Envelopment Analysis Approach

Munich Personal RePEc Archive Evaluating professional tennis players’ career performance: A Data Envelopment Analysis approach Halkos, George and Tzeremes, Nickolaos University of Thessaly, Department of Economics September 2012 Online at https://mpra.ub.uni-muenchen.de/41516/ MPRA Paper No. 41516, posted 24 Sep 2012 20:02 UTC Evaluating professional tennis players‘ career performance: A Data Envelopment Analysis approach By George E. Halkos and Nickolaos G. zeremes University of Thessaly, Department of Economics, Korai 43, 38333, Volos, Greece Abstract This paper by applying a sporting pro uction function evaluates 229 professional tennis players$ career performance. By applying Data Envelopment Analysis (DEA) the paper pro uces a unifie measure of nine performance in icators into a single career performance in ex. In a ition bootstrap techni,ues have been applie for bias correction an the construction of confi ence intervals of the efficiency estimates. The results reveal a highly competitive environment among the tennis players with thirty nine tennis players appearing to be efficient. Keywords: .rofessional tennis players/ Data Envelopment Analysis/ 0port pro uction function/ Bootstrapping. %EL classification: 114' 129' 383. 1 1. Introduction The economic theory behin sporting activity is base on the wor4 of Rottenberg (1952). 7owever, 0cully (1984) was the first to apply a pro uction function in or er to provi e empirical evi ence for the performance of baseball players. 0ince then several scholars have use frontier pro uction function in or er to measure teams$ performance an which has been escribe on the wor4s of 9a4, 7uang an 0iegfrie (1989), .orter an 0cully (1982) an :izel an D$Itri (1992, 1998). -

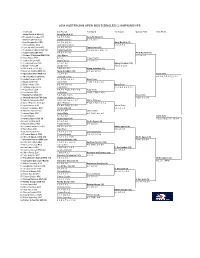

Australian Open 2002

2004 AUSTRALIAN OPEN MEN'S SINGLES CHAMPIONSHIPS 1st Round 2nd Round 3rd Round 4th Round Quarter Final Semi Finals 1 Andy Roddick USA (1) Andy Roddick (1) 2 Fernando Gonzalez CHI 6-2, 7-5, 7-6(4) Andy Roddick (1) 3 Bohdan Ulihrach CZE Bohdan Ulihrach 6-2, 6-2, 6-3 4 Lars Burgsmuller GER 6-4, 3-6, 6-2, 6-1 Andy Roddick (1) 5 Irakli Labadze GEO Juan Ignacio Chela 6-2, 6-0, 6-2 6 Juan Ignacio Chela ARG 6-4, 6-3, 3-6, 6-3 Taylor Dent (27) 7 Fernando Verdasco ESP (Q) Taylor Dent (27) 3-6, 6-4, 4-6, 7-6(4), 7-5 8 Taylor Dent USA (27) 6-2, 6-1, 2-1 ret. Andy Roddick (1) 9 Younes El Aynaoui MAR (18) Galo Blanco 6-1, 6-2, 6-3 10 Galo Blanco ESP 4-1 ret. Jurgen Melzer 11 Jurgen Melzer AUT Jurgen Melzer 6-3, 6-4, 6-3 12 Tomas Behrend GER 6-1, 6-2, 6-2 Sjeng Schalken (16) 13 David Ferrer ESP David Ferrer 7-6(1), 6-4, 6-1 14 Gilles Muller LUX (Q) 7-6(4), 6-1, 6-3 Sjeng Schalken (16) 15 Kenneth Carlsen DEN (Q) Sjeng Schalken (16) 6-3, 6-2, 5-7, 6-1 16 Sjeng Schalken NED (16) 7-5, 6-3, 6-1 Marat Safin 17 Nicolas Massu CHI (12) Jarkko Nieminen 2-6, 6-3, 7-5, 6-7(0), 6-4 18 Jarkko Nieminen FIN 6-1, 6-7(5), 6-2, 6-3 Marat Safin 19 Marat Safin RUS Marat Safin 7-6(5), 6-4, 4-6, 6-4 20 Brian Vahaly USA 6-2, 3-6, 6-3, 6-4 Marat Safin 21 Anthony Dupuis FRA Todd Martin 7-5, 1-6, 4-6, 6-0, 7-5 22 Todd Martin USA 4-6, 4-6, 7-6(5), 7-6(4), 6-3 Todd Martin 23 Ivo Karlovic CRO Ivo Karlovic 7-6(4), 7-6(4), 7-6(7) 24 Mardy Fish USA (21) 7-6, 7-6(5), 7-6(4) Marat Safin 25 Arnaud Clement FRA (30) Nikolay Davydenko 7-6(3), 6-3, 6-4 26 Nikolay Davydenko RUS 6-7(6), 4-6, -

EMIRATES ATP RANKINGS FACTS and FIGURES Atpworldtour.Com 2015 Year-End Emirates Atp Rankings

EMIRATES ATP RANKINGS FACTS AND FIGURES ATPWorldTour.com 2015 year-end emirates atp rankings As of November 30, 2015 1 Djokovic,Novak/SRB 64 Andujar,Pablo/ESP 127 Youzhny,Mikhail/RUS 190 Lapentti,Giovanni/ECU 2 Murray,Andy/GBR 65 Kukushkin,Mikhail/KAZ 128 Fratangelo,Bjorn/USA 191 Samper-Montana,Jordi/ESP 3 Federer,Roger/SUI 66 Haase,Robin/NED 129 Smith,John-Patrick/AUS 192 Volandri,Filippo/ITA 4 Wawrinka,Stan/SUI 67 Carreño Busta,Pablo/ESP 130 Coppejans,Kimmer/BEL 193 Przysiezny,Michal/POL 5 Nadal,Rafael/ESP 68 Lorenzi,Paolo/ITA 131 Carballes Baena,Roberto/ESP 194 Gojowczyk,Peter/GER 6 Berdych,Tomas/CZE 69 Kudla,Denis/USA 132 Soeda,Go/JPN 195 Karatsev,Aslan/RUS 7 Ferrer,David/ESP 70 Giraldo,Santiago/COL 133 Elias,Gastao/POR 196 Lamasine,Tristan/FRA 8 Nishikori,Kei/JPN 71 Mahut,Nicolas/FRA 134 Novikov,Dennis/USA 197 Stepanek,Radek/CZE 9 Gasquet,Richard/FRA 72 Cervantes,Iñigo/ESP 135 Donaldson,Jared/USA 198 Sarkissian,Alexander/USA 10 Tsonga,Jo-Wilfried/FRA 73 Almagro,Nicolas/ESP 136 Basic,Mirza/BIH 199 Moriya,Hiroki/JPN 11 Isner,John/USA 74 Pella,Guido/ARG 137 Ymer,Elias/SWE 200 Laaksonen,Henri/SUI 12 Anderson,Kevin/RSA 75 Muñoz-De La Nava,Daniel/ESP 138 Arguello,Facundo/ARG 201 Mertens,Yannick/BEL 13 Cilic,Marin/CRO 76 Lajovic,Dusan/SRB 139 Gombos,Norbert/SVK 202 McGee,James/IRL 14 Raonic,Milos/CAN 77 Lu,Yen-Hsun/TPE 140 Kravchuk,Konstantin/RUS 203 Yang,Tsung-Hua/TPE 15 Simon,Gilles/FRA 78 Pouille,Lucas/FRA 141 Bagnis,Facundo/ARG 204 Halys,Quentin/FRA 16 Goffin,David/BEL 79 Kuznetsov,Andrey/RUS 142 Gonzalez,Alejandro/COL 205 Bourgue,Mathias/FRA -

Andy Murray Articles 2006-2008|

ANDY MURRAY ARTICLES 2006-2008 | In 2006 Andy Murray began his voyage into World top 20 Tennis players. Starting with a tame Australian Open campaign which led to a strained relationship with the bullish British press. His first ATP tour title in San Jose beating two Former Grand Slam Champions along the way. While a rather upsetting coaching split and a trouncing of the World number 1 filled a year where progress was there for the tennis public to see but attitude and off court issues usually filled the journalists copy. 2006 Scrappy Murray prevails after sluggish start By Neil Harman, Tennis Correspondent Published at 12:00AM, January 4 2006 HIS first match of 2006 reassured Andy Murray’s followers yesterday that the element of his game that stands him in such stead as a professional of rare promise — his love of a scrap — is undimmed. From every standpoint, Murray’s defeat of Paolo Lorenzi, an Italian qualifier, in the first round of the Next Generation Adelaide International, was exactly the kind of thrash in the dark of which these first-week events are chock-full. He served seven aces, six double faults, broke serve seven times in 12 attempts, lost his own service three times and won the final five games. The one statistic to matter was a 3-6, 6-0, 6-2 victory and a place in the second round today against Tomas Berdych, a player being touted every bit as fiercely in his native Czech Republic as Murray is in Britain. Berdych’s last defeat was against Murray in the second round of the Davidoff Swiss Indoors in Basle in October, after which the Scot was almost buried in headlines about the passing of the British baton — he defeated Tim Henman in the first round — while Berdych went on to overhaul a star-studded field and lift the Paris Masters.