Time Complexity

Recommended publications

Time Complexity

Chapter 3: Time complexity

Use of time complexity makes it easy to estimate the running time of a program. Performing an accurate calculation of a program's operation time is a very labour-intensive process (it depends on the compiler and the type of computer or speed of the processor). Therefore, we will not make an accurate measurement; just a measurement of a certain order of magnitude.

Complexity can be viewed as the maximum number of primitive operations that a program may execute. Primitive operations are single additions, multiplications, assignments, etc. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant.

The number of dominant operations depends on the specific input data. We usually want to know how the performance time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

    1 def dominant(n):
    2     result = 0
    3     for i in range(n):
    4         result += 1
    5     return result

The operation in line 4 is dominant and will be executed n times. The complexity is described in Big-O notation: in this case O(n), linear complexity.

The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of O(n), the program may perform c · n operations, where c is a constant; however, it may not perform n^2 operations, since this involves a different order of magnitude of data.
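To make the idea of counting dominant operations concrete, the following minimal sketch counts how often the innermost statement runs in a single loop and in a doubly nested loop. The function names `count_linear` and `count_quadratic` are illustrative choices, not part of the excerpt.

```python
def count_linear(n):
    """Count executions of the dominant operation in a single loop: O(n)."""
    ops = 0
    for _ in range(n):
        ops += 1  # dominant operation, executed n times
    return ops


def count_quadratic(n):
    """Count executions of the dominant operation in a nested loop: O(n^2)."""
    ops = 0
    for _ in range(n):
        for _ in range(n):
            ops += 1  # dominant operation, executed n * n times
    return ops


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, count_linear(n), count_quadratic(n))
```

Multiplying n by 10 multiplies the first count by 10 and the second by 100, which is the difference in order of magnitude that Big-O notation captures.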

Quick Sort Algorithm

Quick Sort Algorithm

Song Qin, Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901

ABSTRACT

Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N element array. We evaluate the O(N log N) time complexity in the best case and O(N^2) in the worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION

Search engines rely heavily on sorting algorithms. When you search for a keyword online, the results are brought to you sorted by the importance of the web page. Bubble, Selection and Insertion Sort all have an O(N^2) time complexity that limits their usefulness to small numbers of elements, no more than a few thousand data points.

… each iteration. Repeat this on the rest of the unsorted region without the first element.

Bubble sort works as follows: keep passing through the list, exchanging adjacent elements if they are out of order; when no exchanges are required on some pass, the list is sorted.

Merge sort [4] has an O(N log N) time complexity. It divides the array into two subarrays, each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into a single sorted array.

In Bubble sort, Selection sort and Insertion sort, the O(N^2) time complexity limits the performance when N gets very big.
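The merge sort steps described in this excerpt (divide into two halves, sort each half recursively, merge the results) can be sketched as follows. This is a minimal illustration rather than the paper's own code; the names `merge_sort` and `merge` are assumptions made for the example.

```python
def merge(left, right):
    """Merge two already sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # At most one of the two tails is non-empty.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def merge_sort(items):
    """Return a new sorted list; the input is left unchanged."""
    if len(items) <= 1:  # sufficiently small: nothing to sort
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])   # conquer the first half
    right = merge_sort(items[mid:])  # conquer the second half
    return merge(left, right)        # combine


if __name__ == "__main__":
    print(merge_sort([5, 2, 9, 1, 5, 6]))
```

Each level of recursion does O(N) merging work and there are about log2 N levels, which is where the O(N log N) bound comes from.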

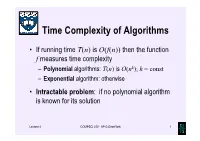

Time Complexity of Algorithms

Time Complexity of Algorithms

- If running time T(n) is O(f(n)) then the function f measures time complexity
  - Polynomial algorithms: T(n) is O(n^k); k = const
  - Exponential algorithm: otherwise
- Intractable problem: if no polynomial algorithm is known for its solution

Lecture 4, COMPSCI 220 - AP G Gimel'farb

Time complexity growth

Number of data items processed per:

| f(n) | 1 minute | 1 day | 1 year | 1 century |
|---|---|---|---|---|
| n | 10 | 14,400 | 5.26·10^6 | 5.26·10^8 |
| n log10 n | 10 | 3,997 | 883,895 | 6.72·10^7 |
| n^1.5 | 10 | 1,275 | 65,128 | 1.40·10^6 |
| n^2 | 10 | 379 | 7,252 | 72,522 |
| n^3 | 10 | 112 | 807 | 3,746 |
| 2^n | 10 | 20 | 29 | 35 |

Beware exponential complexity

- ☺ If a linear O(n) algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century
- ☻ If an exponential O(2^n) algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

- Example 1: Let algorithms A and B have running times T_A(n) = 20n ms and T_B(n) = 0.1 n log2 n ms
- In the "Big-Oh" sense, A is better than B…
- But: on which data volume can A outperform B? T_A(n) < T_B(n) if 20n < 0.1 n log2 n, or log2 n > 200, that is, when n > 2^200 ≈ 10^60!
- Thus, in all practical cases B is better than A…

Big-Oh vs. …
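The "items processed per day" figures in the growth table can be rechecked with a short search. The sketch below assumes, as the slides state, that every algorithm handles 10 items in one minute, and looks for the largest n whose cost f(n) fits into the enlarged time budget. The helper name `max_items` and the `growth` dictionary are illustrative, not taken from the lecture.

```python
import math


def max_items(f, minutes, base_items=10):
    """Largest n with f(n) <= f(base_items) * minutes.

    Assumes f is increasing and that base_items items take one minute.
    """
    budget = f(base_items) * minutes
    lo, hi = base_items, 2 * base_items
    while f(hi) <= budget:  # grow the upper bound until it no longer fits
        lo, hi = hi, 2 * hi
    while hi - lo > 1:      # binary search; f(lo) <= budget < f(hi)
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


growth = {
    "n": lambda n: n,
    "n log10 n": lambda n: n * math.log10(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
    "2^n": lambda n: 2 ** n,
}

MINUTES_PER_DAY = 24 * 60

if __name__ == "__main__":
    for name, f in growth.items():
        print(f"{name:10s} {max_items(f, MINUTES_PER_DAY)}")
```

A 1,440-fold increase in time buys a 1,440-fold increase in input size for the linear algorithm, but only ten additional items for the exponential one.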

A Short History of Computational Complexity

The Computational Complexity Column

by Lance Fortnow, NEC Laboratories America, 4 Independence Way, Princeton, NJ 08540, USA
http://www.neci.nj.nec.com/homepages/fortnow/beatcs

Every third year the Conference on Computational Complexity is held in Europe and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org

This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori.

A Short History of Computational Complexity

Lance Fortnow (NEC Research Institute, 4 Independence Way, Princeton, NJ 08540) and Steve Homer (Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215)

1 Introduction

It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation. Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.

Sorting Algorithm

Sorting algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) that require sorted lists to work correctly; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions:

1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order);
2. The output is a permutation, or reordering, of the input.

Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2004). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and lower bounds.

Classification

Sorting algorithms used in computer science are often classified by:

- Computational complexity (worst, average and best behaviour) of element comparisons in terms of the size of the list (n). For typical sorting algorithms good behavior is O(n log n) and bad behavior is O(n^2).
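The two formal conditions on a sorting algorithm's output translate directly into a checker. The sketch below is one possible way to write it; the function name `is_valid_sort` is an assumption made for this example, and the permutation test relies on the elements being hashable.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Check both conditions a sorting algorithm's output must satisfy."""
    # Condition 1: nondecreasing order (each element >= its predecessor).
    nondecreasing = all(a <= b for a, b in zip(output, output[1:]))
    # Condition 2: output is a permutation of the input
    # (same elements with the same multiplicities).
    permutation = Counter(original) == Counter(output)
    return nondecreasing and permutation


if __name__ == "__main__":
    data = [3, 1, 2, 3]
    print(is_valid_sort(data, sorted(data)))  # both conditions hold
    print(is_valid_sort(data, [1, 2, 3]))     # ordered, but an element is missing
```

Checking only the first condition is a common testing mistake: an algorithm that returns an empty list would pass it.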

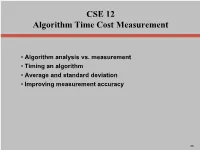

Algorithm Time Cost Measurement

CSE 12: Algorithm Time Cost Measurement

- Algorithm analysis vs. measurement
- Timing an algorithm
- Average and standard deviation
- Improving measurement accuracy

Introduction

- These three characteristics of programs are important:
  - robustness: a program's ability to spot exceptional conditions and deal with them or shut down gracefully
  - correctness: does the program do what it is "supposed to" do?
  - efficiency: all programs use resources (time, space, and energy); how can we measure efficiency so that we can compare algorithms?

Analysis and Measurement

- An algorithm's performance can be described by:
  - time complexity or cost: how long it takes to execute. In general, less time is better!
  - space complexity or cost: how much computer memory it uses. In general, less space is better!
  - energy complexity or cost: how much energy it uses. In general, less energy is better!
- Costs are usually given as functions of the size of the input to the algorithm
- A big instance of the problem will probably take more resources to solve than a small one, but how much more?

Figuring algorithm costs

- For a given algorithm, we would like to know the following as functions of n, the size of the problem:
  - T(n), the time cost of solving the problem
  - S(n), the space cost of solving the problem
  - E(n), the energy cost of solving the problem
- Two approaches:
  - We can analyze the written algorithm
  - Or we could implement the algorithm and run it and measure the time, memory, and energy usage

Asymptotic algorithm analysis

- Asymptotic …
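The measurement approach named in these slides (time an algorithm several times, then report the average and standard deviation) might look like the following in Python. This is a sketch under assumptions: the helper name `time_algorithm` and the choice of `sorted` as the algorithm under test are illustrative, and the course itself may use a different language and timer.

```python
import statistics
import time


def time_algorithm(func, arg, repeats=5):
    """Run func(arg) several times; return (mean, standard deviation) in seconds.

    repeats must be at least 2 so that a standard deviation is defined.
    """
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(arg)
        samples.append(time.perf_counter() - start)
    return statistics.mean(samples), statistics.stdev(samples)


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        data = list(range(n, 0, -1))  # reverse-ordered input of size n
        mean, stdev = time_algorithm(sorted, data)
        print(f"n={n:>7}  mean={mean:.6f}s  stdev={stdev:.6f}s")
```

A large standard deviation relative to the mean suggests that background activity is disturbing the measurement; more repetitions or larger inputs usually help.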

NP-Completeness General Problems, Input Size and Time Complexity

NP-Completeness

Reference: Computers and Intractability: A Guide to the Theory of NP-Completeness by Garey and Johnson, W.H. Freeman and Company, 1979.

Young, CS 331, D&A of Algo.

General Problems, Input Size and Time Complexity

- Time complexity of algorithms: polynomial time algorithm ("efficient algorithm") vs. exponential time algorithm ("inefficient algorithm")

| f(n) \ n | 10 | 30 | 50 |
|---|---|---|---|
| n | 0.00001 sec | 0.00003 sec | 0.00005 sec |
| n^5 | 0.1 sec | 24.3 sec | 5.2 mins |
| 2^n | 0.001 sec | 17.9 mins | 35.7 yrs |

"Hard" and "easy" Problems

- Sometimes the dividing line between "easy" and "hard" problems is a fine one. For example:
  - Find the shortest path in a graph from X to Y. (easy)
  - Find the longest path in a graph from X to Y (with no cycles). (hard)
- View another way, as "yes/no" problems:
  - Is there a simple path from X to Y with weight <= M? (easy)
  - Is there a simple path from X to Y with weight >= M? (hard)
  - The first problem can be solved in polynomial time.
  - All known algorithms for the second problem (could) take exponential time.
- Decision problem: the solution to the problem is "yes" or "no". Most optimization problems can be phrased as decision problems (still have the same time complexity).

Example: Assume we have a decision algorithm X for the 0/1 Knapsack problem with capacity M, i.e. Algorithm X returns "Yes" or "No" to the question "Is there a solution with profit P subject to knapsack capacity M?"
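The entries of the running-time table are consistent with a machine that executes one basic operation per microsecond. That rate is an assumption inferred from the numbers, not something the slide states; under it, the table can be recomputed as in this sketch, where `SECONDS_PER_OP`, `growth` and `running_time_seconds` are names invented for the example.

```python
SECONDS_PER_OP = 1e-6  # assumption: one operation per microsecond
SECONDS_PER_YEAR = 365 * 24 * 3600

growth = {
    "n": lambda n: n,
    "n^5": lambda n: n ** 5,
    "2^n": lambda n: 2 ** n,
}


def running_time_seconds(f, n):
    """Time in seconds to execute f(n) operations at the assumed rate."""
    return f(n) * SECONDS_PER_OP


if __name__ == "__main__":
    for name, f in growth.items():
        row = [running_time_seconds(f, n) for n in (10, 30, 50)]
        print(name, ["%.5g s" % t for t in row])
    years = running_time_seconds(growth["2^n"], 50) / SECONDS_PER_YEAR
    print("2^50 operations take about %.1f years" % years)
```

Raising n from 10 to 50 multiplies the polynomial n^5 cost by 3,125, while the exponential cost grows by a factor of 2^40, roughly a trillion.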

New Worst-Case Upper Bound for #2-SAT and #3-SAT with the Number of Clauses As the Parameter

Proceedings of the Twenty-Fourth AAAI Conference on Artificial Intelligence (AAAI-10)

New Worst-Case Upper Bound for #2-SAT and #3-SAT with the Number of Clauses as the Parameter

Junping Zhou (1, 2), Minghao Yin (2, 3)*, Chunguang Zhou (1)

1. College of Computer Science and Technology, Jilin University, Changchun, P. R. China, 130012
2. College of Computer, Northeast Normal University, Changchun, P. R. China, 130117
3. Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, Changchun, P. R. China 130012

Abstract

The rigorous theoretical analyses of algorithms for #SAT have been proposed in the literature. As we know, previous algorithms for solving #SAT have been analyzed only regarding the number of variables as the parameter. However, the time complexity for solving #SAT instances depends not only on the number of variables, but also on the number of clauses. Therefore, it is significant to exploit the time complexity from the other point of view, i.e. the number of clauses. In this paper, we present algorithms for …

… improvement from O(c^k) to O((c- )^k) may significantly increase the size of the problem being tractable.

Recently, tremendous efforts have been made on efficient #SAT algorithms with complexity analyses. By introducing independent clauses and combining formulas, Dubois (1991) presented a #SAT algorithm which ran in O(1.6180^n) for #2-SAT and O(1.8393^n) for #3-SAT, where n is the number of variables of a formula. Based on a more elaborate analysis of the relationship among the variables, Dahllof et al. …

Evaluation of Sorting Algorithms, Mathematical and Empirical Analysis of Sorting Algorithms

International Journal of Scientific & Engineering Research, Volume 8, Issue 5, May-2017, ISSN 2229-5518

Evaluation of Sorting Algorithms, Mathematical and Empirical Analysis of Sorting Algorithms

Sapram Choudaiah, P Chandu Chowdary, M Kavitha

ABSTRACT: Sorting is an important data structure in many real life applications. A number of sorting algorithms are in existence to date. This paper continues the earlier thought of evolutionary study of the sorting problem and sorting algorithms, concluded with the chronological list of early pioneers of the sorting problem or algorithms. Later in the study, a graphical method has been used to present an evolution of the sorting problem and sorting algorithms on the time line. An extensive analysis has been done, compared with the traditional mathematical methods of Bubble Sort, Selection Sort, Insertion Sort, Merge Sort, Quick Sort. Observations have been obtained on comparing with the existing approaches of all sorts. An "Empirical Analysis" consists of rigorous complexity analysis by various sorting algorithms, in which comparison and real swapping of all the variables are calculated. All algorithms were tested on random data of various ranges from small to large. It is an attempt to compare the performance of various sorting algorithms, with the aim of comparing their speed when sorting integer inputs. The empirical data obtained by using the program reveals that the Quick sort algorithm is fastest and Bubble sort is slowest.

Keywords: Bubble Sort, Insertion Sort, Quick Sort, Merge Sort, Selection Sort, Heap Sort, CPU Time.

Introduction

In spite of plentiful literature and research in sorting algorithmic domain there is mess found in documentation as far as credential concern². …

… more dimension to student for thinking⁴. Whereas, this thinking become a mark of respect to all our …
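The kind of empirical analysis this abstract describes, in which comparisons and swaps are counted while sorting, can be illustrated with an instrumented bubble sort. The sketch below is not the paper's program; the function name `bubble_sort_counted` and the early-exit variant of bubble sort are assumptions made for the example.

```python
import random


def bubble_sort_counted(items):
    """Bubble sort a copy of items; return (sorted_list, comparisons, swaps)."""
    data = list(items)
    comparisons = swaps = 0
    n = len(data)
    swapped = True
    while swapped:  # stop as soon as a full pass makes no exchange
        swapped = False
        for i in range(n - 1):
            comparisons += 1
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
                swaps += 1
                swapped = True
        n -= 1  # the largest remaining element is now in its final place
    return data, comparisons, swaps


if __name__ == "__main__":
    random.seed(0)  # fixed seed so repeated runs use the same data
    for size in (10, 100, 1000):
        values = [random.randint(0, 10_000) for _ in range(size)]
        _, comparisons, swaps = bubble_sort_counted(values)
        print(size, comparisons, swaps)
```

Counting operations instead of measuring wall-clock time gives results that do not depend on the machine, which makes them easier to compare against the O(N^2) prediction.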

Sorting Algorithm

Sorting algorithm

A sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) which require input data to be in sorted lists; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions:

1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order);
2. The output is a permutation (reordering) of the input.

Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2006). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and upper and lower bounds.

Classification

Sorting algorithms are often classified by:

- Computational complexity (worst, average and best behavior) of element comparisons in terms of the size of the list (n). For typical serial sorting algorithms good behavior is O(n log n), with parallel sort in O(log^2 n), and bad behavior is O(n^2).

The Maximum Clique Problem

The Maximum Clique Problem

Dam Thanh Phuong, Ngo Manh Tuong. November, 2012

Motivation

How to put as much left-over stuff as possible in a tasty meal before everything will go off?

Find the largest collection of food where everything goes together! Here, we have the choice:

Outline

1. Introduction
2. Algorithms
3. Applications
4. Conclusion

- Graph (G): a network of vertices (V(G)) and edges (E(G)).
- Graph Complement (Ḡ): the graph with the same vertex set as G but whose edge set consists of the edges not present in G.
- Complete Graph: every pair of vertices is connected by an edge.
- A Clique in an undirected graph G = (V, E) is a subset of the vertex set C ⊆ V, such that for every two vertices in C, there exists an edge connecting the two.
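The clique definition above can be checked mechanically: a vertex subset is a clique when every pair of its vertices is joined by an edge. The sketch below stores an undirected graph as a dictionary of neighbour sets and adds a brute-force search for a maximum clique. The names `is_clique` and `maximum_clique`, the graph representation, and the exhaustive search are assumptions for illustration, not the algorithms presented in the slides.

```python
from itertools import combinations


def is_clique(adjacency, vertices):
    """True if every pair of the given vertices is connected by an edge."""
    return all(v in adjacency[u] for u, v in combinations(vertices, 2))


def maximum_clique(adjacency):
    """Brute force: try subsets from largest to smallest (exponential time)."""
    nodes = list(adjacency)
    for size in range(len(nodes), 0, -1):
        for candidate in combinations(nodes, size):
            if is_clique(adjacency, candidate):
                return set(candidate)
    return set()


# Undirected example graph with edges a-b, a-c, b-c, c-d.
graph = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
}

if __name__ == "__main__":
    print(is_clique(graph, ["a", "b", "c"]))
    print(maximum_clique(graph))
```

The search examines up to 2^|V| subsets, so it is only practical for very small graphs; it serves here to make the definition concrete.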

A Tutorial on Clique Problems in Communications and Signal Processing

A Tutorial on Clique Problems in Communications and Signal Processing

Ahmed Douik, Student Member, IEEE, Hayssam Dahrouj, Senior Member, IEEE, Tareq Y. Al-Naffouri, Senior Member, IEEE, and Mohamed-Slim Alouini, Fellow, IEEE

Abstract: Since its first use by Euler on the problem of the seven bridges of Königsberg, graph theory has shown excellent abilities in solving and unveiling the properties of multiple discrete optimization problems. The study of the structure of some integer programs reveals equivalence with graph theory problems, making a large body of the literature readily available for solving and characterizing the complexity of these problems. This tutorial presents a framework for utilizing a particular graph theory problem, known as the clique problem, for solving communications and signal processing problems. In particular, the paper aims to illustrate the structural properties of integer programs that can be formulated as clique problems through multiple examples in communications and signal processing. To that end, the first part of the tutorial provides various optimal …

… be found in the following reference [4]. The textbooks by Bollobás [5] and Diestel [6] provide an in-depth investigation of modern graph theory tools including flows and connectivity, the coloring problem, random graphs, and trees.

Current applications of graph theory span several fields. Indeed, graph theory, as a part of discrete mathematics, is particularly helpful in solving discrete equations with a well-defined structure. Reference [7] provides multiple connections between graph theory problems and their combinatoric counterparts. Multiple tutorials on the applications of graph theory techniques to various problems are available in the literature, e.g., [8]–[15].