NP-Completeness General Problems, Input Size and Time Complexity

Recommended publications

Time Complexity

Chapter 3: Time complexity

Use of time complexity makes it easy to estimate the running time of a program. Performing an accurate calculation of a program's running time is very labour-intensive (it depends on the compiler and the type of computer or speed of the processor). Therefore, we will not make an accurate measurement; just a measurement of a certain order of magnitude.

Complexity can be viewed as the maximum number of primitive operations that a program may execute. Primitive operations are single additions, multiplications, assignments and so on. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant.

The number of dominant operations depends on the specific input data. We usually want to know how the running time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

    def dominant(n):
        result = 0
        for i in range(n):
            result += 1  # dominant operation: executed n times
        return result

The operation `result += 1` inside the loop is dominant and will be executed n times. The complexity is described in Big-O notation: in this case $O(n)$, linear complexity.

The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of $O(n)$, the program may perform $c \cdot n$ operations, where $c$ is a constant; however, it may not perform $n^2$ operations, since this involves a different order of magnitude.

Quick Sort Algorithm

Quick Sort Algorithm

Song Qin, Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901

ABSTRACT

Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N element array. We evaluate the $O(N \log N)$ time complexity in the best case and $O(N^2)$ in the worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION

Search engines rely heavily on sorting algorithms. When you search for a keyword online, the results are brought to you sorted by the importance of the web page. Bubble, Selection and Insertion Sort all have an $O(N^2)$ time complexity that limits their usefulness to a small number of elements, no more than a few thousand data points.

... each iteration. Repeat this on the rest of the unsorted region without the first element.

Bubble sort works as follows: keep passing through the list, exchanging adjacent elements if they are out of order; when no exchanges are required on some pass, the list is sorted.

Merge sort [4] has an $O(N \log N)$ time complexity. It divides the array into two subarrays each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into a single sorted array.

In Bubble sort, Selection sort and Insertion sort, the $O(N^2)$ time complexity limits the performance when N gets very big.
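The two descriptions above (bubble sort with its "no exchanges on a pass" stopping rule, and merge sort's divide, conquer and combine steps) can be sketched in Python roughly as follows. This is an illustrative sketch rather than the paper's own code; the function names `bubble_sort` and `merge_sort` are chosen here for the example.

```python
def bubble_sort(items):
    """Return a sorted copy; stop as soon as a full pass makes no exchange."""
    a = list(items)
    swapped = True
    while swapped:
        swapped = False
        for i in range(len(a) - 1):
            if a[i] > a[i + 1]:
                # Adjacent pair is out of order: exchange it.
                a[i], a[i + 1] = a[i + 1], a[i]
                swapped = True
    return a


def merge_sort(items):
    """Return a sorted copy using divide, conquer and combine."""
    a = list(items)
    if len(a) <= 1:
        # Sufficiently small: zero or one element is already sorted.
        return a
    mid = len(a) // 2
    left = merge_sort(a[:mid])    # conquer the first half
    right = merge_sort(a[mid:])   # conquer the second half
    # Combine: merge the two sorted halves into one sorted list.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Bubble sort may need up to N passes of up to N - 1 comparisons each, which is where the quadratic bound comes from, while merge sort halves the problem at every level and does linear work per level.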

Complexity Theory Lecture 9 Co-NP Co-NP-Complete

Complexity Theory, Lecture 9

Anuj Dawar, University of Cambridge Computer Laboratory, Easter Term 2010

http://www.cl.cam.ac.uk/teaching/0910/Complexity/

Anuj Dawar, May 14, 2010

co-NP

As co-NP is the collection of complements of languages in NP, and P is closed under complementation, co-NP can also be characterised as the collection of languages of the form:

$$L = \{x \mid \forall y\, (|y| < p(|x|) \rightarrow R'(x, y))\}$$

NP: the collection of languages with succinct certificates of membership.

co-NP: the collection of languages with succinct certificates of disqualification.

[Figure: diagram with regions labelled NP, co-NP and P]

Any of the situations is consistent with our present state of knowledge:

• P = NP = co-NP
• P = NP ∩ co-NP ≠ NP ≠ co-NP
• P ≠ NP ∩ co-NP = NP = co-NP
• P ≠ NP ∩ co-NP ≠ NP ≠ co-NP

co-NP-complete

VAL, the collection of Boolean expressions that are valid, is co-NP-complete.

Any language L that is the complement of an NP-complete language is co-NP-complete.

Any reduction of a language $L_1$ to $L_2$ is also a reduction of $\bar{L}_1$ (the complement of $L_1$) to $\bar{L}_2$ (the complement of $L_2$).

There is an easy reduction from the complement of SAT to VAL, namely the map that takes an expression to its negation.

VAL ∈ P ⇒ P = NP = co-NP

VAL ∈ NP ⇒ NP = co-NP

Prime Numbers

Consider the decision problem PRIME:

Primality

Another way of putting this is that Composite is in NP.
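The reduction from the complement of SAT to VAL can be checked on small cases by brute force. The sketch below assumes, purely for illustration, that a Boolean expression is represented as a Python function of its variables; the helper names `is_satisfiable`, `is_valid` and `negate` are not from the lecture. The checks themselves take exponential time; only the map `negate` plays the role of the polynomial-time reduction.

```python
from itertools import product


def is_satisfiable(formula, num_vars):
    """True if some truth assignment makes the formula true."""
    return any(formula(*values)
               for values in product([False, True], repeat=num_vars))


def is_valid(formula, num_vars):
    """True if every truth assignment makes the formula true."""
    return all(formula(*values)
               for values in product([False, True], repeat=num_vars))


def negate(formula):
    """The reduction map: send an expression to its negation."""
    return lambda *values: not formula(*values)


# An unsatisfiable expression: (x or y) and not x and not y.
contradiction = lambda x, y: (x or y) and (not x) and (not y)
# A satisfiable but not valid expression: x and not y.
contingent = lambda x, y: x and (not y)

for f in (contradiction, contingent):
    # f is in the complement of SAT exactly when negate(f) is in VAL.
    assert (not is_satisfiable(f, 2)) == is_valid(negate(f), 2)
```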

NP-Completeness (Chapter 8)

CSE 421 Algorithms: NP-Completeness (Chapter 8)

What can we feasibly compute?

Focus so far has been to give good algorithms for specific problems (and general techniques that help do this). Now shifting focus to problems where we think this is impossible. Sadly, there are many…

History

A Brief History of Ideas

• From Classical Greece, if not earlier, "logical thought" held to be a somewhat mystical ability
• Mid 1800's: Boolean Algebra and foundations of mathematical logic created possible "mechanical" underpinnings
• 1900: David Hilbert's famous speech outlines program: mechanize all of mathematics? http://mathworld.wolfram.com/HilbertsProblems.html
• 1930's: Gödel, Church, Turing, et al. prove it's impossible

More History

• 1930/40's: What is (is not) computable
• 1960/70's: What is (is not) feasibly computable
• Goal: a (largely) technology-independent theory of time required by algorithms
• Key modeling assumptions/approximations:
  • Asymptotic (Big-O), worst case is revealing
  • Polynomial, exponential time: qualitatively different

Polynomial Time

The class P

Definition: P = the set of (decision) problems solvable by computers in polynomial time, i.e., $T(n) = O(n^k)$ for some fixed k (independent of input). These problems are sometimes called tractable problems.

Examples: sorting, shortest path, MST, connectivity, RNA folding & other dynamic programming, flows & matching; i.e., most of this quarter (exceptions: Change-Making/Stamps, Knapsack, TSP)

Why "Polynomial"?

Point is not that $n^{2000}$ is a nice time bound, or that the differences among $n$ and $2n$ and $n^2$ are negligible. Rather, simple theoretical tools may not easily capture such differences, whereas exponentials are qualitatively different from polynomials and may be amenable to theoretical analysis.

If NP Languages Are Hard on the Worst-Case, Then It Is Easy to Find Their Hard Instances

IF NP LANGUAGES ARE HARD ON THE WORST-CASE, THEN IT IS EASY TO FIND THEIR HARD INSTANCES

Dan Gutfreund, Ronen Shaltiel, and Amnon Ta-Shma

Abstract. We prove that if $\mathrm{NP} \not\subseteq \mathrm{BPP}$, i.e., if SAT is worst-case hard, then for every probabilistic polynomial-time algorithm trying to decide SAT, there exists some polynomially samplable distribution that is hard for it. That is, the algorithm often errs on inputs from this distribution. This is the first worst-case to average-case reduction for NP of any kind. We stress however, that this does not mean that there exists one fixed samplable distribution that is hard for all probabilistic polynomial-time algorithms, which is a pre-requisite assumption needed for one-way functions and cryptography (even if not a sufficient assumption). Nevertheless, we do show that there is a fixed distribution on instances of NP-complete languages, that is samplable in quasi-polynomial time and is hard for all probabilistic polynomial-time algorithms (unless NP is easy in the worst case). Our results are based on the following lemma that may be of independent interest: Given the description of an efficient (probabilistic) algorithm that fails to solve SAT in the worst case, we can efficiently generate at most three Boolean formulae (of increasing lengths) such that the algorithm errs on at least one of them.

Keywords. Average-case complexity, Worst-case to average-case reductions, Foundations of cryptography, Pseudo classes

Subject classification. 68Q10 Modes of computation (nondeterministic, parallel, interactive, probabilistic, etc.), 68Q15 Complexity classes (hierarchies, relations among complexity classes, etc.), 68Q17 Computational difficulty of problems (lower bounds, completeness, difficulty of approximation, etc.), 94A60 Cryptography

Computational Complexity and Intractability: An Introduction to the Theory of NP (Chapter 9)

Computational Complexity and Intractability: An Introduction to the Theory of NP (Chapter 9)

Objectives

• Classify problems as tractable or intractable
• Define decision problems
• Define the class P
• Define nondeterministic algorithms
• Define the class NP
• Define polynomial transformations
• Define the class of NP-Complete

Input Size and Time Complexity

Time complexity of algorithms: polynomial time (efficient) vs. exponential time (inefficient)

| f(n) | n = 10 | n = 30 | n = 50 |
|---|---|---|---|
| $n$ | 0.00001 sec | 0.00003 sec | 0.00005 sec |
| $n^5$ | 0.1 sec | 24.3 sec | 5.2 mins |
| $2^n$ | 0.001 sec | 17.9 mins | 35.7 yrs |

"Hard" and "Easy" Problems

• "Easy" problems can be solved by polynomial-time algorithms: searching, sorting, Dijkstra's algorithm, matrix multiplication, all-pairs shortest path
• "Hard" problems have no known polynomial-time algorithm: 0/1 knapsack, traveling salesman
• Sometimes the dividing line between "easy" and "hard" problems is a fine one. For example:
  • Find the shortest path in a graph from X to Y (easy)
  • Find the longest path (with no cycles) in a graph from X to Y (hard)

"Hard" and "Easy" Problems (continued)

• Motivation: is it possible to efficiently solve "hard" problems? "Efficiently" means in polynomial time.
• Some problems provably have no efficient algorithm. For example, printing all permutations of n elements.
• However, for many problems we can neither prove that no efficient algorithm exists nor find one.

Traveling Salesperson Problem

• No algorithm has ever been developed with a worst-case time complexity better than exponential
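The running-time table can be reproduced with a few lines of Python. The sketch assumes that one basic operation takes one microsecond; the excerpt does not state this, but it is the cost that matches the entry of 0.00001 sec for f(n) = n at n = 10. The names `running_time_seconds` and `humanize` are hypothetical helpers for this example.

```python
MICROSECOND = 1e-6  # assumed cost of one basic operation, in seconds


def running_time_seconds(f, n):
    """Time to execute f(n) basic operations at the assumed cost."""
    return f(n) * MICROSECOND


def humanize(seconds):
    """Format a duration using the largest unit that fits."""
    if seconds < 60:
        return f"{seconds:g} sec"
    if seconds < 3600:
        return f"{seconds / 60:.1f} mins"
    if seconds < 86400:
        return f"{seconds / 3600:.1f} hours"
    if seconds < 365 * 86400:
        return f"{seconds / 86400:.1f} days"
    return f"{seconds / (365 * 86400):.1f} yrs"


growth_functions = {
    "n": lambda n: n,
    "n^5": lambda n: n ** 5,
    "2^n": lambda n: 2 ** n,
}

for name, f in growth_functions.items():
    row = [humanize(running_time_seconds(f, n)) for n in (10, 30, 50)]
    print(name, row)
```

Going from n = 30 to n = 50 multiplies the polynomial row by about 13, but multiplies the exponential row by about a million, which is the qualitative gap the slide is pointing at.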

NP-Completeness Part I

NP-Completeness Part I

Outline for Today

● Recap from Last Time
  ● Welcome back from break! Let's make sure we're all on the same page here.
● Polynomial-Time Reducibility
  ● Connecting problems together.
● NP-Completeness
  ● What are the hardest problems in NP?
● The Cook-Levin Theorem
  ● A concrete NP-complete problem.

Recap from Last Time

The Limits of Computability

[Figure: diagram of the classes R, RE and co-RE, with example languages labelled EQTM, LD, ADD, HALT, ATM and 0*1*]

The Limits of Efficient Computation

[Figure: diagram of the classes P, NP and R]

P and NP Refresher

● The class P consists of all problems solvable in deterministic polynomial time.
● The class NP consists of all problems solvable in nondeterministic polynomial time.
● Equivalently, NP consists of all problems for which there is a deterministic, polynomial-time verifier for the problem.

Reducibility

Maximum Matching

● Given an undirected graph G, a matching in G is a set of edges such that no two edges share an endpoint.
● A maximum matching is a matching with the largest number of edges.

[Figure: a maximum matching.]

Maximum Matching

● Jack Edmonds' paper “Paths, Trees, and Flowers” gives a polynomial-time algorithm for finding maximum matchings.
● (This is the same Edmonds as in “Cobham-Edmonds Thesis.”)
● Using this fact, what other problems can we solve?

Domino Tiling

Solving Domino Tiling

The Setup

● To determine whether you can place at least k dominoes on a crossword grid, do the following:
  ● Convert the grid into a graph: each empty cell is a node, and any two adjacent empty cells have an edge between them.
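The excerpt stops after the graph construction. A domino covers two adjacent empty cells, so a set of non-overlapping dominoes is exactly a matching in that graph, and at least k dominoes fit when a maximum matching has at least k edges. The sketch below builds the graph implicitly and computes a maximum matching. Two choices here are assumptions for illustration: the grid encoding (`'.'` for an empty cell, `'#'` for a blocked one), and the use of a simple augmenting-path method for bipartite graphs instead of Edmonds' general algorithm. The simpler method applies because a grid graph is bipartite under checkerboard colouring.

```python
def max_dominoes(grid):
    """Maximum number of non-overlapping dominoes on the empty cells.

    grid is a list of equal-length strings; '.' marks an empty cell and
    '#' a blocked cell (an encoding assumed for this sketch).
    """
    rows = len(grid)
    cols = len(grid[0]) if grid else 0

    def neighbours(r, c):
        # Edges of the graph: adjacent empty cells.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == '.':
                yield (nr, nc)

    match = {}  # odd-coloured cell -> even-coloured cell it is paired with

    def try_augment(cell, seen):
        # Search for an augmenting path starting at an even-coloured cell.
        for other in neighbours(*cell):
            if other in seen:
                continue
            seen.add(other)
            if other not in match or try_augment(match[other], seen):
                match[other] = cell
                return True
        return False

    count = 0
    for r in range(rows):
        for c in range(cols):
            # Even-coloured cells form one side of the bipartition.
            if grid[r][c] == '.' and (r + c) % 2 == 0:
                if try_augment((r, c), set()):
                    count += 1
    return count


def can_place(grid, k):
    """Decide whether at least k dominoes fit on the grid."""
    return max_dominoes(grid) >= k
```

For example, `can_place(["..", ".."], 2)` holds because the four cells of a 2 by 2 grid can be covered by two dominoes.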

Computability (Section 12.3) Computability

Computability (Section 12.3)

Computability

• Some problems cannot be solved by any machine/algorithm. To prove such statements we need to effectively describe all possible algorithms.
• Example (Turing Machines): Associate a Turing machine with each $n \in \mathbb{N}$ as follows:
  $n \leftrightarrow b(n)$ (the binary representation of n)
  $\leftrightarrow a(b(n))$ (b(n) split into 7-bit ASCII blocks, with leading 0's)
  $\leftrightarrow$ if a(b(n)) is the syntax of a TM then a(b(n)) else (0, a, a, S, halt) fi
• So we can effectively describe all possible Turing machines: $T_0, T_1, T_2, \ldots$

Continued

• Of course, we could use the same technique to list all possible instances of any computational model. For example, we can effectively list all possible Simple programs and we can effectively list all possible partial recursive functions.
• If we want to use the Church-Turing thesis, then we can effectively list all possible solutions (e.g., Turing machines) to every intuitively computable problem.

... Decidable.

• Is an arbitrary first-order wff valid? Undecidable and partially decidable.
• Does a DFA accept infinitely many strings? Decidable.
• Does a PDA accept a string s? Decidable.

Decision Problems

• A decision problem is a problem that can be phrased as a yes/no question. Such a problem is decidable if an algorithm exists to answer yes or no to each instance of the problem. Otherwise it is undecidable. A decision problem is partially decidable if an algorithm exists to halt with the answer yes to yes-instances of the problem, but may run forever if the answer is no.
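The numbering of Turing machines described above can be sketched in Python. The syntax check is left as a caller-supplied predicate, here called `is_tm_syntax`, because the excerpt does not define the concrete TM syntax; that parameter and the other names below are hypothetical. The default machine is the single instruction (0, a, a, S, halt) given in the text.

```python
DEFAULT_MACHINE = "(0,a,a,S,halt)"  # fallback machine named in the text


def ascii_blocks(n):
    """Compute a(b(n)): binary digits of n, padded with leading zeros to a
    multiple of 7 bits, then read as 7-bit ASCII characters."""
    bits = bin(n)[2:]                          # b(n)
    width = -(-len(bits) // 7) * 7             # round length up to 7k
    bits = bits.zfill(width)
    return "".join(chr(int(bits[i:i + 7], 2))
                   for i in range(0, width, 7))


def machine_for(n, is_tm_syntax):
    """Return the description of T_n under the numbering above."""
    text = ascii_blocks(n)
    return text if is_tm_syntax(text) else DEFAULT_MACHINE


def number_for(text):
    """Inverse direction: a number whose 7-bit blocks spell the text."""
    return int("".join(format(ord(ch), "07b") for ch in text), 2)


# Every natural number names some machine, and every syntactically valid
# description appears at the index computed by number_for.
n = number_for(DEFAULT_MACHINE)
assert ascii_blocks(n) == DEFAULT_MACHINE
```

Because every n is assigned either its own decoded text or the default machine, enumerating n = 0, 1, 2, ... lists all Turing machines, with repetitions.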

Homework 4 Solutions Uploaded 4:00Pm on Dec 6, 2017 Due: Monday Dec 4, 2017

CS3510 Design & Analysis of Algorithms, Section A

Homework 4 Solutions

Uploaded 4:00pm on Dec 6, 2017. Due: Monday Dec 4, 2017

This homework has a total of 3 problems on 4 pages. Solutions should be submitted to Gradescope before 3:00pm on Monday Dec 4. The problem set is marked out of 20; you can earn up to 21 = 1 + 8 + 7 + 5 points.

If you choose not to submit a typed write-up, please write neatly and legibly.

Collaboration is allowed/encouraged on problems; however, each student must independently complete their own write-up and list all collaborators. No credit will be given to solutions obtained verbatim from the Internet or other sources.

Modifications since version 0

1. 1c: (changed in version 2) reworded to showing NP-hardness.
2. 2b: (changed in version 1) added a hint.
3. 3b: (changed in version 1) added comment about the two loops' u variables being different due to scoping.

0. [1 point, only if all parts are completed]

(a) Submit your homework to Gradescope.
(b) Student id is the same as on T-square: it's the one with alphabet + digits, NOT the 9 digit number from student card.
(c) Pages for each question are separated correctly.
(d) Words on the scan are clearly readable.

1. (8 points) NP-Completeness

Recall that the SAT problem, or the Boolean Satisfiability problem, is defined as follows:

• Input: A CNF formula F having m clauses in n variables $x_1, x_2, \ldots, x_n$. There is no restriction on the number of variables in each clause.
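To make the input format concrete, here is a small sketch of one way to represent such a CNF formula and check an assignment against it. The encoding is an assumption for this example, not part of the homework: each clause is a list of nonzero integers, where `i` stands for $x_i$ and `-i` for its negation. Checking a given assignment takes time linear in the formula size, while the brute-force search tries up to $2^n$ assignments.

```python
from itertools import product


def satisfies(clauses, assignment):
    """True if the assignment (dict: variable index -> bool) makes every
    clause contain at least one true literal."""
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def brute_force_sat(clauses, n):
    """Return a satisfying assignment over x_1..x_n, or None if none exists."""
    for values in product([False, True], repeat=n):
        assignment = dict(zip(range(1, n + 1), values))
        if satisfies(clauses, assignment):
            return assignment
    return None


# F = (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
F = [[1, -2], [2, 3], [-1, -3]]
witness = brute_force_sat(F, 3)
assert witness is not None and satisfies(F, witness)
```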

CSE 6321 - Solutions to Problem Set 5

CSE 6321 - Solutions to Problem Set 5

1.

2. Solution:

$$\text{D-Q} = \{\langle A, P_0, P_1, \ldots, P_m, b, q_0, q_1, \ldots, q_m, r_1, \ldots, r_m, t\rangle \mid (\exists x \in \mathbb{R}^n)\ \tfrac{1}{2} x^T P_0 x + q_0^T x \le t,\ \tfrac{1}{2} x^T P_i x + q_i^T x + r_i \le 0,\ Ax = b\}.$$

We can show that the decision version of binary integer programming reduces to D-Q in polynomial time as follows: The constraint $x_i \in \{0, 1\}$ of binary integer programming is equivalent to the quadratic constraint (possible in D-Q) $x_i(x_i - 1) = 0$. The rest of the constraints in binary integer programming are linear and can be represented directly in D-Q.

More specifically, let $\langle A, b, c, k'\rangle$ be an instance of

$$\text{D-0-1-IP} = \{\langle A, b, c, k'\rangle \mid (\exists x \in \{0, 1\}^n)\ Ax \le b,\ c^T x \ge k'\}.$$

We map it to the following instance of D-Q: $P_0 = 0$, $q_0 = -c$, $t = -k'$ (so that $q_0^T x \le t$ expresses $c^T x \ge k'$), $k = 0$ (no linear equality constraint $Ax = b$), and then use constraints of the form $\tfrac{1}{2} x^T P_i x + q_i^T x + r_i \le 0$ to represent the constraints $x_i(x_i - 1) \ge 0$ and $x_i(x_i - 1) \le 0$ for $i = 1, \ldots, n$, and more constraints of the same form to represent the constraint $Ax \le b$ (using $P_i = 0$). Then $\langle A, b, c, k'\rangle \in \text{D-0-1-IP}$ iff the constructed instance of D-Q is in D-Q. The mapping is clearly polynomial-time computable.

(In an earlier statement of ps6, problem 1, I forgot to say that $r_1, \ldots, r_m \in \mathbb{Q}$ are also part of the input.)

3.
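The key step of the reduction, forcing each variable to be 0 or 1 with a pair of quadratic constraints, can be checked numerically. The sketch below uses plain Python lists and 0-based indices; the helper names are hypothetical. For variable i it builds one constraint with $P = 2 e_i e_i^T$, $q = -e_i$, $r = 0$, which reads $x_i(x_i - 1) \le 0$, and one with the signs flipped, which reads $x_i(x_i - 1) \ge 0$.

```python
def quad_value(P, q, r, x):
    """Evaluate (1/2) x^T P x + q^T x + r for list-based P, q and x."""
    n = len(x)
    quad = sum(x[i] * P[i][j] * x[j] for i in range(n) for j in range(n))
    lin = sum(q[i] * x[i] for i in range(n))
    return 0.5 * quad + lin + r


def binary_constraints(n, i):
    """Return two (P, q, r) triples that together force x_i in {0, 1}."""
    def build(sign):
        P = [[0.0] * n for _ in range(n)]
        P[i][i] = 2.0 * sign      # (1/2) * 2 * sign * x_i^2 = sign * x_i^2
        q = [0.0] * n
        q[i] = -1.0 * sign        # minus sign * x_i
        return P, q, 0.0
    # sign = +1 encodes x_i (x_i - 1) <= 0; sign = -1 encodes the reverse.
    return build(+1), build(-1)


def is_binary(x):
    """True if x satisfies every generated constraint '... <= 0'."""
    n = len(x)
    return all(
        quad_value(P, q, r, x) <= 0
        for i in range(n)
        for (P, q, r) in binary_constraints(n, i)
    )


assert is_binary([0, 1, 1])
assert not is_binary([0, 0.5, 1])
```

Each variable contributes two constraints of size quadratic in n, so the whole construction stays polynomial in the input size.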

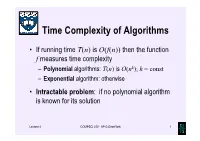

Time Complexity of Algorithms

Time Complexity of Algorithms

• If running time T(n) is O(f(n)) then the function f measures time complexity
  – Polynomial algorithms: T(n) is $O(n^k)$; k = const
  – Exponential algorithm: otherwise
• Intractable problem: if no polynomial algorithm is known for its solution

Lecture 4, COMPSCI 220 - AP G Gimel'farb

Time complexity growth

Number of data items processed per:

| f(n) | 1 minute | 1 day | 1 year | 1 century |
|---|---|---|---|---|
| $n$ | 10 | 14,400 | $5.26 \cdot 10^6$ | $5.26 \cdot 10^8$ |
| $n \log_{10} n$ | 10 | 3,997 | 883,895 | $6.72 \cdot 10^7$ |
| $n^{1.5}$ | 10 | 1,275 | 65,128 | $1.40 \cdot 10^6$ |
| $n^2$ | 10 | 379 | 7,252 | 72,522 |
| $n^3$ | 10 | 112 | 807 | 3,746 |
| $2^n$ | 10 | 20 | 29 | 35 |

Beware exponential complexity

☺ If a linear $O(n)$ algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century

☻ If an exponential $O(2^n)$ algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

• Example 1: Let algorithms A and B have running times $T_A(n) = 20n$ ms and $T_B(n) = 0.1 n \log_2 n$ ms
• In the "Big-Oh" sense, A is better than B…
• But: on which data volume can A outperform B? $T_A(n) < T_B(n)$ if $20n < 0.1 n \log_2 n$, or $\log_2 n > 200$, that is, when $n > 2^{200} \approx 10^{60}$!
• Thus, in all practical cases B is better than A…
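The growth table can be recomputed under one reading of how it was built: if 10 items take one minute, then f(10) operations fit in a minute, and in T minutes the algorithm can handle the largest n with f(n) at most f(10) times T. That reading, the 365.25-day year and the helper name `max_items` are assumptions of this sketch; the slides do not spell them out.

```python
import math

MINUTES_PER_DAY = 24 * 60
MINUTES_PER_YEAR = 365.25 * MINUTES_PER_DAY   # assumed year length
MINUTES_PER_CENTURY = 100 * MINUTES_PER_YEAR


def max_items(f, minutes, baseline=10):
    """Largest n with f(n) <= f(baseline) * minutes, for increasing f."""
    budget = f(baseline) * minutes
    lo, hi = baseline, baseline * 2
    # Grow hi until it no longer fits in the budget.
    while f(hi) <= budget:
        lo, hi = hi, hi * 2
    # Invariant: f(lo) <= budget < f(hi); shrink the gap by bisection.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


growth_functions = {
    "n": lambda n: n,
    "n log10 n": lambda n: n * math.log10(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
    "2^n": lambda n: 2 ** n,
}

for name, f in growth_functions.items():
    print(name, [max_items(f, t) for t in
                 (1, MINUTES_PER_DAY, MINUTES_PER_YEAR, MINUTES_PER_CENTURY)])
```

The exponential row barely moves because each extra item doubles the work: a century is about 52.6 million minutes, which is only around 25 doublings.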

A Short History of Computational Complexity

The Computational Complexity Column

by Lance FORTNOW

NEC Laboratories America, 4 Independence Way, Princeton, NJ 08540, USA

[email protected]

http://www.neci.nj.nec.com/homepages/fortnow/beatcs

Every third year the Conference on Computational Complexity is held in Europe and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org

This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori.

A Short History of Computational Complexity

Lance Fortnow, NEC Research Institute, 4 Independence Way, Princeton, NJ 08540

Steve Homer, Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215

1 Introduction

It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation.

Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.