Is the Space Complexity of Planted Clique Recovery the Same As That of Detection?

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Time Complexity

Chapter 3 Time complexity

Use of time complexity makes it easy to estimate the running time of a program. Performing an accurate calculation of a program’s operation time is a very labour-intensive process (it depends on the compiler and the type of computer or speed of the processor). Therefore, we will not make an accurate measurement; just a measurement of a certain order of magnitude. Complexity can be viewed as the maximum number of primitive operations that a program may execute. Regular operations are single additions, multiplications, assignments etc. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant. The number of dominant operations depends on the specific input data. We usually want to know how the performance time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

    1 def dominant(n):
    2     result = 0
    3     for i in xrange(n):
    4         result += 1
    5     return result

The operation in line 4 is dominant and will be executed n times. The complexity is described in Big-O notation: in this case O(n), i.e. linear complexity. The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of O(n), the program may perform c · n operations, where c is a constant; however, it may not perform n^2 operations, since this involves a different order of magnitude of data.
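To make the idea of counting dominant operations concrete, here is a minimal Python 3 sketch (the excerpt's listing uses Python 2's `xrange`). The function `dominant_quadratic` is a hypothetical contrast case that does not appear in the excerpt; it is included only to show what a different order of magnitude looks like.

```python
def dominant(n):
    """Linear loop: the increment below runs exactly n times."""
    result = 0
    for _ in range(n):
        result += 1  # dominant operation
    return result


def dominant_quadratic(n):
    """Hypothetical contrast case: nested loops run the increment n * n times."""
    result = 0
    for _ in range(n):
        for _ in range(n):
            result += 1  # dominant operation
    return result


if __name__ == "__main__":
    # Print how the operation counts grow with the input size.
    for n in (10, 100, 1000):
        print(n, dominant(n), dominant_quadratic(n))
```

Multiplying `n` by ten multiplies the first count by ten and the second by one hundred, which is the difference between O(n) and O(n^2) that the text describes.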

Quick Sort Algorithm Song Qin Dept

Quick Sort Algorithm. Song Qin, Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901.

ABSTRACT Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N element array. We evaluate the O(N log N) time complexity in best case and O(N^2) in worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION Search engine relies on sorting algorithm very much. When you search some key word online, the feedback information is brought to you sorted by the importance of the web page. Bubble, Selection and Insertion Sort, they all have an O(N^2) time complexity that limits its usefulness to small number of element no more than a few thousand data points.

... each iteration. Repeat this on the rest of the unsorted region without the first element. Bubble sort works as follows: keep passing through the list, exchanging adjacent element, if the list is out of order; when no exchanges are required on some pass, the list is sorted. Merge sort [4] has a O(N log N) time complexity. It divides the array into two subarrays each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into single sorted array. In Bubble sort, Selection sort and Insertion sort, the O(N^2) time complexity limits the performance when N gets very big.
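The divide-and-conquer idea behind Quick Sort can be sketched in a few lines of Python. The excerpt does not say how the paper approaches the best case, so the random pivot used here is an assumption: it is a common way to make the O(N^2) worst case unlikely, not necessarily the author's method. The name `quick_sort` and its `rng` parameter are illustrative.

```python
import random


def quick_sort(items, rng=random):
    """Return a new sorted list using a three-way partition around a random pivot."""
    if len(items) <= 1:
        return list(items)
    pivot = rng.choice(items)  # assumption: random pivot, not taken from the paper
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    # Conquer each part recursively, then concatenate the sorted parts.
    return quick_sort(smaller, rng) + equal + quick_sort(larger, rng)


if __name__ == "__main__":
    print(quick_sort([5, 2, 9, 1, 5, 6]))
```

This version trades memory for clarity by building new lists; an in-place partition would avoid the extra allocations.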

The Strongish Planted Clique Hypothesis and Its Consequences

The Strongish Planted Clique Hypothesis and Its Consequences Pasin Manurangsi Google Research, Mountain View, CA, USA [email protected] Aviad Rubinstein Stanford University, CA, USA [email protected] Tselil Schramm Stanford University, CA, USA [email protected] Abstract We formulate a new hardness assumption, the Strongish Planted Clique Hypothesis (SPCH), which postulates that any algorithm for planted clique must run in time n^{Ω(log n)} (so that the state-of-the-art running time of n^{O(log n)} is optimal up to a constant in the exponent). We provide two sets of applications of the new hypothesis. First, we show that SPCH implies (nearly) tight inapproximability results for the following well-studied problems in terms of the parameter k: Densest k-Subgraph, Smallest k-Edge Subgraph, Densest k-Subhypergraph, Steiner k-Forest, and Directed Steiner Network with k terminal pairs. For example, we show, under SPCH, that no polynomial time algorithm achieves o(k)-approximation for Densest k-Subgraph. This inapproximability ratio improves upon the previous best k^{o(1)} factor from (Chalermsook et al., FOCS 2017). Furthermore, our lower bounds hold even against fixed-parameter tractable algorithms with parameter k. Our second application focuses on the complexity of graph pattern detection. For both induced and non-induced graph pattern detection, we prove hardness results under SPCH, improving the running time lower bounds obtained by (Dalirrooyfard et al., STOC 2019) under the Exponential Time Hypothesis. 2012 ACM Subject Classification Theory of computation → Problems, reductions and completeness; Theory of computation → Fixed parameter tractability Keywords and phrases Planted Clique, Densest k-Subgraph, Hardness of Approximation Digital Object Identifier 10.4230/LIPIcs.ITCS.2021.10 Related Version A full version of the paper is available at https://arxiv.org/abs/2011.05555.

Computational Lower Bounds for Community Detection on Random Graphs

JMLR: Workshop and Conference Proceedings vol 40:1–30, 2015 Computational Lower Bounds for Community Detection on Random Graphs Bruce Hajek [email protected] Department of ECE, University of Illinois at Urbana-Champaign, Urbana, IL Yihong Wu [email protected] Department of ECE, University of Illinois at Urbana-Champaign, Urbana, IL Jiaming Xu [email protected] Department of Statistics, The Wharton School, University of Pennsylvania, Philadelphia, PA. Abstract This paper studies the problem of detecting the presence of a small dense community planted in a large Erdős-Rényi random graph G(N, q), where the edge probability within the community exceeds q by a constant factor. Assuming the hardness of the planted clique detection problem, we show that the computational complexity of detecting the community exhibits the following phase transition phenomenon: As the graph size N grows and the graph becomes sparser according to q = N^{-α}, there exists a critical value of α = 2/3, below which there exists a computationally intensive procedure that can detect far smaller communities than any computationally efficient procedure, and above which a linear-time procedure is statistically optimal. The results also lead to the average-case hardness results for recovering the dense community and approximating the densest K-subgraph. 1. Introduction Networks often exhibit community structure with many edges joining the vertices of the same community and relatively few edges joining vertices of different communities. Detecting communities in networks has received a large amount of attention and has found numerous applications in social and biological sciences, etc. (see, e.g., the exposition Fortunato (2010) and the references therein).
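The random graph model in this abstract can be illustrated with a small sampler. This is a minimal sketch under stated assumptions: the function name `sample_planted_graph` and its parameters `n`, `k`, `p`, `q` and `seed` are illustrative choices, with `p` standing for the within-community edge probability and `q` for the background probability. It only generates instances and does not implement any detection procedure from the paper.

```python
import itertools
import random


def sample_planted_graph(n, k, p, q, seed=0):
    """Sample a graph on n vertices with a planted community of size k.

    Pairs inside the community are joined with probability p; every other
    pair is joined with probability q. Returns (community, edges).
    """
    rng = random.Random(seed)
    community = set(rng.sample(range(n), k))
    edges = set()
    for u, v in itertools.combinations(range(n), 2):
        inside = u in community and v in community
        if rng.random() < (p if inside else q):
            edges.add((u, v))
    return community, edges


if __name__ == "__main__":
    community, edges = sample_planted_graph(n=200, k=20, p=0.5, q=0.05)
    inside = sum(1 for u, v in edges if u in community and v in community)
    print(len(edges), "edges in total,", inside, "inside the community")
```

Setting `p = 1` gives the planted clique model on which the paper's hardness assumption is based.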

Spectral Algorithms (Draft)

Spectral Algorithms (draft) Ravindran Kannan and Santosh Vempala March 3, 2013 Summary. Spectral methods refer to the use of eigenvalues, eigenvectors, singular values and singular vectors. They are widely used in Engineering, Applied Mathematics and Statistics. More recently, spectral methods have found numerous applications in Computer Science to "discrete" as well as "continuous" problems. This book describes modern applications of spectral methods, and novel algorithms for estimating spectral parameters. In the first part of the book, we present applications of spectral methods to problems from a variety of topics including combinatorial optimization, learning and clustering. The second part of the book is motivated by efficiency considerations. A feature of many modern applications is the massive amount of input data. While sophisticated algorithms for matrix computations have been developed over a century, a more recent development is algorithms based on "sampling on the fly" from massive matrices. Good estimates of singular values and low rank approximations of the whole matrix can be provably derived from a sample. Our main emphasis in the second part of the book is to present these sampling methods with rigorous error bounds. We also present recent extensions of spectral methods from matrices to tensors and their applications to some combinatorial optimization problems. Contents: Part I Applications. 1 The Best-Fit Subspace: 1.1 Singular Value Decomposition; 1.2 Algorithms for computing the SVD; 1.3 The k-means clustering problem; 1.4 Discussion. 2 Clustering Discrete Random Models: 2.1 Planted cliques in random graphs

Resource-Competitive Algorithms

Resource-Competitive Algorithms. Michael A. Bender (Department of Computer Science, Stony Brook University, Stony Brook, NY, USA, [email protected]); Varsha Dani (Department of Computer Science, University of New Mexico, Albuquerque, NM, USA, [email protected]); Jeremy T. Fineman (Department of Computer Science, Georgetown University, Washington, DC, USA, [email protected]); Seth Gilbert (Department of Computer Science, National University of Singapore, Singapore, [email protected]); Mahnush Movahedi (Department of Computer Science, University of New Mexico, Albuquerque, NM, USA, [email protected]); Seth Pettie (Electrical Eng. and Computer Science Dept., University of Michigan, Ann Arbor, MI, USA, [email protected]); Jared Saia (Department of Computer Science, University of New Mexico, Albuquerque, NM, USA, [email protected]); Maxwell Young (Computer Science and Engineering Dept., Mississippi State University, Starkville, MS, USA, [email protected]). Abstract The point of adversarial analysis is to model the worst-case performance of an algorithm. Unfortunately, this analysis may not always reflect performance in practice because the adversarial assumption can be overly pessimistic. In such cases, several techniques have been developed to provide a more refined understanding of how an algorithm performs, e.g., competitive analysis, parameterized analysis, and the theory of approximation algorithms. Here, we describe an analogous technique called resource competitiveness, tailored for distributed systems. Often there is an operational cost for adversarial behavior arising from bandwidth usage, computational power, energy limitations, etc. Modeling this cost provides some notion of how much disruption the adversary can inflict on the system. In parameterizing by this cost, we can design an algorithm with the following guarantee: if the adversary pays T, then the additional cost of the algorithm is some function of T.

A New Spectral Bound on the Clique Number of Graphs

A New Spectral Bound on the Clique Number of Graphs Samuel Rota Bulò and Marcello Pelillo Dipartimento di Informatica - University of Venice - Italy {srotabul,pelillo}@dsi.unive.it Abstract. Many computer vision and pattern recognition problems are intimately related to the maximum clique problem. Due to the intractability of this problem, besides the development of heuristics, a research direction consists in trying to find good bounds on the clique number of graphs. This paper introduces a new spectral upper bound on the clique number of graphs, which is obtained by exploiting an invariance of a continuous characterization of the clique number of graphs introduced by Motzkin and Straus. Experimental results on random graphs show the superiority of our bounds over the standard literature. 1 Introduction Many problems in computer vision and pattern recognition can be formulated in terms of finding a completely connected subgraph (i.e. a clique) of a given graph, having largest cardinality. This is called the maximum clique problem (MCP). One popular approach to object recognition, for example, involves matching an input scene against a stored model, each being abstracted in terms of a relational structure [1,2,3,4], and this problem, in turn, can be conveniently transformed into the equivalent problem of finding a maximum clique of the corresponding association graph. This idea was pioneered by Ambler et al. [5] and was later developed by Bolles and Cain [6] as part of their local-feature-focus method. Now, it has become a standard technique in computer vision, and has been employed in such diverse applications as stereo correspondence [7], point pattern matching [8], image sequence analysis [9].

A Polynomial-Time Approximation Algorithm for All-Terminal Network Reliability

A Polynomial-Time Approximation Algorithm for All-Terminal Network Reliability Heng Guo School of Informatics, University of Edinburgh, Informatics Forum, Edinburgh, EH8 9AB, United Kingdom. [email protected] https://orcid.org/0000-0001-8199-5596 Mark Jerrum School of Mathematical Sciences, Queen Mary, University of London, Mile End Road, London, E1 4NS, United Kingdom. [email protected] https://orcid.org/0000-0003-0863-7279 Abstract We give a fully polynomial-time randomized approximation scheme (FPRAS) for the all-terminal network reliability problem, which is to determine the probability that, in an undirected graph, assuming each edge fails independently, the remaining graph is still connected. Our main contribution is to confirm a conjecture by Gorodezky and Pak (Random Struct. Algorithms, 2014), that the expected running time of the “cluster-popping” algorithm in bi-directed graphs is bounded by a polynomial in the size of the input. 2012 ACM Subject Classification Theory of computation → Generating random combinatorial structures Keywords and phrases Approximate counting, Network Reliability, Sampling, Markov chains Digital Object Identifier 10.4230/LIPIcs.ICALP.2018.68 Related Version Also available at https://arxiv.org/abs/1709.08561. Acknowledgements We thank Mark Huber for bringing reference [8] to our attention, Mark Walters for the coupling idea leading to Lemma 12, and Igor Pak for comments on an earlier version. We also thank the organizers of the “LMS – EPSRC Durham Symposium on Markov Processes, Mixing Times and Cutoff”, where part of the work is carried out. 1 Introduction Network reliability problems are extensively studied #P-hard problems [5] (see also [3, 22, 18, 2]).
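The quantity defined in this abstract can be computed exactly on tiny graphs by enumerating every pattern of edge failures. The sketch below does only that: it is a brute-force illustration that takes time exponential in the number of edges, and it is neither the cluster-popping algorithm nor the FPRAS from the paper. The names `is_connected`, `all_terminal_reliability` and `p_fail` are illustrative.

```python
import itertools


def is_connected(num_vertices, edges):
    """Check connectivity of vertices 0..num_vertices-1 with a small union-find."""
    parent = list(range(num_vertices))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v in edges:
        parent[find(u)] = find(v)
    return len({find(v) for v in range(num_vertices)}) == 1


def all_terminal_reliability(num_vertices, edges, p_fail):
    """Probability that the graph stays connected when each edge fails
    independently with probability p_fail (exhaustive enumeration)."""
    total = 0.0
    for alive in itertools.product([False, True], repeat=len(edges)):
        surviving = [e for e, keep in zip(edges, alive) if keep]
        if is_connected(num_vertices, surviving):
            k = len(surviving)
            total += (1 - p_fail) ** k * p_fail ** (len(edges) - k)
    return total


if __name__ == "__main__":
    triangle = [(0, 1), (1, 2), (0, 2)]
    print(all_terminal_reliability(3, triangle, 0.5))
```

For a triangle the graph stays connected when at most one edge fails, so the result is (1 - p)^3 + 3p(1 - p)^2, which equals 0.5 at p = 0.5.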


Uniform Sampling Through the Lovász Local Lemma (arXiv:1611.01647v4 [cs.DS], 15 Jan 2019)

UNIFORM SAMPLING THROUGH THE LOVÁSZ LOCAL LEMMA HENG GUO, MARK JERRUM, AND JINGCHENG LIU Abstract. We propose a new algorithmic framework, called “partial rejection sampling”, to draw samples exactly from a product distribution, conditioned on none of a number of bad events occurring. Our framework builds new connections between the variable framework of the Lovász Local Lemma and some classical sampling algorithms such as the “cycle-popping” algorithm for rooted spanning trees. Among other applications, we discover new algorithms to sample satisfying assignments of k-CNF formulas with bounded variable occurrences. 1. Introduction The Lovász Local Lemma [9] is a classical gem in combinatorics that guarantees the existence of a perfect object that avoids all events deemed to be “bad”. The original proof is non-constructive but there has been great progress in the algorithmic aspects of the local lemma. After a long line of research [3, 2, 30, 8, 34, 37], the celebrated result by Moser and Tardos [31] gives efficient algorithms to find such a perfect object under conditions that match the Lovász Local Lemma in the so-called variable framework. However, it is natural to ask whether, under the same condition, we can also sample a perfect object uniformly at random instead of merely finding one. Roughly speaking, the resampling algorithm by Moser and Tardos [31] works as follows. We initialize all variables randomly. If bad events occur, then we arbitrarily choose a bad event and resample all the involved variables. Unfortunately, it is not hard to see that this algorithm can produce biased samples.
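The resampling loop described at the end of this excerpt can be sketched for CNF formulas, where a bad event is a violated clause. This is a minimal illustration under stated assumptions: clauses are lists of nonzero integers in the DIMACS style (`3` for variable 3, `-3` for its negation), and the names `violated`, `moser_tardos`, `seed` and `max_rounds` are illustrative. It finds some satisfying assignment and, as the excerpt notes, does not sample uniformly; it is not the paper's partial rejection sampling.

```python
import random


def violated(clause, assignment):
    """A clause is violated when every one of its literals is false."""
    return all(assignment[abs(lit)] != (lit > 0) for lit in clause)


def moser_tardos(num_vars, clauses, seed=0, max_rounds=100_000):
    """Resample the variables of a violated clause until none is violated."""
    rng = random.Random(seed)
    # Initialize all variables independently and uniformly at random.
    assignment = {v: rng.random() < 0.5 for v in range(1, num_vars + 1)}
    for _ in range(max_rounds):
        bad = [c for c in clauses if violated(c, assignment)]
        if not bad:
            return assignment
        # Arbitrary choice of a bad event: take the first violated clause
        # and resample every variable it involves.
        for lit in bad[0]:
            assignment[abs(lit)] = rng.random() < 0.5
    raise RuntimeError("no satisfying assignment found within max_rounds")


if __name__ == "__main__":
    formula = [[1, 2, 3], [-1, 2, 4], [-2, -3, 4], [1, -4, 5], [-1, -5, 3]]
    print(moser_tardos(5, formula))
```

The `max_rounds` guard is a practical safeguard added here; the efficiency guarantee cited in the text applies only under the conditions of the Lovász Local Lemma.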

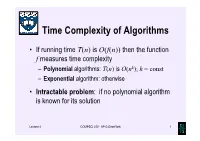

Time Complexity of Algorithms

Time Complexity of Algorithms

• If running time T(n) is O(f(n)) then the function f measures time complexity
  – Polynomial algorithms: T(n) is O(n^k); k = const
  – Exponential algorithm: otherwise
• Intractable problem: if no polynomial algorithm is known for its solution

Lecture 4 COMPSCI 220 - AP G Gimel'farb

Time complexity growth

Number of data items processed per:

| f(n) | 1 minute | 1 day | 1 year | 1 century |
|---|---|---|---|---|
| n | 10 | 14,400 | 5.26⋅10^6 | 5.26⋅10^8 |
| n log10 n | 10 | 3,997 | 883,895 | 6.72⋅10^7 |
| n^1.5 | 10 | 1,275 | 65,128 | 1.40⋅10^6 |
| n^2 | 10 | 379 | 7,252 | 72,522 |
| n^3 | 10 | 112 | 807 | 3,746 |
| 2^n | 10 | 20 | 29 | 35 |

Beware exponential complexity

☺ If a linear O(n) algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century
☻ If an exponential O(2^n) algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

• Example 1: Let algorithms A and B have running times T_A(n) = 20n ms and T_B(n) = 0.1 n log2 n ms
• In the “Big-Oh” sense, A is better than B…
• But: on which data volume can A outperform B? T_A(n) < T_B(n) if 20n < 0.1 n log2 n, or log2 n > 200, that is, when n > 2^200 ≈ 10^60!
• Thus, in all practical cases B is better than A…

Big-Oh vs.
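The one-day column of the growth table and the crossover point in Example 1 can be checked with a short calculation. This sketch assumes that each algorithm handles exactly 10 items in one minute, so in `minutes` minutes it handles the largest n with f(n) <= f(10) * minutes, rounded down. The helper names `max_items` and `a_beats_b` are illustrative and do not come from the slides.

```python
import math


def max_items(f, minutes, base_items=10):
    """Largest n with f(n) <= f(base_items) * minutes, for increasing f."""
    budget = f(base_items) * minutes
    lo, hi = base_items, base_items * 2
    while f(hi) <= budget:  # grow the bracket until hi is too large
        lo, hi = hi, hi * 2
    while hi - lo > 1:  # binary search between lo (fits) and hi (does not)
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


def a_beats_b(n):
    """True when T_A(n) = 20n is smaller than T_B(n) = 0.1 n log2 n."""
    return 20 < 0.1 * math.log2(n)


if __name__ == "__main__":
    minutes_per_day = 24 * 60
    growth = {
        "n": lambda n: n,
        "n log10 n": lambda n: n * math.log10(n),
        "n^1.5": lambda n: n ** 1.5,
        "n^2": lambda n: n ** 2,
        "n^3": lambda n: n ** 3,
        "2^n": lambda n: 2 ** n,
    }
    for name, f in growth.items():
        print(name, max_items(f, minutes_per_day))
    print(a_beats_b(10 ** 6), a_beats_b(2 ** 201))
```

The crossover sits at log2 n = 200, and 2^200 is a 61-digit number, which is where the slide's estimate of about 10^60 comes from.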

A Short History of Computational Complexity

The Computational Complexity Column by Lance FORTNOW NEC Laboratories America 4 Independence Way, Princeton, NJ 08540, USA [email protected] http://www.neci.nj.nec.com/homepages/fortnow/beatcs Every third year the Conference on Computational Complexity is held in Europe and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori. A Short History of Computational Complexity. Lance Fortnow (NEC Research Institute, 4 Independence Way, Princeton, NJ 08540) and Steve Homer (Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215). 1 Introduction It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation. Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.

On the Complexity of Partial Derivatives Ignacio Garcia-Marco, Pascal Koiran, Timothée Pecatte, Stéphan Thomassé

On the complexity of partial derivatives. Ignacio Garcia-Marco, Pascal Koiran, Timothée Pecatte, Stéphan Thomassé. LIP, Ecole Normale Supérieure de Lyon, Université de Lyon. May 30, 2017. To cite this version: Ignacio Garcia-Marco, Pascal Koiran, Timothée Pecatte, Stéphan Thomassé. On the complexity of partial derivatives. 2016. ensl-01345746v2. HAL Id: ensl-01345746 https://hal-ens-lyon.archives-ouvertes.fr/ensl-01345746v2 Preprint submitted on 30 May 2017. Abstract The method of partial derivatives is one of the most successful lower bound methods for arithmetic circuits. It uses as a complexity measure the dimension of the span of the partial derivatives of a polynomial. In this paper, we consider this complexity measure as a computational problem: for an input polynomial given as the sum of its nonzero monomials, what is the complexity of computing the dimension of its space of partial derivatives? We show that this problem is ♯P-hard and we ask whether it belongs to ♯P. We analyze the “trace method”, recently used in combinatorics and in algebraic complexity to lower bound the rank of certain matrices.