Smoothsort's Behavior on Presorted Sequences by Stefan Hertel

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Time Complexity

Chapter 3 Time complexity

Use of time complexity makes it easy to estimate the running time of a program. Performing an accurate calculation of a program’s operation time is a very labour-intensive process (it depends on the compiler and the type of computer or speed of the processor). Therefore, we will not make an accurate measurement; just a measurement of a certain order of magnitude. Complexity can be viewed as the maximum number of primitive operations that a program may execute. Regular operations are single additions, multiplications, assignments etc. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant. The number of dominant operations depends on the specific input data. We usually want to know how the performance time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

1  def dominant(n):
2      result = 0
3      for i in xrange(n):
4          result += 1
5      return result

The operation in line 4 is dominant and will be executed n times. The complexity is described in Big-O notation: in this case O(n), that is, linear complexity. The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of O(n), the program may perform c · n operations, where c is a constant; however, it may not perform n^2 operations, since this involves a different order of magnitude of data.
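As a rough illustration of counting dominant operations, the sketch below instruments a single loop and a doubly nested loop and reports how often the dominant operation runs. The function names `count_linear` and `count_quadratic` are hypothetical and not part of the excerpt, and the code assumes Python 3 (so `range` instead of `xrange`).

```python
def count_linear(n):
    """Count executions of the dominant operation in a single loop."""
    ops = 0
    for _ in range(n):
        ops += 1  # dominant operation: runs n times
    return ops


def count_quadratic(n):
    """Count executions of the dominant operation in a doubly nested loop."""
    ops = 0
    for _ in range(n):
        for _ in range(n):
            ops += 1  # dominant operation: runs n * n times
    return ops


if __name__ == "__main__":
    # Growing n by a factor of 10 grows the first count by 10 and the second by 100.
    for n in (10, 100, 1000):
        print(n, count_linear(n), count_quadratic(n))
```

The first function stays within c · n operations for a constant c, while the second does not, which is the distinction between O(n) and O(n^2) drawn above.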

Quick Sort Algorithm

Quick Sort Algorithm

Song Qin, Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901

ABSTRACT

Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N-element array. We evaluate the O(N log N) time complexity in the best case and O(N^2) in the worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION

Search engines rely heavily on sorting algorithms. When you search for a keyword online, the results are brought to you sorted by the importance of the web page. Bubble, Selection and Insertion Sort all have an O(N^2) time complexity that limits their usefulness to a small number of elements, no more than a few thousand data points.

... each iteration. Repeat this on the rest of the unsorted region without the first element.

Bubble sort works as follows: keep passing through the list, exchanging adjacent elements if they are out of order; when no exchanges are required on some pass, the list is sorted.

Merge sort [4] has an O(N log N) time complexity. It divides the array into two subarrays, each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into a single sorted array.

In Bubble sort, Selection sort and Insertion sort, the O(N^2) time complexity limits the performance when N gets very big.
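The bubble sort description above (keep passing through the list and stop once a pass makes no exchanges) could be sketched roughly as follows. This is an illustrative Python version, not code from the paper; the name `bubble_sort` and the returned pass count are assumptions made for the example.

```python
def bubble_sort(items):
    """Return (sorted copy of items, number of passes made)."""
    data = list(items)
    passes = 0
    while True:
        passes += 1
        swapped = False
        # One pass: exchange every adjacent pair that is out of order.
        for i in range(len(data) - 1):
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
                swapped = True
        # A pass without exchanges means the list is sorted.
        if not swapped:
            return data, passes


if __name__ == "__main__":
    print(bubble_sort([5, 8, 2, 4, 3, 6, 1, 7]))
    print(bubble_sort([1, 2, 3, 4]))  # already sorted: a single pass suffices
```

On already sorted input the loop ends after one pass, while a reversed list needs about N passes of about N comparisons each, which is where the O(N^2) behaviour comes from.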

Overview of Sorting Algorithms

Unit 7 Sorting Algorithms

Simple Sorting algorithms
Quicksort
Improving Quicksort

Overview of Sorting Algorithms

Given a collection of items we want to arrange them in an increasing or decreasing order. You probably have seen a number of sorting algorithms including
• selection sort
• insertion sort
• bubble sort
• quicksort
• tree sort using BST's

In terms of efficiency:
• average complexity of the first three is O(n^2)
• average complexity of quicksort and tree sort is O(n lg n)
• but their worst case is still O(n^2), which is not acceptable

In this section, we
• review insertion, selection and bubble sort
• discuss quicksort and its average/worst case analysis
• show how to eliminate tail recursion
• present another sorting algorithm called heapsort

Selection Sort

Assume that data
• are integers
• are stored in an array, from 0 to size-1
• sorting is in ascending order

Algorithm

for i=0 to size-1 do
    x = location with smallest value in locations i to size-1
    swap data[i] and data[x]
end

Complexity

If array has n items, i-th step will perform n-i operations. First step performs n operations, second step does n-1 operations, ..., last step performs 1 operation.
Total cost: n + (n-1) + (n-2) + ... + 2 + 1 = n*(n+1)/2. Algorithm is O(n^2).

Insertion Sort

Algorithm

for i = 0 to size-1 do
    temp = data[i]
    x = first location from 0 to i with a value greater or equal to temp
    shift all values from x to i-1 one location forwards
    data[x] = temp
end

Complexity

Interesting operations: comparison and shift. i-th step performs i comparison and shift operations.
Total cost: 1 + 2 + ..
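To make the selection sort cost estimate concrete, here is a small Python sketch of the pseudocode above that also counts how many array locations are examined. The counter and the function name `selection_sort` are additions for illustration; the counting convention (one operation per location scanned in step i) is an assumption chosen to match the n + (n-1) + ... + 1 total.

```python
def selection_sort(data):
    """Sort data in place (ascending); return the number of locations examined."""
    examined = 0
    size = len(data)
    for i in range(size):
        # x = location with the smallest value in locations i to size-1
        x = i
        for j in range(i, size):
            examined += 1
            if data[j] < data[x]:
                x = j
        data[i], data[x] = data[x], data[i]
    return examined


if __name__ == "__main__":
    values = [5, 8, 2, 4, 3, 6, 1, 7]
    cost = selection_sort(values)
    n = len(values)
    print(values, cost, n * (n + 1) // 2)
```

For the eight-element list the count equals 8 * 9 / 2 = 36 regardless of the initial order, which is why selection sort has no faster best case.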

Batcher's Algorithm

18.310 lecture notes, Fall 2010

Batcher’s Algorithm

Prof. Michel Goemans

Perhaps the most restrictive version of the sorting problem requires not only no motion of the keys beyond compare-and-switches, but also that the plan of comparison-and-switches be fixed in advance. In each of the methods mentioned so far, the comparison to be made at any time often depends upon the result of previous comparisons. For example, in HeapSort, it appears at first glance that we are making only compare-and-switches between pairs of keys, but the comparisons we perform are not fixed in advance. Indeed when fixing a headless heap, we move either to the left child or to the right child depending on which child had the largest element; this is not fixed in advance. A sorting network is a fixed collection of comparison-switches, so that all comparisons and switches are between keys at locations that have been specified from the beginning. These comparisons are not dependent on what has happened before. The corresponding sorting algorithm is said to be non-adaptive. We will describe a simple recursive non-adaptive sorting procedure, named Batcher’s Algorithm after its discoverer. It is simple and elegant but has the disadvantage that it requires on the order of n(log n)^2 comparisons, which is larger by a factor of the order of log n than the theoretical lower bound for comparison sorting. For a long time (ten years is a long time in this subject!) nobody knew if one could find a sorting network better than this one.
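A non-adaptive procedure of this kind can be sketched as a generator that emits a fixed list of comparator positions before any data is seen. The Python below follows a commonly published recursive formulation of Batcher's odd-even merge sort and is not taken from the lecture notes; it assumes the number of keys is a power of two, and all function names are illustrative.

```python
def oddeven_merge(lo, hi, r):
    """Yield comparators merging the two sorted halves of positions lo..hi (stride r)."""
    step = r * 2
    if step < hi - lo:
        yield from oddeven_merge(lo, hi, step)
        yield from oddeven_merge(lo + r, hi, step)
        for i in range(lo + r, hi - r, step):
            yield (i, i + r)
    else:
        yield (lo, lo + r)


def oddeven_merge_sort_range(lo, hi):
    """Yield comparators sorting positions lo..hi (both endpoints included)."""
    if hi - lo >= 1:
        mid = lo + (hi - lo) // 2
        yield from oddeven_merge_sort_range(lo, mid)
        yield from oddeven_merge_sort_range(mid + 1, hi)
        yield from oddeven_merge(lo, hi, 1)


def batcher_network(n):
    """Return the fixed comparator list for n keys (n assumed to be a power of two)."""
    return list(oddeven_merge_sort_range(0, n - 1))


def run_network(network, keys):
    """Apply the compare-and-switch plan to a copy of keys."""
    out = list(keys)
    for a, b in network:
        if out[a] > out[b]:
            out[a], out[b] = out[b], out[a]
    return out


if __name__ == "__main__":
    net = batcher_network(8)
    print(len(net), run_network(net, [5, 8, 2, 4, 3, 6, 1, 7]))
```

Because the comparator list depends only on n, the same plan is reused for every input, in contrast to HeapSort, where the next comparison depends on earlier outcomes.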

Hacking a Google Interview – Handout 2

Hacking a Google Interview – Handout 2

Course Description

Instructors: Bill Jacobs and Curtis Fonger
Time: January 12 – 15, 5:00 – 6:30 PM in 32‐124
Website: http://courses.csail.mit.edu/iap/interview

Classic Question #4: Reversing the words in a string

Write a function to reverse the order of words in a string in place.

Answer: Reverse the string by swapping the first character with the last character, the second character with the second‐to‐last character, and so on. Then, go through the string looking for spaces, so that you find where each of the words is. Reverse each of the words you encounter by again swapping the first character with the last character, the second character with the second‐to‐last character, and so on.

Sorting

Often, as part of a solution to a question, you will need to sort a collection of elements. The most important thing to remember about sorting is that it takes O(n log n) time. (That is, the fastest sorting algorithm for arbitrary data takes O(n log n) time.)

Merge Sort: Merge sort is a recursive way to sort an array. First, you divide the array in half and recursively sort each half of the array. Then, you combine the two halves into a sorted array. So a merge sort function would look something like this:

int[] mergeSort(int[] array) {
    if (array.length <= 1) return array;
    int middle = array.length / 2;
    // Recursively sort each half (uses java.util.Arrays)
    int[] firstHalf = mergeSort(Arrays.copyOfRange(array, 0, middle));
    int[] secondHalf = mergeSort(Arrays.copyOfRange(array, middle, array.length));
    // Combine the two sorted halves into one sorted array
    return merge(firstHalf, secondHalf);
}

The algorithm relies on the fact that one can quickly combine two sorted arrays into a single sorted array.
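The combining step that merge sort relies on might look like the following Python sketch. It is an illustration rather than the handout's own code: the names `merge` and `merge_sort` and the choice to return new lists are assumptions.

```python
def merge(left, right):
    """Combine two sorted lists into one sorted list in linear time."""
    merged = []
    i = j = 0
    # Repeatedly take the smaller front element of the two lists.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # At most one of the lists still has elements; append them unchanged.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def merge_sort(array):
    """Sort by splitting in half, sorting each half recursively, then merging."""
    if len(array) <= 1:
        return list(array)
    middle = len(array) // 2
    return merge(merge_sort(array[:middle]), merge_sort(array[middle:]))


if __name__ == "__main__":
    print(merge([1, 4, 9], [2, 3, 10]))
    print(merge_sort([5, 8, 2, 4, 3, 6, 1, 7]))
```

Each merge touches every element once, and the halving gives about log n levels of recursion, which is consistent with the O(n log n) figure quoted above.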

Sorting Algorithms Correctness, Complexity and Other Properties

Sorting Algorithms Correctness, Complexity and other Properties

Joshua Knowles, School of Computer Science, The University of Manchester
COMP26912 - Week 9, LF17, April 1 2011

The Importance of Sorting

Important because
• Fundamental to organizing data
• Principles of good algorithm design (correctness and efficiency) can be appreciated in the methods developed for this simple (to state) task.

Every algorithms book has a large section on Sorting...

...On the Other Hand
• Progress in computer speed and memory has reduced the practical importance of (further developments in) sorting
• quicksort() is often an adequate answer in many applications

However, you still need to know your way (a little) around the key sorting algorithms

Overview

What you should learn about sorting (what is examinable)
• Definition of sorting. Correctness of sorting algorithms
• How the following work: Bubble sort, Insertion sort, Selection sort, Quicksort, Merge sort, Heap sort, Bucket sort, Radix sort
• Main properties of those algorithms
• How to reason about complexity: worst case and special cases

Covered in: the course book; labs; this lecture; wikipedia; wider reading

Relevant Pages of the Course Book

Selection sort: 97 (very short description only)
Insertion sort: 98 (very short)
Merge sort: 219–224 (pages on multi-way merge not needed)
Heap sort: 100–106 and 107–111
Quicksort: 234–238
Bucket sort: 241–242
Radix sort: 242–243
Lower bound on sorting: 239–240
Practical issues: 244

Some of the exercise on pp.

Sorting and Asymptotic Complexity

SORTING AND ASYMPTOTIC COMPLEXITY

Lecture 14, CS2110 – Fall 2013

Reading and Homework

Textbook, chapter 8 (general concepts) and 9 (MergeSort, QuickSort)

Thought question: Cloud computing systems sometimes sort data sets with hundreds of billions of items – far too much to fit in any one computer. So they use multiple computers to sort the data. Suppose you had N computers and each has room for D items, and you have a data set with N*D/2 items to sort. How could you sort the data? Assume the data is initially in a big file, and you’ll need to read the file, sort the data, then write a new file in sorted order.

InsertionSort

// sort a[], an array of int
for (int i = 1; i < a.length; i++) {
    // Push a[i] down to its sorted position in a[0..i]
    int temp = a[i];
    int k;
    for (k = i; 0 < k && temp < a[k-1]; k--)
        a[k] = a[k-1];
    a[k] = temp;
}

• Worst-case: O(n^2) (reverse-sorted input)
• Best-case: O(n) (sorted input)
• Expected case: O(n^2)
• Expected number of inversions: n(n–1)/4

Many people sort cards this way. Invariant of main loop: a[0..i-1] is sorted. Works especially well when input is nearly sorted.

SelectionSort

// sort a[], an array of int
for (int i = 0; i < a.length; i++) {
    int m = index of minimum of a[i..];
    Swap a[i] and a[m];
}

Another common way for people to sort cards.

Runtime
• Worst-case O(n^2)
• Best-case O(n^2)
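The link between insertion sort's running time and the number of inversions can be checked with a short experiment. The Python sketch below mirrors the InsertionSort loop and counts element shifts, each of which removes exactly one inversion; the function name and the shift counter are additions for illustration only.

```python
from itertools import permutations


def insertion_sort(a):
    """Sort list a in place; return the number of element shifts performed."""
    shifts = 0
    for i in range(1, len(a)):
        # Invariant: a[0..i-1] is sorted. Push a[i] down to its sorted position.
        temp = a[i]
        k = i
        while k > 0 and temp < a[k - 1]:
            a[k] = a[k - 1]  # each shift removes exactly one inversion
            k -= 1
            shifts += 1
        a[k] = temp
    return shifts


if __name__ == "__main__":
    n = 6
    print(insertion_sort(list(range(n))))          # sorted input: no shifts
    print(insertion_sort(list(range(n, 0, -1))))   # reversed input: n(n-1)/2 shifts
    # Average over all permutations of 4 items, compared with n(n-1)/4.
    perms = list(permutations(range(4)))
    print(sum(insertion_sort(list(p)) for p in perms) / len(perms), 4 * 3 / 4)
```

Sorted input needs no shifts (the O(n) best case), reversed input needs n(n-1)/2, and the average over all orderings matches the n(n–1)/4 expected number of inversions.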

Edsger W. Dijkstra: a Commemoration

Edsger W. Dijkstra: a Commemoration

Krzysztof R. Apt (CWI, Amsterdam, The Netherlands and MIMUW, University of Warsaw, Poland) and Tony Hoare (Department of Computer Science and Technology, University of Cambridge and Microsoft Research Ltd, Cambridge, UK), editors

arXiv:2104.03392v1 [cs.GL] 7 Apr 2021

Abstract

This article is a multiauthored portrait of Edsger Wybe Dijkstra that consists of testimonials written by several friends, colleagues, and students of his. It provides unique insights into his personality, working style and habits, and his influence on other computer scientists, as a researcher, teacher, and mentor.

Contents

Preface, Tony Hoare, Donald Knuth, Christian Lengauer, K. Mani Chandy, Eric C.R. Hehner, Mark Scheevel, Krzysztof R. Apt, Niklaus Wirth, Lex Bijlsma, Manfred Broy, David Gries, Ted Herman, Alain J. Martin, J Strother Moore, Vladimir Lifschitz, Wim H. Hesselink, Hamilton Richards, Ken Calvert, David Naumann, David Turner, J.R. Rao, Jayadev Misra, Rajeev Joshi, Maarten van Emden, Two Tuesday Afternoon Clubs

Preface

Edsger Dijkstra was perhaps the best known, and certainly the most discussed, computer scientist of the seventies and eighties. We both knew Dijkstra (though each of us in different ways) and we both were aware that his influence on computer science was not limited to his pioneering software projects and research articles. He interacted with his colleagues by way of numerous discussions, extensive letter correspondence, and hundreds of so-called EWD reports that he used to send to a select group of researchers.

Data Structures & Algorithms

DATA STRUCTURES & ALGORITHMS

Tutorial 6 Questions: SORTING ALGORITHMS

Required Questions

Question 1. Many operations can be performed faster on sorted than on unsorted data. For which of the following operations is this the case?
a. checking whether one word is an anagram of another word, e.g., plum and lump
b. finding the minimum value
c. computing an average of values
d. finding the middle value (the median)
e. finding the value that appears most frequently in the data

Question 2. In which case is each of the following sorting algorithms fastest/slowest, and what is the complexity in that case? Explain.
a. insertion sort
b. selection sort
c. bubble sort
d. quick sort

Question 3. Consider the sequence of integers S = {5, 8, 2, 4, 3, 6, 1, 7}. For each of the following sorting algorithms, indicate the sequence S after executing each step of the algorithm as it sorts this sequence:
a. insertion sort
b. selection sort
c. heap sort
d. bubble sort
e. merge sort

Question 4. Consider the sequence of integers T = {1, 9, 2, 6, 4, 8, 0, 7}. Indicate the sequence T after executing each step of the Cocktail sort algorithm (see Appendix) as it sorts this sequence.

Advanced Questions

Question 5. A variant of the bubble sorting algorithm is the so-called odd-even transposition sort. Like bubble sort, this algorithm makes a total of n-1 passes through the array. Each pass consists of two phases: The first phase compares array[i] with array[i+1] and swaps them if necessary for all the odd values of i.
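For orientation, odd-even transposition sort could be sketched in Python as below. The excerpt breaks off before describing the second phase; the sketch assumes, as in the usual textbook formulation, that the second phase does the same comparison for the even values of i. The function name and 0-based indexing are likewise assumptions.

```python
def odd_even_transposition_sort(array):
    """Return a sorted copy using n-1 passes of an odd phase followed by an even phase."""
    data = list(array)
    n = len(data)
    for _ in range(max(n - 1, 0)):
        # Phase 1 uses odd i; phase 2 (assumed, not in the excerpt) uses even i.
        for start in (1, 0):
            for i in range(start, n - 1, 2):
                if data[i] > data[i + 1]:
                    data[i], data[i + 1] = data[i + 1], data[i]
    return data


if __name__ == "__main__":
    print(odd_even_transposition_sort([1, 9, 2, 6, 4, 8, 0, 7]))
```

Within one phase the compared pairs do not overlap, so all comparisons of a phase are independent of each other.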

Sorting Networks on Restricted Topologies

Sorting Networks On Restricted Topologies

Indranil Banerjee (George Mason University), Dana Richards (George Mason University), Igor Shinkar (UC Berkeley)

October 9, 2018

arXiv:1612.06473v2 [cs.DS] 20 Jan 2017

Abstract

The sorting number of a graph with n vertices is the minimum depth of a sorting network with n inputs and n outputs that uses only the edges of the graph to perform comparisons. Many known results on sorting networks can be stated in terms of sorting numbers of different classes of graphs. In this paper we show the following general results about the sorting number of graphs.

1. Any n-vertex graph that contains a simple path of length d has a sorting network of depth O(n log(n/d)).
2. Any n-vertex graph with maximal degree ∆ has a sorting network of depth O(∆n).

We also provide several results relating the sorting number of a graph with its routing number, size of its maximal matching, and other well known graph properties. Additionally, we give some new bounds on the sorting number for some typical graphs.

1 Introduction

In this paper we study oblivious sorting algorithms. These are sorting algorithms whose sequence of comparisons is made in advance, before seeing the input, such that for any input of n numbers the value of the i'th output is smaller or equal to the value of the j'th output for all i < j. That is, for any permutation of the input out of the n! possible, the output of the algorithm must be sorted.
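The definitions above can be made concrete with a toy example. The Python sketch below takes a 4-vertex path graph and a hand-written comparator sequence (both chosen for illustration and not taken from the paper), checks that every comparator lies on an edge of the graph, verifies the output on all n! input permutations, and computes the depth by greedy layering. All names are hypothetical.

```python
from itertools import permutations

# Path graph 0 - 1 - 2 - 3 and a comparator sequence restricted to its edges.
PATH_EDGES = [(0, 1), (1, 2), (2, 3)]
NETWORK = [(0, 1), (2, 3), (1, 2), (0, 1), (2, 3), (1, 2)]


def uses_only_edges(network, edges):
    """True if every comparator compares the endpoints of some graph edge."""
    allowed = {frozenset(e) for e in edges}
    return all(frozenset(c) in allowed for c in network)


def apply_network(network, values):
    """Run the fixed comparator sequence on a copy of values."""
    out = list(values)
    for i, j in network:
        if out[i] > out[j]:
            out[i], out[j] = out[j], out[i]
    return out


def sorts_all_permutations(network, n):
    """Check the oblivious-sorting condition on all n! input permutations."""
    return all(apply_network(network, p) == sorted(p) for p in permutations(range(n)))


def network_depth(network, n):
    """Depth when each comparator is placed in the earliest layer where both wires are free."""
    last_layer = [0] * n
    for i, j in network:
        layer = max(last_layer[i], last_layer[j]) + 1
        last_layer[i] = last_layer[j] = layer
    return max(last_layer, default=0)


if __name__ == "__main__":
    print(uses_only_edges(NETWORK, PATH_EDGES))
    print(sorts_all_permutations(NETWORK, 4))
    print(network_depth(NETWORK, 4))
```

The example only shows that this particular path graph admits a sorting network of depth 4; it says nothing about whether that depth is the minimum, which is what the sorting number measures.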

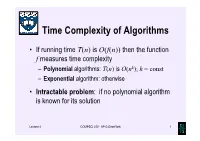

Time Complexity of Algorithms

Time Complexity of Algorithms

• If running time T(n) is O(f(n)) then the function f measures time complexity
  – Polynomial algorithms: T(n) is O(n^k); k = const
  – Exponential algorithm: otherwise
• Intractable problem: if no polynomial algorithm is known for its solution

Lecture 4 COMPSCI 220 - AP G Gimel'farb

Time complexity growth

Number of data items processed per 1 minute, 1 day, 1 year and 1 century:

| f(n) | 1 minute | 1 day | 1 year | 1 century |
|---|---|---|---|---|
| n | 10 | 14,400 | 5.26⋅10^6 | 5.26⋅10^8 |
| n log10 n | 10 | 3,997 | 883,895 | 6.72⋅10^7 |
| n^1.5 | 10 | 1,275 | 65,128 | 1.40⋅10^6 |
| n^2 | 10 | 379 | 7,252 | 72,522 |
| n^3 | 10 | 112 | 807 | 3,746 |
| 2^n | 10 | 20 | 29 | 35 |

Beware exponential complexity

☺ If a linear O(n) algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century
☻ If an exponential O(2^n) algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

• Example 1: Let algorithms A and B have running times T_A(n) = 20n ms and T_B(n) = 0.1 n log2 n ms
• In the “Big-Oh” sense, A is better than B…
• But: on which data volume can A outperform B? T_A(n) < T_B(n) if 20n < 0.1 n log2 n, or log2 n > 200, that is, when n > 2^200 ≈ 10^60!
• Thus, in all practical cases B is better than A…

Big-Oh vs.
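The growth table can be reproduced approximately with a few lines of Python. The sketch assumes that the running time is exactly proportional to f(n) and that 10 items take one minute, then searches for the largest n that fits in a longer time budget; the names `GROWTH`, `MINUTES` and `max_items`, and the 365-day year, are assumptions made for the example.

```python
import math

MINUTES = {
    "1 minute": 1,
    "1 day": 24 * 60,
    "1 year": 365 * 24 * 60,
    "1 century": 100 * 365 * 24 * 60,
}

GROWTH = {
    "n": lambda n: n,
    "n log10 n": lambda n: n * math.log10(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
    "2^n": lambda n: 2 ** n,
}


def max_items(f, minutes, base_items=10):
    """Largest n with f(n) <= f(base_items) * minutes, found by binary search."""
    budget = f(base_items) * minutes
    lo, hi = base_items, 2 * base_items
    while f(hi) <= budget:          # grow the upper bound until it no longer fits
        lo, hi = hi, hi * 2
    while hi - lo > 1:              # invariant: f(lo) <= budget < f(hi)
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


if __name__ == "__main__":
    for name, f in GROWTH.items():
        print(name, [max_items(f, m) for m in MINUTES.values()])
```

Rounding down means the last digits of the larger entries may differ slightly from the slide, but the orders of magnitude, and the near standstill of the 2^n row, come out the same.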

A Short History of Computational Complexity

The Computational Complexity Column

by Lance FORTNOW
NEC Laboratories America, 4 Independence Way, Princeton, NJ 08540, USA
http://www.neci.nj.nec.com/homepages/fortnow/beatcs

Every third year the Conference on Computational Complexity is held in Europe and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org

This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori.

A Short History of Computational Complexity

Lance Fortnow, NEC Research Institute, 4 Independence Way, Princeton, NJ 08540
Steve Homer, Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215

1 Introduction

It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation. Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.