Unmanned Ground Vehicle Modelling in Gazebo/ROS-Based Environments

Recommended publications

Introduction to ROS – Robot Operating System –

Introduction to ROS – Robot Operating System – Daniel Serrano Department of Intelligent Systems, ASCAMM Technology Center Parc Tecnològic del Vallès, Av. Universitat Autònoma, 23 08290 Cerdanyola del Vallès SPAIN [email protected] ABSTRACT This paper gives an introduction to the open-source Robot Operating System (ROS). ROS has been widely adopted by the research robotics community as it provides a powerful software framework to develop autonomous unmanned systems. In this paper, we discuss some of the components that are available on the ROS repository. 1.0 INTRODUCTION The Robot Operating System (ROS) [1] is an open source robot operating system. It provides libraries and tools to help software developers create robot applications. It offers hardware abstraction, device drivers, libraries, visualizers, message-passing, package management, and more. ROS is similar in some respects to 'robot frameworks,' such as Player, YARP, Orocos, CARMEN, Orca, MOOS, and Microsoft Robotics Studio. The primary goal of ROS is to support code reuse in robotics research and development. It has been quickly adopted by many robotics research institutions and companies as their standard development framework. The list of robots using ROS is quite extensive and includes platforms from diverse domains, ranging from aerial and ground robots to humanoids and underwater vehicles. To name a few, the humanoid robots PR-2 and REEM-C, ground robots such as the ClearPath platforms, and aerial platforms such as the ones from Ascending Technologies are fully developed in ROS. Furthermore, an extensive list of popular sensors for robots is already supported in ROS. To name a few, sensors such as Inertial Measurement Units, GPS receivers, cameras and lasers can already be accessed through the drivers widely available in ROS. -

Robot Operating System - the Complete Reference (Volume 4) Part of the Studies in Computational Intelligence Book Series (SCI, Volume 831)

Robot Operating System - The Complete Reference (Volume 4) Part of the Studies in Computational Intelligence book series (SCI, volume 831) Several authors CISTER-TR-190702 2019 CISTER Research Centre Rua Dr. António Bernardino de Almeida, 431 4200-072 Porto Portugal Tel.: +351.22.8340509, Fax: +351.22.8321159 E-mail: https://www.cister-labs.pt Abstract This is the fourth volume of the successful series Robot Operating Systems: The Complete Reference, providing a comprehensive overview of robot operating systems (ROS), which is currently the main development framework for robotics applications, as well as the latest trends and contributed systems. The book is divided into four parts: Part 1 features two papers on navigation, discussing SLAM and path planning. Part 2 focuses on the integration of ROS into quadcopters and their control. Part 3 then discusses two emerging applications for robotics: cloud robotics, and video stabilization. Part 4 presents tools developed for ROS; the first is a practical alternative to the roslaunch system, and the second is related to penetration testing. This book is a valuable resource for ROS users and those wanting to learn more about ROS capabilities and features. © 2019 CISTER Research Center www.cister-labs.pt Studies in Computational Intelligence 831 Anis Koubaa Editor Robot Operating System (ROS) The Complete Reference (Volume 4) Studies in Computational Intelligence Volume 831 Series Editor Janusz Kacprzyk, Polish Academy of Sciences, Warsaw, Poland [email protected] The series “Studies in Computational Intelligence” (SCI) publishes new developments and advances in the various areas of computational intelligence, quickly and with a high quality. -

Concurrency Patterns for Easier Robotic Coordination

Concurrency Patterns for Easier Robotic Coordination Andrey Rusakov∗ Jiwon Shin∗ Bertrand Meyer∗† ∗Chair of Software Engineering, Department of Computer Science, ETH Zürich, Switzerland †Software Engineering Lab, Innopolis University, Kazan, Russia andrey.rusakov, jiwon.shin, [email protected] Abstract: Software design patterns are reusable solutions to commonly occurring problems in software development. Growing complexity of robotics software increases the importance of applying proper software engineering principles and methods such as design patterns to robotics. Concurrency design patterns are particularly interesting to robotics because robots often have many components that can operate in parallel. However, there has not yet been any established set of reusable concurrency design patterns for robotics. For this purpose, we choose six known concurrency patterns – Future, Periodic timer, Invoke later, Active object, Cooperative cancellation, and Guarded suspension. We demonstrate how these patterns could be used for solving common robotic coordination tasks. We also discuss advantages and disadvantages of the patterns and how existing [...] Guarded suspension – are chosen for their concurrent nature, applicability to robotic coordination, and evidence of use in existing robotics frameworks. The paper aims to help programmers who are not yet experts in concurrency to identify and then to apply one of the common concurrency-based solutions for their applications on robotic coordination. The rest of the paper is organized as follows: After presenting related work in Section II, it proceeds with a general description of concurrency approach for robotics in Section III. Then the paper presents and describes in detail a number of concurrency patterns related to robotic coordination in Section IV. -
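
The first pattern named in the excerpt above, Future, can be illustrated with a minimal Python sketch. This is not code from the paper: `plan_path` and `run_with_future` are hypothetical names, the "planner" is a placeholder that simply returns its two endpoints, and the standard-library `concurrent.futures` module is assumed as the mechanism.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def plan_path(start, goal):
    """Hypothetical slow computation standing in for a path planner."""
    time.sleep(0.05)  # simulate planning latency
    # Placeholder "plan": just the two endpoints.
    return [start, goal]


def run_with_future():
    with ThreadPoolExecutor(max_workers=1) as pool:
        # submit() returns immediately with a Future for the pending result.
        future = pool.submit(plan_path, (0, 0), (3, 4))
        # The caller stays free to do other work while planning runs.
        heartbeat = "sensors polled"
        # result() blocks only at the point where the value is needed.
        path = future.result(timeout=1.0)
    return heartbeat, path
```

The design point is that the caller decides when to wait: the request is issued early, and blocking is deferred until the result is actually required.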

Lab Lecture 1: Introduction to ROS

16-311-Q INTRODUCTION TO ROBOTICS LAB LECTURE 1: INTRODUCTION TO ROS INSTRUCTOR: GIANNI A. DI CARO PROBLEM(S) IN ROBOTICS DEVELOPMENT In Robotics, before ROS • Lack of standards • Little code reusability • Keeping reinventing (or rewriting) device drivers, access to robot’s interfaces, management of on-board processes, inter-process communication protocols, … • Keeping re-coding standard algorithms • New robot in the lab (or in the factory) start re-coding (mostly) from scratch ROBOT OPERATING SYSTEM (ROS) http://www.ros.org WHAT IS ROS? • ROS is an open-source robot operating system • A set of software libraries and tools that help you build robot applications that work across a wide variety of robotic platforms • Originally developed in 2007 at the Stanford Artificial Intelligence Laboratory and development continued at Willow Garage • Since 2013 managed by OSRF (Open Source Robotics Foundation) Note: Some of the following slides are adapted from Roi Yehoshua ROS MAIN FEATURES ROS has two "sides" • The operating system side, which provides standard operating system services such as: o hardware abstraction o low-level device control o implementation of commonly used functionality o message-passing between processes o package management • A suite of user contributed packages that implement common robot functionality such as SLAM, planning, perception, vision, manipulation, etc. ROS PHILOSOPHY • Peer to Peer o ROS systems consist of many small programs (nodes) which connect to each other and continuously exchange messages • Tools-based o There are many small, generic programs that perform tasks such as visualization, logging, plotting data streams, etc. -
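
The "many small programs (nodes) which ... continuously exchange messages" idea from the slides above can be sketched with a toy in-process message bus. This is only an illustration of the publish/subscribe style, not the ROS API: `TopicBus`, `demo`, the topic name `/scan`, and the message layout are all assumptions made for the example.

```python
from collections import defaultdict


class TopicBus:
    """Toy in-process message bus; an illustration, not the ROS API."""

    def __init__(self):
        # Maps a topic name to the callbacks registered for it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback registered on the topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


def demo():
    bus = TopicBus()
    received = []
    # A "controller" node listens for range readings.
    bus.subscribe("/scan", received.append)
    # A "sensor" node publishes without knowing who is listening.
    for distance_m in (1.5, 0.8):
        bus.publish("/scan", {"range_m": distance_m})
    return received
```

Publisher and subscriber share only a topic name, which is what lets small programs be developed and swapped independently.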

Architecture of a Software System for Robotics Control

Architecture of a software system for robotics control Aleksey V. Shevchenko Oksana S. Mezentseva Information Systems Technologies Dept. Information Systems Technologies Dept. Stavropol City, NCFU Stavropol City, NCFU [email protected] [email protected] Dmitriy V. Mezentsev Konstantin Y. Ganshin Information Systems Technologies Dept. Information Systems Technologies Dept. Stavropol City, NCFU Stavropol City, NCFU [email protected] [email protected] Abstract This paper describes the architecture and features of RoboStudio, a software system for robotics control that allows complete and simultaneous monitoring of all electronic components of a robotic system. This increases the safety of operating expensive equipment and allows for flexible configuration of different robotics components without changes to the source code. 1 Introduction Today, there are no clear standards for robotics programming. Each manufacturer creates their own software and hardware architecture based on their own ideas for optimizing the development process. Large manufacturers often buy their software systems from third parties, while small ones create theirs independently. As a result, the software often targets a specific platform (more often, a specific modification of a given platform). Thus, even minor upgrades of an existing robotic system often require a complete software rewrite. The lack of universal software products imposes large time and resource costs on developers, and often leads to the curtailment of promising projects. Some developers of robotic systems software partially solve the problem of universal software using the free Robot Operating System (ROS), which also has limitations and is not always able to satisfy all the requirements of the manufacturers. 2 Equipment The research was conducted using two robots produced by the SPA "Android Technics": the "Mechatronics" stand and the full-size anthropomorphic robot AR-601E (figure 1). -

Design and Implementation of an Autonomous Robotics Simulator

DESIGN AND IMPLEMENTATION OF AN AUTONOMOUS ROBOTICS SIMULATOR by Adam Carlton Harris A thesis submitted to the faculty of The University of North Carolina at Charlotte in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering Charlotte 2011 Approved by: Dr. James M. Conrad, Dr. Ronald R. Sass, Dr. Bharat Joshi © 2011 Adam Carlton Harris ALL RIGHTS RESERVED ABSTRACT ADAM CARLTON HARRIS. Design and implementation of an autonomous robotics simulator. (Under the direction of DR. JAMES M. CONRAD) Robotics simulators are important tools that can save both time and money for developers. Being able to accurately and easily simulate robotic vehicles is invaluable. In the past two decades, corporations, robotics labs, and software development groups have released many robotics simulators to developers. Commercial simulators have proven to be very accurate and many are easy to use; however, they are closed source and generally expensive. Open source simulators have recently had an explosion of popularity, but most are not easy to use. This thesis describes the design criteria and implementation of an easy to use open source robotics simulator. SEAR (Simulation Environment for Autonomous Robots) is designed to be an open source cross-platform 3D (3 dimensional) robotics simulator written in Java using jMonkeyEngine3 and the Bullet Physics engine. Users can import custom-designed 3D models of robotic vehicles and terrains to be used in testing their own robotics control code. Several sensor types (GPS, triple-axis accelerometer, triple-axis gyroscope, and a compass) have been simulated and early work on infrared and ultrasonic distance sensors as well as LIDAR simulators has been undertaken. -

ROS History and Basics

Computer Vision Laboratory Robot Vision Systems Lecture 9: ROS History and Basics Michael Felsberg [email protected] Goals • System design and programming in –OpenCV 3.0 rc1 –ROS (robot operating system) Indigo (Jade ?) • focus part 2: robot vision systems –distributed computing with ROS –efficient use of OpenCV in ROS • access to robotic hardware is not part of the course – make use of available resources from your lab • simulation as fallback Organization • lectures –systems basics in ROS Jade –Python introduction given by Hannes • seminars –participants who only participate in the ROS part (and did not read the OpenCV course): one seminar presentation required for credits • exercises –installation of ROS –going through essential first steps • project (example application) Organization • credits: 9hp if –project work –80% presence –one seminar presentation • without the project work: 6hp • note: if you have participated in the course ‘visual computing with OpenCV’, you can only get 6hp (3hp without project) • ‘listen-only’: 0hp What is ROS? • Open Source Library for robotics middleware • Stanford AI, now supported by Willow Garage / Open Source Robotics Foundation • Free for use under the open source BSD license (most parts) • Linux (Ubuntu) –Experimental support Win, Mac, other Linux –rosjava (platform independent) Android, Matlab History of ROS • 2007: “Switchyard” by the Stanford Artificial Intelligence Laboratory • 2008-2013: development primarily at Willow Garage • Since 2013: Open Source Robotics Foundation Versions • 2010: -

Key Considerations When Choosing a Robot's Operating System

Key considerations when choosing a robot’s operating system August 2018 Introduction Robots have been the subject of science fiction films for decades, but with technological advances, including the rise of internet connected devices, a real robotic revolution is beginning to emerge. Converging with the growth of artificial intelligence and machine learning, robots are being adopted in every sector imaginable, including consumer, industrial, healthcare, and retail. According to IDC, worldwide robotic spending, encompassing hardware, software and related services, is set to reach US$230.7bn by 2021. Due to the sizeable market potential, demand, and evolution of technology, companies are seizing the opportunity to build robots. However as with many other initiatives in the wider Internet of Things (IoT) industry, there are key decisions that robotic manufacturers need to make from the development stage onwards to ensure they are building a sustainable, future-proof, and secure robot. One of the most important decisions to be made is that of the robot’s operating system. Unfortunately, this importance is not always obvious until the company is too invested to change it, which can lead to a slew of delays or issues to overcome. The operating system that’s perfect for hacking things together may be impossible to maintain once the robot reaches production. Similarly, the build-your-own option means maintaining the entire operating system (backporting upstream security updates, etc.) for the lifetime of the robot. The rock-solid, stable option may end up having versions of dependencies that are too old to use. That snazzy new one created by a niche startup that seems to tick all the boxes is less appealing if they suddenly go out of business. -

Programming Robots for Activities of Everyday Life

Thesis for The Degree of Licentiate of Engineering Programming Robots for Activities of Everyday Life Swaib Dragule Division of Software Engineering Department of Computer Science & Engineering Chalmers University of Technology and Gothenburg University Gothenburg, Sweden, 2021 Programming Robots for Activities of Everyday Life Swaib Dragule Copyright ©2021 Swaib Dragule except where otherwise stated. All rights reserved. Department of Computer Science & Engineering Division of Software Engineering Chalmers University of Technology and Gothenburg University Gothenburg, Sweden This thesis has been prepared using LaTeX. Printed by Chalmers Reproservice, Gothenburg, Sweden 2021. "Smiling to someone is an act of charity." - Prophet Muhammad (P.B.U.H) Abstract Text-based programming remains a challenge to novice programmers in all programming domains, including robotics. The use of robots is gaining considerable traction in several domains since robots are capable of assisting humans in repetitive and hazardous tasks. Soon robots will commonly be used in tasks-of-everyday-life in homes, hotels, airports, and museums. However, robotic missions have been either predefined or programmed using low-level APIs, making mission specification task-specific and error-prone. To harness the full potential of robots, it must be possible to define missions for specific application domains as needed. The specification of missions of robotic applications should be performed via easy-to-use, accessible ways, and at the same time, be accurate and unambiguous. Simplicity and flexibility in programming such robots are important since end-users come from diverse domains, not necessarily with sufficient programming knowledge. The main objective of this licentiate thesis is to empirically understand the state-of-the-art in languages and tools used for specifying robot missions by end-users. -

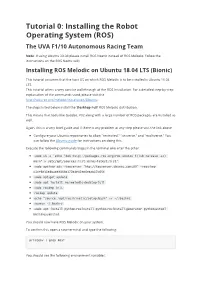

Tutorial 0: Installing the Robot Operating System (ROS) the UVA F1/10 Autonomous Racing Team

Tutorial 0: Installing the Robot Operating System (ROS) The UVA F1/10 Autonomous Racing Team Note: If using Ubuntu 20.04, please install ROS Noetic instead of ROS Melodic, following the instructions on the ROS Noetic wiki. Installing ROS Melodic on Ubuntu 18.04 LTS (Bionic) This tutorial assumes that the host OS on which ROS Melodic is to be installed is Ubuntu 18.04 LTS. This tutorial offers a very concise walkthrough of the ROS installation. For a detailed step-by-step explanation of the commands used, please visit http://wiki.ros.org/melodic/Installation/Ubuntu. The steps listed below install the ‘Desktop-Full’ ROS Melodic distribution. This means that tools like Gazebo and rViz, along with a large number of ROS packages, are installed as well. Again, this is a very brief guide, and if there is a problem at any step, please visit the link above. Configure your Ubuntu repositories to allow "restricted," "universe," and "multiverse." You can follow the Ubuntu guide for instructions on doing this. Execute the following commands/steps in the terminal one after the other: sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/ros-latest.list' sudo apt-key adv --keyserver 'hkp://keyserver.ubuntu.com:80' --recv-key C1CF6E31E6BADE8868B172B4F42ED6FBAB17C654 sudo apt-get update sudo apt install ros-melodic-desktop-full sudo rosdep init rosdep update echo "source /opt/ros/melodic/setup.bash" >> ~/.bashrc source ~/.bashrc sudo apt install python-rosinstall python-rosinstall-generator python-wstool build-essential You should now have ROS Melodic on your system. -

Tag: Job Control in Urbiscript

Tag: Job Control in urbiscript Jean-Christophe Baillie Akim Demaille Quentin Hocquet Matthieu Nottale May 7, 2010 Abstract The Urbi software platform for the development of applications for robots relies heavily on concurrency. While concurrent programs have existed for decades, there is still no universal consensus for the proper means to control the complexity of parallelism. This paper presents some innovative features of Urbi: syntactic constructs to support concurrent jobs, and tags, used to control them. Urbi SDK is a software platform used to develop portable applications for robotics and artificial intelligence (Baillie, 2005). It is based on UObjects, a distributed component architecture, and on urbiscript, a parallel and event-driven scripting language. Its current architecture has already been described elsewhere (Baillie et al., 2008); this paper focuses on one specific feature of urbiscript: job control. Today, any computer runs many programs concurrently. The Operating System is in charge of providing each process with the illusion of a fresh machine for it only, and of scheduling all these concurrent processes. In robots, jobs not only run concurrently, they collaborate. Each process is a piece of a complex behavior that emerges from the coordination/orchestration of these jobs. The Urbi platform aims to provide the developers with a convenient framework to orchestrate concurrent, collaborating tasks. Outline Section 1 introduces some of the features of urbiscript for parallel programming; it concludes on the common need for a high-level job control. Section 2 presents tags, the cornerstone of job control in Urbi. A real world example is presented in Section 3: safe interruption of the Nao walk. -

Programming Robots with Events Truong Giang Le, Dmitriy Fedosov, Olivier Hermant, Matthieu Manceny, Renaud Pawlak, Renaud Rioboo

Programming Robots With Events Truong Giang Le, Dmitriy Fedosov, Olivier Hermant, Matthieu Manceny, Renaud Pawlak, Renaud Rioboo To cite this version: Truong Giang Le, Dmitriy Fedosov, Olivier Hermant, Matthieu Manceny, Renaud Pawlak, et al.. Programming Robots With Events. 4th International Embedded Systems Symposium (IESS), Jun 2013, Paderborn, Germany. pp.14-25, 10.1007/978-3-642-38853-8_2. hal-00924489 HAL Id: hal-00924489 https://hal.inria.fr/hal-00924489 Submitted on 7 Jan 2014 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License Programming Robots with Events Truong-Giang Le1, Dmitriy Fedosov2, Olivier Hermant3, Matthieu Manceny1, Renaud Pawlak4, and Renaud Rioboo5 1 LISITE - ISEP, 28 rue Notre-Dame des Champs, 75006 Paris, France 2 Saint-Petersbourg University of Aerospace Instrumentation, 67 Bolshaya Morskaya street, 190000, Saint Petersburg, Russia 3 CRI - MINES ParisTech, 35 rue St-Honoré, 77300 Fontainebleau, France 4 IDCapture, 2 rue Duphot, 75001 Paris, France 5 ENSIIE, 1 square de la Résistance, F-91025 Évry CEDEX, France {le-truong.giang,matthieu.manceny}@isep.fr, {dvfdsv,renaud.pawlak}@gmail.com, [email protected], [email protected] Abstract.