Modelling, Forecasting and Trading of Commodity Spreads

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Profit Model for Spread Trading with an Application to Energy Futures

A Profit Model for Spread Trading with an Application to Energy Futures, by Takashi Kanamura, Svetlozar T. Rachev, Frank J. Fabozzi. Working Paper Series in Economics No. 27, May 2011, ISSN 2190-9806, econpapers.wiwi.kit.edu. KIT, University of the State of Baden-Wuerttemberg and National Laboratory of the Helmholtz Association. Impressum: Karlsruher Institut für Technologie (KIT), Fakultät für Wirtschaftswissenschaften, Institut für Wirtschaftspolitik und Wirtschaftsforschung (IWW), Institut für Wirtschaftstheorie und Statistik (ETS), Schlossbezirk 12, 76131 Karlsruhe. Authors: Takashi Kanamura, J-POWER; Svetlozar T. Rachev, Chair of Econometrics, Statistics and Mathematical Finance, School of Economics and Business Engineering, University of Karlsruhe and KIT, Department of Statistics and Applied Probability, University of California, Santa Barbara, and Chief-Scientist, FinAnalytica INC; Frank J. Fabozzi, Yale School of Management. October 19, 2009. ABSTRACT This paper proposes a profit model for spread trading by focusing on the stochastic movement of the price spread and its first hitting time probability density. The model is general in that it can be used for any financial instrument. The advantage of the model is that the profit from the trades can be easily calculated if the first hitting time probability density of the stochastic process is given. We then modify the profit model for a particular market, the energy futures market. It is shown that energy futures spreads are modeled by using a mean-reverting process. -

Specialty Strategies

RCM Alternatives Whitepaper: Specialty Strategies. 318 W Adams St 10th FL | Chicago, IL 60606 | 855-726-0060 www.rcmalternatives.com | [email protected] It’s fairly common for “trend following” and “managed futures” to be used interchangeably. But there are many more strategies out there beyond the standard approach – a variety of approaches that we call Specialty Strategies. Specialty strategies include short-term, options, and spread traders. Short-Term Systematic Traders: The cousin to the multi-market systematic trend follower, the short-term systematic program will also look to latch onto a “trend” in an effort to make a profit. The difference here has to do with timeframe, and how that impacts their trend identification, length of trade, and performance during volatile times. Unlike longer-term trend followers, short-term systematic traders may believe an hours-long move is enough to represent a trend, allowing them to take advantage of market moves that are much shorter in duration. One man’s noise is another’s treasure in the minds of short term traders. ... is the trading equivalent of an arms race that most money managers want to stay as far away from as possible. What types of shorter-term traders can we expect to invest with? First, there are day trading strategies. A day trading system is defined by a single characteristic: that it will NOT hold a position overnight, with all positions covered by the end of the trading day. This appeals to many investors who don’t like the prospect of something happening in -

Chicago Mercantile Exchange, Chicago Board of Trade, New York

Sean M. Downey Senior Director and Associate General Counsel Legal Department March 15, 2013 VIA E-MAIL Ms. Melissa Jurgens Office of the Secretariat Commodity Futures Trading Commission Three Lafayette Centre 1155 21st Street, N.W. Washington, DC 20581 RE: Regulation 40.6(a). Chicago Mercantile Exchange Inc./ The Board of Trade of the City of Chicago, Inc./ The New York Mercantile Exchange, Inc./ Commodity Exchange, Inc. Submission # 13-066: Revisions to CME, CBOT and NYMEX/COMEX Position Limit, Position Accountability and Reportable Level Tables Dear Ms. Jurgens: Chicago Mercantile Exchange Inc. (“CME”), The Board of Trade of the City of Chicago, Inc. (“CBOT”), The New York Mercantile Exchange, Inc. (“NYMEX”) and Commodity Exchange, Inc. (“COMEX”), (collectively, the “Exchanges”) are self-certifying revisions to the Position Limit, Position Accountability and Reportable Level Tables (collectively, the “Tables”) in the Interpretations & Special Notices Section of Chapter 5 in the Exchanges’ Rulebooks. The revisions will become effective on April 1, 2013 and are being adopted to ensure that the Tables are in compliance with CFTC Core Principle 7 (“Availability of General Information”), which requires that DCMs make available to the public accurate information concerning the contract market’s rules and regulations, contracts and operations. In connection with CFTC Core Principle 7, the Exchanges launched a Rulebook Harmonization Project with the goal of eliminating old, erroneous and obsolete language, ensuring the accuracy of all listed values (e.g., position limits, aggregation, diminishing balances, etc.) and harmonizing the language and structure of the Tables and product chapters to the best extent possible. The changes to the Tables are primarily stylistic in nature (e.g., format, extra columns, tabs, product groupings, etc.). -

A Study on Advantages of Separate Trade Book for Bull Call Spreads and Bear Put Spreads

IOSR Journal of Business and Management (IOSR-JBM) e-ISSN: 2278-487X, p-ISSN: 2319-7668 PP 11-20 www.iosrjournals.org A Study on Advantages of Separate Trade book for Bull Call Spreads and Bear Put Spreads. Chirag Babulal Shah PhD (Pursuing), M.M.S., B.B.A., D.B.M., P.G.D.F.T., D.M.T.T. Assistant Professor at Indian Education Society’s Management College and Research Centre. Abstract: Derivatives are revolutionary instruments and have changed the way one looks at the financial world. Derivatives were introduced as a hedging tool. However, as the understanding of the intricacies of these instruments has evolved, it has become more of a speculative tool. The biggest advantage of Derivative instruments is the leverage it provides. The other benefit, which is not available in the spot market, is the ability to short the underlying, for more than a day. Thus it is now easy to take advantage of the downtrend. However, the small retail participants have not had a good overall experience of trading in derivatives and the public at large is still bereft of the advantages of derivatives. This has led to a phobia for derivatives among the small retail market participants, and they prefer to stick to the old methods of investing. The three main reasons identified are lack of knowledge, the margining methods and the quantity of the lot size. The brokers have also misled their clients and that has led to catastrophic results. The retail participation in the derivatives markets has dropped down dramatically. But, are these derivative instruments and especially options, so bad? The answer is NO. -

Affidavit of Stewart Mayhew

Affidavit of Stewart Mayhew I. Qualifications 1. I am a Principal at Cornerstone Research, an economic and financial consulting firm, where I have been working since 2010. At Cornerstone Research, I have conducted statistical and economic analysis for a variety of matters, including cases related to securities litigation, financial institutions, regulatory enforcement investigations, market manipulation, insider trading, and economic studies of securities market regulations. 2. Prior to working at Cornerstone Research, I worked at the U.S. Securities and Exchange Commission (“SEC” or “Commission”) as Deputy Chief Economist (2008-2010), Assistant Chief Economist (2004-2008), and Visiting Academic Scholar (2002-2004). From 2004 to 2008, I headed a group that was responsible for providing economic analysis and support for the Division of Trading and Markets (formerly known as the Division of Market Regulation), the Division of Investment Management, and the Office of Compliance Inspections and Examinations. Among my responsibilities were to analyze SEC rule proposals relating to market structure for the trading of stocks, bonds, options, and other products, and to perform analysis in connection with compliance examinations to assess whether exchanges, dealers, and brokers were complying with existing rules. I also assisted the Division of Enforcement on numerous investigations and enforcement actions, including matters involving market manipulation. 3. I have taught doctoral level, masters level, and undergraduate level classes in finance as Assistant Professor in the Finance Group at the Krannert School of Management, Purdue University (1996-1999), as Assistant Professor in the Department of Banking and Finance at the Terry College of Business, University of Georgia (2000-2004), and as a Lecturer at the Robert H. -

To Process Or Not to Process: the Production Transformation Option

TO PROCESS OR NOT TO PROCESS: THE PRODUCTION TRANSFORMATION OPTION by George Dotsis* and Nikolaos T. Milonas** February 2015 * Department of Economics, National and Kapodistrian University of Athens, 1 Sofokleous street, Athens 105 59 Greece, Tel. +30210 368 3973, e-mail: [email protected] ** Department of Economics, National and Kapodistrian University of Athens, 1 Sofokleous street, Athens 105 59 Greece, Tel. +30210 368 9442, e-mail: [email protected] The authors would like to thank the participants of the Finance Workshop at the University of Massachusetts-Amherst for useful comments on an earlier draft. The author alone has the responsibility for any remaining errors. TO PROCESS OR NOT TO PROCESS: THE PRODUCTION TRANSFORMATION OPTION Abstract This paper examines the implications of the production transformation asymmetry on prices of the commodity relative to the prices of its derivative products. When the production transformation process of a harvested good is irreversible, the price linkage between the harvested good and its derivatives breaks. This happens in the case where the supply of the good declines significantly and when independent demand for the good exists. Because the price of the good can rise above the combined value of its derivatives, it is associated with a valuable option not to process. The equilibrium processing margins are derived within a three period model. We show that the option not to process is valuable and can only be exercised by those who carry the commodity. Furthermore, it is shown that a partial hedging strategy is sufficient to reduce all price risk and it is superior to a strategy of no hedging. -

CHARLES UNIVERSITY Strategies for Spread Trading Using Futures

CHARLES UNIVERSITY FACULTY OF SOCIAL SCIENCES Institute of Economic Studies Oskar Gottlieb Strategies for Spread Trading using Futures Contracts Bachelor thesis Prague 2017 Author: Oskar Gottlieb Supervisor: doc. PhDr. Ladislav Krištoufek Ph.D. Academic Year: 2016/2017 [Year of defense: 2017] Bibliographic record GOTTLIEB, Oskar. Strategies for Spread Trading Using Futures Contracts. Prague 2017. 80 pp. Bachelor thesis (Bc.), Charles University, Faculty of Social Sciences, Institute of Economic Studies. Thesis supervisor: doc. PhDr. Ladislav Krištoufek Ph.D. Abstract This thesis focuses on spreads in futures markets. Specifically, it studies trading strategies based on two approaches: cointegration, tested on inter-commodity spreads, and seasonality, which we observe in calendar (intra-commodity) spreads. On pairs of contracts that are cointegrated, we test strategies based on mean reversion. Three strategies use a so-called 'fair value' filter; one works with relative value. In a similar way, we test "buy and hold" strategies on calendar spreads. All strategies are tested on in-sample and out-of-sample data. The seasonal strategies did not prove sufficiently profitable, whereas some inter-commodity spreads proved profitable in both test periods. The exception among inter-commodity spreads was mainly the widely known spreads, which did not hold up in out-of-sample tests. Keywords Futures contracts, cointegration, seasonality, mean-reversion strategies, futures spreads Bibliographic note GOTTLIEB, Oskar. Strategies for Spread Trading Using Futures Contracts Prague 2017. -

Copyrighted Material

Index A convertible, 106 MBS Index, 82 Adjustable-rate mortgages (ARMs), 97 stock-index, 137 Municipal Bond Index, 82 Agency securities. See Bonds Arnott, Robert, 84 BATS Global Markets, 52–53 Alaska, 91 Asian Development Bank, 87 Basis points, 20–21, 23–24, 32, 39, 44, 46–47, 48, 107, Alerian MLP Index, 117–118 Ask 109–110, 147, 149, 151, 153–154 Allied Capital, 113 premium, 141–142 benchmark Alternative assets. See Commodities, Real estate price, 5, 14, 27, 54–55, 66, 71, 94, 109, 116, 120, base metals prices, 131 Alternative Display Facility, 54, 55 132, 134, 142, 154, 162 CDS rate, 156 Alternative funds rate, 21, 149 commodities, 129 angel investing, 165–166, 170 Auctions, debt, 3, 18–20, 24–26, 30, 90 fund performance, 79, 128, 160–161, 164, funds of funds, 167 Australia, 113 168, 177 global macro, 166–167 Auto loans, 93 futures, 129 hedge funds, 63, 106, 113, 127, 165–169, 177 index, bonds, 81–83 long-biased, 167 B index, commodities, 174–175 long-short, 167 Backwardation, 135–136, 137 index, hard assets, 85 managed futures, 167 Bank for International Settlements (BIS), index, MLPs, 117 market neutral, 167 12, 150 index, stocks, 83, 125, 137 private equity, 63, 165–166, 169, 170 Bank of America, 100, 103 index, real estate, short-biased, 167 Merrill Lynch, 82, 175 index, REITs, 120–121 venture capital, 165–166, 170 Bank reserves, 36 interest rate, 8, 24, 32, 36, 97, 110, 147 Amazon.com, 164, 166 Bankers’ acceptances (BAs), 36 maturities, 49, American Depositary Bankruptcy. See also Eastman Kodak, Lehman mortgage-backed bonds, 95 Receipts (ADRs), 56 Brothers, Risk gold price, 65, 85 Shares (ADSs), 56 corporate, 21, 40, 54, 99–100, 103, 108, oil price, 70, 72, 131 Angel investing. -

Putting on the Crush: Day Trading the Soybean Complex Spread Dominic Rechner Geoffrey Poitras

Putting on the Crush: Day Trading the Soybean Complex Spread Dominic Rechner Geoffrey Poitras INTRODUCTION In recent years, spread trading strategies for financial commodities have received considerable attention [Rentzler (1986); Poitras (1987); Yano (1989)] while, with some exceptions, spreads in agricultural commodities have been relatively ignored. Limited or no information is available, for example, on the performance of various profit margin trading rules arising from production relationships between tradeable agricultural input and output prices, e.g., soybean meal, corn and live hogs [Kenyon and Clay (1987)]. Of the agricultural spreading strategies, the so-called "soy crush" or soybean complex spread is possibly the most well known. Trading rules arising from this spread exploit the gross processing margin (GPM) inherent in the processing of raw soybeans into crude oil and meal. The primary objective of this study is to show that it is possible to use the GPM, derived from the known relative proportions of meal, oil, and beans, to specify profitable rules for day trading the soy crush spread. This article first reviews relevant results of previous studies on the soybean complex and its components followed by specifics on the GPM-based, intraday trading rule under consideration. Assumptions on contract selection, transactions costs, and margin costs are discussed. Simulation results for the trade on daily data over the period February 1, 1978 to July 30, 1991 are presented. The results indicate that, for sufficiently large filter sizes, the trading rule under consideration is profitable during the sample periods examined. It is argued that the results of this study reflect the potential profitability of floor trading in the soybean pits. -
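As a rough illustration of the gross processing margin mentioned in the excerpt above, the sketch below computes a GPM per bushel and applies a simple filter rule. The conversion factors (0.022 and 0.11) are the conventional board-crush approximations and are an assumption here, not values taken from the article; the function names, the `reference_gpm` input, and the signal labels are hypothetical, and the article's actual intraday rule is not reproduced.

```python
# Assumed conventional yields per 60 lb bushel of soybeans (not from the article):
# about 44 lb of meal and about 11 lb of oil.
MEAL_FACTOR = 44 / 2000   # 0.022: converts meal quoted in $/short ton to $/bushel
OIL_FACTOR = 11 / 100     # 0.11: converts oil quoted in cents/lb to $/bushel


def gross_processing_margin(meal_usd_per_ton, oil_cents_per_lb, beans_usd_per_bu):
    """Value of meal and oil from one bushel minus the cost of the bushel ($/bu)."""
    product_value = meal_usd_per_ton * MEAL_FACTOR + oil_cents_per_lb * OIL_FACTOR
    return product_value - beans_usd_per_bu


def crush_signal(gpm, reference_gpm, filter_size):
    """Hypothetical filter rule: trade only when GPM departs from a reference
    level by more than filter_size (all in $/bu)."""
    if gpm > reference_gpm + filter_size:
        # Margin looks wide: buy beans, sell meal and oil ("put on the crush").
        return "put_on_crush"
    if gpm < reference_gpm - filter_size:
        # Margin looks narrow: sell beans, buy meal and oil ("reverse crush").
        return "reverse_crush"
    return "no_trade"


if __name__ == "__main__":
    gpm = gross_processing_margin(300.0, 30.0, 9.0)
    print(round(gpm, 2), crush_signal(gpm, reference_gpm=0.5, filter_size=0.25))
```

With meal at $300 per short ton, oil at 30 cents per pound, and beans at $9 per bushel, the margin works out to about $0.90 per bushel. A larger `filter_size` produces fewer trades, which is the kind of trade-off the excerpt alludes to when it refers to "sufficiently large filter sizes".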

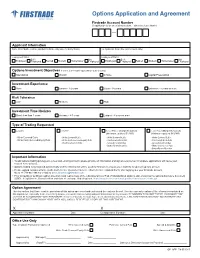

Options Application and Agreement Member FINRA/SIPC Firstrade Account Number (If Application Is for an Existing Account – Otherwise Leave Blank)

Options Application and Agreement Member FINRA/SIPC Firstrade Account Number (if application is for an existing account – otherwise leave blank) Applicant Information Name (First, Middle, Last for individual / indicate entity name for Entity/Trusts) Co-Applicant’s Name (For Joint Accounts Only) Employment Status (applicant and co-applicant): Employed, Self-Employed, Retired, Student, Homemaker, Not Employed Options Investment Objectives (Level 2, 3 & 4 require “Speculation” to be checked) Speculation Growth Income Capital Preservation Investment Experience None Limited - 1-2 years Good - 3-5 years Extensive - 5 years or more Risk Tolerance Low Medium High Investment Time Horizon Short- less than 3 years Average - 4-7 years Longest - 8 years or more Type of Trading Requested Level 1 Level 2 Level Three (Margin Required) Level Four (Margin Required) (Minimum equity of $2,000) (Minimum equity of $10,000) • Write Covered Calls • Write Covered Calls • Write Covered Calls • Write Covered Calls • Write Cash-Secured Equity Puts • Write Cash-Secured Equity Puts • Purchase Calls & Puts • Purchase Calls & Puts • Purchase Calls & Puts • Spreads & Straddles • Spreads & Straddles • Butterfly and Condor • Write Uncovered Puts • Butterfly and Condor Important Information To add options trading privileges to a new and existing account, please provide all information and signature(s) below. Incomplete applications will cause your request to be delayed. Options trading is not granted automatically and the information will be used by Firstrade to assess your eligibility to open an options account. 
Please upload completed form via the Form Center (Customer Service ->Form Center ->Upload Form) after logging into your Firstrade account, fax to +1-718-961-3919 or e-mail to [email protected] Prior to buying or selling an option, investors must read a copy of the Characteristics & Risk of Standardized Options, also known as the options disclosure document (ODD). -

My Top 5 Rules for Successful Debit Spread Trading Trade with Lower Cost and Create More Consistency in Your Options Portfolio

My Top 5 Rules for Successful Debit Spread Trading Trade with Lower Cost and Create More Consistency in Your Options Portfolio Price Headley, CFA, CMT TABLE OF CONTENTS: How Debit Spreads Give You Growth AND Income Potential Rule #1. Buy In-The-Money and Sell At or Out-Of-The-Money Rule #2. Sell More Time Premium Than You Buy Rule #3. Profit Taking in Pieces Increases Win Percentage Rule #4. Don’t Be Greedy – Favor Early Profit Taking Rule #5. Trade in Pairs How Debit Spreads Give You Growth AND Income Potential If you’ve ever bought an option before, you know that one of the challenges of trading a time-limited asset is paying a “time premium” and overcoming the loss of time. And yet as a trader, I still see plenty of opportunities to take advantage of trends on various time frames, from quick moves over a few days to “swing trading” moves over several weeks. So how can you actually place time on your side in the options game? It’s not by just selling options, as that can be profitable, but many traders have seen how a small number of trades can cause you lots of trouble in option selling if a stock or market moves sharply against you. The answer that meets nicely in the middle – profiting from the growth potential of catching a piece of an existing trend, while lowering your total cost per contract and paying no net time in your options – is Debit Spreads. You may have heard them called Vertical Spreads, or Bull Call Spreads or Bear Put Spreads. -

Financial and Commodity-Specific Expectations in Soybean Futures Markets

Financial and Commodity-specific expectations in soybean futures markets A.N.Q. Cao; S.-C. Grosche Institute for Food and Resource Economics, Rheinische Friedrich-Wilhelms-Universität Bonn, Germany Corresponding author email: [email protected] Abstract: We conceptualize the futures price of an agricultural commodity as an aggregate expectation for the spot price of a commodity. The market agents have divergent opinions about the price development and the price drivers, which initiates trading. In informationally efficient markets, the price will thus reflect expectations about its influencing variables. Using historical decompositions from an SVARX model, we analyze the contribution of financial and commodity-specific expectation shocks to changes in a trading-volume weighted price index for corn and soybean futures on the Chicago Board of Trade (CBOT) over the time period 2005-2016. Financial expectations are instrumented with the DJ REIT Index, commodity demand expectations with the CNY/USD exchange rate and supply expectations with changes in the vapor pressure deficit. Results show that the price index was affected by cumulative shocks in the REIT index during the time of the food price crisis, but these shocks are only of small magnitude. Weather fluctuations have a minimal impact on the week-to-week fluctuation of the commodity price index. Acknowledgement: JEL Codes: C32, C52 #1831