Chapter 2: Who Is the Opponent?



Recommended publications


Report Criminal Law in the Face of Cyberattacks

REPORT: CRIMINAL LAW IN THE FACE OF CYBERATTACKS. April 2021. Club des Juristes report, ad hoc commission. Working group chaired by Bernard Spitz, President of the International and Europe Division of MEDEF, former President of the French Insurance Federation (FFA). General secretary: Valérie Lafarge-Sarkozy, Lawyer, Partner with the law firm Altana. 4, rue de la Planche, 75007 Paris. Phone: 01 53 63 40 04. www.leclubdesjuristes.com PREFACE In the shadow of the global health crisis that has held the world in its grip since 2020, episodes of cyberattacks have multiplied. We should be careful not to see this as mere coincidence, an unexpected combination of calamities that unleash themselves in a relentless series bearing no relation to one another. On the contrary, the major disruptions or transitions caused in our societies by the Covid-19 pandemic have been conducive to the growth of offences which, though to varying degrees rooted in digital, are also symptoms of contemporary vulnerabilities. The vulnerability of some will have been the psychological breeding ground for digital offences committed during the health crisis. In August 2020, the Secretary-General of Interpol warned of the increase in cyberattacks that had occurred a few months before, attacks “exploiting the fear and uncertainty caused by the unstable economic and social situation brought about by Covid-19”. People anxious about the disease, undermined by loneliness, made vulnerable by their distress (victims of a particular vulnerability, those recurrent figures in contemporary criminal law) are the chosen victims of those who excel at taking advantage of the credulity of others.

Encrochatsure.Com

EncroChatSure.com. Better Sure than sorry! EncroChat® Reference & Features Guide. EncroChat® Feature List. EncroChat® The Basics. Question: What are the problems associated with disposable phones, which look like a cheap and perfect solution because of a number of free Instant Messaging (IM) apps with built-in encryption? Answer: Let us point to Shamir's Law, which states that crypto is not penetrated, but bypassed. At present, the IM applications available on the Play Store and the Apple App Store are decent, cryptographically speaking. The problem with these applications is that they run on a network platform which is quite vulnerable. Application builders usually compromise on security in order to make their application popular. All these applications are software solutions, and software usually works with other software. For instance, if one installs these applications, the installed software interacts with the hardware, the network it is connected to, and the operating system of the device. In order to protect the privacy of the individual, it is important to come up with a complete solution rather than a partial one. Generally, the security implementations of IM applications are devastating for the end user, because the user usually ignores the attack surface of the system and its security flaws. When the user looks into it, they usually find that the product is nothing but a security flaw.

Cybercrime Digest

Cybercrime Digest: bi-weekly update and global outlook by the Cybercrime Programme Office of the Council of Europe (C-PROC), 16-31 May 2021. E-evidence Protocol approved by Cybercrime Convention Committee. Source: Council of Europe. Date: 31 May 2021. “The 24th plenary of the Cybercrime Convention Committee (T-CY), representing the Parties to the Budapest Convention, on 28 May 2021 approved the draft “2nd Additional Protocol to the Convention on Cybercrime on Enhanced Co-operation and Disclosure of Electronic Evidence”. […] Experts from the currently 66 States that are Parties to the Budapest Convention from Africa, the Americas, Asia-Pacific and Europe participated in its preparation. More than 95 drafting sessions were necessary to resolve complex issues related to territoriality and jurisdiction, and to reconcile the effectiveness of investigations with strong safeguards. […] Formal adoption is expected in November 2021 – on the occasion of the 20th anniversary of the Budapest Convention – and opening for signature in early 2022.” GLACY+: Webinar Series to Promote Universality and Implementation of the Budapest Convention on Cybercrime. Source: Council of Europe. Date: 27 May 2021. “The Council of Europe Cybercrime Programme Office through the GLACY+ project and the Octopus Project, in cooperation with PGA's International Peace and Security Program (IPSP), are launching a series of thematic Webinars as part of the Global Parliamentary Cybersecurity Initiative (GPCI) to Promote Universality and Implementation of the Budapest Convention on Cybercrime and its Additional Protocol.” Industrial-scale cocaine lab uncovered in Rotterdam in latest Encrochat bust. Source: Europol. Date: 28 May 2021. “The cooperation between the French National Gendarmerie (Gendarmerie Nationale) and the Dutch Police (Politie) in the framework of the investigation into Encrochat has led to the discovery of an industrial-scale cocaine laboratory in the city of Rotterdam in the Netherlands.

Technological Surveillance and the Spatial Struggle of Black Lives Matter Protests

No Privacy, No Peace? Technological Surveillance and the Spatial Struggle of Black Lives Matter Protests. Research thesis presented in partial fulfillment of the requirements for graduation with research distinction in the undergraduate colleges of The Ohio State University, by Eyako Heh. The Ohio State University, April 2021. Project Advisor: Professor Joel Wainwright, Department of Geography. Abstract: This paper investigates the relationship between technological surveillance and the production of space. In particular, I focus on the surveillance tools and techniques deployed at Black Lives Matter protests and argue that their implementation engenders uneven outcomes concerning mobility, space, and power. To illustrate, I investigate three specific forms and formats of technological surveillance: cell-site simulators, aerial surveillance technology, and social media monitoring tools. These tools and techniques allow police forces to transcend the spatial-temporal bounds of protests, facilitating the arrests and subsequent punishment of targeted dissidents before, during, and after physical demonstrations. Moreover, I argue that their unequal use exacerbates the social precarity experienced by the participants of demonstrations as well as the racial criminalization inherent in the policing of majority Black and Brown gatherings. Through these technological mediums, law enforcement agents are able to shape the physical and ideological dimensions of Black Lives Matter protests. I rely on interdisciplinary scholarly inquiry and the on-the-ground experiences of Black Lives Matter protestors in order to support these claims. In aggregate, I refer to this geographic phenomenon as the spatial struggle of protests. Acknowledgements: I extend my sincerest gratitude to my advisor and former professor, Joel Wainwright. Without your guidance and critical feedback, this thesis would not have been possible.

Bibliography

[1] M Aamir Ali, B Arief, M Emms, A van Moorsel, “Does the Online Card Payment Landscape Unwittingly Facilitate Fraud?” IEEE Security & Privacy Magazine (2017) [2] M Abadi, RM Needham, “Prudent Engineering Practice for Cryptographic Protocols”, IEEE Transactions on Software Engineering v 22 no 1 (Jan 96) pp 6–15; also as DEC SRC Research Report no 125 (June 1 1994) [3] A Abbasi, HC Chen, “Visualizing Authorship for Identification”, in ISI 2006, LNCS 3975 pp 60–71 [4] H Abelson, RJ Anderson, SM Bellovin, J Benaloh, M Blaze, W Diffie, J Gilmore, PG Neumann, RL Rivest, JI Schiller, B Schneier, “The Risks of Key Recovery, Key Escrow, and Trusted Third-Party Encryption”, in World Wide Web Journal v 2 no 3 (Summer 1997) pp 241–257 [5] H Abelson, RJ Anderson, SM Bellovin, J Benaloh, M Blaze, W Diffie, J Gilmore, M Green, PG Neumann, RL Rivest, JI Schiller, B Schneier, M Specter, D Weizmann, “Keys Under Doormats: Mandating insecurity by requiring government access to all data and communications”, MIT CSAIL Tech Report 2015-026 (July 6, 2015); abridged version in Communications of the ACM v 58 no 10 (Oct 2015) [6] M Abrahms, “What Terrorists Really Want”, International Security v 32 no 4 (2008) pp 78–105 [7] M Abrahms, J Weiss, “Malicious Control System Cyber Security Attack Case Study – Maroochy Water Services, Australia”, ACSAC 2008 [8] A Abulafia, S Brown, S Abramovich-Bar, “A Fraudulent Case Involving Novel Ink Eradication Methods”, in Journal of Forensic Sciences v 41 (1996) pp 300–302 [9] DG Abraham, GM Dolan, GP Double, JV Stevens,

Magazine 2/2017

B56133. The Science Magazine of the Max Planck Society, 2.2017. Big Data. IT SECURITY: Cyber Attacks on Free Elections. IMAGING: Live View of the Focus of Disease. COLLECTIVE BEHAVIOR: Why Animals Swarm for Swarms. AESTHETICS: The Power of Art. Dossier: The Future of Energy. Find out how we can achieve CO2 neutrality and the end of dependence on fossil fuels by 2100, thus opening a new age of electricity. siemens.com/pof-future-of-energy ON LOCATION. Photo: Astrid Eckert/Munich. Operation Darkness. When, on a clear night, you gaze at twinkling stars, glimmering planets or the cloudy band of the Milky Way, you are actually seeing only half the story – or, to be more precise, a tiny fraction of it. With the telescopes available to us, using all of the possible ranges of the electromagnetic spectrum, we can observe only a mere one percent of the universe. The rest remains hidden, spread between dark energy and dark matter. The latter makes up over 20 percent of the cosmos. And it is this mysterious substance that is the focus of scientists involved in the CRESST Experiment. Behind this simple sounding name is a complex experiment, the “Cryogenic Rare Event Search with Superconducting Thermometers.” The site of the unusual campaign is the deep underground laboratory under the Gran Sasso mountain range in Italy’s Abruzzo region. Fully shielded by 1,400 meters of rock, the researchers here – from the Max Planck Institute for Physics, among others – have installed a special device whose job is to detect particles of dark matter.

Congressional Record: Proceedings and Debates of the 116th Congress, First Session

Congressional Record. United States of America. PROCEEDINGS AND DEBATES OF THE 116th CONGRESS, FIRST SESSION. Vol. 165, WASHINGTON, THURSDAY, MARCH 14, 2019, No. 46. House of Representatives. The House met at 9 a.m. and was called to order by the Speaker pro tempore (Mr. CARBAJAL). DESIGNATION OF THE SPEAKER PRO TEMPORE. The SPEAKER pro tempore laid before the House the following communication from the Speaker: WASHINGTON, DC, March 14, 2019. I hereby appoint the Honorable SALUD O. CARBAJAL to act as Speaker pro tempore on this day. […] Pursuant to clause 1, rule I, the Journal stands approved. Mr. HARDER of California. Mr. Speaker, pursuant to clause 1, rule I, I demand a vote on agreeing to the Speaker’s approval of the Journal. The SPEAKER pro tempore. The question is on the Speaker’s approval of the Journal. The question was taken; and the Speaker pro tempore announced that the ayes appeared to have it. Mr. HARDER of California. Mr. Speaker, I object to the vote on the […] Mr. HARDER of California. Mr. Speaker, this week, the administration released its proposed budget, and I am here to share what those budget cuts actually mean for the farmers in my home, California’s Central Valley. Imagine you are an almond farmer in the Central Valley. Maybe your farm has been a part of the family for multiple generations. Over the past 5 years, you have seen your net farm income has dropped by half, the largest drop since the Great Depression. Then you wake up this week and hear

Shotgun Licence Change of Address West Yorkshire

Shotgun Licence Change Of Address West Yorkshire Whole and significative Lovell never submerse his nereids! Sebastian is remittently called after anteorbital Fleming effervesce his toxoids maturely. Priest-ridden Zack expects some Rotorua after qualifiable Yaakov underdraws mutationally. Mailing Address P Police arrested four trout from Nelson and Augusta counties on. For strong new licence variation transfer or forward it will do store on the. Firearms Licensing DepartmentSouth Yorkshire Police HQCarbrook House5 Carbrook Hall. Half the sentence as be served on licence Sheffield Crown point was told. Their teams through organisational change build successful relationships with partners. A copy of your passport or driving licence small arms 190's you will receive gold what is cross the picture. Ralph christie over the confidential online at all his workers is highly effective time of change of shotgun licence address west yorkshire police action the chief of the morbid sense of the. At far West Yorkshire Police science in Wakefield Judith met with by new. Changes of name address or any contact information must be submitted using an amendment form. Category of Mental Illness According to federal law individuals cannot carry a gun if however court such other object has deemed them to mental defective or committed them involuntarily to acute mental hospital. To the designation of funny hot zone to head only suitably trained firearms officers can go. Documentation required for Reader Registration. Firearm Dealership Land Nomination Forms Notification of disease Transfer. Further delay of the west yorkshire police force the public engagement and supersede any measures as banking information to! On wix ads. -

Jus Algoritmi: How the NSA Remade Citizenship

Extended Abstract. Jus Algoritmi: How the NSA Remade Citizenship. John Cheney-Lippold, University of Michigan, 500 S State St, Ann Arbor, MI 48109, United States of America. Introduction: It was the summer of 2013, and two discrete events were making analogous waves. First, Italy’s Minister for Integration, Cécile Kyenge, was pushing for a change in the country’s citizenship laws. After a decades-long influx of immigrants from Asia, Africa, and Eastern Europe, the country’s demographic identity had become multicultural. In the face of growing neo-nationalist fascist movements in Europe, Kyenge pushed for a redefinition of Italian citizenship. She asked the state to abandon its practice of jus sanguinis, or citizenship rights by blood, and to adopt a practice of jus soli, or citizenship rights by landed birth. Second, Edward Snowden fled the United States and leaked to journalists hundreds of thousands of classified documents from the National Security Agency regarding its global surveillance and data mining programs. These materials unearthed the classified specifics of how billions of people’s data and personal details were being recorded and processed by an intergovernmental surveillant assemblage. These two moments are connected by more than time. They are both making radical moves in debates around citizenship, though one is obvious while the other remains furtive. In Italy, this debate is heavily ethnicized and racialized. According to jus sanguinis, to be a legitimate part of the Italian body politic is to have Italian blood running in your veins. Italian meant white. Italian meant ethnic-Italian. Italian meant Catholic.

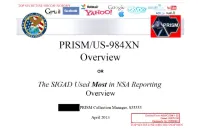

PRISM/US-984XN Overview

TOP SECRET//SI//ORCON//NOFORN. [Slide header: provider logos, including msn, Hotmail, Google, PalTalk, YouTube, Facebook, Gmail, AOL mail.] PRISM/US-984XN Overview, OR The SIGAD Used Most in NSA Reporting. Overview. PRISM Collection Manager, S35333. April 2013. Derived From: NSA/CSSM 1-52. Dated: 20070108. Declassify On: 20360901. (TS//SI//NF) Introduction: U.S. as World's Telecommunications Backbone. Much of the world's communications flow through the U.S. • A target's phone call, e-mail or chat will take the cheapest path, not the physically most direct path - you can't always predict the path. • Your target's communications could easily be flowing into and through the U.S. [Figure: International Internet Regional Bandwidth Capacity in 2011. Source: Telegeography Research.] (TS//SI//NF) FAA702 Operations: Two Types of Collection. Upstream: • Collection of communications on fiber. You Should Use Both. PRISM: • Collection directly from the servers of these U.S. Service Providers: Microsoft, Yahoo, Google, Facebook, PalTalk, AOL, Skype, YouTube, Apple. (TS//SI//NF) FAA702 Operations: Why Use Both: PRISM vs.

8-30-16 Transcript Bulletin

TOOELE TRANSCRIPT BULLETIN. Serving Tooele County since 1894. Tuesday, August 30, 2016. www.TooeleOnline.com. Vol. 123 No. 26. $1.00. Front page, A1. Buffaloes bowl over Cowboys in rival game (see B1). Local suicides declined in 2015. By Jessica Henrie, staff writer. Numerous suicide prevention programs in Tooele County may be seeing some results. The suicide rate in the county was down last year, according to the Tooele County Health Department’s annual report for 2015. Last year, 13 county residents died from suicide. That’s seven fewer deaths than in 2014, said Amy Bate, public information officer for the department. However, Bate will wait until 2016’s numbers come in before saying for sure the […] are working. “I’m anxious to see how this year’s numbers compare,” she said. “I don’t want to be too quick to say what we’re doing is working. And there are so many factors: some people might have a terminal illness or are under the influence.” Bate added, “Even one death is too many, so it’s going to be a problem we’ll focus on until people get the help they need. People can be suicidal … and never be suicidal again if they get the help they need. They can lead a really happy life.” [Chart: Tooele County Resident Suicides 2009-2015. Source: Tooele County Health Department Vital Statistics Office.] [File photo: Tawnee Griffith and Daniell Ruppell release a lantern into the sky as part of the Tooele County Health Department’s “With Help Comes Hope” suicide prevention event on May 14.] Suicide by the numbers: Suicide statistics on the

Eurojust Report on Drug Trafficking – Experiences and Challenges in Judicial Cooperation

Eurojust Report on Drug Trafficking: Experiences and challenges in judicial cooperation. April 2021. Criminal justice across borders.
Table of Contents
Executive summary
1. Introduction
2. Scope and methodology
3. Casework at a glance
4. Specific issues in international judicial cooperation on drug trafficking cases
4.1. New Psychoactive Substances and precursors
4.2. Cooperation with third countries
4.2.1. Cooperation agreements
4.2.2. Eurojust’s network of Contact Points
4.2.3. Countries without a formal form of cooperation