The Early Years of Academic Computing: a Collection of Memoirs

Total pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

VCF PNW 2019

VCF PNW 2019 http://vcfed.org/vcf-pnw/

Schedule

Saturday
10:00 AM Museum opens and VCF PNW 2019 starts
11:00 AM Erik Klein, opening comments from VCFed.org; Stephen M. Jones, opening comments from Living Computers: Museum+Labs
1:00 PM Joe Decuir, IEEE Fellow, Three generations of animation machines: Atari and Amiga
2:30 PM Geoff Pool, From Minix to GNU/Linux - A Retrospective
4:00 PM Chris Rutkowski, The birth of the Business PC - How volatile markets evolve
5:00 PM Museum closes - come back tomorrow!

Sunday
10:00 AM Day two of VCF PNW 2019 begins
11:00 AM John Durno, The Lost Art of Telidon
1:00 PM Lars Brinkhoff, ITS: Incompatible Timesharing System
2:30 PM Steve Jamieson, A Brief History of British Computing
4:00 PM Presentation of show awards and wrap-up

Exhibitors

One of the defining attributes of a Vintage Computer Festival is that exhibits are interactive; VCF exhibitors put in an amazing amount of effort to not only bring their favorite pieces of computing history, but to make them come alive. Be sure to visit all of them, ask questions, play, learn, take pictures, etc. And consider coming back one day as an exhibitor yourself!

Rick Bensene, Wang Laboratories’ Electronic Calculators: An exhibit of Wang Labs electronic calculators from their first mass-market calculator, the Wang LOCI-2, through the last of their calculators, the C-Series. The exhibit includes examples of nearly every series of electronic calculator that Wang Laboratories sold, unusual and rare peripheral devices, documentation, and ephemera relating to Wang Labs' calculator business. -

Technical Details of the Elliott 152 and 153

Appendix 1 Technical Details of the Elliott 152 and 153

Introduction

The Elliott 152 computer was part of the Admiralty’s MRS5 (medium range system 5) naval gunnery project, described in Chap. 2. The Elliott 153 computer, also known as the D/F (direction-finding) computer, was built for GCHQ and the Admiralty as described in Chap. 3. The information in this appendix is intended to supplement the overall descriptions of the machines as given in Chaps. 2 and 3.

A1.1 The Elliott 152

Work on the MRS5 contract at Borehamwood began in October 1946 and was essentially finished in 1950. Novel target-tracking radar was at the heart of the project, the radar being synchronized to the computer’s clock. In his enthusiasm for perfecting the radar technology, John Coales seems to have spent little time on what we would now call an overall systems design. When Harry Carpenter joined the staff of the Computing Division at Borehamwood on 1 January 1949, he recalls that nobody had yet defined the way in which the control program, running on the 152 computer, would interface with guns and radar. Furthermore, nobody yet appeared to be working on the computational algorithms necessary for three-dimensional trajectory prediction. As for the guns that the MRS5 system was intended to control, not even the basic ballistics parameters seemed to be known with any accuracy at Borehamwood [1, 2].

A1.1.1 Communication and Data-Rate

The physical separation, between radar in the Borehamwood car park and digital computer in the laboratory, necessitated an interconnecting cable of about 150 m in length. -

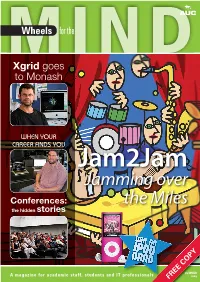

Jamming Over the Miles Man’S History Just Been a Re-Mix of a Few Basic Ideas

Wheels for the MIND. A magazine for academic staff, students and IT professionals. Summer 2009. Free copy. Cover lines: Xgrid goes to Monash; When your career finds you; Jam2Jam: Jamming over the Miles; Conferences: the hidden stories.

PRODUCT ROUND-UP: WHAT’S NEW IN THE WORLD OF TECH

Screen grabs, made to order. If you do screenshots often, you’ll love Layers. Instead of grabbing one single bitmap image of the screen, Layers creates a Photoshop document file that isolates each screen window, menu, desktop icon, menu bar and the Dock in a separate layer. Do your screen grab once, then mix and match the elements until they’re arranged the way that’s most meaningful. $US19.95 ($A30, trial available) from the.layersapp.com.

Make your photos Funtastic. If you’re aching to get creative with your photos, Funtastic Photos will let you flex your right brain until it hurts. Mix and match more than 40 graphical effects, make greeting cards, add reflections, add speech bubbles, email photo cards, create printable 3D photo cubes, export to iPod or mobile, make photo collages and more. Works with iPhoto, and non-destructive editing means all changes can be rolled back.

New look, better Filemaker. Filemaker long ago became synonymous with ‘Mac desktop database’, but the latest version further improves the platform with a host of new features. Most obvious is a heavily redesigned and simplified user interface, but under the covers you’ll benefit from new features like user-triggered scripts, saved finds, themes and templates, embedded Web content, dynamic reports

RFC 1117: Internet Numbers

Network Working Group
Request for Comments: 1117
Authors: S. Romano, M. Stahl, M. Recker (SRI-NIC)
Obsoletes RFCs: 1062, 1020, 997, 990, 960, 943, 923, 900, 870, 820, 790, 776, 770, 762, 758, 755, 750, 739, 604, 503, 433, 349
Obsoletes IENs: 127, 117, 93
August 1989

INTERNET NUMBERS

Status of this Memo

This memo is an official status report on the network numbers and the autonomous system numbers used in the Internet community. Distribution of this memo is unlimited.

Introduction

This Network Working Group Request for Comments documents the currently assigned network numbers and gateway autonomous systems. This RFC will be updated periodically, and in any case current information can be obtained from Hostmaster at the DDN Network Information Center (NIC).

Hostmaster
DDN Network Information Center
SRI International
333 Ravenswood Avenue
Menlo Park, California 94025
Phone: 1-800-235-3155
Network mail: [email protected]

Most of the protocols used in the Internet are documented in the RFC series of notes. Some of the items listed are undocumented. Further information on protocols can be found in the memo "Official Internet Protocols" [40]. The more prominent and more generally used are documented in the "DDN Protocol Handbook" [17] prepared by the NIC. Other collections of older or obsolete protocols are contained in the "Internet Protocol Transition Workbook" [18], or in the "ARPANET Protocol Transition Handbook" [19]. For further information on ordering the complete 1985 DDN Protocol Handbook, contact the Hostmaster. Also, the Internet Activities Board (IAB) publishes the "IAB Official Protocol Standards" [52], which describes the state of standardization of protocols used in the Internet. -

The Early Years of Academic Computing: A Memoir by Douglas S. Gale

The Early Years of Academic Computing: A Memoir by Douglas S. Gale

This is from a collection of reflections/memoirs concerning the early years of academic computing, emphasizing the period when Cornell developed its own decentralized computing environments and networking became a national strategic goal.

©2016 Henrietta Gale
Initial Release: April 2016 (Increment 01)
Second Release: April 2017 (Increments 02-04)
Published by The Internet-First University Press
Copy editor and proofreader: Dianne Ferriss
The entire incremental book is openly available at http://hdl.handle.net/1813/36810
Books and Articles Collection – http://ecommons.library.cornell.edu/handle/1813/63
The Internet-First University Press – http://ecommons.library.cornell.edu/handle/1813/62

C. Memoir by Douglas S. Gale

Preface and Introduction

Bill Arms and Ken King have chronicled much of the early history of academic computing. Accordingly, I have tried to focus my contribution to The Early Years of Academic Computing on topics less covered by King and Arms. My contribution is organized into four chronological periods, roughly spanning the last 50 years of the 20th century, and each has an organizational theme: career transitions, the microcomputer revolution, the networking revolution, and the changing role of academic computing and networking.

The theme of the first period -- career transitions -- will consider where the early practitioners of academic computing came from. Computer Science was first recognized as an academic discipline in 1962, and the early years of academic computing were led by people who had received their academic training in other disciplines. Many, if not most, came from the community of computer users and early adopters. -

Redalyc.Public Entrepreneurs and the Adoption of Broad-Based Merit Aid

Education Policy Analysis Archives/Archivos Analíticos de Políticas Educativas ISSN: 1068-2341 [email protected] Arizona State University Estados Unidos Kyle Ingle, William; Ann Petroff, Ruth Public Entrepreneurs and the Adoption of Broad-based Merit Aid Beyond the Southeastern United States Education Policy Analysis Archives/Archivos Analíticos de Políticas Educativas, vol. 21, enero, 2013, pp. 1-26 Arizona State University Arizona, Estados Unidos Available in: http://www.redalyc.org/articulo.oa?id=275029728058 How to cite Complete issue Scientific Information System More information about this article Network of Scientific Journals from Latin America, the Caribbean, Spain and Portugal Journal's homepage in redalyc.org Non-profit academic project, developed under the open access initiative education policy analysis archives A peer-reviewed, independent, open access, multilingual journal Arizona State University Volume 21 Number 58 July 8, 2013 ISSN 1068-2341 Public Entrepreneurs and the Adoption of Broad-based Merit Aid Beyond the Southeastern United States William Kyle Ingle Ruth Ann Petroff Bowling Green State University USA Citation: Ingle, W. K. & Petroff, R. A. (2013). Public entrepreneurs and the adoption of broad-based merit aid beyond the Southeastern United States. Education Policy Analysis Archives, 21 (58) Retrieved [date], from http://epaa.asu.edu/ojs/article/view/1252 Abstract: The concentration of broad-based merit aid adoption in the southeastern United States has been well noted in the literature. However, there are states that have adopted broad-based merit aid programs outside of the Southeast. Guided by multiple theoretical frameworks, including innovation diffusion theory (e.g., Gray, 1973, 1994; Rogers, 2003), Roberts and King’s (1991) typology of public entrepreneurs, and Anderson’s (2003) stages of the policymaking process, this qualitative study sought to answer the following questions. -

Annual Report

2015 Annual Report ANNUAL 2015 REPORT CONTENTS i Letter from the President 4 ii NYSERNet Names New President 6 iii NYSERNet Members Institutions 8 iv Membership Update 9 v Data Center 10 vi VMWare Quilt Project 11 vii Working Groups 12 viii Education Services 13 ix iGlass 14 x Network 16 xi Internet Services 17 xii Board Members 18 xiii Our Staff 19 xiv Human Face of Research 20 LETTER FROM THE PRESIDENT Dear Colleagues, I am pleased to present to you NYSERNet’s 2015 Annual Report. Through more than three decades, NYSERNet’s members have addressed the education and research community’s networking and other technology needs together, with trust in each other guiding us through every transition. This spring inaugurates more change, as City. The terrible attack of Sept. 11, 2001, we welcome a new president and I will step complicated achievement of that goal, made down from that position to focus on the it more essential, and taught a sobering research community’s work and needs. lesson concerning the importance of communication and the need to harden the By itself, working with NYSERNet’s infrastructure that supports it. We invested extraordinary Board and staff to support in a wounded New York City, deploying fiber and building what today has become a global exchange point at “ These two ventures formed pieces 32 Avenue of the Americas. In the process, we forged partnerships in a puzzle that, when assembled, that have proved deep and durable. benefited all of New York and beyond.” Despite inherent risks, and a perception that New York City the collective missions of our members institutions might principally benefit, for the past 18 years has been a privilege NYSERNet’s Board unanimously supported beyond my imagining. -

“The Past and Future of the Internet”

“The Past and Future of the Internet” presented by Dr Glenn Ricart – Ph.D Synopsis: Glenn Ricart will share some of his first-hand stories about how the ARPAnet grew into an international commercial Internet during the 1980s. Then, looking forward, Dr. Ricart will turn to the developments which he believes will need to take place in technology, economics, and politics in order to allow the Internet to continue to grow. Glitches and weaknesses which are tolerable for an entertainment medium will not be acceptable as Internets become more integral to the economy, health and safety, and national defense. Curriculum Vitae: Dr. Glenn Ricart is an Internet pioneer and entrepreneur with a broad range of technology and business leadership experience in large corporations, startups, academia, the U.S. military, and government research. His positions have included President and CEO of National LambdaRail, Managing Director at PricewaterhouseCoopers, Co-founder and Chief Technology Officer of CenterBeam, and Executive Vice President and Chief Technology Officer for Novell. He is known for his pioneering work in bringing the ARPAnet protocols into academic and commercial use. In the mid-1980s, while academic CIO at the University of Maryland College Park, his teams created the first implementation of TCP/IP for the IBM PC; created the first campus-wide TCP/IP network, shipped and managed the software that powered the first non-military TCP/IP national network, the NSFnet; created the first open Internet interchange point (the FIX and later MAE-EAST); and proposed and created the first operating NSFnet regional network, SURAnet. From 1995-1999 he was Novell's EVP and Chief Technology Officer responsible for its technology. -

Core Magazine February 2002

FEBRUARY 2002 CORE 3.1 A PUBLICATION OF THE COMPUTER HISTORY MUSEUM WWW.COMPUTERHISTORY.ORG PAGE 1 February 2002 OUR ACTIONS TODAY COREA publication of the Computer History3.1 Museum IN THIS MISSION ISSUE TO PRESERVE AND PRESENT FOR POSTERITY THE ARTIFACTS AND STORIES OF THE INFORMATION AGE INSIDE FRONT COVER VISION OUR ACTIONS TODAY The achievements of tomorrow must be was an outstanding success, and I simply doesn’t exist anywhere else in TO EXPLORE THE COMPUTING REVOLUTION AND ITS John C Toole rooted in the actions we take today. hope you caught the impact of these the world. With your sustained help, our IMPACT ON THE HUMAN EXPERIENCE Many exciting and important events announcements that have heightened actions have been able to speak much 2 THE SRI VAN AND COMPUTER have happened since our last CORE awareness of our enterprise in the louder than words, and it is my goal to INTERNETWORKING publication, and they have been community. I’m very grateful to Harry see that we are able to follow through Don Nielson carefully chosen to strategically shape McDonald (director of NASA Ames), Len on our dreams! EXECUTIVE STAFF where we will be in five years. Shustek (chairman of our Board of 7 John C Toole David Miller Trustees), Donna Dubinsky (Museum This issue of CORE is loaded with THE SRI VAN AND EARLY PACKET SPEECH EXECUTIVE DIRECTOR & CEO VICE PRESIDENT OF DEVELOPMENT 2 Don Nielson First, let me officially introduce our Trustee and CEO of Handspring), and technical content and information about Karen Mathews Mike Williams new name and logo to everyone who Bill Campbell (chairman of Intuit) who our organization—from a wonderful EXECUTIVE VICE PRESIDENT HEAD CURATOR 8 has not seen them before. -

Hannes Mehnert [email protected] December

Dylan
Hannes Mehnert [email protected] December 16, 2004

Outline
Introduction: Overview, Hello World, History
Dylan: Libraries and Modules, Classes, Generic Functions, Types, Sealing
Going Further

Overview
- DYnamic LANguage
- Object Oriented: everything is an object
- Safe: type-checking of arguments and values, no buffer overruns, no implicit casting, no raw pointers
- Efficient: can compile to code nearly as efficient as C

Hello world
define method hello-world () format-out("Hello world\n"); end method;

factorial
define method factorial ( i ) if (i = 0) 1 else i -

Smith 1 CINE-GT 1807 Fall 2019 Madeline Smith December 13

Smith 1 CINE-GT 1807 Fall 2019 Madeline Smith December 13, 2019 Assignment 2: Final Research Paper The History of Web Archiving and the Evolution of Archive-It’s Brozzler Since the beginning of the Internet and the World Wide Web in 1991, an unimaginable amount of data and content has been created, shared, posted, and stored on the World Wide Web. When the World Wide Web began, there was no system in place to archive and preserve the Web content, as no one could imagine how massive the infrastructure would become. It was not until the 1990s that users began to recognize the gravity of archiving and preserving all the incredibly diverse born-digital data and content on the Web. Web crawlers are a key component in accomplishing the mission to archive Web content before it is lost for good. While there have been advancements in web archiving over the years, considering the speed at which digital technology and the varied uses of the Internet are changing, there is still work to be done. One of the more recent web crawling technologies is Archive-It’s Brozzler. This report aims to frame Brozzler within the larger landscape of web archiving and web crawler technology. The report will attempt to answer whether Brozzler as a web archiving tool is effective for capturing dynamic websites and the rapidly evolving content of the modern web. The end of this report will be an examination of my own experience attempting to use Brozzler. In order to understand web archiving and web crawling, a few terms used in describing the evolution of the Internet and the World Wide Web need to be defined. -

The People Who Invented the Internet Source: Wikipedia's History of the Internet

The People Who Invented the Internet Source: Wikipedia's History of the Internet PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 22 Sep 2012 02:49:54 UTC Contents Articles History of the Internet 1 Barry Appelman 26 Paul Baran 28 Vint Cerf 33 Danny Cohen (engineer) 41 David D. Clark 44 Steve Crocker 45 Donald Davies 47 Douglas Engelbart 49 Charles M. Herzfeld 56 Internet Engineering Task Force 58 Bob Kahn 61 Peter T. Kirstein 65 Leonard Kleinrock 66 John Klensin 70 J. C. R. Licklider 71 Jon Postel 77 Louis Pouzin 80 Lawrence Roberts (scientist) 81 John Romkey 84 Ivan Sutherland 85 Robert Taylor (computer scientist) 89 Ray Tomlinson 92 Oleg Vishnepolsky 94 Phil Zimmermann 96 References Article Sources and Contributors 99 Image Sources, Licenses and Contributors 102 Article Licenses License 103 History of the Internet 1 History of the Internet The history of the Internet began with the development of electronic computers in the 1950s. This began with point-to-point communication between mainframe computers and terminals, expanded to point-to-point connections between computers and then early research into packet switching. Packet switched networks such as ARPANET, Mark I at NPL in the UK, CYCLADES, Merit Network, Tymnet, and Telenet, were developed in the late 1960s and early 1970s using a variety of protocols. The ARPANET in particular led to the development of protocols for internetworking, where multiple separate networks could be joined together into a network of networks. In 1982 the Internet Protocol Suite (TCP/IP) was standardized and the concept of a world-wide network of fully interconnected TCP/IP networks called the Internet was introduced.