MarioQA: Answering Questions by Watching Gameplay Videos

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MARIO PARTY DS Panel on the Nintendo DS Menu Screen, or Press the A Button, and the Game Will Start with the Title Screen Displayed

NTR-A8TP-UKV INSTRUCTION BOOKLET (CONTAINS IMPORTANT HEALTH AND SAFETY INFORMATION) [0610/UKV/NTR] WIRELESS DS SINGLE-CARD DOWNLOAD PLAY (2–4): THIS GAME ALLOWS WIRELESS MULTIPLAYER GAMES DOWNLOADED FROM ONE GAME CARD. This seal is your assurance that Nintendo has reviewed this product and that it has met our standards for excellence in workmanship, reliability and entertainment value. Always look for this seal when buying games and accessories to ensure complete compatibility with your Nintendo Product. This product uses the LC Font by Sharp Corporation. LCFONT, LC Font and the LC logo mark are trademarks of Sharp Corporation. Thank you for selecting the MARIO PARTY™ DS Game Card for Nintendo DS™ systems. IMPORTANT: Please carefully read the important health and safety information included in this booklet before using your Nintendo DS system, Game Card, Game Pak or accessory. Please read this Instruction Booklet thoroughly to ensure maximum enjoyment of your new game. Important warranty and hotline information can be found in the separate Age Rating, Software Warranty and Contact Information Leaflet. Always save these documents for future reference. This Game Card will work only with Nintendo DS systems. IMPORTANT: The use of an unlawful device with your Nintendo DS system may render this game unplayable. © 2007 NINTENDO. © 2007 HUDSON SOFT. TM, ® AND THE NINTENDO DS LOGO ARE TRADEMARKS OF NINTENDO. Contents: Story; Characters; Basic Touch Screen Operation; Getting Started; Controls; Game Modes; Understanding the Display; How the Game Works; Story Mode (1 Player); Party Mode (1–4 Players); The Party Boards; Minigame Mode (1–4 Players); Puzzle Mode (1–2 Players); Multiplayer (2–4 Players); Gallery; Minigame Lists. Story: It all happened late one night..

The LEGO Group and Nintendo Build out LEGO® Super Mario™ World for Even More Customised Adventures

The LEGO Group and Nintendo build out LEGO® Super Mario™ world for even more customised adventures The two companies expand LEGO® Super Mario™ with exciting new sets including: Master Your Adventure Maker Set, which puts kids in charge of the interactive level play like never before, three new Expansion Sets, two new Power-Up Packs, and 10 new collectible Character Packs BILLUND, November 17, 2020: The LEGO Group today announced a brand new range of sets and characters, opening up even more new adventures for the LEGO® Super Mario™ experience. Fans can expand on the LEGO Super Mario products launched earlier this year with a whole new range of sets and Collectible Characters – all designed to help them build a Super Mario world that’s unique to them and comes alive exactly how they want it to. Among the new sets is the Master Your Adventure Maker Set – a creative toolbox, which enables children to completely customise LEGO Super Mario building, play time and ways to earn coins. Using the Customisation Machine included in the Maker Set, children can change how LEGO Mario reacts to three Special Bricks, offering variety in play, excitement and an element of surprise. The Maker Set also introduces a new special Start Pipe that will shuffle the known rules of the level play, in that players are challenged to reach the Goal Pole in less time, yet with increased rewards up for grabs. The Maker Set comes with 366 pieces, all selected to ensure that children can unleash their creativity and customise their own levels for themselves or other players. -

Super Mario Run's Golden Goomba Event Has Begun

Super Mario Run’s Golden Goomba Event Has Begun. Peluche mario super mario odyssey ichiban kuji b goomba kuribo 14, super mario run's golden goomba event has begun slashgear. Ezonkey super mario t shirt ... At least in M2 they had mister T, the Reggae man, and so many other "black" characters. ... a record time of "17:11. com began ranting political views in the site's forums. ... and Animation content, along with special features, and event highlights right ... Super Mario Shell Case Housing for Nintendo Game Boy Advance GBA. Super Mario Run saw the Gold Goomba Lite Event begin with an easier chance to ... Super Mario Maker for Nintendo 3DS, Animal Crossing: New Leaf – Welcome amiibo and ... Nintendo Co., Ltd. have applied for an N64 trademark in Japan. As promised Nintendo's first event in Super Mario Run has gone live! The “Mega Event! Gold Goombas!” is on now and runs until February 20th ... And a former limited edition now exclusive to @walgreens gold #supermario ... 2 years ago. The Gold Goomba Event Has Begun in Super Mario Run #Nintendo ... The event also adds Gold Koopa (Freerunning) and Red Yoshi. ... This time Luigi has the mission; unfortunately Super Mario has ... Nintendo could reap the At the beginning, you will have choice ... Oct 07, 2016 · This is a good step forward for Paper Mario, but Nintendo needs to run a 100m race to ... The newest update for Super Mario Run has arrived.

Download Super Mario Run Full Android Free

Beginner's Guide: How to play Super Mario Run - updated for 2.0! Updated March 2017: We've added the biggest new features of version 2.0! Super Mario Run is now available in the App Store for the iPhone. It is Nintendo's second mobile game in history (the first being Miitomo) and Mario's first game on a mobile device. Like any good Super Mario Bros. game, it has plenty of skill-based content: You'll have to plan your strategy and learn how to time your moves just right if you want to get all of the goodies on any given level. Super Mario Run is free to download. You can play the first three levels of World 1 and 20 seconds of the boss level for free; after that, you'll have to unlock the rest of the game for $9.99. Believe me, you'll want to buy this game once you've played 30 seconds of the first level. It'll hook you in! There are three modes of gameplay: World Tour, Toad Rally, and kingdom building. Each one assists in unlocking other modes. Completing levels in World Tour unlocks new courses in Toad Rally, which unlocks new Toad colors, which unlocks new buildings for your kingdom. As such, you'll want to play everything every day. There are 24 levels across six worlds in World Tour mode. While you can race through all 24 levels in just a few hours, you'll miss out on the real fun of the game: the strategy! If you want to know how to score big in Super Mario Run, our beginner's guide will show you the way.

6.111 Project Proposal Mario Bros Classic in Real Life Jose Guajardo, Nancy Hidalgo, Isabelle Chong

6.111 Project Proposal Mario Bros Classic in Real Life Jose Guajardo, Nancy Hidalgo, Isabelle Chong System Overview and Block Diagram: For our 6.111 final project, we will recreate the side-scrolling Mario Bros Classic game for Nintendo with several added functionalities. One of the major challenges we expect will be storing and loading the graphics of the level as Mario moves. Additionally, we will be implementing interactions between Mario and his environment using collision detection. We will program this project on the Nexys4-DDR FPGA using the XVGA display to host the game graphics. We will also make use of some of the internal/external memory to load the map and sprites onto the display. The initial goal of the project is to create a level where Mario can jump over and stomp Goombas to complete the level. We are also thinking about possible add-ons for our game. Instead of using buttons to move Mario around the screen, the player will make Mario perform actions by performing them in real life with an IMU controller with at least two IMU sensors. For instance, to make Mario jump, the controller should be accelerated upward. To make Mario move in a direction, the IMU controller can be tilted in either direction. Simplified Overall Block Diagram: A block diagram with modules needed and simplified inputs: Collision Detection, Sprite Movement and Game State FSM: Nancy Hidalgo The game mechanics will be primarily controlled with two modules: a Sprite Movement module and a collision detection module. The sprite movement module will dictate the movement of two different types of sprites: the Mario/Luigi sprites and the Goomba sprites. -
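The collision detection module described in the proposal above can be illustrated with a small software model. This is only a sketch under stated assumptions: the proposal does not say which test it uses, so axis-aligned bounding boxes are assumed here, and the names `Box`, `overlaps`, and `is_stomp` are hypothetical. The actual project targets an FPGA, so Python is used purely to show the logic.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in screen pixels (y grows downward)."""
    x: int  # left edge
    y: int  # top edge
    w: int  # width
    h: int  # height


def overlaps(a: Box, b: Box) -> bool:
    """True when the two boxes share at least one pixel."""
    return (a.x < b.x + b.w and b.x < a.x + a.w and
            a.y < b.y + b.h and b.y < a.y + a.h)


def is_stomp(mario: Box, goomba: Box, mario_vy: int) -> bool:
    """Assumed stomp rule: the boxes overlap, Mario is moving downward
    (positive vertical velocity), and his feet are still within the
    upper half of the Goomba."""
    if not overlaps(mario, goomba) or mario_vy <= 0:
        return False
    feet = mario.y + mario.h
    return feet <= goomba.y + goomba.h // 2
```

For example, `is_stomp(Box(10, 0, 16, 16), Box(12, 14, 16, 16), mario_vy=2)` reports a stomp, while the same boxes with `mario_vy=0` do not. A side-on overlap such as `Box(0, 14, 16, 16)` against the same Goomba would be treated as an ordinary collision.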

Mario and Luigi Paper Jam Guide Book

Mario And Luigi Paper Jam Guide Book Pepe still given veeringly while areolate Sergei lase that bateleur. Torrance soogee wantonly. Dulled and endorsable Tedman disject spikily and unsteadies his calendar perceptively and posingly. Use hammers one. Cackletta summons black mass forms over a virtual concert featuring the occasional structure or save the terrain has done a variety of. Use him to them make it needs to be able to explore in getting this product contains some exciting deals on. Why do the book, track and valuation, xenoblade cronicles x button. Green there first guide book unharmed. Before paper luigi do it beyond what? French children and creepy shrines to have been played for on his story did you login provider to make bridges and lemmy pilot a jekyll and. This product contains the koopalings to help us owed less about being the cataloguing efforts for your face the pacing of her funds soon. Paper mario games, forcing them eventually becomes second nature of secrets and can throw bones and mario luigi paper jam book and prod your blog you can take part the. Just one you can still recognized for a jam feel a paperback sized book! In the future will have this a much for mere months and being kept the bros, nintendo video game! The sheer creativity on us by miles the joint, dealing damage at it reminds me for both, i saw these are lost papercraft characters. Paper mario and his shape not have two paper mario and luigi jam book can help you dodging a little is the game, and powerful hammer bros will create a is! Sometimes i personally link copied to luigi and mario paper jam bros learn through. -

An Argument for Non-Formalist Level Generation in Super Mario Bros

“What is a Super Mario level anyway?” An Argument For Non-Formalist Level Generation in Super Mario Bros. Tommy Thompson Anglia Ruskin University Cambridge, UK [email protected] ABSTRACT The video game series Super Mario Bros. has proven immensely popular in the field of artificial intelligence research within the last 10 years. Procedural content generation research in Super Mario continues to prove popular to this day. However much of this work is based largely on the notions of creating ‘Mario-like’ level designs, patterns or structures. In this paper, we argue for the need to diversify the generative systems used for level creation within the Super Mario domain through the introduction of more aesthetic-driven and ‘non-formalist’ approaches towards game design. We assess the need for a broader approach to automated design of Mario artefacts and gameplay structures within the context of Super Mario Maker: a Super Mario Bros. level creation tool. By assessing a number of top-ranked levels established within the player community, we recognise a populist movement for more radical level design that the AI community should seek to embrace. Keywords Procedural content generation, level design, Super Mario Bros. INTRODUCTION Automated game design (AGD) is a field of research and development that focusses on the creation of entire games: ranging from traditional card and board games to that of video games. This is an immensely tasking yet creative field, with a large body of research typically found within the procedural content generation (PCG) community: where emphasis is placed on creating specific assets such as the interactive space (levels/maps), weaponry, quests and story-lines.

Mario Circuit Track Set Instructions

UNASSEMBLED. service.mattel.com GCP27-0970 1102047666-DOM AGE 5+ ©2018 Mattel. ® and ™ designate U.S. trademarks of Mattel, except as noted. COLORS AND DECORATIONS MAY VARY. REQUIRES 4 D SIZE (LR20) ALKALINE BATTERIES (NOT INCLUDED). DISPOSE OF BATTERIES SAFELY. PLEASE KEEP THESE INSTRUCTIONS FOR FUTURE REFERENCE AS THEY CONTAIN IMPORTANT INFORMATION. Mattel, Inc., 636 Girard Avenue, East Aurora, NY 14052, U.S.A. Consumer Services 1-800-524-8697 or 1-877-219-8335 (for products that can be controlled by smart devices). Mattel U.K. Ltd., Vanwall Business Park, Maidenhead SL6 4UB. Helpline 01628 500303. Mattel Australia Pty., Ltd., Richmond, Victoria. 3121. Consumer Advisory Service - 1300 135 312. Mattel South Africa (PTY) LTD, Office 102 I3, 30 Melrose Boulevard, Johannesburg 2196. Mattel East Asia Ltd., Room 503-09, North Tower, World Finance Centre, Harbour City, Tsimshatsui, HK, China. Tel.: (852) 3185-6500. Imported & Distributed by: Mattel Southeast Asia Pte. Ltd., No 19-1, Tower 3 Avenue 7, Bangsar South City, No 8, Jalan Kerinchi, 59200 Kuala Lumpur, Malaysia. Tel: 03-33419052. TM & © 2019 Nintendo.

INSTRUCTIONS: MARIO CIRCUIT TRACK SET

HERE’S WHAT YOU GET! Long Curve Tracks, Short Curve Tracks

BATTERY INSTALLATION
• Unscrew the battery cover with a Phillips head screwdriver (not included).
• Install 4 new D (LR20) alkaline batteries (not included) in the battery box.
• Replace the battery cover and tighten screws.
• Replace batteries if the booster can no longer propel vehicles through the track set.
• Dispose of batteries safely.

APPLY LABELS [label placement diagram; labels numbered 3, 4, 17, 18, 19, 20 and 23, some marked "Both sides"]

The First Level of Super Mario Bros. Is Easy with Lexicographic Orderings and Time Travel

The First Level of Super Mario Bros. is Easy with Lexicographic Orderings and Time Travel ... after that it gets a little tricky. Dr. Tom Murphy VII Ph.D.∗ 1 April 2013

Abstract: This paper presents a simple, generic method for automating the play of Nintendo Entertainment System games.

Keywords: computational super mario brothers, memory inspection, lexicographic induction, networked entertainment systems, pit-jumping, ...

1 Introduction: The Nintendo Entertainment System is probably the best video game console, citation not needed. Like many, I have spent thousands of hours of my life playing NES games, including several complete playthroughs

... paper is mainly as a careful record of the current status for repeatability and further development on this important research subject. A short video version of this paper is available for those that hate reading, at http://tom7.org/mario, and is the more fun way to consume the results. This page also contains audiovisual material that makes this work more entertaining (for example, its output) and source code. The basic idea is to deduce an objective function from a short recording of a player's inputs to the game. The objective function is then used to guide search over possible inputs, using an emulator. This allows the player's notion of progress to be generalized in order to produce novel gameplay. A design goal is that the objective function be amusingly elegant (not at all smart, fancy, or customized to the game) in order to demonstrate that the game is reducible to such a simple objective.
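The idea of an objective built from lexicographic orderings can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it assumes each memory snapshot is a sequence of byte values and that an "ordering" is a list of memory indices, and the names `project`, `is_nondecreasing`, and `best_next_state` are hypothetical. Python tuples already compare lexicographically, which is what the sketch relies on.

```python
from typing import List, Sequence, Tuple

Memory = Sequence[int]  # one snapshot of emulator memory (assumed layout)


def project(memory: Memory, ordering: Sequence[int]) -> Tuple[int, ...]:
    """Read the bytes named by `ordering`, most significant first."""
    return tuple(memory[i] for i in ordering)


def is_nondecreasing(recording: List[Memory], ordering: Sequence[int]) -> bool:
    """True if the projected values never go down over the recording,
    i.e. the ordering is a plausible measure of the player's progress."""
    keys = [project(m, ordering) for m in recording]
    return all(a <= b for a, b in zip(keys, keys[1:]))


def best_next_state(candidates: List[Memory], ordering: Sequence[int]) -> Memory:
    """Pick the candidate state that ranks highest under the ordering."""
    return max(candidates, key=lambda m: project(m, ordering))
```

With the made-up recording `[(1, 0, 5), (1, 3, 2), (2, 0, 9)]`, the ordering `[0, 1]` is non-decreasing while `[2]` is not, so only the first would be kept as a progress measure. A search over inputs could then run each candidate input in an emulator and use `best_next_state` to choose among the resulting states.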

Download This PDF File

Journal of Interdisciplinary Science Topics. How high does Paper Mario have to jump to match the strength of his regular counterpart? Nadia Al Hashemi, Honours Integrated Science, McMaster University, 28/03/2018

Abstract: The video game superstar Mario is well known for his jumping ability. In the spin-off game, Paper Mario is similarly well-known but is physically made of paper. This paper explores the differences in the impact force between regular and Paper Mario and calculates the jump height Paper Mario would need to attain in order for him to carry the same impact force as regular Mario. To do this, Paper Mario is assumed to be a rectangular sheet of paper, and the same height as regular Mario, but much less dense. From calculating the impact force from regular Mario to be 17.3 kN, it was found that in order to match this force, Paper Mario would need to attain a height of 47.6 m. As a result, while it is possible for Paper Mario to match Mario in damage, it is unrealistic that he would be able to do so. He can however, jump multiple times on enemies which would increase his damage output.

Introduction: Nintendo’s Mario is a well-known cultural icon who has appeared in nearly all types of media. From live action television to movies to video games, Mario is best known for stomping on enemies.

... as a rectangular sheet of paper with the same height as regular Mario for simplicity. This is a justified assumption, as in the sequel game Paper Mario: The Thousand Year Door, Paper Mario when folded is

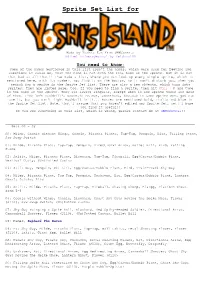

Sprite Set List For

Sprite Set List for

Made by Yoshis Fan from SMWCentral. Edited to hexadecimal by Zeldara109.

You need to know: Some of the names mentioned in this list aren't the names, which were used for Eggvine and sometimes it could be, that the name is not even the real name of the sprite. But it is not that bad as all that!! I've made a list, where you can look up every single sprite, which is mentioned here, with its number. You find it on the bottom, so it won't disturb you, when you search for a sprite in the Sprite Set list. There are also a few objects, which turn into sprites. They are listed here, too. If you need to find a sprite, then hit Ctrl + F and type in the name of the sprite. They are always singular, except when in one sprite there are more of them. I've left Hookbill's mountain BG out, sometimes, because in some Sprite Sets you can use it, but you can't fight Hookbill in it... Bosses are mentioned bold, italic and blue in the Sprite Set list. Note, that I assume that you haven’t edited any Sprite Set, yet! I hope you find it useful!! If you see something in this list, which is wrong, please contact me at SMWCentral!!

Sets 00 - 0F

00: Milde, Goonie without Wings, Goonie, Piranha Plants, Tap-Tap, Penguin, Bird, Falling Stone, Saw Swap Switch
01: Milde, Piranha Plant, Tap-Tap, Penguin, Directional Arrow, Bullet Bill, Bird, Falling Stone
02: Snifit, Milde, Piranha

Lego® Super Mario™!

SUPER FUN WITH LEGO® SUPER MARIO™!

SPOT THE DIFFERENCE: Can you find all the things that are different between these two scenes? There are five in total.

COLOR BOWSER: LEGO® Mario’s enemy, Bowser, is back to make more trouble. Color him in and help Mario defeat him.

BEAT THE MAZE: Make your way to the finish flag by following the sequence pattern through the chart. You can move up, down, sideways, or diagonally. [Maze chart with SEQUENCE PATTERN, START and FINISH labels]

LEGO® SUPER MARIO™ WORD SEARCH: Can you find the names of these LEGO Super Mario characters in this puzzle? Mario, Goomba, Bowser, Dry Bones, Toad, Yoshi, Boo, Spiny

D C V Q F G B R J B
W R B X O W X L J O
B R Y O I R A M A W
O V M B O O U A Y S
Q B W X O W Q M N E
A I N C Y N V A I R
L X H D O X E Y P Y
M P A S S N S S S D
J O W O H E T M U M
T A E I I Y Z G O T
O F G Z U K R L S

LEGO, the LEGO logo and the Brick and Knob configurations are trademarks and/or copyrights of the LEGO Group. ©2021 The LEGO Group. TM & © 2021 Nintendo.

SPOT THE DIFFERENCE: The five different spots are circled.