Where Is My URI?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Curriculum Vitae

Curriculum vitae Personal details: Dr. rer. nat. Frank-Michael Schleif Hechtstrasse 41 01097 Dresden, Germany Phone: 0351 / 32041753 Email: [email protected] male, born 11. 12. 1977 in Leipzig, Germany single, nationality: German Professional address: Dr. rer. nat. habil. Frank-Michael Schleif School of Computer Science The University of Birmingham Edgbaston Birmingham B15 2TT United Kingdom Email: [email protected] Education: 2013 Habilitation (postdoctoral lecture qualification) 2004-2006 PhD studies in machine learning. PhD Thesis on Prototype based Machine Learning for Clinical Proteomics (magna cum laude), supervised by Prof. Barbara Hammer (University of Clausthal) 1997-2002 Studies of computer science, Diploma thesis: Moment based methods for cha- racter recognition, supervised by Prof. Dietmar Saupe (University of Leipzig, now University of Konstanz) Professional experience 2014{now Marie Curie Fellow (own project) Probabilistic Models in Pseudo- Euclidean Spaces (IEF-EU funding) in the group of Reader Peter Tino, University of Birmingham 2010{2013 Postdoctoral Researcher Project leader in the project Relevance learning for temporal neural maps (DFG) and Researcher at the Chair of Prof. Barbara Hammer (Technical University of Clausthal until April 2010, now University of Bielefeld) 2009{2011 Part-Project leader in the project Fuzzy imaging and deconvolution of mass spectra in system biology (FH-Mittweida / Bruker) 2008{now Part-Project leader in the project Biodiversity funded by the state of Saxony. Research and development for signal processing and pattern recognition al- gorithms for the analysis of mass spectrometry data of bacteria biodiversity. 2006{2009 Postdoctoral Researcher Researcher & part project leader in the project MetaStem (University hos- pital Leipzig, BMBF). -

Training in Cultural Policy and Management International Directory of Training Centres

Training in Cultural Policy and Management International Directory of Training Centres Europe Russian Federation Caucasus Central Asia 2003 UNESCO Division of Cultural Policies and Intercultural Dialogue 1, Rue Miollis F-75732 Paris cedex 15 Tel: +33 1 45 68 55 97 Fax: +33 1 45 68 43 30 e-mail: [email protected] www.unesco.org/culture ENCATC 19, Square Sainctelette B-1000 Brussels Tel: +32.2.201.29.12 Fax: + 32.2.203.02.26 e-mail: [email protected] - [email protected] www.encatc.org Training in Cultural Policy and Management International Directory of Training Centers Europe, Russian Federation, Caucasus, Central Asia May 2003 Survey commissioned to the European Network of Cultural Administration Training Centres (ENCATC) by UNESCO. This publication is available only in English. Publisher: UNESCO Content: European Network of Cultural Administration Centres in cooperation with UNESCO Foreword: Ms Katérina Stenou, UNESCO, Director, Division of Cultural Policies and Intercultural Dialogue Introduction: Ms GiannaLia Cogliandro, ENCATC, Executive Director Data collection:May 2002 - May 2003 This publication is also available on the internet: www. encatc.org and www.unesco.org/culture Contact data can be found at the end of this publication © UNESCO/ENCATC Reproduction is authorized provided the source is acknowledged. The opinions expressed in this document are the responsibility of the authors and do not necessarily reflect the official position of UNESCO. The information in the second part of the document was reproduced as provided by the institutions participating in this survey. The institutions carry the responsibility for the accuracy and presentation of this information. -

2007 Conference Abstracts

Abstracts 49th Annual Conference Western Social Science Association WSSA 49th Annual Conference Abstracts i Abstracts 49th Annual Conference Western Social Science Association CALGARY, ALBERTA, CANADA April 11 to April 14, 2007 Abstracts are organized by section. Within Sections, the abstracts appear alphabetically by the last name of the first author. A Table of Contents appears on the next page. WSSA 49th Annual Conference Abstracts ii Section Coordinators Listing ...................................................................................... iii African American and African Studies ........................................................................ 1 American Indian Studies .............................................................................................. 4 American Studies........................................................................................................ 15 Anthropology............................................................................................................... 18 Arid Lands Studies...................................................................................................... 19 Asian Studies .............................................................................................................. 20 Association for Borderlands Studies ........................................................................ 27 Canadian Studies ........................................................................................................ 58 Chicano Studies/Land Grants -

Postdoctoral Researcher

Reference number 161/2021 Postdoctoral Researcher: Environmental and Resource Economist (m/f/d) Founded in 1409, Leipzig University is one of Germany’s largest universities and a leader in research and medical training. With around 30,000 students and more than 5000 members of staff across 14 faculties, it is at the heart of the vibrant and outward-looking city of Leipzig. Leipzig University offers an innovative and international working environment as well as an exciting range of career opportunities in research, teaching, knowledge and technology transfer, infrastructure and administration. The German Centre for Integrative Biodiversity Research (iDiv) Halle-Jena-Leipzig and the Faculty of Economics and Management Science of Leipzig University seek to fill the above position from 1 October 2021 or at the earliest opportunity. Background The German Centre for Integrative Biodiversity Research (iDiv) Halle-Jena-Leipzig is a National Research Centre funded by the German Research Foundation (DFG). Its central mission is to promote theory- driven synthesis and data-driven theory in this emerging field. Located in the city of Leipzig, it is a central institution of Leipzig University and jointly hosted by the Martin Luther University Halle-Wittenberg, the Friedrich Schiller University Jena and the Helmholtz Centre for Environmental Research (UFZ). More Information about iDiv: www.idiv.de. Leipzig is a vibrant hotspot for creativity in eastern Germany, known for its world-class research in atmospheric sciences, biodiversity research, economics, and remote sensing. Leipzig University aims to tap into the full potential of interdisciplinary research to understand how economic dynamics interact with climate and biodiversity. The advertised position is part of the endeavour. -

See the Final Program

2nd INTERNATIONAL SYMPOSIUM OF THE CRC 1052 “OBESITY MECHANISMS” September 2–4, 2019 in Leipzig Venue Mediencampus Villa Ida Poetenweg 28 04155 Leipzig phone +49 341-56296-704 The Mediencampus is an attractive meeting location in the center of Leipzig close to the Zoo. Highlights of the Meeting: - Outstanding research lectures in obesity - Poster exhibition - Get together with Blondie & The Brains (The band consists of members of the CRC 1052, legal musicians and the female singer) CRC 1052 Spokesperson Organizers Prof. Matthias Blüher Dr. Lysann Penkalla E-mail: [email protected] Leipzig University Phone: +49 341 97 22146 Faculty of Medicine Susanne Renno E-mail: [email protected] Clinic for Endocrinology and Phone: +49 341 97 13320 Nephrology This conference will be followed by the closing meeting of the Integrated Research and Treatment Center (IFB) AdiposityDiseases (September 3–4, 2019). Legend: PL Plenary lecture CRC-T CRC talk KL Keynote lecture IFB-T IFB talk PROGRAM Monday, 2nd September Tuesday, 3rd September Wednesday, 4th September 9:00 Session III IFB Symposium Adipose Tissue Heterogeneity Keynote Lecture 9:00-11:00 9:00-10:00 10:00 IFB Session II Arrival and Registration Psychosocial Aspects of 10:15-11:45 Obesity and Eating Disorder 10:00-11:00 11:00 Coffee Break Coffee Break 11:00-11:15 11:00-11:30 Session IV IFB Session III Lunch Time Snack Adipokines Highlights of the IFB in 12:00 11:45-12:45 11:15-13:00 Genetics and Neuroimaging Research Welcome 11:30-13:00 13:00 Session I Lunch -

Progress and Visions in Quantum Theory in View of Gravity Bridging Foundations of Physics and Mathematics October 01 – 05, 2018

The conference is supported by: Conference of the Max Planck Institute for Mathematics Johannes-Kepler-Forschungszentrum Sonderforschungsbereich in the Sciences Leibniz-Forschungsschule für Mathematik Designed Quantum States of Matter Max Planck Institute Progress and Visions in Quantum Theory in View of Gravity Bridging foundations of physics and mathematics October 01 – 05, 2018 SPEAKERS ABSTRACT Markus Aspelmeyer – UNIVERSITY OF VIENNA The conference focuses on a critical discussion of the status Časlav Brukner – IQOQI VIENNA and prospects of current approaches in quantum mechanics Tian Yu Cao – BOSTON UNIVERSITY and quantum fi eld theory, in particular concerning gravity. In Dirk Deckert – LUDWIG MAXIMILLIAN UNIVERSITY OF MUNICH contrast to typical conferences, instead of reporting on the Chris Fewster – UNIVERSITY OF YORK most recent technical results, participants are invited to discuss Jürg Fröhlich – ETH ZURICH Lucien Hardy – PERIMETER INSTITUTE • visions and new ideas in foundational physics, in particular Olaf Lechtenfeld – UNIVERSITY OF HANOVER concerning foundations of quantum fi eld theory Shahn Majid – QUEEN MARY UNIVERSITY OF LONDON • which physical principles of quantum (fi eld) theory can be Gregory Naber – DREXEL UNIVERSITY considered fundamental in view of gravity Hermann Nicolai – MPI FOR GRAVITATIONAL PHYSICS • new experimental perspectives in the interplay of gravity Rainer Verch – LEIPZIG UNIVERSITY and quantum theory. DISCUSSANTS In tradition of the meetings in Blaubeuren (2003 and 2005), Leipzig (2007) and Regensburg (2010 and 2014), the conference Eric Curiel – MCMP MUNICH / BHI HARVARD brings together physicists, mathematicians and philosophers Claudio Dappiaggi – UNIVERSITY OF PAVIA working in foundations of mathematical physics. Detlef Dürr – LUDWIG MAXIMILLIAN UNIVERSITY OF MUNICH Michael Dütsch – UNIVERSITY OF GÖTTINGEN The conference is dedicated to Eberhard Zeidler, who sadly Christian Fleischhack – PADERBORN UNIVERSITY passed away on 18 November 2016. -

AEN Secretariat General Update

AGM – 27 April 2017 Agenda Item 12 (i) Action required – for information AEN Secretariat General Update Secretariat Update The AEN Secretariat is situated within Western Sydney University, having transitioned from Deakin University in late 2015. The current Secretariat Officer is Rohan McCarthy-Gill, having taken over this role upon the departure of Caroline Reid from Western Sydney University in August 2016. There has been some impact upon the AEN Secretariat’s business operations due to staffing changes and shortages within Western Sydney University’s international office. However, as at March 2017, operations have largely normalised. The Secretariat is scheduled to move to Edith Cowan University in November 2017 and very preliminary discussions have started between Western Sydney University and Edith Cowan University around this transition. AEN-UN Agreement After the process of renewal, the AEN-UN Agreement renewal was finalised on 4 July 2016, upon final signature by the President of the Utrecht Network. AEN Website The AEN website continues to pose some challenges and due to technical problems and resourcing issues in the AEN Secretariat there is still some work that needs to be done to enhance it. This is scheduled for the second half of 2017. A superficial update of the website was conducted in late 2016, including an update of process instructions and tools for AEN members, contact details and FAQs. Macquarie University The AEN Secretariat was notified by Macquarie University on 31 October 2016 that, due to a realignment of the University’s mobility partnerships with its strategic priorities, that it would withdraw from the AEN consortium, with effect from 1 January 2017. -

Selling Ethnicity Program

Thursday, November 7 9:15 – 9:45 Registration / Breakfast Buffet Neues Seminargebäude (NSG), Rooms S 202 / S 203 (2. on map) 10:00 – 10:30 Welcome Addresses 10:30 – 11:45 Keynote Lecture Marilyn Halter (Boston University): Mainstreaming Multiethnic America: Commerce and Culture in the New Millennium 12:00 – 13:30 Lunch 13:30 – 15:00 PANEL I: Commodified Ethnic Identity in Music Markus Heide (Humboldt University Berlin): Narcocorridos: Ethnic Tradition, Local Knowledge, and Commercialization Robert K. Collins (San Francisco State University): Commoditized Culture as Ethnicity Maintenance: An Exhibited Case Study of Garifuna Survival in 21st Century Los Angeles 15:00 – 15:30 Coffee Break 15:30 – 17:00 PANEL II: Representation and Forms of Capital Gabriele Pisarz-Ramirez (Leipzig University): Multiraciality and ‘Racial Capital’: The Commodification of Mixed Racial Identity Frank Usbeck (Technical University Dresden): Selling the Warrior Image: (Self) Representations of Nativeness in Military and Law Enforcement 17:45 – 18:15 Walking tour through Leipzig 18:15 – 19:45 Dinner Restaurant Mio (3. on map) 20:00 Reading of the Picador Guest Professor for Literature Jennine Capó Crucet KAFIC , black box (4. on map) Friday, November 8 8:30 – 9:00 Breakfast Buffet Neues Seminargebäude (NSG), Rooms S 202 / S 203 (2. on map) 9:00 – 10:30 PANEL III: Aesthetics in Representations of Asians/Asian Americans Jeffrey Santa Ana (Stony Brook University): The Yellow Peril Aesthetic: Managing Global Capital through Fear in Visual Representations of Asians Maria -

Studying at the University of Leipzig

• Aptitude tests: Some institutions by checking your documents Additional Admission Requirements co-tutelles program. For additional information about bi-national General help and advice before application can be obtained from the decide whether or not you are eligible for the study course. Other doctorates, please consult the following website: http://www.zv.uni- following Institutions: institutions may have additional evaluations in the form of tests or • In addition to the above mentioned requirements, for courses which leipzig.de/en/research/phd/binational-doctoral-degrees.html International Centre | AAA interviews. are in high demand, there exist further admission restrictions. For For more information about the application and admission Advice, Application, International Centre at the www.uni-leipzig.de/aaa Admission University of Leipzig • Language skills: For a Master’s degree in the German language such courses only a limited number of places of study are available procedure please see: www.uni-leipzig.de/international/phd Goethestrasse 6 04109 Leipzig you need to have knowledge of the German language equaling and the allocation of these places depend on the academic grades For information about structured PhD programs please visit the Germany level C1 as described by the Common European Framework of of the applicant and is decided via a selection process at the website of the Research Academy Leipzig: Reference for Languages (eg: DSH-2 or TestDaF TDN 4). University of Leipzig. http://www.zv.uni-leipzig.de/en/research/ral Application University of Leipzig www.uni-assist.de For a Master’s degree offered in English you need to provide • Some study courses require certain foreign language skills or c/o uni-assist e. -

Functional Specialisation of Neuroglia As Critical Determinants of Brain Activity

Annual meeting Priority Programme Functional Specialisation of Neuroglia as Critical Determinants of Brain Activity Homburg | Oct 8 - 10, 2015 1 Scientific Program Thursday, October 8, 2015 13:00 – 14:00 Registration and Lunch (Foyer) 14:00 – 14:15 Christine Rose/Frank Kirchhoff Opening Remarks 14:15 – 15:00 Kenji Tanaka Dept. Neuropsychiatry, School of Medicine, Keio University, Tokyo Tetracycline-controllable gene expression system in glial cell research 15:00 – 15:30 Johannes Hirrlinger Carl-Ludwig-Institute for Physiology, University of Leipzig Max Planck Institute for Experimental Medicine, Göttingen Brain energy metabolism assessed by genetically encoded sensors for metabolites 15:30 – 15:45 Coffee break Venue: Center for Integrative Physiology and Molecular Medicine CIPMM 15:45 – 16:15 Leda Dimou Auditorium Physiological Genomics, Ludwig Maximilian University, University of Saarland | Medical School Munich Building 48 | 66421 Homburg The heterogenous nature of NG2-glia: new approaches to study them in the adult brain 16:15 – 18:00 Poster session I 18:00 – 21:00 Dinner and open discussions in CIPMM (2nd floor) 2 3 Friday, October 9, 2015 Friday, October 9, 2015 9:00 – 9:45 Dmitri Rusakov UCL Institute of Neurology, University College London 12:00 – 13:30 Lunch (2nd floor) Astroglial microenvironment of synapses and memory trace formation 13:30 – 14.45 Arthur Butt 9:45 – 10:10 Young Investigator presentation Cellular Neurophysiology, School of Pharmacy and Michel Herde Biomedical Sciences, University of Portsmouth Universität Bonn, -

Global Partners —

EXCHANGE PROGRAM Global DESTINATION GROUPS Group A: USA/ASIA/CANADA Group B: EUROPE/UK/LATIN AMERICA partners Group U: UTRECHT NETWORK CONTACT US — Office of Global Student Mobility STUDENTS MUST CHOOSE THREE PREFERENCES FROM ONE GROUP ONLY. Student Central (Builing 17) W: uow.info/study-overseas GROUP B AND GROUP U HAVE THE SAME APPLICATION DEADLINE. E: [email protected] P: +61 2 4221 5400 INSTITUTION GROUP INSTITUTION GROUP INSTITUTION GROUP AUSTRIA CZECH REPUBLIC HONG KONG University of Graz Masaryk University City University of Hong Kong Hong Kong Baptist University DENMARK BELGIUM The Education University of Aarhus University Hong Kong University of Antwerp University of Copenhagen The Hong Kong Polytechnic KU Leuven University of Southern University BRAZIL Denmark UOW College Hong Kong Federal University of Santa ESTONIA HUNGARY Catarina University of Tartu Eötvös Loránd University (ELTE) Pontifical Catholic University of Campinas FINLAND ICELAND Pontifical Catholic University of Rio de Janeiro University of Eastern Finland University of Iceland University of São Paulo FRANCE INDIA CANADA Audencia Business School Birla Institute of Management Technology (BIMTECH) Concordia University ESSCA School of Management IFIM Business School HEC Montreal Institut Polytechnique LaSalle Manipal Academy of Higher McMaster University Beauvais Education University of Alberta Lille Catholic University (IÉSEG School of Management) O.P. Jindal Global University University of British Columbia National Institute of Applied University of Calgary -

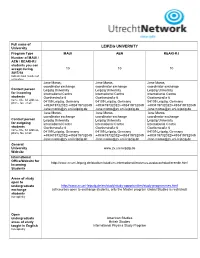

Leipzig University

Full name of LEIPZIG UNIVERSITY University Program Type MAUI AEN REARI-RJ Number of MAUI / AEN / REARI-RJ students you can accept during 10 10 10 2017/18 Indicate total number of semesters. Jane Moros, Jane Moros, Jane Moros, coordinator exchange coordinator exchange coordinator exchange Contact person Leipzig University Leipzig University Leipzig University for incoming International Centre International Centre International Centre students Goethestraße 6 Goethestraße 6 Goethestraße 6 name, title, full address, phone, fax, email 04109 Leipzig, Germany 04109 Leipzig, Germany 04109 Leipzig, Germany +493419732032/+493419732049 +493419732032/+493419732049 +493419732032/+493419732049 [email protected] [email protected] [email protected] Jane Moros, Jane Moros, Jane Moros, coordinator exchange coordinator exchange coordinator exchange Contact person Leipzig University Leipzig University Leipzig University for outgoing International Centre International Centre International Centre students Goethestraße 6 Goethestraße 6 Goethestraße 6 name, title, full address, phone, fax, email 04109 Leipzig, Germany 04109 Leipzig, Germany 04109 Leipzig, Germany +493419732032/+493419732049 +493419732032/+493419732049 +493419732032/+493419732049 [email protected] [email protected] [email protected] General University www.zv.uni-leipzig.de Website International Office/Website for http://www.zv.uni-leipzig.de/studium/studium-international/erasmus-austauschstudierende.html Incoming Students Areas