GRACE-2: Integrating Fine-Grained Application Adaptation with Global Adaptation for Saving Energy

Recommended publications

ACM MM 96 Text ACM MULTIMEDIA 96

ACM MULTIMEDIA 96: The Fourth ACM International Multimedia Conference and Exhibition. ADVANCE PROGRAM. 18-22 November 1996, Hynes Convention Center, Boston, Massachusetts, USA. Stephan Fischer. Last modified: Fri Nov 29 09:28:01 MET 1996. Co-located with SPIE's Symposium on Voice, Video and Data Communication, and Broadband Network Engineering program and overlapping with CSCW, to be held in nearby Cambridge. Welcome to ACM Multimedia '96. In what seems in retrospect to have been an astonishingly short time, multimedia has progressed from a technically-challenging curiosity to an essential feature of most computer systems, both professional and consumer. Accordingly, leading-edge research in multimedia no longer is confined to dealing with processing or information-access bottlenecks, but addresses the ever-broadening ways in which the technology is changing and improving interpersonal communication, professional practice, entertainment, the arts, education, and community life. This year's program emphasizes this trend: off-the-shelf building blocks are now available to construct useful and appealing applications which are highlighted in the Demonstration and Art venues. In addition to the full complement of panels, courses, and workshops, the conference program features a distinguished set of technical papers. Keynotes will be provided by Glenn Hall, the Technical Director of Aardman Animations whose work includes Wallace and Gromit; and Professor Bill Buxton of the University of Toronto and Alias | Wavefront Inc.

ACM Multimedia 2008 - Home

ACM Multimedia 2008 - Home. News Flash: * The competition for student conference participation grant is open. * The update on tutorials is available. * The instructions for camera ready paper submission have been posted. Latest updates: *** October 20, 2008: Full conference program is now available *** ACM International Conference on Multimedia, Pan Pacific Hotel, Vancouver, BC, Canada, October 27 – 31, 2008. http://www.mcrlab.uottawa.ca/acmmm2008/ ACM Multimedia 2008 invites your participation in the premier annual multimedia conference, covering all aspects of multimedia computing: from underlying technologies to applications, theory to practice, and servers to networks to devices. The technical program will consist of plenary sessions and talks with topics of interest in: (a) Multimedia content analysis, processing, and retrieval; (b) Multimedia networking and systems support; (c) Multimedia tools, end-systems, and applications; and (d) Human-centered multimedia.

Table of Contents

SELF-STUDY QUESTIONNAIRE FOR THE REVIEW OF Software Engineering. Prepared for: Engineering Accreditation Commission, Accreditation Board for Engineering and Technology, 111 Market Place, Suite 1050, Baltimore, Maryland 21202-4012. Phone: 410-347-7700 Fax: 410-625-2238 e-mail: [email protected] www: http://www.abet.org/ Prepared by: Department of Computer Science and Software Engineering, Rose-Hulman Institute of Technology, June 9, 2006. ROSE-HULMAN INSTITUTE OF TECHNOLOGY, DEPARTMENT OF COMPUTER SCIENCE AND SOFTWARE ENGINEERING, 5500 WABASH AVENUE, CM 97, TERRE HAUTE, INDIANA 47803-3999. TELEPHONE: (812) 877-8402 FAX: (812) 872-6060 EMAIL: [email protected] Table of Contents: A. Background Information: 1. Degree Titles; 2. Program Modes; 3. Actions to Correct Previous Shortcomings; 4. Contact Information. B. Accreditation Summary: 1. Students: a. Evaluation; b. Advising; c. Monitoring; d. Acceptance of Transfer Students; e. Validation of Transfer Credit. 2. Program Educational Objectives: a. Mission; b. Program Constituencies

Appendix C SURPLUS/LOSS: $291.34 SIGCSE 0.00 Sigada 100.00 SIGAPP 0.00 SIGPLAN 0.00

Starts Ends Conference Actual SIGs and their % SIGACCESS 21-Oct-13 23-Oct-13 ASSETS '13: The 15th International ACM SIGACCESS ATTENDANCE: 155 SIGACCESS 100.00 Conference on Computers and Accessibility INCOME: $74,697.30 EXPENSE: $64,981.11 SURPLUS/LOSS: $9,716.19 SIGACT 12-Jan-14 14-Jan-14 ITCS'14 : Innovations in Theoretical Computer Science ATTENDANCE: 76 SIGACT 100.00 INCOME: $19,210.00 EXPENSE: $21,744.07 SURPLUS/LOSS: ($2,534.07) 22-Jul-13 24-Jul-13 PODC '13: ACM Symposium on Principles ATTENDANCE: 98 SIGOPS 50.00 of Distributed Computing INCOME: $62,310.50 SIGACT 50.00 EXPENSE: $56,139.24 SURPLUS/LOSS: $6,171.26 23-Jul-13 25-Jul-13 SPAA '13: 25th ACM Symposium on Parallelism in ATTENDANCE: 45 SIGACT 50.00 Algorithms and Architectures INCOME: $45,665.50 SIGARCH 50.00 EXPENSE: $39,586.18 SURPLUS/LOSS: $6,079.32 23-Jun-14 25-Jun-14 SPAA '14: 26th ACM Symposium on Parallelism in ATTENDANCE: 73 SIGARCH 50.00 Algorithms and Architectures INCOME: $36,107.35 SIGACT 50.00 EXPENSE: $22,536.04 SURPLUS/LOSS: $13,571.31 31-May-14 3-Jun-14 STOC '14: Symposium on Theory of Computing ATTENDANCE: SIGACT 100.00 INCOME: $0.00 EXPENSE: $0.00 SURPLUS/LOSS: $0.00 SIGAda 10-Nov-13 14-Nov-13 HILT 2013:High Integrity Language Technology ATTENDANCE: 60 SIGBED 0.00 ACM SIGAda Annual INCOME: $32,696.00 SIGCAS 0.00 EXPENSE: $32,404.66 SIGSOFT 0.00 Appendix C SURPLUS/LOSS: $291.34 SIGCSE 0.00 SIGAda 100.00 SIGAPP 0.00 SIGPLAN 0.00 SIGAI 11-Nov-13 15-Nov-13 ASE '13: ACM/IEEE International Conference on ATTENDANCE: 195 SIGAI 25.00 Automated Software Engineering -

ACM Multimedia 2010 - Worldwide Premier Multimedia Conference - 25-29 October 2010 - Florence (I)

ACM Multimedia 2010 - worldwide premier multimedia conference - 25-29 October 2010 - Florence (I). Welcome to the ACM Multimedia 2010 website. ACM Multimedia 2010 is the worldwide premier multimedia conference and a key event to display scientific achievements and innovative industrial products. The Conference offers to scientists and practitioners in the area of Multimedia plenary scientific and technical sessions, tutorials, panels and discussion meetings on relevant and challenging questions for the next years horizon of multimedia. The Interactive Art program ACM Multimedia 2010 will provide the opportunity of interaction between artists and computer scientists and investigation on the application of multimedia technologies to art and cultural heritage. Please look inside the ACM MULTIMEDIA Website for more information. Conference videos online! By WEBCHAIRS | Published: FEBRUARY 4, 2011. A. Del Bimbo – Opening; Shih-Fu Chang – Opening; A. Smeulders – Opening; D. Watts – Keynotes; M. Shah – Keynotes; R. Jain – Tech. achiev. award; E. Kokiopoulou – SIGMM award; S. Bhattacharya – Best papers; S. Tan – Best papers; R. Hong – Best papers; S. Milani – Best papers; Exhibit. VideoLectures.net has finally released the videos of the important events recorded during the conference! ACM Multimedia 2010 Best Paper and Demos Awards at Gala Dinner. By WEBCHAIRS | Published: OCTOBER 29, 2010. ACM Multimedia 2010 Gala Dinner was held yesterday in Salone dei 500 at Palazzo Vecchio.

Ranking of the ACM International Conference on Multimedia Retrieval - ICMR

Int. Jnl. Multimedia Information Retrieval, IJMIR, vol. 2, no. 4, 2013 The final publication is available at Springer via http://dx.doi.org/10.1007/s13735-013-0047-3 International Ranking of the ACM International Conference on Multimedia Retrieval - ICMR In 2010, there were two well respected multimedia retrieval conferences supported by ACM: CIVR and MIR. At that time, a committee consisting of both the CIVR and MIR steering committees was formed to decide on the future. From those discussions, a merged conference was formed: the ACM International Conference on Multimedia Retrieval (acmICMR.org) which would be governed by the ICMR steering committee. Our goal for ICMR was not to create the largest conference, but the highest quality multimedia retrieval meeting worldwide. The Chinese Computing Federation (CCF) Ranking List provides a ranking of all international peer-reviewed conferences and journals in the broad area of computer science. This list is typically consulted by academic institutions in China as a quality metric for PhD promotions and tenure track jobs. Because ICMR was a young conference, it was not on the list in 2012. So, in late 2012, a proposal was assembled by Prabha Balakrishnan, Ramesh Jain, Klara Nahrstedt and myself towards eventual placement of ICMR on the CCF Ranking. The CCF committee examined both the quality of the content and the quality of the meeting and they gave us a ranking: The ranking released in 2013 for "Multimedia and Graphics" is first split into sections A, B, and C, and the conferences are ranked numerically within each section. Section A (only has 3 conferences in this section) 1. -

Qualis Conferences - Computer Science - 2016

QUALIS CONFERENCES - COMPUTER SCIENCE - 2016
Acronym; Conference name; Qualis
AAAI; AAAI - Conference on Artificial Intelligence; A1
AAMAS; AAMAS - International Conference on Autonomous Agents and Multiagent Systems; A1
ACL; ACL - Annual Meeting of the Association for Computational Linguistics; A1
ACMSOCC; SoCC - ACM Symposium on Cloud Computing; A1
ALLERTON; ALLERTON - Allerton Conference on Communication, Control, and Computing; A1
ASE; ASE - IEEE/ACM International Conference on Automated Software Engineering; A1
ASIACRYPT; ASIACRYPT - Annual International Conference on the Theory and Applications of Cryptology and Information Security; A1
ASPLOS; ASPLOS - International Conference on Architectural Support for Programming Languages and Operating Systems; A1
BMVC; BMVC - British Machine Vision Conference; A1
CCGRID; CCGrid - IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing; A1
CCS; CCS/ASIACCS - ACM Symposium on Information, Computer and Communications Security; A1
CEC; CEC - IEEE Congress on Evolutionary Computation; A1
CHES; CHES - Conference on Cryptographic Hardware and Embedded Systems; A1
CHI; CHI - ACM Conference on Human Factors in Computing Systems; A1
CIKM; CIKM - ACM International Conference on Information and Knowledge Management; A1
CloudCom; CloudCom - IEEE International Conference on Cloud Computing Technology and Science; A1
CONEXT; CONEXT - Conference on Emerging Network Experiment and Technology; A1
CSCW; CSCW - ACM Conference on Computer Supported Cooperative Work & Social Computing; A1
CVPR; CVPR - IEEE Conference on Computer

Dinesh Manocha Distinguished Professor Computer Science University of North Carolina at Chapel Hill

Dinesh Manocha Distinguished Professor Computer Science University of North Carolina at Chapel Hill Awards and honors and year received (list--no more than *five* items): • 2011: Distinguished Alumni Award, Indian Institute of Technology, Delhi • 2011: Fellow of Institute of Electrical and Electronics Engineers (IEEE) • 2011: Fellow of American Association for the Advancement of Science (AAAS) • 2009: Fellow of Association for Computing Machinery (ACM) • 1997: Office of Naval Research Young Investigator Award Have you previously been involved in any CRA activities? If so, describe. Manocha has been actively involved with organization and participation in CCC workshops that were related to robotics, spatial computing and human-computer interaction. List any other relevant experience and year(s) it occurred (list--no more than *five* items). • SIGGRAPH Executive Committee, Director-At-Large, 2011 – 2014 • General Chair/Co-Chair of 8 conferences and workshops; (1995- onwards) • Member of Editorial board and Guest editor of 20 journals in computer graphics, robotics, geometric computing, and parallel computing. (1995 onwards) • 34 Keynote and Distinguished Lectures between 2011-2015 • Program Chair/Co-Chair of 5 conferences and workshops; 100+ program committees in computer graphics, virtual reality, robotics, gaming, acoustics, and geometric computing Research interests: (list only) • Computer graphics • Virtual reality • Robotics • Geometric computing • Parallel computing Personal Statement Given the dynamic nature of our field, CRA is playing an ever-increasing role in terms of research and education. Over the last 25 years, my research has spanned a variety of different fields, including geometric/symbolic computing, computer graphics, robotics, virtual-reality and parallel computing. 
I have worked closely with researchers/developers in engineering, medical, and social sciences and also interacted with leading industrial organizations, federal laboratories, and startups. -

What's Happening

FORUM What’s Happening

http://www.salford.ac.uk/iti/rsa/ecai-ive.html Computer Science Department, Clemson University, Clemson, SC 29634-1906. Tel: 864-656-2258 Fax: 864-656-0145 Email: [email protected]

Meetings

ECAI-98: European Conference on Artificial Intelligence. August 23–28, 1998, Brighton, UK. The 13th biennial European Conference on Artificial Intelligence (ECAI-98) will take place at the Brighton Centre, Brighton, UK on August 23–28, 1998. The aim of the conference is to serve as a forum for international scientific exchange and presentation of AI research and to bring together basic and applied research. The Conference Technical Program will include paper presentations, invited talks, panels, workshops, and tutorials. ECAI-98 will also host an exhibition of AI technology located in the heart of the conference venue itself.

IPDS ’98: IEEE International Computer Performance and Dependability Symposium. September 7–9, 1998, Durham, North Carolina, USA. With the advent of parallel-processing computers and high-speed networks, questions of the performance and dependability of computer systems and networks have become closely related. Both design and evaluation methods must consider the relationships between the occurrence of errors/failures and their consequent impact on performance. Relating analytical techniques to simulations, actual measurements,

PROLAMAT ’98: The Globalization of Manufacturing in the Digital Communications Era of the 21st Century: Innovation, Agility, and the Virtual Enterprise. September 9–11, 1998, Trento, Italy. The PROLAMAT conference is an international event for demonstrating and evaluating activities and progress in the field of discrete manufacturing. Sponsored by the International Federation for Infor-

Chandrajit L. Bajaj

CHANDRAJIT L. BAJAJ Professor of Computer Sciences, Computational Applied Mathematics Chair in Visualization, Director, Center for Computational Visualization, Institute for Computational Engineering and Sciences The University of Texas at Austin ACES 2.324, 201 East 24th Street, Austin, TX 78712 Phone: (512) 471-8870 Fax: (512) 471-0982 Email: [email protected], [email protected] Web: http://www.cs.utexas.edu/users/bajaj, http://www.ices.utexas.edu/CCV =================================================================== PERSONAL Born April 19, 1958 Married, 2 sons, 1 daughter United States Citizen EDUCATION 1980 B.TECH. (Electrical Engineering), Indian Institute of Technology, Delhi 1983 M.S. (Computer Science), Cornell University 1984 Ph.D. (Computer Science), Cornell University PROFESSIONAL EXPERIENCE • Assistant Professor of Computer Sciences, Purdue University, 1984-89 • Associate Professor of Computer Sciences, Purdue University, 1989-93 • Visiting Associate Professor of Computer Sciences, Cornell University, 1990-91 • Professor of Computer Sciences, Purdue University, 1993-97 • Director of Image Analysis and Visualization Center, Purdue University, 1996-97 • CAM (Comp. Appd. Math) Chair of Visualization, University of Texas at Austin, 1997- Present • Professor of Computer Sciences, University of Texas at Austin, 1997- Present • Director of Computational Visualization Center, University of Texas at Austin, 1997- Present HONORS, AWARDS & MEMBERSHIP IN PROFESSIONAL SOCIETIES • National Science Talent Scholarship, 1975. Dean's -

Present the 2006 RESCUE SEMINAR SERIES

Calit2 UC Irvine division and donald bren school of information & computer sciences present the 2006 RESCUE SEMINAR SERIES HYDRA - High Performance Data Recording Architecture for Streaming Media DATE: Friday, August 11, 2006 TIME: 2:00 p.m. LOCATION: Calit2 Room 2006 RELATED LINK: http://dmrl.usc.edu/roger.html For additional information on this series, contact Quent Cassen, (949) 824-1741; [email protected] Faculty Sponsor: Sharad Mehrotra SPEAKER Roger Zimmermann, Research Asst. Prof. Computer Science Dept., USC HYDRA - High Performance Data Recording Architecture for Streaming Media Roger Zimmermann University of Southern California [email protected] In recent years, a considerable amount of research has focused on the efficient retrieval of streaming media. Scant attention has been paid to servers that can record and synchronously manipulate such streams in real-time. This talk presents our framework to enable large-scale, multi-modal, real-time media recording support in a broad class of applications. We introduce the High-performance Data Recording Architecture (HYDRA) which aims to provide a unified paradigm that integrates multi-channel media streaming, recording, retrieval and control in a coherent manner. We elaborate on the technology we have developed for live streaming and our experimental experiences. Some of the properties that make the manipulation of continuous media challenging and different from traditional alphanumeric, text, image, and alphanumeric streaming data, are the combination of real-time storage and retrieval, high bandwidth and large size, and inter-stream synchronization requirements. This talk presents some of the technologies that we have developed for HYDRA and our experimental experiences with several applications. -

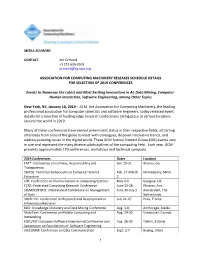

Association for Computing Machinery Releases Schedule Details for Selection of 2019 Conferences

MEDIA ADVISORY. CONTACT: Jim Ormond +1 212-626-0505 [email protected] ASSOCIATION FOR COMPUTING MACHINERY RELEASES SCHEDULE DETAILS FOR SELECTION OF 2019 CONFERENCES. Events to Showcase the Latest and Most Exciting Innovations in AI, Data Mining, Computer-Human Interaction, Software Engineering, among Other Topics. New York, NY, January 10, 2019 – ACM, the Association for Computing Machinery, the leading professional association for computer scientists and software engineers, today released event details for a selection of leading-edge research conferences taking place at various locations around the world in 2019. Many of these conferences have earned preeminent status in their respective fields, attracting attendees from around the globe to meet with colleagues, discover innovative trends, and address pressing issues in the digital world. These ACM Special Interest Group (SIG) events vary in size and represent the many diverse subdisciplines of the computing field. Each year, ACM presents approximately 170 conferences, workshops and technical symposia.
2019 Conferences; Dates; Location
FAT*: Conference on Fairness, Accountability and Transparency; Jan. 29-31; Atlanta, Ga.
SIGCSE: Technical Symposium on Computer Science Education; Feb. 27-March 2; Minneapolis, Minn.
CHI: Conference on Human Factors in Computing Systems; May 4-9; Glasgow, UK
FCRC: Federated Computing Research Conference; June 22-28; Phoenix, Ariz.
SIGMOD/PODS: International Conference on Management of Data; June 30-July 5; Amsterdam, The Netherlands
SIGIR: Intl. Conference on Research and Development in Information Retrieval; July 21-25; Paris, France
KDD: Knowledge Discovery and Data Mining Conference; Aug. 3-8; Anchorage, Alaska
MobiCom: Conference on Mobile Computing and Networking; Aug. 24-30; Vancouver, Canada
ESEC/FSE: European Software Engineering Conference and Aug.