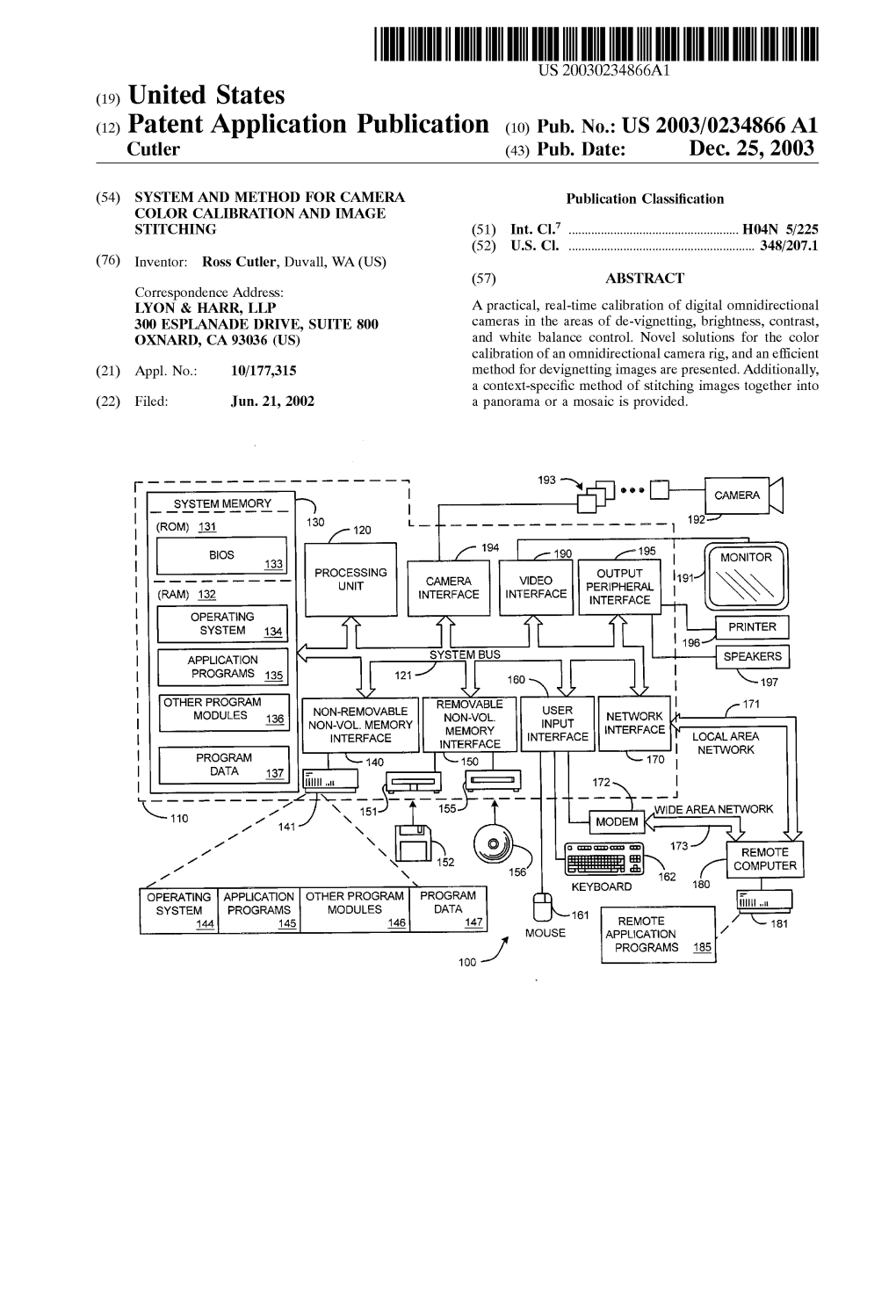

I SYSTEM 1 3 4 ? Jt ? T PRINTER

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Upgrading and Repairing PCs, 21st Edition

Upgrading and Repairing PCs, 21st Edition. Scott Mueller. 800 East 96th Street, Indianapolis, Indiana 46240.

Contents at a Glance

Introduction
1. Development of the PC
2. PC Components, Features, and System Design
3. Processor Types and Specifications
4. Motherboards and Buses
5. BIOS
6. Memory
7. The ATA/IDE Interface
8. Magnetic Storage Principles
9. Hard Disk Storage
10. Flash and Removable Storage
11. Optical Storage
12. Video Hardware
13. Audio Hardware
14. External I/O Interfaces
15. Input Devices
16. Internet Connectivity
17. Local Area Networking
18. Power Supplies
19. Building or Upgrading Systems
20. PC Diagnostics, Testing, and Maintenance

Index

Copyright © 2013 by Pearson Education, Inc. All rights reserved. No part of this book shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without written permission from the publisher. No patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Nor is any liability assumed for damages resulting from the use of the information contained herein.

ISBN-13: 978-0-7897-5000-6
ISBN-10: 0-7897-5000-7

Library of Congress Cataloging-in-Publication Data is on file.

Editor-in-Chief: Greg Wiegand. Acquisitions Editor: Rick Kughen. Development Editor: Todd Brakke. Managing Editor: Sandra Schroeder. Project Editor: Mandie Frank. Copy Editor: Sheri Cain.

Chapter 4: Motherboards and Buses

Chapter 4: Motherboards and Buses

Motherboard Form Factors

Without a doubt, the most important component in a PC system is the main board or motherboard. Some companies refer to the motherboard as a system board or planar. The terms motherboard, main board, system board, and planar are interchangeable, although I prefer the motherboard designation. This chapter examines the various types of motherboards available and those components typically contained on the motherboard and motherboard interface connectors.

Several common form factors are used for PC motherboards. The form factor refers to the physical dimensions (size and shape) as well as certain connector, screw hole, and other positions that dictate into which type of case the board will fit. Some are true standards (meaning that all boards with that form factor are interchangeable), whereas others are not standardized enough to allow for interchangeability. Unfortunately, these nonstandard form factors preclude any easy upgrade or inexpensive replacement, which generally means they should be avoided. The more commonly known PC motherboard form factors include the following:

Obsolete form factors:
- Baby-AT
- Full-size AT
- LPX (semiproprietary)
- WTX (no longer in production)
- ITX (flex-ATX variation, never produced)

Modern form factors:
- ATX
- micro-ATX
- Flex-ATX
- Mini-ITX (flex-ATX variation)
- NLX

All others:
- Fully proprietary designs (certain Compaq, Packard Bell, Hewlett-Packard, notebook/portable systems, and so on)

Motherboards have evolved over the years from the original Baby-AT form factor boards used in the original IBM PC and XT to the current ATX and NLX boards used in most full-size desktop and tower systems.

GMB-486UNP 80486 VESA Mainboard User's Guide

GMB-486UNP 80486 VESA Mainboard User's Guide, Version 3.01

ABOUT THIS GUIDE

This guide contains instructions for configuring and installing the mainboard.

• Chapter 1, Introduction, acquaints the user with the special features of the mainboard.
• Chapter 2, Hardware Configuration, gives information on configuring memory and setting the mainboard's jumpers, with brief sections on installing memory.
• Chapter 3, Mainboard Installation, is an overview of how to install the mainboard in a system.
• Chapter 4, BIOS Setup, provides the BIOS information for system configuration.
• Chapter 5, Hard Disk Types, provides a default fixed disk table.
• Chapter 6, Error Codes, provides references for all POST error codes.
• Chapter 7, Connector Pin Assignment, provides the VESA Local Bus pin assignment on VESA connectors.

TRADEMARKS USED IN THIS MANUAL

MS-DOS, XENIX, Microsoft, and WINDOWS are trademarks of Microsoft Corp. NOVELL and Netware are trademarks of Novell, Inc. Wordstar is a trademark of MicroPro International. Lotus 1-2-3 is a trademark of Lotus Development Corp. AT is a trademark of International Business Machines Corp. OS/2 is a trademark of Microsoft Corp. and International Business Machines Corp. UNIX is the trademark of AT&T. Weitek is a trademark of Weitek Corp.

The information presented in this publication has been carefully checked for reliability; however, no responsibility is assumed for inaccuracies, and specifications are subject to change without notice. All rights reserved. No part of this manual may be reproduced in any form without written permission.

UNPACKING THE MAINBOARD

The mainboard comes packed in a sturdy cardboard shipping carton. The carton contains:

• The mainboard
• This User's Guide

Note: Do not remove the mainboard from its original packing until ready to install.

PC Upgrade 2011: Patched

CHIP magazine, issue 2011/1 (CHIPONLINE.HU), volume XXIII, number 1, January 2011. Price: 1995 Ft, 1395 Ft with a subscription. Published by Motor-Presse Budapest Lapkiadó Kft.

Cover headlines (translated from Hungarian):

- Upgrade Windows for free: tune up your PC with original, super-secure Microsoft tools, all on the DVD.
- Fresh 9 GB DVD: the latest drivers, useful programs, games of the month, exclusive packages…
- How to make your PC perfectly silent: smart component swaps at the lowest possible cost.
- Go digital!
- Anonymous on the internet with one click: protected mode in your browser.
- The big security package: the best antivirus tools, maximum protection for all your important data.
- 760 security holes patched. Holes, errors, bugs: how to protect your PC from sloppily programmed software.
- Updates everything: new security software with a brilliant automatic update feature.
- The best HDD tools: Drive Washer+, Disk Rescue.
- All internet trackers deleted!
- What is worth upgrading? PC Upgrade 2011: save a lot with only the most effective upgrades.
- Exclusive! Now on the DVD: all the earlier ones, 40 full versions.
- The 25 best paid programs: a big Christmas gift package, all free, all fully functional.
- Plus: 6 more exclusive CHIP packages.
- The end of optical storage: overrun by CDs and DVDs? Don't trust them? We show you what to replace them with reliably.

Spine text: 25 full versions as a gift; 760 security holes patched; upgrade Windows for free; the end of optical data storage; PC Upgrade 2011; the perfectly silent PC; the 10 best password tips; PC access from anywhere.

Bus Interface Bus Interfaces Different Types of Buses: ISA (Industry

Systems Design & Programming, CMPE 310: Bus Interface

Bus Interfaces

Different types of buses:

- ISA (Industry Standard Architecture)
- EISA (Extended ISA)
- VESA (Video Electronics Standards Association, VL Bus)
- PCI (Peripheral Component Interconnect)
- USB (Universal Serial Bus)
- AGP (Accelerated Graphics Port)

ISA is the oldest of these, and today's computers still have an ISA bus interface in the form of an ISA slot (connection) on the main board. ISA has 8-bit and 16-bit standards along with the 32-bit version (EISA). All three versions operate at 8 MHz.

8-Bit ISA Bus Connector

Numbered pins as listed on the slide: 1 GND, 2 RESET, 3 +5V, 4 IRQ9, 5 -5V, 6 DRQ2, 7 -12V, 8 0WS, 9 +12V, 10 GND, 11 MEMW, 12 MEMR, 13 IOW, 14 IOR, 15 DACK3, 16 DRQ3, 17 DACK1, 18 DRQ1, 19 DACK0, 20 CLOCK, 21 IRQ7, 22 IRQ6, 23 IRQ5, 24 IRQ4, 25 IRQ3, 26 DACK2, 27 T/C, 28 ALE, 29 +5V, 30 OSC, 31 GND.

The remaining signals labeled on the connector are IO CHK, D0-D7, IO RDY, AEN, and A0-A19.

The ISA bus connector contains:

- An 8-bit data bus
- A demultiplexed 20-bit address bus
- I/O and memory control signals
- Interrupt request lines (IRQ2 -> IRQ9)
- DMA channels 1-3 control signals
- Power, RESET, and miscellaneous signals

8-Bit ISA Bus Output Interface

[Figure: 8-bit ISA bus output interface. Labeled parts include the D0-D7 data lines, a 74LS244, 74LS374 latches (D0-D7 in, Q0-Q7 out, OC, CLK), a decoder with inputs A, B, C, G1, G2A, G2B and outputs Y0-Y7, the address lines A0, A1, A3, A4, the IOW signal, and a DB37 connector.]

((Notes)) ---Course Objective

Notes

Course objective: This course introduces the basic requirements for using digital microcomputers for monitoring and control.

Course description: The course covers the basic theoretical, hardware, and software issues necessary to achieve the course objectives.

Interface definition: Interfacing is the coupling between a system under consideration and another system, or between devices of a system, through which information passes. Alternatively, interfacing is the point of interaction or communication between a computer and any other entity, such as a printer or a human operator.

Requirements for microcomputer-based monitoring and control systems: These requirements fall into three major parts: theoretical, hardware, and software. The three interact with one another. The subjects involved are many and depend on the nature of the application; only a few are mentioned here.

Communicating with the computer: When the computer is used for monitoring and control of a given system, information passes in both directions. This requires IN and OUT ports in the computer, which is actually the case. There are many ways of communicating with the computer, and they fall into two main categories: buses and ports. All of them use connectors or slots for the actual physical connections. Some are universal and some are dedicated. Data are sent or received either in parallel or serially.

Computer architecture: Generally, the microcomputer consists of the microprocessor, memory, and input/output units. It is important to note that there are many different computer architectures. The figure shows a simple, partial block diagram for clarification.

Collected Materials: Fundamentals of Computer Architecture

Collected Materials: Fundamentals of Computer Architecture. Prof. Dr. Hans-Jürgen Buhl, 2012. Department of Mathematics and Natural Sciences (C), Mathematics and Computer Science, Bergische Universität Wuppertal. Internal report of the Integrated Working Group on Mathematical Problems from Engineering, IAGMPI-1201, April 2012. 13th edition, 2012. Practical Computer Science 01.

Table of Contents

1 Computer Architecture
1.1 The von Neumann Computer
1.2 CISC Computers
1.2.1 The Computer Inside the Computer
1.2.2 Redesign of CISC Concepts
1.2.3 An Intermezzo: the RISC Workstation / the RISC Server
1.2.4 Instruction Pipelines
1.2.5 Caches
1.2.6 Superscalarity
1.2.7 Hyperthreading
1.2.8 Another Intermezzo: EPIC / IA-64 / Itanium
1.2.9 64-Bit
1.2.10 Multi-Core Processors
1.2.11 Packet-Based Bus Systems
1.2.12 Intel Core i, Intel Core i7, ...
1.2.13 GPGPUs in the CPU
1.2.14 Supercomputing in Your Own Tower
1.2.15 Instructions of Current CPUs
1.3 High-End Servers and Mainframes
1.4 Multiuser Operating Systems
1.5 Multitasking and Timesharing
1.6 Multiuser Multitasking Operating Systems
1.7 Multithreading
1.8 Pentium M
1.9 Pentium/Core Multi-Core Processors
1.10 AMD Dual-Core and Multi-Core Processors
1.11 UltraSPARC T1, T2, and Rock
1.12 Power-Saving Mobile Processors: Intel Atom
1.13 Cell Processor for Dedicated Processing

A The Path Away from the von Neumann Architecture
A.1 SPARC
A.2 The Intel Pentium
A.3 RISC (= reduced instruction set computer) Design Principle

PCI (Peripheral Component Interconnect) VESA Local Bus

PCI (Peripheral Component Interconnect)

PCI is an interconnection system between a microprocessor and attached devices in which expansion slots are spaced closely for high-speed operation. Using PCI, a computer can support both new PCI cards while continuing to support ISA expansion cards, currently the most common kind of expansion card. Designed by Intel, the original PCI was similar to the VESA Local Bus. However, PCI 2.0 is no longer a local bus and is designed to be independent of microprocessor design. PCI is designed to be synchronized with the clock speed of the microprocessor, in the range of 20 to 33 MHz.

PCI is now installed on most new desktop computers, not only those based on Intel's Pentium processor but also those based on the PowerPC. PCI transmits 32 bits at a time in a 124-pin connection (the extra pins are for power supply and grounding) and 64 bits in a 188-pin connection in an expanded implementation. PCI uses all active paths to transmit both address and data signals, sending the address on one clock cycle and data on the next. Burst data can be sent starting with an address on the first cycle and a sequence of data transmissions on a certain number of successive cycles.

VESA Local Bus (Video Electronics Standards Association Local Bus)

VESA Local Bus (sometimes called the VESA VL bus) is a standard interface between your computer and its expansion slots that provides faster data flow between the devices controlled by the expansion cards and your computer's microprocessor. A "local bus" is a physical path on which data flows at almost the speed of the microprocessor, increasing total system performance.
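The PCI figures quoted above (32 or 64 bits per transfer, a clock of up to 33 MHz, an address cycle followed by one or more data cycles) can be turned into a rough throughput estimate. The sketch below is only an illustration under simplifying assumptions: the function name `pci_throughput_mb_s` is hypothetical, 1 MB is taken as 10^6 bytes, and wait states, arbitration, and turnaround cycles are ignored.

```python
def pci_throughput_mb_s(bus_bits: int, clock_mhz: float, burst_len: int) -> float:
    """Estimate PCI throughput in MB/s (1 MB = 10**6 bytes).

    Simplified model taken from the description above: one address
    cycle, then `burst_len` data cycles, each moving `bus_bits` bits.
    Wait states, arbitration, and turnaround cycles are ignored
    (an assumption of this sketch).
    """
    if bus_bits <= 0 or bus_bits % 8 != 0:
        raise ValueError("bus_bits must be a positive multiple of 8")
    if burst_len < 1:
        raise ValueError("burst_len must be at least 1")
    bytes_per_data_cycle = bus_bits // 8
    total_cycles = 1 + burst_len  # one address cycle plus the data cycles
    return bytes_per_data_cycle * burst_len * clock_mhz / total_cycles


if __name__ == "__main__":
    # Single transfer: address on one cycle, data on the next.
    print(pci_throughput_mb_s(32, 33, 1))   # 66.0
    # Longer bursts spread the address cycle over more data cycles.
    for burst in (4, 8, 64):
        print(burst, round(pci_throughput_mb_s(32, 33, burst), 1))
```

In this model a single 32-bit transfer at 33 MHz reaches half of the 132 MB/s ceiling (4 bytes per cycle at 33 MHz), while long bursts approach that ceiling, which is why burst transfers matter for devices that move large blocks of data.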

BlueCat Linux Board Support Guide

BlueCat Linux Board Support Guide for x86 Boards

BlueCat Linux Release 5.0, DOC-0579-01

Product names mentioned in BlueCat Linux Board Support Guide for x86 Boards are trademarks of their respective manufacturers and are used here for identification purposes only.

Copyright ©1987 - 2004, LynuxWorks, Inc. All rights reserved. U.S. Patents 5,469,571; 5,594,903. Printed in the United States of America.

All rights reserved. No part of BlueCat Linux Board Support Guide for x86 Boards may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photographic, magnetic, or otherwise, without the prior written permission of LynuxWorks, Inc.

LynuxWorks, Inc. makes no representations, express or implied, with respect to this documentation or the software it describes, including (with no limitation) any implied warranties of utility or fitness for any particular purpose; all such warranties are expressly disclaimed. Neither LynuxWorks, Inc., nor its distributors, nor its dealers shall be liable for any indirect, incidental, or consequential damages under any circumstances.

(The exclusion of implied warranties may not apply in all cases under some statutes, and thus the above exclusion may not apply. This warranty provides the purchaser with specific legal rights. There may be other purchaser rights which vary from state to state within the United States of America.)

Contents

PREFACE -

PCI Bus Demystified

PCI Bus Demystified, by Doug Abbott

A volume in the Demystifying Technology™ series. Eagle Rock, Virginia. www.LLH-Publishing.com

Copyright © 2000 by LLH Technology Publishing. All rights reserved. No part of this book may be reproduced, in any form or means whatsoever, without written permission of the publisher. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Neither is any liability assumed for damages resulting from the use of information contained herein. Printed in the United States of America.

ISBN 1-878707-78-7 (LLH eBook)

LLH Technology Publishing and HighText Publications are trademarks of Lewis Lewis & Helms LLC, 3578 Old Rail Road, Eagle Rock, VA, 24085

To Susan: My best friend, my soul mate. Thanks for sharing life’s journey with me.

To Brian: Budding DJ, future pilot and all around neat kid. Thanks for keeping me young at heart.

Contents

Introduction
- Intended Audience
- The Rest of This Book

Chapter 1: Introducing the Peripheral Component Interconnect (PCI) Bus
- So What is a Computer Bus?
- Bus Taxonomy
- What’s Wrong with -

VESA Local Bus 3486

VESA LOCAL BUS 3486 VL-BUS 3486 786 MINI-BOARD USER’S MANUAL

Introduction

Chapter 1 Main Features of System Board
- Intel 486SX, 486DX/2, 486DX, 386DX Microprocessor
- 64 MB On-Board Main Memory
- Secondary Cache Memory System
- USA Integration Chip Set
- 8 Fully Accessible Bus Slots
  - Component Layout

Chapter 2 Hardware Configuration
- Static Discharge Precautions
- Jumper Settings
  - Cache Memory Size Jumper
  - 486 DX/SX Selection Jumper
  - Numeric Processor Jumper
  - CPU Type Jumper
  - 486-50 MHz CPU Jumper
  - CPU Clock Frequency Jumper
  - VESA Local Bus Jumper
  - Battery Setting Jumper
- Processor Installation
  - Installing CPU
- Memory Installation
  - Available SIMM Configurations
  - Installing SIMMs
  - Automatic Memory Sizing
  - Available Cache SRAM Configuration
  - Installing Cache -

CS-350: Topics for Term Papers

CS-350: Topics for Term Papers

© 2008 Charles Abzug

NOTE: Two levels of topics are presented here: numbered major topics and lettered sub-topics. You may select a numbered topic and build your paper around a comparison of several of its sub-topics, or you may choose a lettered sub-topic and discuss only that item. Only one student group will be permitted to work within each major topic (i.e., either on the major topic or on one of its sub-topics).

1. System buses
   a. The Original PC Bus, the PC/AT Bus, and the ISA Bus
   b. Marketplace failures: the MCA and EISA Buses
   c. VESA Local Bus (VLB)
   d. PCI Bus
   e. Accelerated Graphics Port (AGP)
   f. IEEE 1394 FireWire, and/or the Universal Serial Bus (USB)
   g. Digital Equipment Corporation (DEC) Unibus
   h. Other buses
2. Device Controllers
   a. Serial Port (COM1, COM2) and Parallel Port (LPT1, LPT2)
   b. Universal Serial Bus (USB)
   c. Integrated Device Electronics (IDE) and Enhanced IDE (EIDE)
   d. Small Computer System Interface (SCSI) in all its multiple manifestations
3. Main-product-line Intel CPUs
   a. 4004 & 8008
   b. 8086/8088, 80186, 80286
   c. 80386SX/DX & 80486SX/DX
   d. Pentium, Pentium with MMX, Pentium Pro, Pentium II, Xeon, & Celeron (“Socket 370”)
   e. Pentium III & Pentium 4
   f. IA-64: Intel Core 2 Duo, Core 2 Quad, Core 2 Extreme, etc.
4. Competitor Chips to Intel: AMD
   a. K5, K6, K7 (Athlon “Slot A”, Duron, Sempron)
   b. Athlon 64 (Opteron, Phenom, Turion)
5. Competitor Chips to Intel:
   a. Cyrix
   b. Rise Technology’s mP6
   c.