NVIDIA Ampere GA102 GPU Architecture: The Ultimate Play

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

NVIDIA GEFORCE GT 630 Step up to Better Video, Photo, Game, and Web Performance

NVIDIA GEFORCE GT 630
Step up to better video, photo, game, and web performance.

Every PC deserves dedicated graphics. The NVIDIA® GeForce® GT 630 graphics card delivers a premium multimedia experience and reliable gaming, every time. Insist on NVIDIA dedicated graphics for the faster, more immersive performance your customers want.

“Sixty percent of integrated graphics owners want dedicated graphics in their next PC”¹

- Deliver more performance, and fun, with amazing HD video, photo, web, and gaming.
- Accelerate performance by up to 5x over today’s integrated graphics solutions² and provide additional dedicated memory.
- Give customers the freedom to connect their PC to any 3D-enabled TV for a rich, cinematic Blu-ray 3D experience in their homes⁴.

GEFORCE GT 630 TECHNICAL SPECIFICATIONS
- CUDA CORES: 96
- GRAPHICS CLOCK: 810 MHz
- PROCESSOR CLOCK: 1620 MHz
- MEMORY INTERFACE: 128 Bit
- FRAME BUFFER: 512/1024 MB GDDR5 or 1024 MB DDR3
- MICROSOFT DIRECTX: 11
- MICROSOFT DIRECTCOMPUTE: Yes
- BLU-RAY 3D⁴: Yes
- TRUEHD AND DTS-HD AUDIO BITSTREAMING: Yes
- NVIDIA PHYSX™-READY: Yes
- MAX. ANALOG / DIGITAL RESOLUTION: 2048x1536 (analog) / 2560x1600 (digital)
- DISPLAY CONNECTORS: Dual Link DVI, HDMI, VGA

[Chart: Best-in-class performance]

NVIDIA GEFORCE GT 630 | SELLSHEET | MAY 12

NVIDIA® GEFORCE® GT 630 Features and Benefits
- HD VIDEOS: Get stunning picture clarity, smooth video, accurate color, and precise image scaling for movies and video with NVIDIA PureVideo® HD technology.
- WEB ACCELERATION: Enjoy a 2x faster web experience with the latest generation of GPU-accelerated web browsers (Internet Explorer, Google Chrome, and Firefox)⁵.
- PHOTO EDITING: Perfect and share your photos in half the time with popular apps like Adobe® Photoshop® and Nik Silver EFX Pro 2⁵.

In the United States District Court for the District of Delaware

IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE

SEMCON TECH, LLC, Plaintiff, v. APPLIED MATERIALS, INC., APPLIED MATERIALS SOUTH EAST ASIA PTE. LTD., APPLIED MATERIALS TAIWAN, LTD., APPLIED MATERIALS CHINA, APPLIED MATERIALS FRANCE S.A.R.L., AND APPLIED MATERIALS ITALIA SRL, Defendants.

C.A. No. _________
JURY TRIAL DEMANDED

COMPLAINT FOR PATENT INFRINGEMENT

This is an action for patent infringement arising under the Patent Laws of the United States of America, 35 U.S.C. § 1 et seq. in which Plaintiff Semcon Tech, LLC makes the following allegations against Defendants Applied Materials, Inc., Applied Materials South East Asia Pte. Ltd., Applied Materials Taiwan, Ltd., Applied Materials China, Applied Materials France S.A.R.L., and Applied Materials Italia Srl (collectively, “AMAT” or “Defendants”).

PARTIES

1. Plaintiff Semcon Tech, LLC (“Semcon”) is a Delaware limited liability company.

2. On information and belief, Defendant Applied Materials, Inc. (“AMAT-US”) is a Delaware corporation with its principal place of business at 3050 Bowers Avenue, Santa Clara, California. On information and belief, AMAT can be served through its registered agent, The Corporation Trust Company, Corporation Trust Center, 1209 Orange Street, Wilmington, Delaware 19801.

3. On information and belief, Defendant Applied Materials South East Asia Pte. Ltd. (“AMAT-SG”) is a corporation organized under the laws of Singapore with its principal place of business at 8 Upper Changi Road North, Singapore 506906.

4. On information and belief, Defendant Applied Materials Taiwan, Ltd. (“AMAT-TW”) is a corporation organized under the laws of Taiwan with its principal place of business at No. -

GPU Developments 2018

GPU Developments 2018

© Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions.

Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following.

License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.

Scalability of Terrain Visualization in Large Virtual Environments

Faculty of Mathematics and Natural Sciences
Department of Mathematics and Computing Science

Scalability of Terrain Visualization in Large Virtual Environments
Esger G.N. Abbink

Advisors:
dr. J.B.T.M. Roerdink, Department of Mathematics and Computing Science, University of Groningen
drs. M.J.B. van Delden and drs. M. Wierda, Virtual Environments, Systems, and Consultancy, Zeegse

June, 2000

Scalability of terrain visualization in large Virtual Environments
Esger G.N. Abbink
Department of Mathematics and Computing Science
Graduate specialization High Performance Computing and Imaging
Studentnumber 0831425
Gerkesklooster / Zeegse, April 2000
Rapport VESC WR-2000/3

Table of Contents

Scalability of terrain visualization in large Virtual Environments
1. Introduction
2. Virtual Environments
  2.1 Starting points
  2.2 Means-end analysis
  2.3 Man-in-the-loop
  2.4 Hardware
  2.5 Real-world simulators
    2.5.1 Flight Simulators
    2.5.2 Driving Simulators
    2.5.3 Rail vehicle simulators
    2.5.4 Ship Simulator
    2.5.5 VE Walkthrough
    2.5.6 Entertainment Simulators
    2.5.7 Telepresence
    2.5.8 Virtual GIS
  2.6 Summary & practical continuation
3. Scalable terrain visualization
  3.1 Introduction
  3.2 The sample implementation
    3.2.1 Introduction
    3.2.2 Getting it to work
    3.2.3 Porting the sample implementation to 4Space
  3.3 Design
    3.3.1 Goal
    3.3.2 Requirements
    3.3.3 Terrain representation
    3.3.4 Basic setup
    3.3.5 Tessellation algorithm
    3.3.6 Implementation approach
  3.4 Implementation
    3.4.1 Terrain data generation
    3.4.2 Implementing Central Differencing
    3.4.2 Interfacing 4Space
    3.4.3 Memory management
    3.4.4 Dynamic data storages
      3.4.4.1 Queue
      3.4.4.2 Dynamic List & Pool
      3.4.4.3 Red-Black Tree
      3.4.4.4 Dynamic Storage
      3.4.4.5 Evaluating performance
4. -

Adding RTX Acceleration to Iray with OptiX 7

Adding RTX acceleration to Iray with OptiX 7
Lutz Kettner, Director Advanced Rendering and Materials
July 30th, SIGGRAPH 2019

What is Iray?
- Production Rendering on CUDA
- In Production since > 10 Years
- Bring ray tracing based production / simulation quality rendering to GPUs
- New paradigm: Push Button rendering (open up new markets)
- Plugins for 3ds Max, Maya, Rhino, SketchUp, …

SIMULATION QUALITY
iray legacy
ARTISTIC FREEDOM

How Does it Work?
99% physically based Path Tracing
To guarantee simulation quality and Push Button
- Limit shortcuts and good enough hacks to minimum
- Brute force (spectral) simulation, no intermediate filtering, scale over multiple GPUs and hosts even in interactive use
- Two-way path tracing from camera and (opt.) lights
- Use NVIDIA Material Definition Language (MDL)

GTC 2014: 19 VCA * 8 Q6000 GPUs

NVIDIA Video Codec SDK - Encoder

NVIDIA VIDEO CODEC SDK - ENCODER
Application Note
vNVENC_DA-6209-001_v14 | July 2021

Table of Contents

Chapter 1. NVIDIA Hardware Video Encoder
  1.1. Introduction
  1.2. NVENC Capabilities
  1.3. NVENC Licensing Policy
  1.4. NVENC Performance
  1.5. Programming NVENC
  1.6. FFmpeg Support

Chapter 1. NVIDIA Hardware Video Encoder

1.1. Introduction

NVIDIA GPUs - beginning with the Kepler generation - contain a hardware-based encoder (referred to as NVENC in this document) which provides fully accelerated hardware-based video encoding and is independent of graphics/CUDA cores. With end-to-end encoding offloaded to NVENC, the graphics/CUDA cores and the CPU cores are free for other operations. For example, in a game recording scenario, -

Data Sheet: Quadro GV100

REINVENTING THE WORKSTATION WITH REAL-TIME RAY TRACING AND AI
NVIDIA QUADRO GV100

The Power To Accelerate AI-Enhanced Workflows
The NVIDIA® Quadro® GV100 reinvents the workstation to meet the demands of AI-enhanced design and visualization workflows. It’s powered by NVIDIA Volta, delivering extreme memory capacity, scalability, and performance that designers, architects, and scientists need to create, build, and solve the impossible.

Supercharge Rendering with AI
- Work with full fidelity, massive datasets
- Enjoy fluid visual interactivity with AI-accelerated denoising

Bring Optimal Designs to Market Faster
- Work with higher fidelity CAE simulation models
- Explore more design options with faster solver performance

Enjoy Ultimate Immersive Experiences
- Work with complex, photoreal datasets in VR
- Enjoy optimal NVIDIA Holodeck experience

FEATURES
- Four DisplayPort 1.4 Connectors³
- DisplayPort with Audio
- 3D Stereo Support with Stereo Connector³
- NVIDIA GPUDirect™ Support
- NVIDIA NVLink Support¹
- Quadro Sync II⁴ Compatibility
- NVIDIA nView® Desktop Management Software
- HDCP 2.2 Support
- NVIDIA Mosaic⁵
- Dedicated hardware video encode and decode engines⁶

SPECIFICATIONS
- GPU Memory: 32 GB HBM2
- Memory Interface: 4096-bit
- Memory Bandwidth: Up to 870 GB/s
- ECC: Yes
- NVIDIA CUDA Cores: 5,120
- NVIDIA Tensor Cores: 640
- Double-Precision Performance: 7.4 TFLOPS
- Single-Precision Performance: 14.8 TFLOPS
- Tensor Performance: 118.5 TFLOPS
- NVIDIA NVLink: Connects 2 Quadro GV100 GPUs²
- NVIDIA NVLink bandwidth: 200 GB/s

Realize New Opportunities with AI -

Voodoo5 5500 for the PC Reviewer’s Guide

Voodoo5™ 5500 for the PC Reviewer’s Guide

DRAFT - DATED MATERIAL
THE CONTENTS OF THIS REVIEWER’S GUIDE IS INTENDED SOLELY FOR REFERENCE WHEN REVIEWING Rev A VERSIONS OF VOODOO5 5500 REFERENCE BOARDS. THIS INFORMATION WILL BE REGULARLY UPDATED, AND REVIEWERS SHOULD CONTACT THE PERSONS LISTED IN THIS GUIDE FOR UPDATES BEFORE EVALUATING ANY VOODOO5 5500 BOARD.

3dfx Interactive, Inc.
4435 Fortran Dr.
San Jose, Ca 95134
408-935-4400

Brian Burke 214-570-2113 [email protected]
PT Barnum 214-570-2226 [email protected]
Bubba Wolford 281-578-7782 [email protected]

www.3dfx.com
www.3dfxgamers.com

Copyright 2000 3dfx Interactive, Inc. All Rights Reserved. All other trademarks are the property of their respective owners. Visit the 3dfx Virtual Press Room at http://www.3dfx.com/comp/pressweb/index.html.

Voodoo5 5500 Reviewers Guide xxxx 2000

Table of Contents

Benchmarking the Voodoo5 5500
• Cures for common benchmarking and image quality mistakes
• Tips for benchmarking
• Overclocking Guide

INTRODUCTION
• Optimized for DVD Acceleration
• Scalable Performance
• Trend-setting Image quality Features
• Texture Compression
• Glide 2.x and 3.x Compatibility: The Most Titles
• World-class Window’s GUI/video Acceleration

SECTION 1: Voodoo5 5500 Board Overview
• General Features
• 3D Features set
• 2D Features set
• Voodoo5 Video Subsystem
• SLI
• Texture compression
• Fill Rate vs T&L
• T-Buffer introduction
• Alternative APIs (Al Reyes on Mac, Linux, BeOS and more)
• Pricing & Availability
• Warranty
• Technical Support
• Photos, screenshots, -

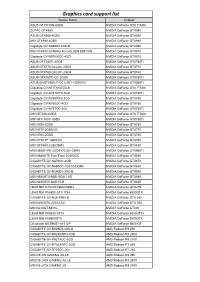

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | - |

Intel and Micron Produce Breakthrough Memory Technology

July 28, 2015

Intel and Micron Produce Breakthrough Memory Technology
New Class of Memory Unleashes the Performance of PCs, Data Centers and More

NEWS HIGHLIGHTS
● Intel and Micron begin production on new class of non-volatile memory, creating the first new memory category in more than 25 years.¹
● New 3D XPoint™ technology brings non-volatile memory speeds up to 1,000 times faster than NAND, the most popular non-volatile memory in the marketplace today.
● The companies invented unique material compounds and a cross point architecture for a memory technology that is 10 times denser than conventional memory².
● New technology makes new innovations possible in applications ranging from machine learning to real-time tracking of diseases and immersive 8K gaming.

SANTA CLARA, Calif. & BOISE, Idaho--(BUSINESS WIRE)-- Intel Corporation and Micron Technology, Inc. today unveiled 3D XPoint™ technology, a non-volatile memory that has the potential to revolutionize any device, application or service that benefits from fast access to large sets of data. Now in production, 3D XPoint technology is a major breakthrough in memory process technology and the first new memory category since the introduction of NAND flash in 1989.

This Smart News Release features an interactive multimedia capsule. View the full release here: http://www.businesswire.com/news/home/20150728005534/en/

The explosion of connected devices and digital services is generating massive amounts of new data. To make this data useful, it must be stored and analyzed very quickly, creating challenges for service providers and system builders who must balance cost, power and performance trade-offs when they design memory and storage solutions.

NVIDIA Quadro RTX for V-Ray Next

NVIDIA QUADRO RTX
V-RAY NEXT GPU

Image courtesy of © Dabarti Studio, rendered with V-Ray GPU

Quadro RTX Accelerates V-Ray Next GPU Rendering
V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU
NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.”
– Phillip Miller, Vice President, Product Management, Chaos Group

[Figure: Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Bars for Quadro RTX 6000 x2, Quadro RTX 6000, Quadro RTX 4000, and CPU (baseline 1); horizontal axis: Relative Performance, 0 to 20.]

Desktop performance. Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

Mobile 3D Devices: They're Not Little PCs!

Mobile 3D Devices -- They’re not little PCs!
Stephen Wilkinson, Graphics Software Technical Lead
Texas Instruments CSSD/OMAP

Who is this guy?
- Involved with simulation and games since 1995
- Worked on SIMNET for the US Army
- PC gaming
  - Titles include WarBirds 1 & 2, Fighter Squadron, AMA SuperBike, NHRA Drag Racing, NHRA Main Event
  - 30+ Casino Games (yeah, yeah)
- Worked on core technology for several console games
  - D&D Heroes, Mission Impossible: Operation SURMA, and T3: Redemption (NGC, PS2, Xbox)
- Former member of the Graphics and Computer Vision group at Nokia Research Center
- Now the CSSD graphics software technical lead at Texas Instruments working on OMAP

Introduction
- Mobile 3D hardware is not a “Mini-me” of your desktop PC.
- To obtain similar features and relatively high levels of performance, mobile hardware has made some tradeoffs compared to the power of your desktop

Introduction…
- If you are just learning mobile 3D or have learned about graphics and performance on a more “traditional” platform such as a PC or console, this presentation should help you:
  - Recognize the most important areas in mobile power and performance for 3D and game development
  - Take appropriate lessons learned from desktop development whenever possible…
  - Avoid lessons from the desktop which can make your game slower or more power-hungry than it could be!

Mobile performance preview
- Some you know, some you may not… Sorted by acronym the main problems are:
  - CPU
  - GPU
  - Memory
  - Your game

The Mobile CPU
- No, it doesn’t have a Hemi.

The Mobile GPU
- No, there’s not really a tiny 3dfx Voodoo™ card in that handset

Mobile Memory
- It’s SLOOOOOOOW….