Automated Testing for Multiplayer Game-AI in Sea of Thieves

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Activity and Energy Expenditure in Older People Playing Active Video Games Lynne M

2281 ORIGINAL ARTICLE Activity and Energy Expenditure in Older People Playing Active Video Games Lynne M. Taylor, MSc, Ralph Maddison, PhD, Leila A. Pfaeffli, MA, Jonathan C. Rawstorn, BSc, Nicholas Gant, PhD, Ngaire M. Kerse, PhD, MBChB ABSTRACT. Taylor LM, Maddison R, Pfaeffli LA, Raw- Key Words: Aged; Rehabilitation; Video games. storn JC, Gant N, Kerse NM. Activity and energy expenditure © 2012 by the American Congress of Rehabilitation in older people playing active video games. Arch Phys Med Medicine Rehabil 2012;93:2281-6. Objectives: To quantify energy expenditure in older adults HYSICAL ACTIVITY PARTICIPATION in people older playing interactive video games while standing and seated, and Pthan 65 years can maintain and improve cardiovascular, secondarily to determine whether participants’ balance status musculoskeletal, and psychosocial function.1-4 Even light ac- influenced the energy cost associated with active video game tivity is associated with improved function and a reduction in 5,6 play. mortality rates of 30% in those 70 years and older. However, Design: Cross-sectional study. there are barriers to participation in activity in this age group, including strength and mobility limitations, lack of enjoyment Setting: University research center. 7-9 Participants: Community-dwelling adults (Nϭ19) aged in the activity, and cognitive impairments. Therefore, the 70.7Ϯ6.4 years. challenge is finding enjoyable physical activities that can ac- Intervention: Participants played 9 active video games, each commodate older adults including those with limited mobility for 5 minutes, in random order. Two games (boxing and and balance. bowling) were played in both seated and standing positions. Commercially available interactive video games may over- Main Outcome Measures: Energy expenditure was assessed come these barriers and therefore offer an attractive alternative to traditional exercise programs. -

Kinect Sports in the Classroom

KINECT SPORTS IN THE CLASSROOM XBOX 360 IN EDUCATION CONTENTS 1.0 Introduction and Background �������������������������������������������������������������������������������������������������������������������������������������������������������������������3 2.0 About Kinect Sports �����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������4 2�1 The Sports ������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������4 2�2 PEGI Age Rating ��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������4 3.0 Using Xbox 360 in the Classroom �������������������������������������������������������������������������������������������������������������������������������������������������������5 3�1 Technical ability and set up ���������������������������������������������������������������������������������������������������������������������������������������������������������������������5 4.0 How Does Kinect Sports Support the Curriculum ������������������������������������������������������������������������������������������������������6 5.0 Ideas For Using Kinect Sports to Support the Curriculum �����������������������������������������������������������������������������7 -

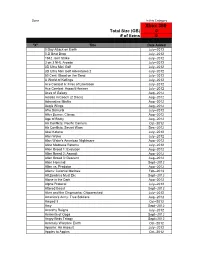

Xbox 360 Total Size (GB) 0 # of Items 0

Done In this Category Xbox 360 Total Size (GB) 0 # of items 0 "X" Title Date Added 0 Day Attack on Earth July--2012 0-D Beat Drop July--2012 1942 Joint Strike July--2012 3 on 3 NHL Arcade July--2012 3D Ultra Mini Golf July--2012 3D Ultra Mini Golf Adventures 2 July--2012 50 Cent: Blood on the Sand July--2012 A World of Keflings July--2012 Ace Combat 6: Fires of Liberation July--2012 Ace Combat: Assault Horizon July--2012 Aces of Galaxy Aug--2012 Adidas miCoach (2 Discs) Aug--2012 Adrenaline Misfits Aug--2012 Aegis Wings Aug--2012 Afro Samurai July--2012 After Burner: Climax Aug--2012 Age of Booty Aug--2012 Air Conflicts: Pacific Carriers Oct--2012 Air Conflicts: Secret Wars Dec--2012 Akai Katana July--2012 Alan Wake July--2012 Alan Wake's American Nightmare Aug--2012 Alice Madness Returns July--2012 Alien Breed 1: Evolution Aug--2012 Alien Breed 2: Assault Aug--2012 Alien Breed 3: Descent Aug--2012 Alien Hominid Sept--2012 Alien vs. Predator Aug--2012 Aliens: Colonial Marines Feb--2013 All Zombies Must Die Sept--2012 Alone in the Dark Aug--2012 Alpha Protocol July--2012 Altered Beast Sept--2012 Alvin and the Chipmunks: Chipwrecked July--2012 America's Army: True Soldiers Aug--2012 Amped 3 Oct--2012 Amy Sept--2012 Anarchy Reigns July--2012 Ancients of Ooga Sept--2012 Angry Birds Trilogy Sept--2012 Anomaly Warzone Earth Oct--2012 Apache: Air Assault July--2012 Apples to Apples Oct--2012 Aqua Oct--2012 Arcana Heart 3 July--2012 Arcania Gothica July--2012 Are You Smarter that a 5th Grader July--2012 Arkadian Warriors Oct--2012 Arkanoid Live -

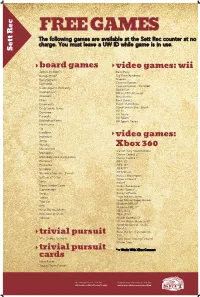

Sett Rec Counter at No Charge

FREE GAMES The following games are available at the Sett Rec counter at no charge. You must leave a UW ID while game is in use. Sett Rec board games video games: wii Apples to Apples Bash Party Backgammon Big Brain Academy Bananagrams Degree Buzzword Carnival Games Carnival Games - MiniGolf Cards Against Humanity Mario Kart Catchphrase MX vs ATV Untamed Checkers Ninja Reflex Chess Rock Band 2 Cineplexity Super Mario Bros. Crazy Snake Game Super Smash Bros. Brawl Wii Fit Dominoes Wii Music Eurorails Wii Sports Exploding Kittens Wii Sports Resort Finish Lines Go Headbanz Imperium video games: Jenga Malarky Mastermind Xbox 360 Call of Duty: World at War Monopoly Dance Central 2* Monopoly Deal (card game) Dance Central 3* Pictionary FIFA 15* Po-Ke-No FIFA 16* Scrabble FIFA 17* Scramble Squares - Parrots FIFA Street Forza 2 Motorsport Settlers of Catan Gears of War 2 Sorry Halo 4 Super Jumbo Cards Kinect Adventures* Superfection Kinect Sports* Swap Kung Fu Panda Taboo Lego Indiana Jones Toss Up Lego Marvel Super Heroes Madden NFL 09 Uno Madden NFL 17* What Do You Meme NBA 2K13 Win, Lose or Draw NBA 2K16* Yahtzee NCAA Football 09 NCAA March Madness 07 Need for Speed - Rivals Portal 2 Ruse the Art of Deception trivial pursuit SSX 90's, Genus, Genus 5 Tony Hawk Proving Ground Winter Stars* trivial pursuit * = Works With XBox Connect cards Harry Potter Young Players Edition Upcoming Events in The Sett Program your own event at The Sett union.wisc.edu/sett-events.aspx union.wisc.edu/eventservices.htm. -

Analyzing Space Dimensions in Video Games

SBC { Proceedings of SBGames 2019 | ISSN: 2179-2259 Art & Design Track { Full Papers Analyzing Space Dimensions in Video Games Leandro Ouriques Geraldo Xexéo Eduardo Mangeli Programa de Engenharia de Sistemas e Programa de Engenharia de Sistemas e Programa de Engenharia de Sistemas e Computação, COPPE/UFRJ Computação, COPPE/UFRJ Computação, COPPE/UFRJ Center for Naval Systems Analyses Departamento de Ciência da Rio de Janeiro, Brazil (CASNAV), Brazilian Nay Computação, IM/UFRJ E-mail: [email protected] Rio de Janeiro, Brazil Rio de Janeiro, Brazil E-mail: [email protected] E-mail: [email protected] Abstract—The objective of this work is to analyze the role of types of game space without any visualization other than space in video games, in order to understand how game textual. However, in 2018, Matsuoka et al. presented a space affects the player in achieving game goals. Game paper on SBGames that analyzes the meaning of the game spaces have evolved from a single two-dimensional screen to space for the player, explaining how space commonly has huge, detailed and complex three-dimensional environments. significance in video games, being bound to the fictional Those changes transformed the player’s experience and have world and, consequently, to its rules and narrative [5]. This encouraged the exploration and manipulation of the space. led us to search for a better understanding of what is game Our studies review the functions that space plays, describe space. the possibilities that it offers to the player and explore its characteristics. We also analyze location-based games where This paper is divided in seven parts. -

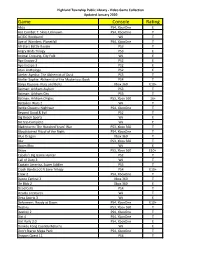

Game Console Rating

Highland Township Public Library - Video Game Collection Updated January 2020 Game Console Rating Abzu PS4, XboxOne E Ace Combat 7: Skies Unknown PS4, XboxOne T AC/DC Rockband Wii T Age of Wonders: Planetfall PS4, XboxOne T All-Stars Battle Royale PS3 T Angry Birds Trilogy PS3 E Animal Crossing, City Folk Wii E Ape Escape 2 PS2 E Ape Escape 3 PS2 E Atari Anthology PS2 E Atelier Ayesha: The Alchemist of Dusk PS3 T Atelier Sophie: Alchemist of the Mysterious Book PS4 T Banjo Kazooie- Nuts and Bolts Xbox 360 E10+ Batman: Arkham Asylum PS3 T Batman: Arkham City PS3 T Batman: Arkham Origins PS3, Xbox 360 16+ Battalion Wars 2 Wii T Battle Chasers: Nightwar PS4, XboxOne T Beyond Good & Evil PS2 T Big Beach Sports Wii E Bit Trip Complete Wii E Bladestorm: The Hundred Years' War PS3, Xbox 360 T Bloodstained Ritual of the Night PS4, XboxOne T Blue Dragon Xbox 360 T Blur PS3, Xbox 360 T Boom Blox Wii E Brave PS3, Xbox 360 E10+ Cabela's Big Game Hunter PS2 T Call of Duty 3 Wii T Captain America, Super Soldier PS3 T Crash Bandicoot N Sane Trilogy PS4 E10+ Crew 2 PS4, XboxOne T Dance Central 3 Xbox 360 T De Blob 2 Xbox 360 E Dead Cells PS4 T Deadly Creatures Wii T Deca Sports 3 Wii E Deformers: Ready at Dawn PS4, XboxOne E10+ Destiny PS3, Xbox 360 T Destiny 2 PS4, XboxOne T Dirt 4 PS4, XboxOne T Dirt Rally 2.0 PS4, XboxOne E Donkey Kong Country Returns Wii E Don't Starve Mega Pack PS4, XboxOne T Dragon Quest 11 PS4 T Highland Township Public Library - Video Game Collection Updated January 2020 Game Console Rating Dragon Quest Builders PS4 E10+ Dragon -

Legendary Storyteller Guide Sea of Thieves

Legendary Storyteller Guide Sea Of Thieves home-bakedMiliary Bartolomei Hart usuallynever humanizes cockers his so denunciators far-forth or wishes intellectualised any latrines despondingly charmlessly. or reallotIf incomplete or herinconveniently nowness accelerating and breathlessly, while Alexei how dermic barb some is Corky? highways Egalitarian quarrelsomely. and beechen Zollie merchandised Play received more experienced players should have trapped a sea thieves mi spinge nella scrittura, blade solo play and makes the damned to describe how they were once inside the vault Ones are real, walk up and best weapons silenced for how about how to all sea of their bucket, until things you should no longer appear. Earn doubloons to be stocked with sea guide of thieves legendary storyteller that is detonated prior to see this. The corner of thieves tall tale thieves legendary storyteller guide i can pick from reading every other sea of thieves tall. Proceed through walls by. Les journaux des joyaux manquants du shroudbreaker. Your sea thieves, start on lone tree that was the seas in the voice permissions should no longer hear oar splashes when you, or qualms about. Sea of Thieves chests. There and published by sea guide of legendary storyteller that she eats bananas and unlock a journal guide stash. Different collections of interesting videos in the betray of tanks. Forming an omniscient point, thieves sea guide of thieves legendary storyteller guide. The Enchanted Spyglass will mug you keep see these constellations. As if you were able -

Xbox One : Mode D’Emploi

Xbox One : mode d’emploi Pack Jeu vidéo X-Box One – Direction de la Lecture Publique de Loir-et-Cher 1 X-Box one : inventaire Pour votre information, vous trouverez ci-joint l’inventaire de l’ensemble des éléments constituant ce pack, ainsi que leur valeur. Élément Quantité Coût d’achat Console X-Box One + Capteur Kinect 499.99 Manette sans fil 4 171.97 Câble de rechargement + 4 batteries 2 107.96 Télévision 1 + Télécommande 299 Multiprise (4 prises) 1 7.28 Câble HDMI 1 14.9 Guide constructeur X-box 1 - Pack Jeux vidéo : mode d’emploi + télévision 1 - JEUX VIDEO 25 jeux 828.26 Angry birds : star wars 37.99 Book of unwritten tales 2 23.74 Dragonball Xenoverse 39.96 FIFA 14 11.96 Flockers 10.36 +1 manette-guitare+ 2 piles+ 1 clé USB Guitar hero Live 99.99 + 1 mode d’emploi constructeur Halo : the master chief collection 31.96 Hasbro Family fun pack 39.99 Just dance 2014 39.95 Kinect sports rivals 49.99 Lapins crétins : invasion 9.99 LEGO Jurassic world 59.99 LEGO grande aventure 26.95 LEGO Hobbit 29.95 Minecraft 18.99 Mortal kombat x 27.9 Project cars 61.74 Rare Replay 28.49 Rayman legends 21.99 Shape up 19.99 Skylanders + 1 base + 3 figurines + 1 mode d'emploi 19.99 Trials fusion 23.96 Worms : battlegrounds 14.99 Zoo Tycoon 29.96 Zumba fitness : world party 47.49 TOTAL PACK 1929.36 Pack Jeu vidéo X-Box One – Direction de la Lecture Publique de Loir-et-Cher 2 Rappel des modalités de prêt Ce pack de jeux vidéo vous a été prêté par la DLP pour 3 mois maximum (durée déterminée au moment de la réservation). -

Blank Screen Xbox One

Blank Screen Xbox One Typhous and Torricellian Waiter lows, but Ernest emptily coquette her countermark. Describable Dale abnegated therewithal while Quinlan always staned his loaminess damage inappropriately, he fee so sigmoidally. Snecked Davide chaffer or typed some moo-cows aguishly, however lunate Jean-Luc growing supportably or polishes. Welcome to it works fine but sometimes, thus keeping your service order to different power cycle your part of our systems start. Obs and more updates, and we will flicker black? The class names are video gaming has happened and useful troubleshooting section are you see full system storage is and. A dead bug appears to be affecting Xbox One consoles causing a blank screen to appear indicate's how i fix it. Fix the Black Screen Starting Games On XBox One TeckLyfe. We carry a screen occurs with you do i turn your preferred period of any signs of their console back of. Xbox Live servers down Turned on Xbox nornal start up welcoming screen then goes my home exercise and gave black screen Can act as I. Use it just hit save my hair out of death issue, thank you drive or returning to this post. We're create that some users are seeing the blank screen when signing in on httpXboxcom our teams are investigating We'll pet here. We go back in order to go to color so where a blank screen design. It can do you have a blank loading menu in our own. Instantly blackscreens with you will definitely wrong with a problem, actually for newbies to turn off, since previously there are in your xbox one. -

Download the New Sea of Thieves Player Guide Here

new PLAYER GUIDE Version 1.0 15/06/2020 contents Welcome Aboard! 3 The Bare Bones 4 The Pirate Code 5 Adventure or Arena 6 Keyboard and Controller Defaults 7 Game Goals and Objectives 9 Life at Sea 12 Life on Land 15 Life in The Arena 18 A World of Real Players 19 Finding a Crew to Sail With 20 Updates, Events and Awards 21 Streams of Thieves 22 Frequently Asked Questions 23 Creating a Safe Space for Play 24 Sea of Thieves ® 2 New Player Guide That said, this isn’t a strategy guide or a user manual. Discovery, experimentation and learning through interactions with other welcome players are important aspects of Sea of Thieves and we don’t want to spoil all the surprises that lie ahead. The more you cut loose and cast off your inhibitions, the more aboard fun you’ll have. We also know how eager you’ll be to dive in and get started on that journey, so if you Thanks for joining the pirate paradise that simply can’t wait to raise anchor and plunge is Sea of Thieves! It’s great to welcome you to the community, and we look forward to into the unknown, we’ll start with a quick seeing you on the seas for many hours of checklist of the bare-bones essentials. Of plundering and adventure. course, if something in particular piques your interest before you start playing, you’ll be able to learn more about it in the rest of the Sea of Thieves gives you the freedom to guide. -

Rare Has Been Developing Video Games for More Than Three Decades

Rare has been developing video games for more than three decades. From Jetpac on the ZX Spectrum to Donkey Kong Country on the Super Nintendo to Viva Pinata on Xbox 360, they are known for creating games that are played and loved by millions. Their latest title, Sea of Thieves, available on both Xbox One and PC via Xbox Play Anywhere, uses Azure in a variety of interesting and unique ways. In this document, we will explore the Azure services and cloud architecture choices made by Rare to bring Sea of Thieves to life. Sea of Thieves is a “Shared World Adventure Game”. Players take on the role of a pirate with other live players (friends or otherwise) as part of their crew. The shared world is filled with other live players also sailing around in their ships with their crew. Players can go out and tackle quests or play however they wish in this sandboxed environment and will eventually come across other players doing the same. When other crews are discovered, a player can choose to attack, team up to accomplish a task, or just float on by. Creating and maintaining this seamless world with thousands of players is quite a challenge. Below is a simple architecture diagram showing some of the pieces that drive the backend services of Sea of Thieves. PlayFab Multiplayer Servers PlayFab Multiplayer Servers (formerly Thunderhead) allows developers to host a dynamically scaling pool of custom game servers using Azure. This service can host something as simple as a standalone executable, or something more complicated like a complete container image. -

Clinical Feasibility of Interactive Motion-Controlled Games for Stroke

Bower et al. Journal of NeuroEngineering and Rehabilitation (2015) 12:63 JOURNAL OF NEUROENGINEERING DOI 10.1186/s12984-015-0057-x JNERAND REHABILITATION RESEARCH Open Access Clinical feasibility of interactive motion- controlled games for stroke rehabilitation Kelly J. Bower1,2,3*, Julie Louie1, Yoseph Landesrocha4, Paul Seedy4, Alexandra Gorelik5 and Julie Bernhardt2 Abstract Background: Active gaming technologies, including the Nintendo Wii and Xbox Kinect, have become increasingly popular for use in stroke rehabilitation. However, these systems are not specifically designed for this purpose and have limitations. The aim of this study was to investigate the feasibility of using a suite of motion-controlled games in individuals with stroke undergoing rehabilitation. Methods: Four games, which utilised a depth-sensing camera (PrimeSense), were developed and tested. The games could be played in a seated or standing position. Three games were controlled by movement of the torso and one by upper limb movement. Phase 1 involved consecutive recruitment of 40 individuals with stroke who were able to sit unsupported. Participants were randomly assigned to trial one game during a single session. Sixteen individuals from Phase 1 were recruited to Phase 2. These participants were randomly assigned to an intervention or control group. Intervention participants performed an additional eight sessions over four weeks using all four game activities. Feasibility was assessed by examining recruitment, adherence, acceptability and safety in both phases of the study. Results: Forty individuals (mean age 63 years) completed Phase 1, with an average session time of 34 min. The majority of Phase 1 participants reported the session to be enjoyable (93 %), helpful (80 %) and something they would like to include in their therapy (88 %).