Fine-Grained Painting Classification

Recommended publications

Supplementary Information For

Supplementary Information for "Dissecting landscape art history with information theory". Byunghwee Lee, Min Kyung Seo, Daniel Kim, In-seob Shin, Maximilian Schich, Hawoong Jeong, Seung Kee Han. Hawoong Jeong, E-mail: [email protected]. Seung Kee Han, E-mail: [email protected]. This PDF file includes: Supplementary text; Figs. S1 to S20; Tables S1 to S2; References for SI reference citations. www.pnas.org/cgi/doi/10.1073/pnas.2011927117. Supporting Information Text. I. Datasets. A. Data curation. Digital scans of landscape paintings were collected from two major online sources: Wiki Art (WA) (1) and the Web Gallery of Art (WGA) (2). For our purpose, we collected 12,431 landscape paintings by 1,071 artists assigned to 61 nationalities from WA, and 3,610 landscape paintings by 816 artists assigned to 20 nationalities from WGA. While the overall number of paintings from WGA is smaller than from WA, the WGA dataset has a larger volume of paintings produced before 1800 CE. Therefore, we utilize both datasets in a complementary way. As the same paintings can be included in both datasets, we constructed a unified set of painting images by filtering out the duplicates using the paintings' meta-information (title, painter, completion date, etc.). The filtering process is as follows. -

The Russian Revolution: a Wider Perspective

THE RUSSIAN REVOLUTION: A WIDER PERSPECTIVE. PREFACE to the Source Collection on the Russian Revolution. This is a collection of sources provided by members of the Euroclio network and curated by three members of the Historiana team. It is not a comprehensive overview of the Russian Revolution. Its purpose is to provide some insights into how politicians, diplomats, senior military officers, other officials, revolutionaries, eye witnesses, bystanders, newspaper editors and journalists, ordinary people and even children perceived some of the key events in Russia from January 1917 through to December 1922. We hope that this transnational and multiperspective collection will widen students’ understanding of what happened in Russia in those critical years. The sources have been provided by history teachers and historians from 13 countries, including the Russian Federation, neighbouring states that in 1917 were part of the Russian Empire, states that were then allies or enemies of Russia and even states which were neutral non-combatants in 1917. To obtain these sources the contributors turned to their own national digital and physical archives. Where necessary, contributors summarised texts in English. For EUROCLIO this was a pilot experiment in collecting historical sources and we are very grateful to everyone who took part. We think the experiment was successful and EUROCLIO will be planning further crowd-sourcing of collections on other significant moments and developments in world history in the future. Bob Stradling, Louise Sträuli and Giulia Rossi, Summer 2019. [Image caption: Painting by British artist David Jagger, entitled The Bolshevik (1918). The image combines the features of several Bolshevik leaders. Source: Canadian War Museum, Public Domain.] THE HISTORICAL CONTEXT. This collection is divided into four sections. -

Post-War British Painting

A New Individualism: Post-war British Painting. On a trip to Russia in the spring of 2013 I visited The State Tretyakov Gallery in Moscow for the first time and found myself admiring Nicholai Ge’s “What is truth?” (1890), Ilya Repin’s Religious Procession in Kursk Province (1880-83), Valentin Serov’s Girl with Peaches (1887), Vasily Surikov’s Morning of the Execution of the Streltsy (1881) and a room full of pictures by Vasily Vereshchagin. Here was a museum containing art by master painters whose output spoke with a resonance which moved me deeply, and as a British painter whose own work focuses on socio-political subjects, I found myself feeling ashamed that I’d never heard of these great artists, whilst at the same time being intensely captivated by their work. Most of these painters had been part of a group known as ‘The Peredvizhniki’ or ‘Wanderers’; they were artists who had decided to break away from the creative traditions and limitations of the Academy and its exacting separations between low and high art. Instead they had decided to set their own agenda. As artists they were often critical of social injustice, yet they also wanted to celebrate the simple beauty and dignity they found in peasant life. This led me to reflect on the many parallels I saw between the Peredvizhniki and post-war British painting, especially ‘The School of London’, as both groups defied conventional attitudes and artistic fashions in favour of pursuing their own programme which often focused on the politics of human experience. -

Cubism (Surface—Plane), 1912

perished because of a negative and exclusive solution to the question of shading. Our age is obliged by force of circumstances to finish what our predecessors passed on to us. The path of search in this direction is broad, its bends are diverse, its forks numerous; the solutions will be many. Among them, those connected in our art with the name of A. Exter will remain as an example of courage, freedom, and subtlety. The upsurge of strength and courage in the plastic arts wanes neither beyond the Rhine nor at home, and it is expressed in the high level of pure painting unprecedented in our country, a phenomenon that is characteristic of its contemporary state. DAVID BURLIUK Cubism (Surface—Plane), 1912 For biography see p. 8. The text of this piece, "Kubizm," is from an anthology of poems, prose pieces, and articles, Poshchechina obshchestvennomu vkusu [A Slap in the Face of Public Taste] (Moscow, December 1912 [according to bibl. R350, p. 17, although January 1913, according to KL]), pp. 95-101 [bibl. R275]. The collection was prefaced by the famous declaration of the same name signed by David Burliuk, Velimir Khlebnikov, Aleksei Kruchenykh, and Vladimir Mayakovsky and dated December 1912. The volume also contained a second essay by David Burliuk on texture [bibl. R269], verse by Khlebnikov and Benedikt Livshits, and four prose sketches by Vasilii Kandinsky [for further details see bibl. 133, pp. 45-50]. Both the essay on cubism and the one on texture were signed by N. Burliuk, although it is obvious that both were written by David and not by Nikolai (David's youngest brother and a poet of some merit). -

General Interest

GENERAL INTEREST. Contents: General Interest. FALL HIGHLIGHTS: Art; Art History; Art; Photography; Writings & Group Exhibitions; Architecture & Design; Journals & Annuals. MORE NEW BOOKS ON ART & CULTURE: Art; Writings & Group Exhibitions; Photography; Architecture & Design. Backlist Highlights. Index. Catalogue Editor: Thomas Evans. Art Direction: Stacy Wakefield Forte. Image Production: Nicole Lee. Data Production: Alexa Forosty. Copy Writing: Cameron Shaw. Printing: R.R. Donnelley. Front cover image: Marcel Broodthaers, “Picture Alphabet,” used as material for the projection “ABC-ABC Image” (1974). Photo: Philippe De Gobert. From Marcel Broodthaers: Works and Collected Writings, published by Poligrafa. See page 62. Back cover image: Allan McCollum, “Visible Markers,” 1997–2002. Photo © Andrea Hopf. From Allan McCollum, published by JRP|Ringier. See page 84. Maurizio Cattelan and Pierpaolo Ferrari, “TP 35.” See Toilet Paper issue 2, page 127. GENERAL INTEREST. THE MUSEUM OF MODERN ART, NEW YORK. De Kooning: A Retrospective. Edited and with text by John Elderfield. Text by Jim Coddington, Jennifer Field, Delphine Huisinga, Susan Lake. Published in conjunction with the first large-scale, multi-medium, posthumous retrospective of Willem de Kooning’s career, this publication offers an unparalleled opportunity to appreciate the development of the artist’s work as it unfolded over nearly seven decades, beginning with his early academic works, made in Holland before he moved to the United States in 1926, and concluding with his final, sparely abstract paintings of the late 1980s. The volume presents approximately 200 paintings, sculptures, drawings and prints, covering the full diversity of de Kooning’s art and placing his many masterpieces in the context of a complex and fascinating pictorial practice. -

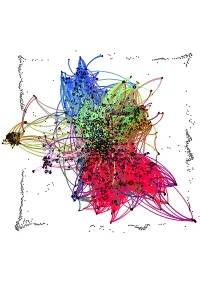

Network Map of Knowledge And

Humphry Davy George Grosz Patrick Galvin August Wilhelm von Hofmann Mervyn Gotsman Peter Blake Willa Cather Norman Vincent Peale Hans Holbein the Elder David Bomberg Hans Lewy Mark Ryden Juan Gris Ian Stevenson Charles Coleman (English painter) Mauritz de Haas David Drake Donald E. Westlake John Morton Blum Yehuda Amichai Stephen Smale Bernd and Hilla Becher Vitsentzos Kornaros Maxfield Parrish L. Sprague de Camp Derek Jarman Baron Carl von Rokitansky John LaFarge Richard Francis Burton Jamie Hewlett George Sterling Sergei Winogradsky Federico Halbherr Jean-Léon Gérôme William M. Bass Roy Lichtenstein Jacob Isaakszoon van Ruisdael Tony Cliff Julia Margaret Cameron Arnold Sommerfeld Adrian Willaert Olga Arsenievna Oleinik LeMoine Fitzgerald Christian Krohg Wilfred Thesiger Jean-Joseph Benjamin-Constant Eva Hesse `Abd Allah ibn `Abbas Him Mark Lai Clark Ashton Smith Clint Eastwood Therkel Mathiassen Bettie Page Frank DuMond Peter Whittle Salvador Espriu Gaetano Fichera William Cubley Jean Tinguely Amado Nervo Sarat Chandra Chattopadhyay Ferdinand Hodler Françoise Sagan Dave Meltzer Anton Julius Carlson Bela Cikoš Sesija John Cleese Kan Nyunt Charlotte Lamb Benjamin Silliman Howard Hendricks Jim Russell (cartoonist) Kate Chopin Gary Becker Harvey Kurtzman Michel Tapié John C. Maxwell Stan Pitt Henry Lawson Gustave Boulanger Wayne Shorter Irshad Kamil Joseph Greenberg Dungeons & Dragons Serbian epic poetry Adrian Ludwig Richter Eliseu Visconti Albert Maignan Syed Nazeer Husain Hakushu Kitahara Lim Cheng Hoe David Brin Bernard Ogilvie Dodge Star Wars Karel Capek Hudson River School Alfred Hitchcock Vladimir Colin Robert Kroetsch Shah Abdul Latif Bhittai Stephen Sondheim Robert Ludlum Frank Frazetta Walter Tevis Sax Rohmer Rafael Sabatini Ralph Nader Manon Gropius Aristide Maillol Ed Roth Jonathan Dordick Abdur Razzaq (Professor) John W. -

Russian Art 1

RUSSIAN ART. Christie’s dominated the global market for Russian Works of Art and Fabergé in 2016, with our Russian Art sales achieving more than £12 million internationally. For the tenth consecutive season, our Russian Art auctions saw the highest sell-through rates in the market. With a focus on outstanding quality, Christie’s continues to attract both emerging and established collectors in the field. For over a decade, Christie’s has set world auction records in every Russian Art sale. We have broken a total of six records in the past two years, including two in excess of £4 million. Christie’s has set world records for over 50 of Russia’s foremost artists, including Goncharova, Repin, Levitan, Vereshchagin, Vasnetsov, Borovikovsky, Serov, Somov, Lentulov, Mashkov, Annenkov and Tchelitchew. Six of the 10 most valuable paintings ever purchased in a Russian Art sale were sold at Christie’s. Christie’s remains the global market leader in the field of Russian Works of Art and Fabergé, consistently achieving the highest percentage sold by both value and lot for Russian Works of Art. Christie’s closes 2016 with a 60% share of the global Fabergé market, and a 62% share of the global market for Russian Works of Art. Cover: PROPERTY FROM AN IMPORTANT EUROPEAN COLLECTION. KONSTANTIN KOROVIN (1861–1939), Woodland brook, 1921. Estimate: £120,000–150,000. Sold for: £317,000. London, King Street · November 2016. Back cover: PROPERTY OF A MIDDLE EASTERN COLLECTOR. A GEM-SET PARCEL-GILT SILVER-MOUNTED CERAMIC TOBACCO HUMIDOR. The mounts marked K. -

Romanov News Новости Романовых

Romanov News / Новости Романовых. By Ludmila & Paul Kulikovsky. №114, September 2017. [Image caption: Emperor Nicholas I. Watercolour by Alexander I. Klünder.] Monument to Emperor Nicholas I unveiled in Czech Republic. September 19. TASS - A monument to Emperor Nicholas I (1825-1855) was opened in the spa town of Teplice in the north of the Czech Republic. This was announced by Consul-General of the Consulate General of the Russian Federation in Karlovy Vary (West Bohemia) Igor Melnik. "The monument to Nicholas I was erected in the very centre of Teplice next to the monuments of Peter the Great and Alexander I," he stressed. "This idea, supported by local authorities, was conceived long ago, but was postponed, primarily because of the lack of necessary funds." Monuments to Russian autocrats in Teplice were created by People's Artist of Russia Vladimir Surovtsev. The patrons of the project are the organization of Russian compatriots in the Czech Republic "The Ark-Arch" and the General Consulate of the Russian Federation in Karlovy Vary. Sovereigns from the Romanov dynasty, actively implementing the idea of uniting the Slavs under the sceptre of mighty Russia in the international arena, have forever entered the history of Teplice. The Grand Duke and future Emperor of Russia Nicholas I visited this city twice: in 1815 at the age of 19, and in 1818, when he turned 22. He took part in laying the foundation and then opening a monument to Russian soldiers who died for Europe's freedom in the struggle against Napoleon. The elder brother of Nicholas I, Emperor Alexander I, arrived in Teplice in 1813, during the foreign campaigns of the Russian Imperial Army in the Napoleonic wars. -

Henryk Siemiradzki and the International Artistic Milieu

ACCADEMIA POLACCA DELLE SCIENZE, BIBLIOTECA E CENTRO DI STUDI A ROMA. CONFERENZE 145. HENRYK SIEMIRADZKI AND THE INTERNATIONAL ARTISTIC MILIEU IN ROME. FRANCESCO TOMMASINI, L’ITALIA E LA RINASCITA DELLA POLONIA INDIPENDENTE. Edited by Maria Nitka and Agnieszka Kluczewska-Wójcik. ROMA 2020. ISSN 0239-8605. ISBN 978-83-956575-5-9. Published by Accademia Polacca delle Scienze, Biblioteca e Centro di Studi a Roma, vicolo Doria, 2 (Palazzo Doria), 00187 Roma, tel. +39 066792170, e-mail: [email protected], www.rzym.pan.pl. The conference was conceived by the Polish Institute of World Art Studies (Polski Instytut Studiów nad Sztuką Świata) as part of the programme of the Ministry of Science and Higher Education of the Republic of Poland (Polish Ministry of Science and Higher Education) “Narodowy Program Rozwoju Humanistyki” (National Programme for the Development of Humanities) - “Henryk Siemiradzki: Catalogue Raisonné of the Paintings” (“Tradition 1 a”, no. 0504/ nprh4/h1a/83/2015). The conference was organised with the support and contribution of the National Institute of Polish Cultural Heritage POLONIKA (Narodowy Instytut Polskiego Dziedzictwa Kulturowego za Granicą POLONIKA). Editors: Maria Nitka, Agnieszka Kluczewska-Wójcik. Review: Prof. -

Un Etrusco Nel Novecento L'arte in Russia Negli Anni Che Sconvolsero

n° 383. Un etrusco nel Novecento; L’arte in Russia negli anni che sconvolsero il mondo; Al di là delle nuvole; Insolito senese; Hammershøi, il pittore di stanze tranquille. No. 383, January 2018. © All rights reserved, Fondazione Internazionale Menarini. Reproduction of the texts and photographs, even in part, is prohibited. Managing editor: Lorenzo Gualtieri. Editorial office and correspondence: «Minuti», Edificio L - Strada 6 - Centro Direzionale Milanofiori, I-20089 Rozzano (Milan, Italy). www.fondazione-menarini.it. NOTEBOOK. Galileo Returns to Padua. Rivoluzione Galileo, at Palazzo del Monte di Pietà of Padua until 18 March 2018, illustrates the complex figure of one of the absolute protagonists of the Italian and European Seicento, who taught for 18 years at the Venetian city’s university – years remembered by the great scientist as the happiest of his life due to the freedom accorded him to teach as he saw fit. The exhibition shows us the many facets of Galileo the man: from the scientist, father of the experimental method, to the music theorist and lute player; to his qualities as a draughtsman; from Galileo the inventor – not only of an improved spyglass but also of the microscope and the compass – to the ‘everyday’ Galileo, scholar of viticulture and compounder and merchant of medicines in pill form. [Image captions: Guercino: Atlas; Giandomenico Tiepolo: Hercules.] [...] owners’ heirs to the Palladio Museum of Vicenza where they have been on show since November 2017. The frescoes were painted two decades after the Tiepolos, father and son, decorated Villa Valmarana [...] The Dutch 17th Century in Shanghai and Abu Dhabi. Until 25 February 2017, at the Long Museum of Shanghai, Rembrandt, Vermeer and Hals in the Dutch Golden Age: Ma- -

International Scholarly Conference: The Peredvizhniki Association of Art Exhibitions. On the 150th Anniversary of the Foundation

International scholarly conference THE PEREDVIZHNIKI ASSOCIATION OF ART EXHIBITIONS. ON THE 150TH ANNIVERSARY OF THE FOUNDATION. ABSTRACTS. 19th May, Wednesday, morning session. Tatyana YUDENKOVA, State Tretyakov Gallery; Research Institute of Theory and History of Fine Arts of the Russian Academy of Arts, Moscow. Peredvizhniki: Between Creative Freedom and Commercial Benefit. The fate of Russian art in the second half of the 19th century was inevitably associated with an outstanding artistic phenomenon that went down in the history of Russian culture under the name of the Peredvizhniki movement. As the movement took shape and matured, the Peredvizhniki became undisputed leaders in the development of art. They quickly gained the public’s affection and took an important place in Russia’s cultural life. Russian art is deeply indebted to the Peredvizhniki for discovering new themes and subjects, developing critical genre painting, and for their achievements in psychological portrait painting. The Peredvizhniki changed people’s attitude to the Russian national landscape, and made them take a fresh look at the course of Russian history. Their critical insight into contemporary events acquired a completely new quality. Touching on painful and challenging top-of-the-agenda issues, they did not forget about eternal values, guessing the existential meaning behind everyday details, and seeing archetypal importance in current-day matters. Their best paintings made up the national art school and in many ways contributed to shaping the national identity. The Peredvizhniki -

Invisible Architecture: Ideologies of Space in the Nineteenth-Century City

Invisible Architecture: Ideologies of Space in the Nineteenth-Century City. A thesis submitted to The University of Manchester for the degree of Doctor of Philosophy in the Faculty of Humanities. 2014. Ben Moore, School of Arts, Languages and Cultures. Contents: Abstract; Declaration and Copyright Statement; Acknowledgments; Note on Abbreviations and Editions. Introduction: ‘Invisible’ vs ‘Ideology’; The Constellation and the Dialectical Image; The Optical Unconscious; Architectural Unconscious; The City from Above and the City from Below; Reading Modernity, Reading Architecture; Cities and Texts. Chapters. 1. Gogol’s Dream-City: Unity and Multiplicity; Imagination and Dreams; Arabesque, Ecstasy, Montage; The Overcoat. 2. The Underground City: Kay, Engels and Gaskell: James Kay and Friedrich Engels’s Manchester; Cellar-dwelling; Mary Barton’s Cellars. 3. The Unstable City: Dombey and Son: The Two Houses of Dombey; The Trading House; The Domestic House; The Railway. 4. The Uncanny City: Our Mutual Friend: In Search of a Clue; The River and the Gaze; Uncanny Houses; Hidden Secrets and Hauntings; Uncanny Return; The Hollow down by the Flare. 5. Zola’s Transparent City: The Spectacle as Relation between Part and Whole; Zola and Montage; La Curée and Haussmann’s Paris; Au Bonheur des Dames and the Commodified World. Conclusion: Thoughts on Whiteness: The Whiteness of the Whale; Two Theories of Whiteness; Whiteness in the City I: Concealment and Revelation; Whiteness in the City II: Ornament and Fashion; Whiteness in the City III: Targeted by Whiteness; Carker’s Whiteness. Bibliography. List of Illustrations 1.