Evaluation of Off-The-Shelf OCR Technologies

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

OCR Pwds and Assistive Qatari Using OCR Issue No

Nafath, Issue no. 15
www.mada.org.qa

Featured in this issue:
• Arabic Optical Character Recognition and Assistive Technology
• State of the Art in Arabic OCR: Qatari Research Efforts
• Smart Apps for PWDs using OCR
• Machine Learning, Deep Learning and OCR Revitalizing Technology
• Arabic Optical Character Recognition (OCR) Technology at Qatar National Library
• Overview of Arabic OCR and Related Applications
• Examples of Optical Character Recognition Tools

About Mada
Mada Center is a private institution for public benefit, which was founded in 2010 as an initiative that aims at promoting digital inclusion and building a technology-based community that meets the needs of persons with functional limitations (PFLs), that is, persons with disabilities (PWDs) and the elderly in Qatar. Mada today is the world's Center of Excellence in digital access in Arabic. Through strategic partnerships, the center works to enable the education, culture and community sectors through ICT to achieve an inclusive community and educational system. The Center achieves its goals by building partners' capabilities and supporting the development and accreditation of digital platforms in accordance with international standards of digital access.

About Nafath
Nafath aims to be a key information resource for disseminating the facts about the latest trends and innovation in the field of ICT Accessibility. It is published in English and Arabic on a quarterly basis and intends to be a window of information to the world, highlighting the pioneering work done in our field to meet the growing demands of ICT Accessibility and Assistive Technology products and services in Qatar and the Arab region.

Reconocimiento De Escritura Lecture 4/5 --- Layout Analysis

Reconocimiento de Escritura, Lecture 4/5: Layout Analysis
Daniel Keysers, Jan/Feb 2008 (Keysers: RES-08)

Outline
• Detection of Geometric Primitives: The Hough-Transform; RAST
• Document Layout Analysis: Introduction; Algorithms for Layout Analysis; A 'New' Algorithm: Whitespace Cuts; Evaluation of Layout Analysis; Statistical Layout Analysis
• OCR: Introduction; OCR fonts; Tesseract; Sources of OCR Errors

Detection of Geometric Primitives
Some geometric entities important for DIA:
• text lines
• whitespace rectangles (background in documents)

Hough-Transform for Line Detection
Assume we are given a set of points $(x_n, y_n)$ in the image plane. For all points on a line we must have

$$y_n = a_0 + a_1 x_n$$

If we want to determine the line, each point implies a constraint

$$a_1 = \frac{y_n}{x_n} - \frac{1}{x_n}\, a_0$$

The space spanned by the model parameters $a_0$ and $a_1$ is called model space, parameter space, or Hough space.
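The voting idea behind the Hough transform for the line model y = a0 + a1·x can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's implementation: the function name `hough_line`, the discrete list of candidate slopes, the bin width `a0_step`, and the sample points are all assumptions made here. For each point and each candidate slope a1, the point's constraint is rearranged to a0 = y − a1·x, and the resulting (a0, a1) cell in Hough space receives one vote.

```python
from collections import Counter


def hough_line(points, slopes, a0_step=1.0):
    """Vote in (a0, a1) parameter space for the line y = a0 + a1 * x.

    `slopes` is a hypothetical discretisation of a1; `a0_step` is the
    bin width used for a0. Returns (a0, a1, votes) for the strongest cell.
    """
    votes = Counter()
    for x, y in points:
        for a1 in slopes:
            a0 = y - a1 * x                      # constraint implied by this point
            a0_bin = round(a0 / a0_step) * a0_step
            votes[(a0_bin, a1)] += 1             # one vote per (point, slope) pair
    (a0, a1), count = votes.most_common(1)[0]
    return a0, a1, count


# Ten points on y = 2 + 0.5 x, plus two outliers (illustrative data).
points = [(float(x), 2.0 + 0.5 * x) for x in range(10)]
points += [(3.0, 9.0), (7.0, 1.0)]
slopes = [i / 4 for i in range(-8, 9)]           # -2.0, -1.75, ..., 2.0

a0, a1, count = hough_line(points, slopes)
print(a0, a1, count)                             # prints: 2.0 0.5 10
```

The outliers vote too, but their votes land in other cells, so the cell belonging to the true line still collects the most votes. A slope-intercept parameterisation cannot represent vertical lines, which is why practical implementations often use an angle-distance parameterisation instead.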

An Accuracy Examination of OCR Tools

International Journal of Innovative Technology and Exploring Engineering (IJITEE), ISSN: 2278-3075, Volume-8, Issue-9S4, July 2019

An Accuracy Examination of OCR Tools
Jayesh Majumdar, Richa Gupta

Abstract: In this research paper, the authors carry out a comparative study of optical character recognition using different open source OCR tools. The optical character recognition (OCR) method is used to extract text from images. OCR has various applications, which include extracting text from any document or image, or simply reading and processing text available in digital form. The accuracy of OCR can depend on text segmentation and pre-processing algorithms. Sometimes it is difficult to retrieve text from an image because of differing size, style, and orientation, a complex image background, etc. The authors tried to extract the vehicle number from vehicle number plates using various OCR tools such as Tesseract, GOCR, Ocrad and TensorFlow. The authors have tried to identify the best possible method for optical character recognition and provide a comparative analysis of the tools' accuracy.

Keywords: OCR tools; Ocrad; GOCR; TensorFlow; Tesseract

I. INTRODUCTION
Optical character recognition is a method by which text in images of handwritten documents, scripts, passport documents, invoices, vehicle number plates, bank statements, computerized receipts, business cards, mail, printouts of static data, any appropriate documentation, or any picture with text in it gets processed, and the text in the picture is [...] texts, pen computing, developing technologies for assisting the visually impaired, making electronic images of hard copies searchable, and defeating or evaluating the robustness of CAPTCHA.

Fig. 1: Functioning of OCR [2]

II. OCR PROCEDURE AND PROCESSING
To improve the probability of successful processing of an image, the input image is often 'pre-processed'; it may be de-skewed or despeckled.
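As an illustration of the despeckling step mentioned above, the sketch below applies a 3×3 median filter to a small binary image stored as nested lists. The excerpt does not say which despeckling method the paper uses, so the median filter, the function name `despeckle`, and the sample image are assumptions chosen for illustration.

```python
from statistics import median


def despeckle(image):
    """Return a copy of `image` with a 3x3 median filter applied.

    `image` is a list of rows of 0/1 pixel values. Border pixels are
    left unchanged to keep the sketch short.
    """
    height, width = len(image), len(image[0])
    cleaned = [row[:] for row in image]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            window = [image[rr][cc]
                      for rr in (r - 1, r, r + 1)
                      for cc in (c - 1, c, c + 1)]
            cleaned[r][c] = median(window)   # isolated specks are outvoted
    return cleaned


# A blank 5x5 page with one isolated speck in the middle (illustrative).
noisy = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
cleaned = despeckle(noisy)
```

A single dark pixel surrounded by background is replaced by the background value, while larger connected strokes mostly survive; thin strokes can be eroded, so the window size is a trade-off.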

X-Rayed: PDF Is the Standard for Exchanging Documents, Because PDF Files Look the Same on

WORKSHOP: PDF files (image © alphaspirit, 123RF)

Processing PDF files and making them searchable: X-Rayed
Daniel Tibi, Christoph Langner, Hans-Georg Eßer

PDF is the standard for exchanging documents, because PDF files look the same on every computer. Linux offers numerous tools that let you exploit every capability of this file format.

Documents of all kinds, from invoices and user manuals to books and academic papers, are today sent, distributed, and used digitally, preferably in the platform-independent PDF format. Searchable documents make it easier to find a particular passage in a file quickly, and metadata provides additional information. There are also numerous ways to edit PDF documents: as needed, you can remove pages, [...] in a printed text, highlight passages, or add annotations.

Text recognition
To exploit the capabilities of the PDF format fully, PDF files should be searchable. You can then, for example, comb through several documents at once for particular words and use the PDF viewer's search function to find the right place within a file quickly. PDF files that you create with LaTeX or LibreOffice are usually searchable already. The situation is different [...] to which you still have to add a text layer by means of text recognition. The OCR engine Tesseract [1] is a good choice of text recognition program for Linux. Most distributions carry the program in their package repositories:
• On OpenSuse, install tesseract-ocr and one of the language packages, e.g. tesseract-ocr-traineddata-german. (OpenSuse sets up the package for English automatically.)
• On Ubuntu and Linux Mint, select tesseract-ocr and a language package such as tesseract-ocr-deu.
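Once Tesseract and a language package are installed, adding a text layer to a scanned page comes down to one command-line call. The sketch below builds that call in Python and runs it only when both the `tesseract` binary and the input image are present. The helper names and the file names `scan.png` and `scan_searchable` are hypothetical; the argument order (input image, output base name, `-l` language, `pdf` output config) follows Tesseract's command-line usage.

```python
import os
import shutil
import subprocess


def tesseract_pdf_command(image_path, output_base, lang="deu"):
    """Build the Tesseract call that writes <output_base>.pdf with a text layer."""
    return ["tesseract", image_path, output_base, "-l", lang, "pdf"]


def run_if_available(cmd):
    """Run the command only if Tesseract is installed and the input exists."""
    if shutil.which(cmd[0]) is None or not os.path.exists(cmd[1]):
        return None
    return subprocess.run(cmd, check=False).returncode


cmd = tesseract_pdf_command("scan.png", "scan_searchable")
print(" ".join(cmd))
run_if_available(cmd)
```

The language code must match an installed language package; `deu` corresponds to the German package named above. Tesseract reads images rather than PDF files, so pages of an existing scanned PDF have to be exported as images first.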

Igalia Desktop Summit, Berlin, Aug 2011

Igalia
Desktop Summit, Berlin, Aug 2011
Juan José Sánchez Penas | [email protected] | www.igalia.com

About Igalia
● Open source consultancy founded in 2001
● Privately owned (independent), flat internal structure
● Headquarters: north west of Spain (A Coruña, Galicia)
● ~45 open source developers from many countries, working from different locations
● What we do:
  ● Development, consultancy, training, ...
  ● We offer our upstream expertise to help others building platforms, products and solutions

What we do
● Areas/Teams: Kernel/OS, multimedia, graphics, browsers, compilers, accessibility, ...
● Platforms/Technologies: GNOME, WebKit, MeeGo, Freedesktop.org, Qt, ...
● Experience:
  ● Many projects for relevant international companies
  ● Related to platform, middleware and app development
  ● Creation of and contribution to upstream components

Main affiliations
● Members of GNOME Foundation's Advisory Board (2007)
● Patrons of FSF (2011)
● Members of Linux Foundation (2011)

Events
● WebKitGTK+ Hackfest, A Coruña, 2009, 2010 and 2011
● GUADEC Hispana, A Coruña, 2005 and 2010
● GUADEMY, A Coruña, 2007
● GTK+ Hackfest, A Coruña, October 2010
● ATK Hackfest, A Coruña, May 2011

WebKit
● Key for integration of web technologies in the desktop
● Stable

Free Technologies for Translation and Their Evaluation

FACULTY OF HUMAN AND SOCIAL SCIENCES
DEPARTMENT OF TRANSLATION AND COMMUNICATION

Free technologies for translation and their evaluation (Tecnologías libres para la traducción y su evaluación)
Presented by: Silvia Andrea Flórez Giraldo
Supervised by: Dr. Amparo Alcina Caudet
Universitat Jaume I, Castellón de la Plana, December 2012

ACKNOWLEDGEMENTS
I would especially like to thank Dr. Amparo Alcina, the supervisor of this thesis, first for welcoming me into the Tecnoloc master's programme and the TecnoLeTTra research group, and then for encouraging me to continue my research as a doctoral project. Her suggestions and comments were fundamental to the development of this thesis.

I also thank Dr. Gabriel Quiroz, who as my professor during my final year of the Licenciatura en Traducción at the Universidad de Antioquia (Medellín, Colombia) awakened my interest in computing applied to translation. Likewise, I thank my Computer-Assisted Translation students at the same university for taking an interest in free software and for motivating me to look for alternative tools that we could use in class without depending on demo versions or resorting to piracy.

To my colleague Pedro, who shares my interest in computing applied to translation and in free software, I am grateful for the opportunity to put theory into professional practice over all these years.

I would like to thank Esperanza, Anna, Verónica and Ewelina, my companions in adventure at the UJI, for being my support group and for always being there to listen to me in the most difficult moments. My sincerest thanks also go to María for being the voice of encouragement and good sense that I needed to hear in order to keep going and bring this project to a successful conclusion.

Gradu04243.Pdf

From Paper Form to Data Model (Paperilomakkeesta tietomalliin)
Kalle Malin
University of Tampere, Department of Computer Sciences, Computer Science
Master's thesis (Pro gradu), 61 pages, 3 appendix pages
Supervisor: Erkki Mäkinen
May 2010

This study examines, at a general level, the overall process involved in digitizing paper forms. The process is explored by looking at the functions and devices of its different parts from the viewpoint of the requirements of the complete system. The examination starts with the scanning of paper forms and ends with storing the collected data in a data model. In addition, a look is taken at the off-the-shelf solutions on the market that include the functions essential to the process.

Keywords and phrases: form, scanning, form structure, form model, OCR, OFR, data model.

Abbreviations
ADRT = Adaptive Document Recognition Technology
API = Application Programming Interface
BAG = Block Adjacency Graph
DIR = Document Image Recognition
dpi = Dots Per Inch
ICR = Intelligent Character Recognition
IFPS = Intelligent Forms Processing System
IR = Information Retrieval
IRM = Image and Records Management
IWR = Intelligent Word Recognition
NAS = Network Attached Storage
OCR = Optical Character Recognition
OFR = Optical Form Recognition
OHR = Optical Handwriting Recognition
OMR = Optical Mark Recognition
PDF = Portable Document Format
SAN = Storage Area Networks
SDK = Software Development Kit
SLM

Report on the Galician Translation of the GNOME 3.0 Environment

REPORT ON THE GALICIAN TRANSLATION OF THE GNOME 3.0 ENVIRONMENT
APRIL 2011
Oficina de Software Libre da USC
www.usc.es/osl
[email protected]

DOCUMENT LICENSE
This document may be used, modified and redistributed under the terms of one of the following licenses, at your choice:

GNU Free Documentation License 1.3
Copyright (C) 2009 Oficina de Software Libre da USC. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, version 1.3 or, at your option, any later version published by the Free Software Foundation; with no invariant sections, no front-cover texts and no back-cover texts. The full text of the license can be found at: http://www.gnu.org/copyleft/fdl.html

Creative Commons Attribution-ShareAlike 3.0
Copyright (C) 2009 Oficina de Software Libre da USC. You are free to:
• Copy, distribute and publicly communicate the work
• Make derivative works
Under the following conditions:
• Attribution. You must credit the work in the manner specified by the author or licensor (but not in a way that suggests that they endorse you or your use of the work).
• Share alike. If you transform or modify this work to create a derivative work, you may distribute the resulting work only under the same license, a similar one, or a compatible one.
The full text of the license can be found at: http://creativecommons.org/licenses/by-sa/3.0/es/deed.gl

TABLE OF CONTENTS
Document license

Event Extraction from 19th-Century Uruguayan Print Press, by Pablo Anzorena, Manuel Laguarda, Bruno Olivera

UNIVERSIDAD DE LA REPÚBLICA
Event extraction from 19th-century Uruguayan print press (Extracción de eventos en prensa escrita Uruguaya del siglo XIX)
by Pablo Anzorena, Manuel Laguarda, Bruno Olivera
Supervisor: Regina Motz
Degree project report submitted to the examining committee as a graduation requirement for the degree in Computer Engineering (Ingeniería en Computación) at the Facultad de Ingeniería

1. Abstract
This project presents the design and implementation of a system that extracts events from 19th-century Uruguayan newspapers digitized as images and generates clusters of events grouped by semantic similarity. The proposed solution is divided into four modules: a preprocessing module consisting of the OCR and a text corrector; an event extraction module implemented in Python and using Freeling¹; an event clustering module implemented in Python using word embeddings; and finally a cluster labeling module, also written in Python. Because of the amount of noise in the data from old newspapers, the solution was evaluated on present-day digital press data. Different measures were evaluated throughout the process. Event extraction achieved a precision of 56% and a recall of 70%. For the clustering module, the Silhouette Coefficient, purity and entropy were evaluated, giving 0.01, 0.57 and 1.41 respectively. Finally, the clusters were labeled using the sections of present-day newspapers as labels, and the labeling was evaluated.

¹ http://nlp.lsi.upc.edu/freeling/demo/demo.php
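For readers unfamiliar with the two extraction measures reported in this abstract, the sketch below shows how precision and recall are computed from a set of predicted events and a gold-standard set. The excerpt does not describe how the report matches a predicted event to a gold one, so exact set membership, the function name `precision_recall`, and the toy event identifiers are assumptions made purely for illustration; the numbers are not taken from the report.

```python
def precision_recall(predicted, gold):
    """Precision = correct / predicted; recall = correct / gold."""
    predicted, gold = set(predicted), set(gold)
    correct = len(predicted & gold)              # events found and in the gold set
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


# Toy data: four gold events, three predictions, two of them correct.
gold_events = {"e1", "e2", "e3", "e4"}
predicted_events = {"e1", "e2", "e5"}

p, r = precision_recall(predicted_events, gold_events)
print(f"precision={p:.2f} recall={r:.2f}")       # prints: precision=0.67 recall=0.50
```

A recall higher than precision, as in the reported 70% versus 56%, means the extractor finds most of the true events but also emits a fair number of spurious ones.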

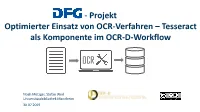

Tesseract as a Component in the OCR-D Workflow

Project "Optimized use of OCR methods: Tesseract as a component in the OCR-D workflow" (Optimierter Einsatz von OCR-Verfahren – Tesseract als Komponente im OCR-D-Workflow)
Noah Metzger, Stefan Weil
Universitätsbibliothek Mannheim
30.07.2019 (slide footers dated 28.03.2019)

Process chain: research data from digitized material
[Figure: three-stage pipeline. (1) Digitization / preprocessing: books, generation of digital source formats. (2) Text recognition (OCR): generation of the digital contents. (3) Structure parsing: structuring of the digital contents (data extraction).]

OCR software (overview)
Legend: bold = used in libraries
• Commercial software: ABBYY FineReader, BIT-Alpha, Readiris, OmniPage, …, Adobe Acrobat, CorelDraw, Microsoft OneNote, …
• Free software: Tesseract, OCRopus / Kraken / Calamari, CuneiForm, …
• Cloud OCR: ABBYY Cloud OCR, Google Cloud Vision, Microsoft Azure Computer Vision, OCR.space Online OCR, …

Tesseract OCR
• Open source
• Complete "all-in-1" solution
• More than 100 languages / more than 30 scripts
• Reads images in all common formats (not PDF!)
• Produces text, PDF, hOCR, ALTO, TSV
• Large, worldwide user community
• Technologically up to date (text recognition with a neural network)
• Active further development, among others in the DFG project OCR-D

Tesseract at the UB Mannheim
• Used in the DFG project "Aktienführer": https://digi.bib.uni-mannheim.de/aktienfuehrer/
• Full texts for the Deutscher Reichsanzeiger and its predecessors: https://digi.bib.uni-mannheim.de/periodika/reichsanzeiger
• DFG project "OCR-D" (http://www.ocr-d.de/), module project "Optimized use of OCR methods: Tesseract as a component in the OCR-D workflow": interfaces, stability, performance

Integral Estimation in Quantum Physics

INTEGRAL ESTIMATION IN QUANTUM PHYSICS
by Jane Doe

A dissertation submitted to the faculty of The University of Utah in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Mathematical Physics

Department of Mathematics
The University of Utah
May 2016

Copyright © Jane Doe 2016
All Rights Reserved

The University of Utah Graduate School
STATEMENT OF DISSERTATION APPROVAL

The dissertation of Jane Doe has been approved by the following supervisory committee members:
Cornelius Lánczos, Chair(s), date approved 17 Feb 2016
Hans Bethe, Member, date approved 17 Feb 2016
Niels Bohr, Member, date approved 17 Feb 2016
Max Born, Member, date approved 17 Feb 2016
Paul A. M. Dirac, Member, date approved 17 Feb 2016
and by Petrus Marcus Aurelius Featherstone-Hough, Chair/Dean of the Department/College/School of Mathematics, and by Alice B. Toklas, Dean of The Graduate School.

ABSTRACT
Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah.

PaperPort 14 Getting Started Guide Version: 14.7

PaperPort 14 Getting Started Guide
Version: 14.7
Date: 2019-09-01

Table of Contents
Legal notices
Welcome to PaperPort
    Accompanying programs
    Install PaperPort
        Activate PaperPort
        Registration
    Learning PaperPort
    Minimum system requirements
    Key features
About PaperPort
    The PaperPort desktop