Adaptive Observer Design for Parabolic Partial Differential Equations

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

On the Use of Boundary Integral Equations and Linear Operators in Room Acoustics

Guimarães, Portugal, paper ID: 169, p. 1. On the Use of Boundary Integral Equations and Linear Operators in Room Acoustics. D. Alarcão and J. L. Bento Coelho, CAPS – Instituto Superior Técnico, Av. Rovisco Pais, P-1049-001 Lisbon, Portugal, [email protected]. RESUMO: This article introduces some basic concepts of integral equations and shows their application to determining the propagation of acoustic energy in enclosed spaces. It is shown that the resulting integral equations can be formulated in the language of linear operators, which yields a simplified notation in which the algebraic properties of the equations governing energy propagation are more easily characterized. Some general methods for solving integral equations are presented, such as approximate methods and finite vector basis methods. The definitions needed to describe acoustic energy fields, which serve as the starting point for applying the techniques presented, are also given. ABSTRACT: This paper introduces some basic concepts of boundary integral equations and their application to determining the propagation of sound energy inside enclosures. Linear operators are shown to provide a simplified notation and to emphasize the algebraic properties of the resulting integral equations. Some general methods of solving linear operator and integral equations are reviewed and discussed, such as approximation methods and finite basis methods. In addition, some of the definitions needed to describe acoustic energy fields when applying these techniques to room acoustics prediction are presented. 1. INTRODUCTION Energy-based methods offer an interesting and valid mid- and high-frequency alternative to classical predictive methods such as FEM, BEM and others.

Current Affairs Quiz – August, September & October for IBPS Exams

Current Affairs Quiz – August, September & October for IBPS Exams. August - Current Affairs Quiz: Q.1) The Rajya Sabha passed the Constitution _____ Bill, 2017 with amendments for setting up of a National Commission for Backward Classes, was passed after dropping Clause 3. a) 121st b) 122nd c) 123rd d) 124th e) 125th Q.2) From which month of next year onwards government has ordered state-run oil companies to raise subsidised cooking gas, LPG, prices by four rupees per cylinder every month to eliminate all the subsidies? a) January b) February c) March d) April e) May Q.3) Who will inaugurate the two-day Conclave of Tax Officers "Rajaswa Gyansangam" scheduled to be held on 1st and 2nd September, 2017 in New Delhi? a) Arun Jaitley b) Narendra Modi c) Rajnath Singh d) Nitin Gadkari e) Narendra Singh Tomar Q.4) The Executive Committee of National Mission for Clean Ganga (4th meeting) approved seven projects worth Rs _____ crore in the sector of Q.8) Who came up with a spirited effort to beat Florian Kaczur of Hungary and finish second in the Czech International Open Chess tournament at Pardubice in Czech Republic? a) Humpy Koneru b) Abhijeet Gupta c) Vishwanathan Anand d) Harika Dronavalli e) Tania Sachdev Q.9) Which country will host 2024 summer Olympics? a) Japan b) Australia c) India d) France e) USA Q.10) Who beats Ryan Harrison to claim fourth ATP Atlanta Open title, he has reached the final in seven of eight editions of the tournament, added a fourth title to those he won in 2013, 2014 and 2015? a) Roger Federer b) Nick Kyrgios c) Andy Murray d) John Isner e) Kevin Anderson Q.11) Who was the youngest of the famous seven 'Dagar Bandhus' and had dedicated his life to keeping the Dhrupad tradition alive, died recently.

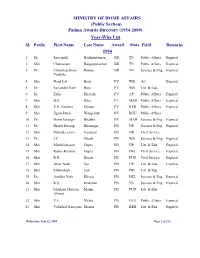

(Public Section) Padma Awards Directory (1954-2009) Year-Wise List

MINISTRY OF HOME AFFAIRS (Public Section) Padma Awards Directory (1954-2009) Year-Wise List (Wednesday, July 22, 2009)

1954

| Sl. | Prefix | First Name | Last Name | Award | State | Field | Remarks |
|---|---|---|---|---|---|---|---|
| 1 | Dr. | Sarvapalli | Radhakrishnan | BR | TN | Public Affairs | Expired |
| 2 | Shri | Chakravarti | Rajagopalachari | BR | TN | Public Affairs | Expired |
| 3 | Dr. | Chandrasekhara Venkata | Raman | BR | TN | Science & Eng. | Expired |
| 4 | Shri | Nand Lal | Bose | PV | WB | Art | Expired |
| 5 | Dr. | Satyendra Nath | Bose | PV | WB | Litt. & Edu. | |
| 6 | Dr. | Zakir | Hussain | PV | AP | Public Affairs | Expired |
| 7 | Shri | B.G. | Kher | PV | MAH | Public Affairs | Expired |
| 8 | Shri | V.K. Krishna | Menon | PV | KER | Public Affairs | Expired |
| 9 | Shri | Jigme Dorji | Wangchuk | PV | BHU | Public Affairs | |
| 10 | Dr. | Homi Jehangir | Bhabha | PB | MAH | Science & Eng. | Expired |
| 11 | Dr. | Shanti Swarup | Bhatnagar | PB | UP | Science & Eng. | Expired |
| 12 | Shri | Mahadeva Iyer | Ganapati | PB | OR | Civil Service | |
| 13 | Dr. | J.C. | Ghosh | PB | WB | Science & Eng. | Expired |
| 14 | Shri | Maithilisharan | Gupta | PB | UP | Litt. & Edu. | Expired |
| 15 | Shri | Radha Krishan | Gupta | PB | DEL | Civil Service | Expired |
| 16 | Shri | R.R. | Handa | PB | PUN | Civil Service | Expired |
| 17 | Shri | Amar Nath | Jha | PB | UP | Litt. & Edu. | Expired |
| 18 | Shri | Malihabadi | Josh | PB | DEL | Litt. & Edu. | |
| 19 | Dr. | Ajudhia Nath | Khosla | PB | DEL | Science & Eng. | Expired |
| 20 | Shri | K.S. | Krishnan | PB | TN | Science & Eng. | Expired |
| 21 | Shri | Moulana Hussain Ahmed | Madni | PB | PUN | Litt. & Edu. | |
| 22 | Shri | V.L. | Mehta | PB | GUJ | Public Affairs | Expired |
| 23 | Shri | Vallathol Narayana | Menon | PB | KER | Litt. & Edu. | Expired |
| 24 | Dr. | | | | | | |

Convex Programming-Based Phase Retrieval: Theory and Applications

Convex programming-based phase retrieval: Theory and applications. Thesis by Kishore Jaganathan. In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. CALIFORNIA INSTITUTE OF TECHNOLOGY, Pasadena, California, 2016. Defended May 16, 2016. © 2016 Kishore Jaganathan, All Rights Reserved. To my family and friends. En vazhi thani vazhi (my way is a unique way). - Rajnikanth. ACKNOWLEDGEMENTS Firstly, I would like to express my sincere gratitude to my advisor Prof. Babak Hassibi. I could not have imagined having a better advisor and mentor for my Ph.D. studies. His immense knowledge, guidance, kindness and support over the years have played a crucial role in making this work possible. My understanding of many topics, including convex optimization, signal processing and entropy vectors, has significantly increased because of him. His exceptional problem solving abilities, teaching qualities and deep understanding of a wide variety of subjects have inspired me a lot. Furthermore, the intellectual freedom he offered throughout the course of my graduate studies helped me pursue my passion and grow as a research scientist. Besides my advisor, I am also extremely indebted to Prof. Yonina C. Eldar. I have been privileged to have had the opportunity to collaborate with her. Her vision and ideas have played a very important role in shaping this work. Her vast knowledge, attention to detail, work ethic and energy have influenced me significantly. Additionally, I would like to thank her for providing me the opportunity to contribute to a book chapter on phase retrieval. I would also like to thank Prof.

Partial Differential Equations

CALENDAR OF AMS MEETINGS. THIS CALENDAR lists all meetings which have been approved by the Council prior to the date this issue of the Notices was sent to press. The summer and annual meetings are joint meetings of the Mathematical Association of America and the American Mathematical Society. The meeting dates which fall rather far in the future are subject to change; this is particularly true of meetings to which no numbers have yet been assigned. Programs of the meetings will appear in the issues indicated below. First and second announcements of the meetings will have appeared in earlier issues. ABSTRACTS OF PAPERS presented at a meeting of the Society are published in the journal Abstracts of papers presented to the American Mathematical Society in the issue corresponding to that of the Notices which contains the program of the meeting. Abstracts should be submitted on special forms which are available in many departments of mathematics and from the office of the Society in Providence. Abstracts of papers to be presented at the meeting must be received at the headquarters of the Society in Providence, Rhode Island, on or before the deadline given below for the meeting. Note that the deadline for abstracts submitted for consideration for presentation at special sessions is usually three weeks earlier than that specified below. For additional information consult the meeting announcement and the list of organizers of special sessions.

| Meeting number | Date | Place | Abstract deadline | Issue |
|---|---|---|---|---|
| 778 | June 20-21, 1980 | Ellensburg, Washington | April 21 | June 1980 |
| 779 | August 18-22, 1980 (84th Summer Meeting) | Ann Arbor, Michigan | June 3 | August 1980 |
| | October 17-18, 1980 | Storrs, Connecticut | | |
| | October 31-November 1, 1980 | Kenosha, Wisconsin | | |
| | January 7-11, 1981 (87th Annual Meeting) | San Francisco, California | | |
| | January 13-17, 1982 (88th Annual Meeting) | Cincinnati, Ohio | | |

Notices DEADLINES

| Issue | News | Advertising |
|---|---|---|
| June 1980 | April 18 | April 29 |
| August 1980 | June 3 | June 18 |

Deadlines for announcements intended for the Special Meetings section are the same as for News.

AMATH 731: Applied Functional Analysis Lecture Notes

AMATH 731: Applied Functional Analysis Lecture Notes. Sumeet Khatri, November 24, 2014. Table of Contents: List of Tables; List of Theorems; List of Definitions; Preface; 1 Review of Real Analysis (1.1 Convergence and Cauchy Sequences; 1.2 Convergence of Sequences and Cauchy Sequences); 2 Measure Theory (2.1 The Concept of Measurability; 2.1.1 Simple Functions; 2.2 Elementary Properties of Measures; 2.2.1 Arithmetic in $[0, \infty]$; 2.3 Integration of Positive Functions; 2.4 Integration of Complex Functions; 2.5 Sets of Measure Zero; 2.6 Positive Borel Measures; 2.6.1 Vector Spaces and Topological Preliminaries; 2.6.2 The Riesz Representation Theorem; 2.6.3 Regularity Properties of Borel Measures; 2.6.4 Lebesgue Measure; 2.6.5 Continuity Properties of Measurable Functions).

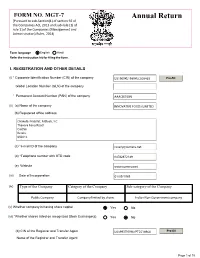

IFL Annual Return 2018-19

FORM NO. MGT-7 Annual Return [Pursuant to sub-section (1) of section 92 of the Companies Act, 2013 and sub-rule (1) of rule 11 of the Companies (Management and Administration) Rules, 2014]. Form language: English / Hindi. Refer the instruction kit for filing the form. I. REGISTRATION AND OTHER DETAILS (i) * Corporate Identification Number (CIN) of the company; Global Location Number (GLN) of the company; * Permanent Account Number (PAN) of the company (ii) (a) Name of the company (b) Registered office address (c) * e-mail ID of the company (d) * Telephone number with STD code (e) Website (iii) Date of Incorporation (iv) Type of the Company; Category of the Company; Sub-category of the Company (v) Whether company is having share capital: Yes / No (vi) * Whether shares listed on recognized Stock Exchange(s): Yes / No (b) CIN of the Registrar and Transfer Agent; Name of the Registrar and Transfer Agent; Registered office address of the Registrar and Transfer Agents (vii) * Financial year: From date 01/04/2018 (DD/MM/YYYY), To date 31/03/2019 (DD/MM/YYYY) (viii) * Whether Annual general meeting (AGM) held: Yes / No (a) If yes, date of AGM: 24/09/2019 (b) Due date of AGM: 30/09/2019 (c) Whether any extension for AGM granted: Yes / No II. PRINCIPAL BUSINESS ACTIVITIES OF THE COMPANY * Number of business activities: 1. Columns: S.No; Main Activity group code; Description of Main Activity group; Business Activity Code; Description of Business Activity; % of turnover of the company. Entry: I; I2. III. PARTICULARS OF HOLDING, SUBSIDIARY AND ASSOCIATE COMPANIES (INCLUDING JOINT VENTURES) * No.

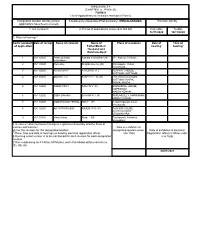

(CHAPTER V , PARA 25) FORM 9 List of Applications for Inclusion

ANNEXURE 5.8 (CHAPTER V , PARA 25) FORM 9 List of Applications for inclusion received in Form 6 Designated location identity (where Constituency (Assembly/£Parliamentary): IRINJALAKKUDA Revision identity applications have been received) 1. List number@ 2. Period of applications (covered in this list) From date To date 16/11/2020 16/11/2020 3. Place of hearing * Serial number$ Date of receipt Name of claimant Name of Place of residence Date of Time of of application Father/Mother/ hearing* hearing* Husband and (Relationship)# 1 16/11/2020 Pradeep Erattu Kamala Velayudhan (M) 271, Kattoor, Thrissur, , velayudhan 2 16/11/2020 Elwin Roy . Shajitha Roy roy (M) Chittilappilly , Kattur, Irinjalakuda, , 3 16/11/2020 AARIA MARY A G DAVID (F) ALAPPATT HOUSE , KATTOOR, KATTOOR, , 4 16/11/2020 SMITHA T R BASHEER T M (H) THATHARAPARAMBIL , KATTUNGACHIRA, IRINJALAKUDA, , 5 16/11/2020 ROBIN PAILY PAILY E V (F) ELENJIKKAL HOUSE, MAPRANAM, MADAYIKONAM, , 6 16/11/2020 SIBIN SHAJAN SHAJAN P J (F) PERUMBULLY, MAPRANAM, MADAYIKONAM, , 7 16/11/2020 GODWIN MALIYEKKAL TONY (F) 0, Manthripuram south, Irinjalakuda, , 8 16/11/2020 ASHWITA RAJESH RAJESH P G (F) PACHERI HOUSE, NADAVARAMBA, VELOOKKARA, , 9 16/11/2020 Vinay Balraj Bindu (M) Thathamath, Avittathur , Velookkara, , £ In case of Union territories having no Legislative Assembly and the State of Jammu and Kashmir Date of exhibition at @ For this revision for this designated location designated location under Date of exhibition at Electoral * Place, time and date of hearings as fixed by electoral registration officer rule 15(b) Registration Officer¶s Office under $ Running serial number is to be maintained for each revision for each designated rule 16(b) location # Give relationship as F-Father, M=Mother, and H=Husband within brackets i.e. -

Patrika-March 2013.Pmd

No. 57, March 2013. Newsletter of the Indian Academy of Sciences. Seventy-Eighth Annual Meeting, Dehra Dun, 2 – 4 November 2012. The 78th Annual Meeting of the Academy, hosted by the Wadia Institute of Himalayan Geology in Dehra Dun over November 2–4, 2012, saw a return to this venue after nineteen years, the previous Annual Meeting in this city having been in 1993. Thanks to its location in the Himalayan foothills, and the proximity to many places of historic interest, the attendance was very good – 135 Fellows, 11 Associates and 47 invited teachers. The packed three day programme included, apart from the opening Presidential address, two Special Lectures, two evening Public Lectures, two mini Symposia, and presentations by 19 Fellows and Associates. The Presidential address by Professor A. K. Sood, the concluding one for the triennium 2010-2012, carried forward

Inside: 1. Seventy-Eighth Annual Meeting, Dehra Dun, 2 – 4 November 2012; 2. Twenty-Fourth Mid-Year Meeting, 5 – 6 July 2013; 3. 2013 Elections; 4. Special Issues of Journals; 5. Discussion Meetings; 6. 'Women in Science' Panel Programmes; 7. STI Policy – Brainstorming Session; 8. Raman Professor; 9. Summer Research Fellowship Programme.

Curriculum Vitae of Thomas Kailath

Curriculum Vitae: Thomas Kailath. Hitachi America Professor of Engineering, Emeritus, Information Systems Laboratory, Dept. of Electrical Engineering, Stanford, CA 94305-9510 USA. Tel: +1-650-494-9401. Email: [email protected], [email protected]. Fields of Interest: Information Theory, Communication, Computation, Control, Linear Systems, Statistical Signal Processing, VLSI systems, Semiconductor Manufacturing and Lithography, Probability Theory, Mathematical Statistics, Linear Algebra, Matrix and Operator Theory. Home page: www.stanford.edu/~tkailath. Born in Poona (now Pune), India, June 7, 1935. In the US since 1957; naturalized June 8, 1976. B.E. (Telecom.), College of Engineering, Pune, India, June 1956. S.M. (Elec. Eng.), Massachusetts Institute of Technology, June 1959; Thesis: Sampling Models for Time-Variant Filters. Sc.D. (Elec. Eng.), Massachusetts Institute of Technology, June 1961; Thesis: Communication via Randomly Varying Channels. Positions: Sep 1957-Jun 1961: Research Assistant, Research Laboratory for Electronics, MIT. Oct 1961-Dec 1962: Communications Research Group, Jet Propulsion Labs, Pasadena, CA; he also held a part-time teaching appointment at Caltech. Jan 1963-Aug 1964: Acting Associate Professor of Elec. Eng., Stanford University (on leave at UC Berkeley, Jan-Aug 1963). Sep 1964-Jan 1968: Associate Professor of Elec. Eng. Jan 1968-Feb 1988: Full Professor of Elec. Eng. Feb 1988-June 2001: First holder of the Hitachi America Professorship in Engineering. July 2001- : Hitachi America Professorship in Engineering, Emeritus; recalled to active duty to continue his research and writing activities. He has also held shorter-term appointments at several institutions around the world: UC Berkeley (1963), Indian Statistical Institute (1966), Bell Labs (1969), Indian Institute of Science (1969-70, 1976, 1993, 1994, 2000, 2002), Cambridge University (1977), K.

On the Separable Quotient Problem for Banach Spaces (arXiv:1709.09646v1 [math.FA], 27 Sep 2017)

ON THE SEPARABLE QUOTIENT PROBLEM FOR BANACH SPACES. J. C. Ferrando, J. Kąkol, M. López-Pellicer and W. Śliwa. To the memory of our friend Professor Paweł Domański. Abstract. While the classic separable quotient problem remains open, we survey general results related to this problem and examine the existence of a particular infinite-dimensional separable quotient in some Banach spaces of vector-valued functions, linear operators and vector measures. Most of the results presented are consequences of known facts, some of them relating to the presence of complemented copies of the classic sequence spaces $c_0$ and $\ell_p$, for $1 \le p \le \infty$. Recent results of Argyros, Dodos, Kanellopoulos [1] and Śliwa [66] are also provided. This makes our presentation supplementary to a previous survey (1997) due to Mujica. 1. Introduction. One of the unsolved problems of Functional Analysis (posed by S. Mazur in 1932) asks: Problem 1. Does any infinite-dimensional Banach space have a separable (infinite-dimensional) quotient? An easy application of the open mapping theorem shows that an infinite-dimensional Banach space $X$ has a separable quotient if and only if $X$ is mapped onto a separable Banach space under a continuous linear map. It seems that the first comments about Problem 1 appear in [46] and [55]. It is already well known that all reflexive, or even all infinite-dimensional weakly compactly generated Banach spaces (WCG for short), have separable quotients. In [38, Theorem IV.1(i)] Johnson and Rosenthal proved that every infinite-dimensional separable Banach space admits a quotient with a Schauder basis.

Continuity of Convolution of Test Functions on Lie Groups

Canad. J. Math. Vol. 66 (1), 2014, pp. 102–140, http://dx.doi.org/10.4153/CJM-2012-035-6, © Canadian Mathematical Society 2012. Continuity of Convolution of Test Functions on Lie Groups. Lidia Birth and Helge Glöckner. Abstract. For a Lie group $G$, we show that the map $C_c^\infty(G) \times C_c^\infty(G) \to C_c^\infty(G)$, $(\gamma, \eta) \mapsto \gamma * \eta$, taking a pair of test functions to their convolution, is continuous if and only if $G$ is $\sigma$-compact. More generally, consider $r, s, t \in \mathbb{N}_0 \cup \{\infty\}$ with $t \le r + s$, locally convex spaces $E_1$, $E_2$ and a continuous bilinear map $b \colon E_1 \times E_2 \to F$ to a complete locally convex space $F$. Let $\beta \colon C_c^r(G, E_1) \times C_c^s(G, E_2) \to C_c^t(G, F)$, $(\gamma, \eta) \mapsto \gamma *_b \eta$ be the associated convolution map. The main result is a characterization of those $(G, r, s, t, b)$ for which $\beta$ is continuous. Convolution of compactly supported continuous functions on a locally compact group is also discussed, as well as convolution of compactly supported $L^1$-functions and convolution of compactly supported Radon measures. 1 Introduction and Statement of Results. It has been known since the beginnings of distribution theory that the bilinear convolution map $\beta \colon C_c^\infty(\mathbb{R}^n) \times C_c^\infty(\mathbb{R}^n) \to C_c^\infty(\mathbb{R}^n)$, $(\gamma, \eta) \mapsto \gamma * \eta$ (and even convolution $C^\infty(\mathbb{R}^n)' \times C_c^\infty(\mathbb{R}^n) \to C_c^\infty(\mathbb{R}^n)$) is hypocontinuous [39, p. 167]. However, a proof for continuity of $\beta$ was published only recently [29, Proposition 2.3].