MCS Planning and Engineering

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Documentation Reference for Avaya Ethernet Routing Switch 4000 Series

Documentation Reference for Avaya Ethernet Routing Switch 4000 Series Release 5.7 NN47205-101 Issue 06.01 October 2013 © 2013 Avaya Inc. BINDING CONTRACT BETWEEN YOU AND AVAYA INC. OR THE APPLICABLE AVAYA AFFILIATE (“AVAYA”). All Rights Reserved. Avaya grants you a license within the scope of the license types Notice described below, with the exception of Heritage Nortel Software, for which the scope of the license is detailed below. Where the order While reasonable efforts have been made to ensure that the documentation does not expressly identify a license type, the information in this document is complete and accurate at the time of applicable license will be a Designated System License. The applicable printing, Avaya assumes no liability for any errors. Avaya reserves the number of licenses and units of capacity for which the license is granted right to make changes and corrections to the information in this will be one (1), unless a different number of licenses or units of capacity document without the obligation to notify any person or organization of is specified in the documentation or other materials available to you. such changes. “Designated Processor” means a single stand-alone computing device. “Server” means a Designated Processor that hosts a software Documentation disclaimer application to be accessed by multiple users. “Documentation” means information published by Avaya in varying mediums which may include product information, operating instructions Licence types and performance specifications that Avaya generally makes available Designated System(s) License (DS). End User may install and use to users of its products. Documentation does not include marketing each copy of the Software only on a number of Designated Processors materials. -

Configuring Security on Avaya Ethernet Routing Switch 4000 Series

Configuring Security on Avaya Ethernet Routing Switch 4000 Series Release 5.7 NN47205-505 Issue 09.01 November 2013 © 2013 Avaya Inc. BINDING CONTRACT BETWEEN YOU AND AVAYA INC. OR THE APPLICABLE AVAYA AFFILIATE (“AVAYA”). All Rights Reserved. Avaya grants you a license within the scope of the license types Notice described below, with the exception of Heritage Nortel Software, for which the scope of the license is detailed below. Where the order While reasonable efforts have been made to ensure that the documentation does not expressly identify a license type, the information in this document is complete and accurate at the time of applicable license will be a Designated System License. The applicable printing, Avaya assumes no liability for any errors. Avaya reserves the number of licenses and units of capacity for which the license is granted right to make changes and corrections to the information in this will be one (1), unless a different number of licenses or units of capacity document without the obligation to notify any person or organization of is specified in the documentation or other materials available to you. such changes. “Designated Processor” means a single stand-alone computing device. “Server” means a Designated Processor that hosts a software Documentation disclaimer application to be accessed by multiple users. “Documentation” means information published by Avaya in varying mediums which may include product information, operating instructions Licence types and performance specifications that Avaya generally makes available Designated System(s) License (DS). End User may install and use to users of its products. Documentation does not include marketing each copy of the Software only on a number of Designated Processors materials. -

Avaya Solutions Platform 4200 Series Solution Description

Avaya Solutions Platform 4200 Series Solution Description Release 4.1.0.1 Issue 1 September 2020 © 2019-2020, Avaya Inc. YOU DO NOT WISH TO ACCEPT THESE TERMS OF USE, YOU All Rights Reserved. MUST NOT ACCESS OR USE THE HOSTED SERVICE OR AUTHORIZE ANYONE TO ACCESS OR USE THE HOSTED Notice SERVICE. While reasonable efforts have been made to ensure that the Licenses information in this document is complete and accurate at the time of printing, Avaya assumes no liability for any errors. Avaya reserves THE SOFTWARE LICENSE TERMS AVAILABLE ON THE AVAYA the right to make changes and corrections to the information in this WEBSITE, HTTPS://SUPPORT.AVAYA.COM/LICENSEINFO, document without the obligation to notify any person or organization UNDER THE LINK “AVAYA SOFTWARE LICENSE TERMS (Avaya of such changes. Products)” OR SUCH SUCCESSOR SITE AS DESIGNATED BY AVAYA, ARE APPLICABLE TO ANYONE WHO DOWNLOADS, Documentation disclaimer USES AND/OR INSTALLS AVAYA SOFTWARE, PURCHASED “Documentation” means information published in varying mediums FROM AVAYA INC., ANY AVAYA AFFILIATE, OR AN AVAYA which may include product information, operating instructions and CHANNEL PARTNER (AS APPLICABLE) UNDER A COMMERCIAL performance specifications that are generally made available to users AGREEMENT WITH AVAYA OR AN AVAYA CHANNEL PARTNER. of products. Documentation does not include marketing materials. UNLESS OTHERWISE AGREED TO BY AVAYA IN WRITING, Avaya shall not be responsible for any modifications, additions, or AVAYA DOES NOT EXTEND THIS LICENSE IF THE SOFTWARE deletions to the original published version of Documentation unless WAS OBTAINED FROM ANYONE OTHER THAN AVAYA, AN AVAYA such modifications, additions, or deletions were performed by or on AFFILIATE OR AN AVAYA CHANNEL PARTNER; AVAYA the express behalf of Avaya. -

Communication Server 1000E Planning and Engineering Avaya Communication Server 1000

Communication Server 1000E Planning and Engineering Avaya Communication Server 1000 7.5 NN43041-220, 05.12 May 2012 © 2012 Avaya Inc. Copyright All Rights Reserved. Except where expressly stated otherwise, no use should be made of materials on this site, the Documentation, Software, or Hardware Notice provided by Avaya. All content on this site, the documentation and the Product provided by Avaya including the selection, arrangement and While reasonable efforts have been made to ensure that the design of the content is owned either by Avaya or its licensors and is information in this document is complete and accurate at the time of protected by copyright and other intellectual property laws including the printing, Avaya assumes no liability for any errors. Avaya reserves the sui generis rights relating to the protection of databases. You may not right to make changes and corrections to the information in this modify, copy, reproduce, republish, upload, post, transmit or distribute document without the obligation to notify any person or organization of in any way any content, in whole or in part, including any code and such changes. software unless expressly authorized by Avaya. Unauthorized reproduction, transmission, dissemination, storage, and or use without Documentation disclaimer the express written consent of Avaya can be a criminal, as well as a “Documentation” means information published by Avaya in varying civil offense under the applicable law. mediums which may include product information, operating instructions Third-party components and performance specifications that Avaya generally makes available to users of its products. Documentation does not include marketing Certain software programs or portions thereof included in the Product materials. -

Avaya Interchange Release 5.4 Administration

Avaya Interchange Release 5.4 Administration 585-313-809 Comcode 700223803 Issue 4 January 2002 Copyright 2002, Avaya Inc. All Rights Reserved, Printed in U.S.A. Notice Every effort was made to ensure that the information in this book was complete and accurate at the time of printing. However, information is subject to change. Your Responsibility for Your System’s Security Toll fraud is the unauthorized use of your telecommunications system by an unauthorized party, for example, persons other than your company’s employees, agents, subcontractors, or persons working on your company’s behalf. Note that there may be a risk of toll fraud associated with your telecommunications system and, if toll fraud occurs, it can result in substantial additional charges for your telecommunications services. You and your system manager are responsible for the security of your system, such as programming and configuring your equipment to prevent unauthorized use. This equipment returns answer-supervision signals on all DID calls forwarded back to the public switched telephone network. Permissible exceptions are: • A call is unanswered • A busy tone is received • A reorder tone is received Industry Canada (IC) Interference Information This digital apparatus does not exceed the Class A limits for radio noise emissions set out in the radio interference regulations of Industry Canada. Le Présent Appareil Nomérique n’émet pas de bruits radioélectriques dépassant les limites applicables aux appareils numériques de la class A préscrites dans le reglement sur le brouillage radioélectrique édicté par le Industrie Canada. Trademarks See the preface of this document. -

Avaya Aura Contact Center Overview and Specification

Avaya Aura® Contact Center Overview and Specification Release 7.1 Issue 07.06 November 2020 © 2015-2020, Avaya Inc. YOU DO NOT WISH TO ACCEPT THESE TERMS OF USE, YOU All Rights Reserved. MUST NOT ACCESS OR USE THE HOSTED SERVICE OR AUTHORIZE ANYONE TO ACCESS OR USE THE HOSTED Notice SERVICE. While reasonable efforts have been made to ensure that the Licenses information in this document is complete and accurate at the time of printing, Avaya assumes no liability for any errors. Avaya reserves THE SOFTWARE LICENSE TERMS AVAILABLE ON THE AVAYA the right to make changes and corrections to the information in this WEBSITE, HTTPS://SUPPORT.AVAYA.COM/LICENSEINFO, document without the obligation to notify any person or organization UNDER THE LINK “AVAYA SOFTWARE LICENSE TERMS (Avaya of such changes. Products)” OR SUCH SUCCESSOR SITE AS DESIGNATED BY AVAYA, ARE APPLICABLE TO ANYONE WHO DOWNLOADS, Documentation disclaimer USES AND/OR INSTALLS AVAYA SOFTWARE, PURCHASED “Documentation” means information published in varying mediums FROM AVAYA INC., ANY AVAYA AFFILIATE, OR AN AVAYA which may include product information, operating instructions and CHANNEL PARTNER (AS APPLICABLE) UNDER A COMMERCIAL performance specifications that are generally made available to users AGREEMENT WITH AVAYA OR AN AVAYA CHANNEL PARTNER. of products. Documentation does not include marketing materials. UNLESS OTHERWISE AGREED TO BY AVAYA IN WRITING, Avaya shall not be responsible for any modifications, additions, or AVAYA DOES NOT EXTEND THIS LICENSE IF THE SOFTWARE deletions to the original published version of Documentation unless WAS OBTAINED FROM ANYONE OTHER THAN AVAYA, AN AVAYA such modifications, additions, or deletions were performed by or on AFFILIATE OR AN AVAYA CHANNEL PARTNER; AVAYA the express behalf of Avaya. -

Configuring Quality of Service on Avaya Ethernet Routing Switch 4000 Series

Configuring Quality of Service on Avaya Ethernet Routing Switch 4000 Series Release 5.7 NN47205-504 Issue 09.01 November 2013 © 2013 Avaya Inc. BINDING CONTRACT BETWEEN YOU AND AVAYA INC. OR THE APPLICABLE AVAYA AFFILIATE (“AVAYA”). All Rights Reserved. Avaya grants you a license within the scope of the license types Notice described below, with the exception of Heritage Nortel Software, for which the scope of the license is detailed below. Where the order While reasonable efforts have been made to ensure that the documentation does not expressly identify a license type, the information in this document is complete and accurate at the time of applicable license will be a Designated System License. The applicable printing, Avaya assumes no liability for any errors. Avaya reserves the number of licenses and units of capacity for which the license is granted right to make changes and corrections to the information in this will be one (1), unless a different number of licenses or units of capacity document without the obligation to notify any person or organization of is specified in the documentation or other materials available to you. such changes. “Designated Processor” means a single stand-alone computing device. “Server” means a Designated Processor that hosts a software Documentation disclaimer application to be accessed by multiple users. “Documentation” means information published by Avaya in varying mediums which may include product information, operating instructions Licence types and performance specifications that Avaya generally makes available Designated System(s) License (DS). End User may install and use to users of its products. Documentation does not include marketing each copy of the Software only on a number of Designated Processors materials. -

Port Mirroring

Configuring System Monitoring on Avaya Ethernet Routing Switch 4000 Series Release 5.7 NN47205-502 Issue 09.02 March 2015 © 2015 Avaya Inc. applicable number of licenses and units of capacity for which the license is granted will be one (1), unless a different number of All Rights Reserved. licenses or units of capacity is specified in the documentation or other Notice materials available to you. “Designated Processor” means a single stand-alone computing device. “Server” means a Designated While reasonable efforts have been made to ensure that the Processor that hosts a software application to be accessed by information in this document is complete and accurate at the time of multiple users. printing, Avaya assumes no liability for any errors. Avaya reserves the right to make changes and corrections to the information in this Licence types document without the obligation to notify any person or organization Designated System(s) License (DS). End User may install and use of such changes. each copy or an Instance of the Software only on a number of Documentation disclaimer Designated Processors up to the number indicated in the order. Avaya may require the Designated Processor(s) to be identified in “Documentation” means information published by Avaya in varying the order by type, serial number, feature key, Instance, location or mediums which may include product information, operating other specific designation, or to be provided by End User to Avaya instructions and performance specifications that Avaya may generally through electronic means established by Avaya specifically for this make available to users of its products and Hosted Services. -

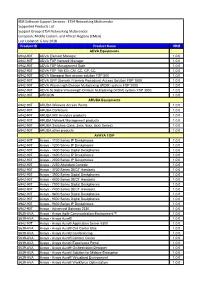

IBM Software Support Services

IBM Software Support Services - ETS4 Networking Multivendor Supported Products List Support Group: ETS4 Networking Multivendor European, Middle Eastern, and African Regions (EMEA) Last Updated: 6 July 2018 Product ID Product Name VRM ADVA Equipments 6942-90T ADVA Element Manager 1.0.0 6942-90T ADVA FSP Network Manager 1.0.0 6942-90T ADVA FSP Management Suite 1.0.0 6942-90T ADVA FSP 150 EG, CM, CC, CP, CC 1.0.0 6942-90T ADVA Managed fiber access solution FSP 500 1.0.0 6942-90T ADVA GFP (Generic Framing Procedure) Access Solution FSP 1500 1.0.0 6942-90T ADVA Wavelength Division Multiplexing (WDM) system FSP 2000 1.0.0 6942-90T ADVA Scalable Wavelength Division Multiplexing (WDM) system FSP 3000 1.0.0 6942-90T ARKOON 1.0.0 ARUBA Equipments 6942-90T ARUBA Wireless Access Points 1.0.0 6942-90T ARUBA Controlers 1.0.0 6942-90T ARUBA Wifi Analytics products 1.0.0 6942-90T ARUBA Network Management products 1.0.0 6942-90T ARUBA Switches (2xxx, 3xxx, 5xxx, 8xxx Series) 1.0.0 6942-90T ARUBA other products 1.0.0 AVAYA TOIP 6942-90T Avaya - 1100 Series IP Deskphones 1.0.0 6942-90T Avaya - 1200 Series IP Deskphones 1.0.0 6942-90T Avaya - 1400 Series Digital Deskphones 1.0.0 6942-90T Avaya - 1600 Series IP Deskphones 1.0.0 6942-90T Avaya - 2000 Series IP Deskphones 1.0.0 6942-90T Avaya - 2250 Attendant Console 1.0.0 6942-90T Avaya - 3700 Series DECT Handsets 1.0.0 6942-90T Avaya - 3900 Series Digital Deskphones 1.0.0 6942-90T Avaya - 4000 Series DECT Handsets 1.0.0 6942-90T Avaya - 7000 Series Digital Deskphones 1.0.0 6942-90T Avaya - 7400 Series DECT -

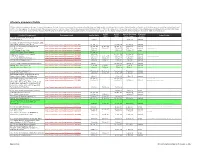

Lifecycle Dates As of 16-Dec-2016

Lifecycle Summary Matrix All future dates are subject to change. In case of discrepancy, the EoS Announcements override any EoS and EoMS dates and SSNs would override EoSPS information. Material codes are listed in order to allow users to search by material code and find the row that applies. The Avaya Lifecycle Policy Document can be found at: https://downloads.avaya.com/css/P8/documents/100081098 An explanation of that policy can be found at: http://www.avaya.com/blogs/archives/2014/04/5-things- you-need-to-know-about-avayas-product-lifecycle.html All dates in the End of Services Parts Support column are tentative unless 'actual' is in the Status of EoSPS column. Entries updated in the last 90 days are shaded green. EoMS EoMS End of Services Status of Product/Component Document Link End of Sale Order Codes (SW) (HW) Parts Support EoSPS 10 Port 3G Enterprise Gateway (by Scopia/Radvision) 1-Jul-10 2-Jul-13 planned 54882-00611, 54882-00614 1000Base-SX Small Form Factor Pluggable GBIC (mini-GBIC, connector type: MT-RJ) https://support.avaya.com/css/P8/documents/100124541 31-Jan-11 31-Jan-14 31-Jan-17 planned AA1419014-E5 1100 Series IP Deskphones UNIStim 5.5 https://support.avaya.com/css/P8/documents/100172675 31-Mar-14 31-Mar-15 31-Mar-17 31-Mar-17 planned NTYS06ACE6*, NTYS06BCE6*, NTYS15AAE6, NTYS15HAE6 1110 IP Deskphone https://support.avaya.com/css/P8/documents/100178274 7-Dec-14 7-Dec-15 7-Dec-17 7-Dec-20 planned NTQ402AA, NTQ402AB, NTQ402BA, NTQ402BB, NTYS02AAE6, NTYS02ABE6, NTYS02BAE6, NTYS02BBE6, NTYS10BA70E6, NTYS11BA70E6 1145B -

Configuration — VLANs, Spanning Tree, and Link Aggregation Avaya Ethernet Routing Switch 5000 Series

Configuration — VLANs, Spanning Tree, and Link Aggregation Avaya Ethernet Routing Switch 5000 Series 6.3 NN47200-502, 07.01 August 2012 © 2012 Avaya Inc. protected by copyright and other intellectual property laws including the sui generis rights relating to the protection of databases. You may not All Rights Reserved. modify, copy, reproduce, republish, upload, post, transmit or distribute in any way any content, in whole or in part, including any code and Notice software. Unauthorized reproduction, transmission, dissemination, storage, and or use without the express written consent of Avaya can While reasonable efforts have been made to ensure that the be a criminal, as well as a civil, offense under the applicable law. information in this document is complete and accurate at the time of printing, Avaya assumes no liability for any errors. Avaya reserves the Third-party components right to make changes and corrections to the information in this document without the obligation to notify any person or organization of Certain software programs or portions thereof included in the Product such changes. may contain software distributed under third party agreements (“Third Party Components”), which may contain terms that expand or limit Documentation disclaimer rights to use certain portions of the Product (“Third Party Terms”). Information regarding distributed Linux OS source code (for those Avaya shall not be responsible for any modifications, additions, or Products that have distributed the Linux OS source code), and deletions to the original published version of this documentation unless identifying the copyright holders of the Third Party Components and the such modifications, additions, or deletions were performed by Avaya. -

Quick Start Configuration for Avaya Ethernet Routing Switch 4000 Series

Quick Start Configuration for Avaya Ethernet Routing Switch 4000 Series Release 5.7 NN47205-104 Issue 01.01 November 2013 © 2013 Avaya Inc. BINDING CONTRACT BETWEEN YOU AND AVAYA INC. OR THE APPLICABLE AVAYA AFFILIATE (“AVAYA”). All Rights Reserved. Avaya grants you a license within the scope of the license types Notice described below, with the exception of Heritage Nortel Software, for which the scope of the license is detailed below. Where the order While reasonable efforts have been made to ensure that the documentation does not expressly identify a license type, the information in this document is complete and accurate at the time of applicable license will be a Designated System License. The applicable printing, Avaya assumes no liability for any errors. Avaya reserves the number of licenses and units of capacity for which the license is granted right to make changes and corrections to the information in this will be one (1), unless a different number of licenses or units of capacity document without the obligation to notify any person or organization of is specified in the documentation or other materials available to you. such changes. “Designated Processor” means a single stand-alone computing device. “Server” means a Designated Processor that hosts a software Documentation disclaimer application to be accessed by multiple users. “Documentation” means information published by Avaya in varying mediums which may include product information, operating instructions Licence types and performance specifications that Avaya generally makes available Designated System(s) License (DS). End User may install and use to users of its products. Documentation does not include marketing each copy of the Software only on a number of Designated Processors materials.