A Music Search Engine Built Upon Audio-Based and Web-Based Similarity Measures

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

WOLFMOTHER in Concerto All’

WOLFMOTHER in concerto all’ALCATRAZ (MI) – 8 maggio A quasi due anni di distanza dall’ultimo concerto italiano, i Wolfmother tornano sulla penisola per presentare il nuovo lavoro in studio Victorious, uscito a febbraio per la Universal Music. L’unico appuntamento italiano delGypsy Caravan Tour è fissato per domenica 8 maggio 2016 all’Alcatraz di Milano. I biglietti sono disponibili sul circuito Ticketone e nei punti vendita Vivaticket. Victorious è il quarto album di studio per la formazione australiana, che quest’anno festeggia i sedici anni di attività costellati da grandi riconoscimenti tra i quali l’ingresso nella UK Music Hall Of Fame e la partecipazione a tutti i maggiori festival mondiali tra i qualiCoachella , Lollapalooza, Reading e Leeds, oltre ad aver aperto concerti delle icone del rock Aerosmith e AC/DC. Il frontman Andrew Stockdale ha iniziato a lavorare all’album lo scorso gennaio nel suo studio di registrazione nel New South Wales, componendo su tutti gli strumenti e utilizzando lo stesso approccio creativo già sperimentato dieci anni fa con l’album d’esordio della band. «All’inizio suonavo chitarra, basso e batteria, poi sottoponevo le idee al resto della band e lavoravamo insieme agli arrangiamenti», ha dicharato il cantante e chitarrista, «Ho pensato che sarebbe stato bello ricominciare a suonare tutto per fissare su un demo le mie idee. È un buon modo di lavorare, perché si riesce a ottenere uno stile ben amalgamato». Oltre a cantare sul nuovo album, Stockdale ha registrato le tracce di chitarra e basso e si è avvalso della collaborazione dei batteristi Josh Freese (Nine Inch Nails, Bruce Springsteen, A Perfect Circle) e Joey Waronker (Beck, Gnarls Barkley, REM), e del pluripremiato produttore Brendan O’Brien (Pearl Jam, Soundgarden, Bruce Springsteen). -

Songs by Title Karaoke Night with the Patman

Songs By Title Karaoke Night with the Patman Title Versions Title Versions 10 Years 3 Libras Wasteland SC Perfect Circle SI 10,000 Maniacs 3 Of Hearts Because The Night SC Love Is Enough SC Candy Everybody Wants DK 30 Seconds To Mars More Than This SC Kill SC These Are The Days SC 311 Trouble Me SC All Mixed Up SC 100 Proof Aged In Soul Don't Tread On Me SC Somebody's Been Sleeping SC Down SC 10CC Love Song SC I'm Not In Love DK You Wouldn't Believe SC Things We Do For Love SC 38 Special 112 Back Where You Belong SI Come See Me SC Caught Up In You SC Dance With Me SC Hold On Loosely AH It's Over Now SC If I'd Been The One SC Only You SC Rockin' Onto The Night SC Peaches And Cream SC Second Chance SC U Already Know SC Teacher, Teacher SC 12 Gauge Wild Eyed Southern Boys SC Dunkie Butt SC 3LW 1910 Fruitgum Co. No More (Baby I'm A Do Right) SC 1, 2, 3 Redlight SC 3T Simon Says DK Anything SC 1975 Tease Me SC The Sound SI 4 Non Blondes 2 Live Crew What's Up DK Doo Wah Diddy SC 4 P.M. Me So Horny SC Lay Down Your Love SC We Want Some Pussy SC Sukiyaki DK 2 Pac 4 Runner California Love (Original Version) SC Ripples SC Changes SC That Was Him SC Thugz Mansion SC 42nd Street 20 Fingers 42nd Street Song SC Short Dick Man SC We're In The Money SC 3 Doors Down 5 Seconds Of Summer Away From The Sun SC Amnesia SI Be Like That SC She Looks So Perfect SI Behind Those Eyes SC 5 Stairsteps Duck & Run SC Ooh Child SC Here By Me CB 50 Cent Here Without You CB Disco Inferno SC Kryptonite SC If I Can't SC Let Me Go SC In Da Club HT Live For Today SC P.I.M.P. -

The Recording Academy® Producers & Engineers Wing® to Present Grammy® Soundtable— “Sonic Imprints: Songs That Changed My Life” at 129Th Aes Convention

® The Recording Academy Producers & Engineers Wing 3030 Olympic Boulevard • Santa Monica, CA 90404 E-mail: p&[email protected] THE RECORDING ACADEMY® PRODUCERS & ENGINEERS WING® TO PRESENT GRAMMY® SOUNDTABLE— “SONIC IMPRINTS: SONGS THAT CHANGED MY LIFE” TH AT 129 AES CONVENTION Diverse Group of High Profile Producer and Engineers Will Gather Saturday Nov. 6 at 2:30PM in Room 134 To Dissect Songs That Have Left An Indelible Mark SANTA MONICA, Calif. (Oct. 15, 2010) — Some songs are hits, some we just love, and some have changed our lives. For the GRAMMY SoundTable titled “Sonic Imprints: Songs That Changed My Life,” on Saturday, Nov. 6 at 2:30PM in Room 134, a select group of producer/engineers will break down the DNA of their favorite tracks and explain what moved them, what grabbed them, and why these songs left a life long impression. Moderated by Sylvia Massy, (Prince, Johnny Cash, Sublime) and featuring panelists Joe Barresi (Bad Religion, Queens of the Stone Age, Tool), Jimmy Douglass (Justin Timberlake, Jay Z, John Legend), Nathaniel Kunkel (Lyle Lovett, Everclear, James Taylor) and others, this event is sure to be lively, fun, and inspirational.. More Participant Information (other panelists TBA): Sylvia Massy broke into the big time with 1993's “Undertow” by Los Angeles rock band Tool, and went on to engineer for a diverse group of artists including Aerosmith, Babyface, Big Daddy Kane, The Black Crowes, Bobby Brown, Prince, Julio Iglesias, Seal, Skunk Anansie, and Paula Abdul, among many others. Massy also engineered and mixed projects with producer Rick Rubin, including Johnny Cash's “Unchained” album which won a GRAMMY award for Best Country Album. -

Music 5364 Songs, 12.6 Days, 21.90 GB

Music 5364 songs, 12.6 days, 21.90 GB Name Album Artist Miseria Cantare- The Beginning Sing The Sorrow A.F.I. The Leaving Song Pt. 2 Sing The Sorrow A.F.I. Bleed Black Sing The Sorrow A.F.I. Silver and Cold Sing The Sorrow A.F.I. Dancing Through Sunday Sing The Sorrow A.F.I. Girl's Not Grey Sing The Sorrow A.F.I. Death of Seasons Sing The Sorrow A.F.I. The Great Disappointment Sing The Sorrow A.F.I. Paper Airplanes (Makeshift Wings) Sing The Sorrow A.F.I. This Celluloid Dream Sing The Sorrow A.F.I. The Leaving Song Sing The Sorrow A.F.I. But Home is Nowhere Sing The Sorrow A.F.I. Hurricane Of Pain Unknown A.L.F. The Weakness Of The Inn Unknown A.L.F. I In The Shadow Of A Thousa… Abigail Williams The World Beyond In The Shadow Of A Thousa… Abigail Williams Acolytes In The Shadow Of A Thousa… Abigail Williams A Thousand Suns In The Shadow Of A Thousa… Abigail Williams Into The Ashes In The Shadow Of A Thousa… Abigail Williams Smoke and Mirrors In The Shadow Of A Thousa… Abigail Williams A Semblance Of Life In The Shadow Of A Thousa… Abigail Williams Empyrean:Into The Cold Wastes In The Shadow Of A Thousa… Abigail Williams Floods In The Shadow Of A Thousa… Abigail Williams The Departure In The Shadow Of A Thousa… Abigail Williams From A Buried Heart Legend Abigail Williams Like Carrion Birds Legend Abigail Williams The Conqueror Wyrm Legend Abigail Williams Watchtower Legend Abigail Williams Procession Of The Aeons Legend Abigail Williams Evolution Of The Elohim Unknown Abigail Williams Forced Ingestion Of Binding Chemicals Unknown Abigail -

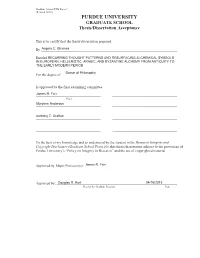

PURDUE UNIVERSITY GRADUATE SCHOOL Thesis/Dissertation Acceptance

Graduate School ETD Form 9 (Revised 12/07) PURDUE UNIVERSITY GRADUATE SCHOOL Thesis/Dissertation Acceptance This is to certify that the thesis/dissertation prepared By Angela C. Ghionea Entitled RECURRING THOUGHT PATTERNS AND RESURFACING ALCHEMICAL SYMBOLS IN EUROPEAN, HELLENISTIC, ARABIC, AND BYZANTINE ALCHEMY FROM ANTIQUITY TO THE EARLY MODERN PERIOD Doctor of Philosophy For the degree of Is approved by the final examining committee: James R. Farr Chair Myrdene Anderson Anthony T. Grafton To the best of my knowledge and as understood by the student in the Research Integrity and Copyright Disclaimer (Graduate School Form 20), this thesis/dissertation adheres to the provisions of Purdue University’s “Policy on Integrity in Research” and the use of copyrighted material. Approved by Major Professor(s): ____________________________________James R. Farr ____________________________________ Approved by: Douglas R. Hurt 04/16/2013 Head of the Graduate Program Date RECURRING THOUGHT PATTERNS AND RESURFACING ALCHEMICAL SYMBOLS IN EUROPEAN, HELLENISTIC, ARABIC, AND BYZANTINE ALCHEMY FROM ANTIQUITY TO THE EARLY MODERN PERIOD A Dissertation Submitted to the Faculty of Purdue University by Angela Catalina Ghionea In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy May 2013 Purdue University West Lafayette, Indiana UMI Number: 3591220 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. UMI 3591220 Published by ProQuest LLC (2013). -

Artois Acoustic Duo Songlist

1300 738 735 phone [email protected] email www.blueplanetentertainment.com.au web www.facebook.com/BluePlanetEntertainment Artois Acoustic Duo Songlist Amy Winehouse - Rehab The Doors - Roadhouse Blues The Doors - Roadhouse blues The White Stripes - Seven Nation Army AC/DC - Highway to hell The Black Crowes - Hard to Handle The Screaming Jets - Better Jimi Hendrix - Hey Joe Bruno Mars - Uptown funk Otis Redding - Dock of The Bay Soft Cell - Tainted love The Rolling Stones - Wild Horses Red Hot Chili Peppers - Under the bridge Guns N’ Roses - Patience The Stray Cats - Stray Cat Strut The Beatles - Come Together Bon Jovi - Someday I’ll be Saturday night Stone Temple Pilots - Interstate Love Song 3 Doors Down - Kryptonite Stone Temple Pilots - Plush Dragon - April Sun The Black Crowes - She Talks to Angels Rick Springfield - Jessies Girl The Beatles - Norwegian Wood The Killers - Mr Brightside Blind Melon - No Rain Kings of Lean - Sex Is on Fire America - A Horse with No Name Noiseworks - Take Me Back Eric Clapton - Layla Lynard Skynard - Sweet Home Alabama The Doors - Break on Through The Choirboys - Run to Paradise Amy Winehouse - Valerie Bruno Mars - Locked Out of Heaven The Beatles - Something Fleetwood Mac - Dreams George Michael - Faith Fleetwood Mac - Go Your Own Way Lenny Kravitz - are you gonna go my way The Darkness - A Thing Called Love Led Zeppelin - rock and roll Violent Femmes - Blister in The Sun Amy Winehouse - back to black Wheatus - Teenage Dirtbag Tracy Chapman - give me one reason Fuel - Shimmer The Beatles -

Oktoberfest Revelling at the Roundhouse Anxiety: How to Deal

. 1 1 .W S2 06 20 15 Oc er tober 9 - Octob Oktoberfest Anxiety: Meditation: Revelling at the How to Food for Roundhouse deal with it? Thought Tell us what you think! and you could WIN a $500 Peterpan Adventure Travel voucher www.source.unsw.edu.au CD: The Red CD: Madlib Beat Paintings, Destroy Konducta The Robots EP Vol. 1 & 2: Movie Scenes here’s no doubting The y Red Paintings’ abilit adlib has been e for writing songs. Thre one of the busiest changing chords for Reviews T producers in hip hop. Jitsu Training y to these guys is a gatewa He has been seen in infinite variation, especi ally with M 7pm – 9pm many forms, most famously as Pottery Studio Inductions lin and Jitsu – for relief of mid-session the combination of vio Quasimoto. He also produced cello weaving in and ou t of the stress. Come to training and discover 12.30pm - 1pm the brilliant Madvill ainy with MF why the Samurai were so relaxed. Learn how to use the Sour Spocksoc Screening - art-rock aural landscape. orm was a thumping track, whi le Black The Triangle memories of abduc tions and Doom and in another f Beginners welcome! Studio from our Potters in Resice Pottery alien experimentation. ’ For a one half of Jaylib with the late Mozart contains a fantas tic piano Dance Studios, E9 The studio is free for student use and If you’ve seen their liv e shows, dence. 5pm - 11pm Weekend more Earth-based de scription Jay Dee. sample and Outerlimit is much First Session Free is the perfect way to relax between Join us for our screening of Sci Fi’s then you’re already a fan. -

Grammy Award-Winning Rock Band Wolfmother Announce February 19 Release

Beat: Music GRAMMY AWARD-WINNING ROCK BAND WOLFMOTHER ANNOUNCE FEBRUARY 19 RELEASE FOR NEW ALBUM VICTORIOUS PARIS - LOS ANGELES, 23.11.2015, 10:45 Time USPA NEWS - Grammy Award-winning rock band WOLFMOTHER have announced a February 19th release date for their highly anticipated new album, VICTORIOUS. Wolfmother will support VICTORIOUS with a headline tour of North America set to kick off February 24th at First Avenue in Minneapolis... Grammy Award-winning rock band WOLFMOTHER have announced a February 19th release date for their highly anticipated new album, VICTORIOUS. Wolfmother will support VICTORIOUS with a headline tour of North America set to kick off February 24th at First Avenue in Minneapolis. Wolfmother recently teamed with popular enhanced colouring book app 'Recolor' to give fans the opportunity to create their own colorized version of the VICTORIOUS album art. The follow-up to 2009's Cosmic Egg and 2014's New Crown, VICTORIOUS is a testament to the range and depth of songwriter/vocalist/guitarist Andrew Stockdale's artistry. Recorded at Henson Studios in Los Angeles with 2X Grammy winning producer Brendan O'Brien (Pearl Jam, AC/DC, Chris Cornell, Bruce Springsteen), the songs are edgy, inventive and uncompromising and rank among the band's best ever. Andrew Stockdale began working on the record this past January at his Byron Bay studio in New South Wales, writing on every instrument and embracing the same creative approach he used on the band's debut album a decade ago. In addition to vocals, Stockdale played guitar and bass and brought in Josh Freese (Nine Inch Nails, Bruce Springsteen, A Perfect Circle) and Joey Waronker (Air, Beck, REM) to split drum duties. -

Pioneers Becomes Pride Formance at Widener

.. In This Issue: " THE .DoME Widener University's Student News and Entertainment P. 3 - Lewis Black Volume 07, Issue 4 Friday, October 20, 2006 Mary Fernandez covers Lewis Black's recent per Pioneers Becomes Pride formance at Widener. P. 5 - Widener VS Albright Sean Carney covers Widener's Homecoming victory against Albright. P. 6 - Alan Jackson Josh Low reviews coun try artist Alan Jackson's new album. Photo by Scott Ware I Widener retires Rocky, our former mascot at Homecoming. several options in an online by the University of how well does pride really mean? special meaning in academic Miranda Craige poll in August, the concept of the Pioneer fit Widener's history, According to Riley, Widener circles, in the residence halls, pride posses everything Widener image and vision alluded to University'S representation of in our neighboring communities, Staff Writer University has to offer. the change. On the poll seven pride is a pack of lions, both male and among our alumni, donors, Widener's long-time mascot, different mascots were voted and female, working together in and community constituents," he Dictionary.com defmes pride Rocky the Pioneer, retired in front on, Cadets, Pride, Chargers, prides for the betterment of the said. as the state or feeling of being of thousands of Widener fans Stallions, Keystones, Wolves and community. Pride is the mascot of five proud, a becoming or dignified before the Homecoming football Wolverines. "Widener's ffilSSlon and other colleges and universities sense of what is due to oneself or game against Albright College The results of the poll vision are vividly represented around the nation. -

Robert Graves the White Goddess

ROBERT GRAVES THE WHITE GODDESS IN DEDICATION All saints revile her, and all sober men Ruled by the God Apollo's golden mean— In scorn of which I sailed to find her In distant regions likeliest to hold her Whom I desired above all things to know, Sister of the mirage and echo. It was a virtue not to stay, To go my headstrong and heroic way Seeking her out at the volcano's head, Among pack ice, or where the track had faded Beyond the cavern of the seven sleepers: Whose broad high brow was white as any leper's, Whose eyes were blue, with rowan-berry lips, With hair curled honey-coloured to white hips. Green sap of Spring in the young wood a-stir Will celebrate the Mountain Mother, And every song-bird shout awhile for her; But I am gifted, even in November Rawest of seasons, with so huge a sense Of her nakedly worn magnificence I forget cruelty and past betrayal, Careless of where the next bright bolt may fall. FOREWORD am grateful to Philip and Sally Graves, Christopher Hawkes, John Knittel, Valentin Iremonger, Max Mallowan, E. M. Parr, Joshua IPodro, Lynette Roberts, Martin Seymour-Smith, John Heath-Stubbs and numerous correspondents, who have supplied me with source- material for this book: and to Kenneth Gay who has helped me to arrange it. Yet since the first edition appeared in 1946, no expert in ancient Irish or Welsh has offered me the least help in refining my argument, or pointed out any of the errors which are bound to have crept into the text, or even acknowledged my letters. -

Album Top 1000 2021

2021 2020 ARTIEST ALBUM JAAR ? 9 Arc%c Monkeys Whatever People Say I Am, That's What I'm Not 2006 ? 12 Editors An end has a start 2007 ? 5 Metallica Metallica (The Black Album) 1991 ? 4 Muse Origin of Symmetry 2001 ? 2 Nirvana Nevermind 1992 ? 7 Oasis (What's the Story) Morning Glory? 1995 ? 1 Pearl Jam Ten 1992 ? 6 Queens Of The Stone Age Songs for the Deaf 2002 ? 3 Radiohead OK Computer 1997 ? 8 Rage Against The Machine Rage Against The Machine 1993 11 10 Green Day Dookie 1995 12 17 R.E.M. Automa%c for the People 1992 13 13 Linkin' Park Hybrid Theory 2001 14 19 Pink floyd Dark side of the moon 1973 15 11 System of a Down Toxicity 2001 16 15 Red Hot Chili Peppers Californica%on 2000 17 18 Smashing Pumpkins Mellon Collie and the Infinite Sadness 1995 18 28 U2 The Joshua Tree 1987 19 23 Rammstein Muaer 2001 20 22 Live Throwing Copper 1995 21 27 The Black Keys El Camino 2012 22 25 Soundgarden Superunknown 1994 23 26 Guns N' Roses Appe%te for Destruc%on 1989 24 20 Muse Black Holes and Revela%ons 2006 25 46 Alanis Morisseae Jagged Liale Pill 1996 26 21 Metallica Master of Puppets 1986 27 34 The Killers Hot Fuss 2004 28 16 Foo Fighters The Colour and the Shape 1997 29 14 Alice in Chains Dirt 1992 30 42 Arc%c Monkeys AM 2014 31 29 Tool Aenima 1996 32 32 Nirvana MTV Unplugged in New York 1994 33 31 Johan Pergola 2001 34 37 Joy Division Unknown Pleasures 1979 35 36 Green Day American idiot 2005 36 58 Arcade Fire Funeral 2005 37 43 Jeff Buckley Grace 1994 38 41 Eddie Vedder Into the Wild 2007 39 54 Audioslave Audioslave 2002 40 35 The Beatles Sgt. -

Best of the Year

Bestof theYear Pete/s SoothingSongs for a TurbulentYear Dave's Soul Stirring Genre Mash TOP FIVE (with impertinentcommentary when irrepressible) Art Srut: BangBang Rock & Roh -Bg- Tom Verlaine: Songs and Other Things oh thosewicked charms EricBachmann: To the Races T-Bone Burnett: True False ldentity Ane Brun: ATemporaryDive Alejandro Escovedo: the Boxing Mirror !p f/t count Belle& Sebastian:the LifePursuit JonDeeGraham: Full...ofguitardrlvenpaeanstodesperation&emotionalrescue The Streets:the HardestWay to Makean EasyLiving Kaki King: Until We Felt Red queenofaltthingsstringed&more THEREST Paul Simon: Surprise soundscapescounesy Eno the Be-GoodTanyas: Hello Love Jel: Soft Money &ha.dtruths the BlackKeys: MagicPotion Jon Langford: Gold Brick ainol list Bonnie'Prince"Billy: the LettingCo A by Kelly Mogwai: Mr. Beast BottleRockets: Zoysia Cotan Project: Lunatico .Calexico:the GardenRuin the DresdenDolls: YesViroinia Robyn Hitchcock & the Venus 3: Ole! Tarantula the Decemberists: 'Sno Califone:Roots & Crowns the Craie Wife Howe Celb: Angel Like You H.c.'sinimirable gospelplow White NekoCase: Fox confessor Brings the Flood Whale:WWI 50 Foot Wave: Free Music (EP) surfis... Centro-matic:Fort Recovery Calexico:Garden Ruin Squarewave: s/t transcendent the Cush: NewAppreciation for Sunshine CameraObscura: Let's Cet Out ofThis Country Mark Knopfler & Emmylou Harris: All This Roadrunning plas "Live" cDlDVD DeadMeadow: s/t Placebo:Meds Ooubledup anddelicious MatesofState: Bring the Drams:Jubilee Drive it Back Carrie Rodriquez: Seven Angels on a Bicycle