Eindhoven University of Technology MASTER a Study of the General

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Fast Tabulation of Challenge Pseudoprimes Andrew Shallue and Jonathan Webster

THE OPEN BOOK SERIES 2 (2019) ANTS XIII: Proceedings of the Thirteenth Algorithmic Number Theory Symposium. msp. dx.doi.org/10.2140/obs.2019.2.411 Fast tabulation of challenge pseudoprimes. Andrew Shallue and Jonathan Webster. We provide a new algorithm for tabulating composite numbers which are pseudoprimes to both a Fermat test and a Lucas test. Our algorithm is optimized for parameter choices that minimize the occurrence of pseudoprimes, and for pseudoprimes with a fixed number of prime factors. Using this, we have confirmed that there are no PSW-challenge pseudoprimes with two or three prime factors up to \(2^{80}\). In the case where one is tabulating challenge pseudoprimes with a fixed number of prime factors, we prove our algorithm gives an unconditional asymptotic improvement over previous methods. 1. Introduction. Pomerance, Selfridge, and Wagstaff famously offered $620 for a composite n that satisfies (1) \(2^{n-1} \equiv 1 \pmod{n}\), so n is a base-2 Fermat pseudoprime, (2) \((5 \mid n) = -1\), so n is not a square modulo 5, and (3) \(F_{n+1} \equiv 0 \pmod{n}\), so n is a Fibonacci pseudoprime, or to prove that no such n exists. We call composites that satisfy these conditions PSW-challenge pseudoprimes. In [PSW80] they credit R. Baillie with the discovery that combining a Fermat test with a Lucas test (with a certain specific parameter choice) makes for an especially effective primality test [BW80].
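The three PSW-challenge conditions quoted in the excerpt above can be checked directly for a single candidate n. The following is a minimal Python sketch, not the tabulation algorithm of the paper. The function names `jacobi`, `fib_pair` and `passes_psw_conditions` are hypothetical, and fast doubling is an assumption made here for computing Fibonacci numbers modulo n.

```python
def jacobi(a: int, n: int) -> int:
    """Jacobi symbol (a|n) for odd positive n, by the standard binary algorithm."""
    if n <= 0 or n % 2 == 0:
        raise ValueError("n must be odd and positive")
    a %= n
    result = 1
    while a:
        while a % 2 == 0:
            a //= 2
            if n % 8 in (3, 5):      # (2|n) = -1 exactly when n = 3 or 5 mod 8
                result = -result
        a, n = n, a                  # quadratic reciprocity
        if a % 4 == 3 and n % 4 == 3:
            result = -result
        a %= n
    return result if n == 1 else 0


def fib_pair(k: int, m: int) -> tuple[int, int]:
    """Return (F_k mod m, F_{k+1} mod m) using fast doubling."""
    if k == 0:
        return 0, 1 % m
    a, b = fib_pair(k // 2, m)           # a = F_j, b = F_{j+1}, j = k // 2
    c = a * ((2 * b - a) % m) % m        # F_{2j}   = F_j * (2 F_{j+1} - F_j)
    d = (a * a + b * b) % m              # F_{2j+1} = F_j^2 + F_{j+1}^2
    return (c, d) if k % 2 == 0 else (d, (c + d) % m)


def passes_psw_conditions(n: int) -> bool:
    """True if n satisfies conditions (1)-(3); n itself may be prime or composite."""
    if n < 3 or n % 2 == 0:
        return False
    return (
        pow(2, n - 1, n) == 1            # (1) base-2 Fermat condition
        and jacobi(5, n) == -1           # (2) n is not a square modulo 5
        and fib_pair(n + 1, n)[0] == 0   # (3) Fibonacci condition
    )
```

Primes congruent to 2 or 3 modulo 5, such as 7 and 13, satisfy all three conditions. The challenge asks for a composite that does, so a real search would combine this check with a compositeness test.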

Implementation and Didactical Visualization of the Chacha Cipher Family in Cryptool 2

Implementation and Didactical Visualization of the ChaCha Cipher Family in CrypTool 2. Bachelor’s Thesis. Ramdip Gill. Supervisor: Priv.-Doz. Dr. Wolfgang Merkle. Second Supervisor: Prof. Dr. Frederik Armknecht. Heidelberg, December 11, 2020. Faculty of Mathematics and Computer Science, Heidelberg University. ABSTRACT This thesis is about the implementation of the ChaCha plug-in in CrypTool 2. The thesis introduces the ChaCha cipher family, explains what the plug-in is capable of, and gives insight into the development process of the plug-in. ChaCha has been used in the Transport Layer Security protocol (TLS) since 2014 and is therefore very relevant for applied modern cryptography. Because of the importance of ChaCha, its internal design should be made more accessible to the broader public. This is the actual goal of the plug-in. The goal is achieved by focusing on an in-depth but easy to understand visualization of the encryption process. CrypTool 2 is the most popular e-learning platform in the field of cryptology, used in schools, universities, and companies. Incorporating this plug-in into CrypTool 2 helps to reach a broad audience. ZUSAMMENFASSUNG (German summary, translated) This bachelor's thesis deals with the implementation of the ChaCha plug-in for CrypTool 2. The thesis presents the family of ChaCha ciphers, explains what the plug-in can do, and gives insight into the development process of the plug-in. ChaCha has been used in the Transport Layer Security protocol (TLS) since 2014 and is therefore very relevant for applied modern cryptography. Because of the importance of ChaCha, its internal design should be made more accessible to the general public.

Cryptology with Jcryptool V1.0

www.cryptool.org Cryptology with JCrypTool (JCT): A Practical Introduction to Cryptography and Cryptanalysis. Prof Bernhard Esslinger and the CrypTool team, Nov 24th, 2020. JCrypTool 1.0 (92 pages). Agenda: Introduction to the e-learning software JCrypTool; Applications within JCT, a selection; How to participate. Introduction to the software JCrypTool (JCT), overview: JCrypTool, a cryptographic e-learning platform; What is cryptology?; The Default Perspective of JCT; Typical usage of JCT in the Default Perspective; The Algorithm Perspective of JCT; The Crypto Explorer; Algorithms in the Crypto Explorer view; The Analysis tools; Visuals & Games; General operation instructions; User settings; Command line parameters. JCrypTool, a cryptographic e-learning platform. The project overview: JCrypTool, abbreviated as JCT, is a free e-learning software for classical and modern cryptology. JCT is platform independent, i.e. it is executable on Windows, MacOS and Linux. It has a modern pure-plugin architecture. JCT is developed within the open-source project CrypTool (www.cryptool.org). The CrypTool project aims to explain and visualize cryptography and cryptanalysis in an easy and understandable way while still being correct from a scientific point of view. The target audience of JCT is mainly pupils and students, teachers and lecturers/professors, employees in awareness campaigns, and people interested in cryptology. As JCT is open-source software, everyone is able to implement their own plugins.

Visualization of the Avalanche Effect in CT2

University of Mannheim, Faculty for Business Informatics & Business Mathematics, Theoretical Computer Science and IT Security Group. Bachelor's Thesis: Visualization of the Avalanche Effect in CT2, as part of the degree program Bachelor of Science Wirtschaftsinformatik, submitted by Camilo Echeverri [email protected] on October 31, 2016 (2nd revised public version, Apr 18, 2017). Supervisors: Prof. Dr. Frederik Armknecht, Prof. Bernhard Esslinger. Abstract: Cryptographic algorithms must fulfill certain properties concerning their security. This thesis aims at providing insights into the importance of the avalanche effect property by introducing a new plugin for the cryptography and cryptanalysis platform CrypTool 2. The thesis addresses some of the desired properties, discusses the implementation of the plugin for modern and classic ciphers, guides the reader on how to use it, applies the proposed tool in order to test the avalanche effect of different cryptographic ciphers and hash functions, and interprets the results obtained. Contents: Abstract; Contents; List of Abbreviations; List of Figures; List of Tables; 1 Introduction (1.1 CrypTool 2; 1.2 Outline of the Thesis); 2 Properties of Secure Block Ciphers (2.1 Avalanche Effect; 2.2 Completeness); 3 Related Work; 4 Plugin Design and Implementation (4.1 General Description of the Plugin; 4.2 Prepared Methods; 4.2.1 AES and DES; 4.3 Unprepared Methods; 4.3.1 Classic Ciphers, Modern Ciphers, and Hash Functions; 4.4 Architecture of the Code; 4.5 Limitations and Future Work).
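The avalanche effect named in the excerpt above can be illustrated by flipping one input bit and counting how many output bits change. The following is a minimal Python sketch, not the CrypTool 2 plugin described in the thesis. Using SHA-256 from the standard `hashlib` module is an assumption made for illustration, and the names `bit_difference` and `avalanche_ratio` are hypothetical.

```python
import hashlib


def bit_difference(a: bytes, b: bytes) -> int:
    """Number of bit positions in which two equal-length byte strings differ."""
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def avalanche_ratio(message: bytes, bit_index: int) -> float:
    """Fraction of SHA-256 output bits that change when one input bit is flipped."""
    flipped = bytearray(message)
    flipped[bit_index // 8] ^= 1 << (bit_index % 8)   # flip exactly one input bit
    original_digest = hashlib.sha256(message).digest()
    flipped_digest = hashlib.sha256(bytes(flipped)).digest()
    return bit_difference(original_digest, flipped_digest) / (8 * len(original_digest))


if __name__ == "__main__":
    # For a function with a good avalanche effect the ratio should be close to 0.5.
    print(avalanche_ratio(b"CrypTool 2", 0))
```

Averaging the ratio over many messages and bit positions gives a more stable estimate than a single measurement.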

CEH: Certified Ethical Hacker Course Content

CEH: Certified Ethical Hacker. Course ID #: 1275-100-ZZ-W. Hours: 35. Course Content. Course Description: The Certified Ethical Hacker (CEH) program is the core of the most desired information security training system any information security professional will ever want to be in. The CEH is the first part of a three-part EC-Council Information Security Track which helps you master hacking technologies. You will become a hacker, but an ethical one! Because the security mindset in any organization must not be limited to the silos of a certain vendor, technology or piece of equipment, this course was designed to provide you with the tools and techniques used by hackers and information security professionals alike to break into an organization. As we put it, “To beat a hacker, you need to think like a hacker”. This course will immerse you in the Hacker Mindset so that you will be able to defend against future attacks. It puts you in the driver’s seat of a hands-on environment with a systematic ethical hacking process. Here, you will be exposed to an entirely different way of achieving an optimal information security posture in your organization: by hacking it! You will scan, test, hack and secure your own systems. You will be taught the Five Phases of Ethical Hacking and how you can approach your target and succeed at breaking in every time! The five phases are Reconnaissance, Gaining Access, Enumeration, Maintaining Access, and Covering Your Tracks. The tools and techniques in each of these five phases are provided in detail in an encyclopedic approach to help you identify when an attack has been used against your own targets.

Akademik Bilişim Konferansları Course Proposal Form, V2.1 (Please Read the Notes Section at the End of the Form) B

Akademik Bilişim Konferansları www.ab.org.tr Course Proposal Form, v2.1 (Please read the Notes section at the end of the form) Section 1: Information on the Proposing Instructor 1. Name, surname: Pınar Çomak. Names and surnames of other instructors, if any: 2. Title/position: Research Assistant 3. Institution/organization: Orta Doğu Teknik Üniversitesi (ODTÜ, Middle East Technical University) 4. E-mail: [email protected] 5. Telephone, work and/or mobile: 0312 210 2987 6. Web page URL, if any: 7. Short biography of the instructor: Completed undergraduate studies in 2009 at the ODTÜ Department of Mathematics and a master's degree in 2012 in the Cryptography program of the ODTÜ Institute of Applied Mathematics. Has been pursuing a doctorate in the Cryptography program since 2012 and working as a research assistant in the ODTÜ Department of Mathematics since 2011. Research areas are public-key cryptographic systems and cryptographic functions. 8. Instructor's bank account number (preferably as IBAN): 9. Date: 11.11.2016 Section 1: Information on the Proposing Instructor 10. Name, surname: Halil Kemal Taşkın. Names and surnames of other instructors, if any: 11. Title/position: 12. Institution/organization: Orta Doğu Teknik Üniversitesi 13. E-mail: [email protected] 14. Telephone, work and/or mobile: 05057941029 15. Web page URL, if any: 16. Short biography of the instructor: Graduated from the ODTÜ UME Cryptography master's program in 2011 and is continuing doctoral studies in the same department. Has been working as an Information Security Specialist at Oran Teknoloji since 2013. Research areas include elliptic curve cryptography and cryptographic protocol design. 17. Instructor's bank account number (preferably as IBAN): IBAN: TR690006400000142291106513 18. Date: 11.11.2016 Section 1: Information on the Proposing Instructor 19. Name, surname: Oğuz Yayla. Names and surnames of other instructors, if any: 20.

Cryptool 2 in Teaching Cryptography

Journal of Computations & Modelling, vol.4, no.1, 2014, 349-358. ISSN: 1792-7625 (print), 1792-8850 (online). Scienpress Ltd, 2014. Cryptool 2 in Teaching Cryptography. Major Konstantinos Loussios. Abstract. The value that concealing information during transmission or transport had in the past, retains in the present, and will continue to have, perhaps to an even greater extent, in the future leads naturally to an attempt to assess the importance and value of the means, methods and techniques used to implement that concealment. Cryptography is a branch of computer science that attracts attention because of its great utility nowadays. It is therefore deemed necessary to standardize, analyze and present the encryption algorithms so that their operation can be learned and taught as efficiently and easily as possible. Bearing in mind that theory must be accompanied by practice and examples that help to consolidate the syllabus material, we felt that an analytical presentation of an educational tool for learning cryptographic algorithms is a way of learning while embedding the material. The learning tool Cryptool 2 is an implementation of all the above. Through it we try to show the essential functions that help the user see, in a visual and practical way, the properties and functional details of the algorithms it contains; we present representative examples of the algorithms in operation, create digital signatures, and apply cryptanalysis algorithms. The above is an object of study and teaching in the professional area of land, in the field of communications and transmissions-service systems. Knowing, however, that historically, since antiquity, we Greeks were the first to use encryption in a simple form for military purposes, and that later, through the years and through wars fought around the world, the art of encryption and decryption evolved and became a concern of all armies and weapons.

Network Security (NS) Subject Code: 3640004

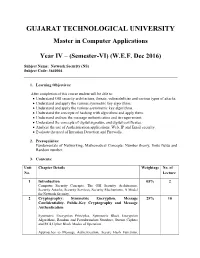

GUJARAT TECHNOLOGICAL UNIVERSITY Master in Computer Applications Year IV – (Semester-VI) (W.E.F. Dec 2016) Subject Name: Network Security (NS) Subject Code: 3640004

1. Learning Objectives: After completion of this course student will be able to: Understand OSI security architecture, threats, vulnerabilities and various types of attacks. Understand and apply the various symmetric key algorithms. Understand and apply the various asymmetric key algorithms. Understand the concepts of hashing with algorithms and apply them. Understand and use the message authentication and its requirement. Understand the concepts of digital signature and digital certificates. Analyze the use of Authentication applications, Web, IP and Email security. Evaluate the need of Intrusion Detection and Firewalls.

2. Prerequisites: Fundamentals of Networking, Mathematical Concepts: Number theory, finite fields and Random number.

3. Contents (Unit No., Chapter Details, Weightage, No. of Lectures):

Unit 1. Introduction (weightage 05%, 2 lectures): Computer Security Concepts, The OSI Security Architecture, Security Attacks, Security Services, Security Mechanisms, A Model for Network Security.

Unit 2. Cryptography: Symmetric Encryption, Message Confidentiality, Public-Key Cryptography and Message Authentication (weightage 25%, 10 lectures): Symmetric Encryption Principles, Symmetric Block Encryption Algorithms, Random and Pseudorandom Numbers, Stream Ciphers and RC4, Cipher Block Modes of Operation. Approaches to Message Authentication, Secure Hash Functions, Message Authentication Codes, Public-Key Cryptography Principles, Public-Key Cryptography Algorithms, Digital Signatures.

Unit 3. Key Distribution and User Authentication, Transport-Level Security, HTTPS and SSH (weightage 25%, 10 lectures): Symmetric Key Distribution Using Symmetric Encryption, Kerberos, Key Distribution Using Asymmetric Encryption, X.509 Certificates, Public-Key Infrastructure. Web Security Considerations, Secure Socket Layer and Transport Layer Security, Transport Layer Security, HTTPS, Secure Shell (SSH).

The Number Field Sieve for Discrete Logarithms

The Number Field Sieve for Discrete Logarithms. Henrik Røst Haarberg. Master of Science. Submission date: June 2016. Supervisor: Kristian Gjøsteen, MATH. Norwegian University of Science and Technology, Department of Mathematical Sciences. Abstract: We present two general number field sieve algorithms solving the discrete logarithm problem in finite fields. The first algorithm presented deals with discrete logarithms in prime fields \(\mathbb{F}_p\), while the second considers prime power fields \(\mathbb{F}_{p^n}\). We prove, using the standard heuristic, that these algorithms will run in sub-exponential time. We also give an overview of different index calculus algorithms solving the discrete logarithm problem efficiently for different possible relations between the characteristic and the extension degree. To be able to give a good introduction to the algorithms, we present theory necessary to understand the underlying algebraic structures used in the algorithms. This theory is largely algebraic number theory. Contents: 1 Introduction (1.1 Discrete logarithms; 1.2 The general number field sieve and L-notation); 2 Theory (2.1 Number fields; 2.1.1 Dedekind domains; 2.1.2 Module structure; 2.1.3 Norm of ideals; 2.1.4 Units; 2.2 Prime ideals; 2.3 Smooth numbers; 2.3.1 Density; 2.3.2 Exponent vectors); 3 The number field sieve in prime fields (3.1 Overview; 3.2 Calculating logarithms; 3.3 Sieving; 3.4 Schirokauer maps; 3.5 Linear algebra; 3.5.1 A note about smooth t and g; 3.6 Run time).

On the Number Field Sieve: Polynomial Selection and Smooth Elements in Number Fields

On the Number Field Sieve: Polynomial Selection and Smooth Elements in Number Fields. Nicholas Vincent Coxon, BSc (hons). A thesis submitted for the degree of Doctor of Philosophy at The University of Queensland in June 2012. School of Mathematics and Physics. Abstract: The number field sieve is the asymptotically fastest known algorithm for factoring large integers that are free of small prime factors. Two aspects of the algorithm are considered in this thesis: polynomial selection and smooth elements in number fields. The contributions to polynomial selection are twofold. First, existing methods of polynomial generation, namely those based on Montgomery's method, are extended and tools developed to aid in their analysis. Second, a new approach to polynomial generation is developed and realised. The development of the approach is driven by results obtained on the divisibility properties of univariate resultants. Examples from the literature point toward the utility of applying decoding algorithms for algebraic error-correcting codes to problems of finding elements in a ring with a smooth representation. In this thesis, the problem of finding algebraic integers in a number field with smooth norm is reformulated as a decoding problem for a family of error-correcting codes called NF-codes. An algorithm for solving the weighted list decoding problem for NF-codes is provided. The algorithm is then used to find algebraic integers with norm containing a large smooth factor. Bounds on the existence of such numbers are derived using algorithmic and combinatorial methods. Declaration by the Author: This thesis is composed of my original work, and contains no material previously published or written by another person except where due reference has been made in the text.

The Factoring Dead: Preparing for the Cryptopocalypse

THE FACTORING DEAD: PREPARING FOR THE CRYPTOPOCALYPSE. Javed Samuel, javed[at]isecpartners[dot]com. iSEC Partners, Inc, 123 Mission Street, Suite 1020, San Francisco, CA 94105. https://www.isecpartners.com March 20, 2014. Abstract: This paper will explain the latest breakthroughs in the academic cryptography community and look ahead at what practical issues could arise for popular cryptosystems. Specifically, we will focus on the recent major developments in discrete mathematics and their potential ability to undermine our trust in the most basic asymmetric primitives, including RSA. We will explain the basic theories behind RSA and the state-of-the-art in large number factoring, and how several recent papers may point the way to massive improvements in this area. The paper will then switch to the practical aspects of the doomsday scenario, and will answer the question “What happens the day after RSA is broken?” We will point out the many obvious and hidden uses of RSA and related algorithms and outline how software engineers and security teams can operate in a post-RSA world. We will also discuss the results of our survey of popular products and software, and point out the ways in which individuals can prepare for the “zombie cryptopocalypse”. 1 INTRODUCTION Over the past few years, there have been numerous attacks on the current SSL infrastructure. These include BEAST [97], CRIME [88], Lucky 13 [2][86], RC4 bias attacks [1][91] and BREACH [42]. These attacks all show the fragility of the current SSL architecture, as vulnerabilities have been found in a variety of features ranging from compression to timing and padding [90].

Use of SIMD-Based Data Parallelism to Speed up Sieving in Integer-Factoring Algorithms

Use of SIMD-Based Data Parallelism to Speed up Sieving in Integer-Factoring Algorithms. Binanda Sengupta and Abhijit Das, Department of Computer Science and Engineering, Indian Institute of Technology Kharagpur, West Bengal, PIN: 721302, India. binanda.sengupta,[email protected] Abstract. Many cryptographic protocols derive their security from the apparent computational intractability of the integer factorization problem. Currently, the best known integer-factoring algorithms run in subexponential time. Efficient parallel implementations of these algorithms constitute an important area of practical research. Most reported implementations use multi-core and/or distributed parallelization. In this paper, we use SIMD-based parallelization to speed up the sieving stage of integer-factoring algorithms. We experiment on the two fastest variants of factoring algorithms: the number-field sieve method and the multiple-polynomial quadratic sieve method. Using Intel’s SSE2 and AVX intrinsics, we have been able to speed up index calculations in each core during sieving. This performance enhancement is attributed to a reduction in the packing and unpacking overheads associated with SIMD registers. We handle both line sieving and lattice sieving. We also propose improvements to make our implementations cache-friendly. We obtain speedup figures in the range 5–40%. To the best of our knowledge, no public discussions on SIMD parallelization in the context of integer-factoring algorithms are available in the literature.
Keywords: Integer Factorization, Sieving, Multiple-Polynomial Quadratic Sieve Method, Number-Field Sieve Method, Single Instruction Multiple Data, Streaming SIMD Extensions, Advanced Vector Extensions. 1 Introduction. Let n be a large composite integer having the factorization \(n = p_1^{v_{p_1}} p_2^{v_{p_2}} \cdots p_k^{v_{p_k}} = \prod_{i=1}^{k} p_i^{v_{p_i}}\). The integer factorization problem deals with the determination of all the prime divisors \(p_1, p_2, \ldots, p_k\) of n and their corresponding multiplicities \(v_{p_1}, v_{p_2}, \ldots, v_{p_k}\).
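The statement of the integer factorization problem above, recovering each prime divisor together with its multiplicity, can be made concrete for small inputs. The following is a minimal Python sketch that uses plain trial division. This is a deliberately naive stand-in chosen for illustration, not the sieve methods discussed in the paper, and the function name `factorize` is hypothetical.

```python
def factorize(n: int) -> dict[int, int]:
    """Map each prime divisor p of n to its multiplicity v_p, by trial division."""
    if n < 2:
        raise ValueError("n must be at least 2")
    factors: dict[int, int] = {}
    p = 2
    while p * p <= n:
        while n % p == 0:                # divide out p as often as possible
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1 if p == 2 else 2          # after 2, try odd candidates only
    if n > 1:                            # whatever remains is a single prime factor
        factors[n] = factors.get(n, 0) + 1
    return factors


if __name__ == "__main__":
    print(factorize(360))   # {2: 3, 3: 2, 5: 1}, since 360 = 2^3 * 3^2 * 5
```

Trial division takes time roughly proportional to the square root of n, which is why integers of cryptographic size call for subexponential methods such as the sieves named in the abstract.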