AIMD-00-30 Defense Computers: U.S. Space Command's Management Of

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

TRACKING AND DATA ACQUISITION/SPACE OPERATIONS

CHAPTER FOUR: TRACKING AND DATA ACQUISITION/SPACE OPERATIONS

Introduction

NASA’s tracking and data acquisition program provided vital support for all NASA flight projects. NASA also supported, on a reimbursable basis, projects of the Department of Defense, other government agencies, commercial firms, and other countries and international organizations engaged in space research activities. The tracking and data acquisition program supported sounding rockets and balloons, research aircraft, Earth orbital and suborbital missions, planetary spacecraft, and deep space probes. The support included:

- Tracking to determine the position and trajectory of vehicles in space
- Acquisition of scientific and Earth applications data from on-board experiments and sensors
- Acquisition of engineering data on the performance of spacecraft and launch vehicle systems
- Transmission of commands from ground stations to spacecraft
- Communication with astronauts
- Communication of information among the various ground facilities and central control centers
- Processing of data acquired from launch vehicles and spacecraft
- Reception of television transmission from space vehicles

NASA established three types of support capabilities:

- The Spaceflight Tracking and Data Network (STDN) supported low-Earth orbital missions.
- The Deep Space Network (DSN) supported planetary and interplanetary flight missions. It also supported geosynchronous and highly elliptical missions and those in low-Earth orbit not compatible with the Tracking and Data Relay Satellite System (TDRSS).
- The TDRSS provided low-Earth orbital mission support and reduced NASA’s need for an extensive network of ground stations.

WATT, TIEDER, HOFFAR & FITZGERALD, LLP Jennifer

Case 20-32299-KLP Doc 2247 Filed 05/24/21 Entered 05/24/21 17:44:23 Desc Main Document Page 1 of 19

WATT, TIEDER, HOFFAR & FITZGERALD, L.L.P.
Jennifer Larkin Kneeland (VSB 71187)
Marguerite Lee DeVoll (VSB 93474)
1765 Greensboro Station Place, Suite 1000
McLean, Virginia 22102
[email protected]
[email protected]

WHITE AND WILLIAMS LLP
Heidi J. Sorvino, Esq. (pro hac vice pending)
James C. Vandermark, Esq. (pro hac vice pending)
7 Times Square, Suite 2900
New York, NY 10036
(212) 244-9500
[email protected]
[email protected]

IN THE UNITED STATES BANKRUPTCY COURT FOR THE EASTERN DISTRICT OF VIRGINIA, RICHMOND DIVISION

In re: INTELSAT, S.A. et al.,1 Debtor. Chapter 11, Case No. 20-32299 (KLP) (Jointly Administered)

OBJECTION AND RESERVATION OF RIGHTS OF SPACE-COMMUNICATION LTD. WITH RESPECT TO NOTICE OF REJECTION OF CERTAIN EXECUTORY CONTRACTS AND/OR UNEXPIRED LEASES

Space-Communication Ltd. (“Spacecom”) hereby files this objection (the “Objection”) in response to the Notice of Rejection of Certain Executory Contracts and/or Unexpired Leases [ECF No. 2160] (the “Rejection Notice”) filed by the debtors in the above captioned chapter 11 proceedings (the “Debtors”) seeking to reject certain agreements between Spacecom and Intelsat Satellite LLC (“Intelsat”). In support of this Objection, Spacecom submits the Declaration of Ariel Perets (the “Perets Declaration”) and states as follows:

1 Due to the large number of Debtors in these chapter 11 cases, for which joint administration has been granted, a complete list of the Debtor entities and the last four digits of their federal tax identification numbers is not provided herein.

Pioneer Passion for Space!

Application report

Tesat Spacecom has been putting its trust in Ersa selective soldering technology for more than 20 years

Image caption: The space mission Sentinel 2 – with Tesat equipment on board – transmits data using laser technology.

Pioneer passion for space!

As one of the market leaders in the field of communication engineering payloads for satellites, Tesat-Spacecom can look back on 50 years of experience in aviation and aerospace. Around 1,100 employees develop, manufacture, integrate and test systems and devices for telecommunication via satellite on the 60,000 m² site in Backnang, Swabia.

More than 700 successful aerospace projects, many in cooperation with the German Aerospace Center (Deutsches Zentrum für Luft- und Raumfahrt, DLR), have been completed by Tesat Spacecom. The Swabian company has been relying on selective soldering technology from system supplier Ersa since 1998 – and has flown well with it.

Author: Meinrad Eckert, Area Sales Manager, Ersa GmbH. Published in productronic 09/2019 in Germany.

Image caption: Friendly takeover: Ersa ECOSELECT 2 replaces the VERSAFLOW 40/50 (in the background).

More than 700 aerospace projects, over 2,500 devices in orbit, in total more than 350 million operating hours in space – the balance at Tesat Spacecom GmbH & Co. KG, which last year returned revenue figures of 300 million euros and is celebrating “70 years at the Backnang site” in 2019, is extremely impressive.

…national Space Station ISS orbits the earth at a height of around 400 km), it will be around one hundred years before the service life of the satellite is spent and it falls back to earth; in geostationary orbit (height of 36,000 km) this is extended to several million years!

Facts: Tesat-Spacecom. Revenue: 300 million euros

Public Notice

PUBLIC NOTICE

FEDERAL COMMUNICATIONS COMMISSION, 445 12th Street, S.W., TW-A325, Washington, DC 20554

News Media Information (202) 418-0500. Fax-On-Demand (202) 418-2830. Internet: http://www.fcc.gov, ftp.fcc.gov

Report Number: 2276. Date of Report: 10/05/2005

Wireless Telecommunications Bureau Assignment of License Authorization Applications, Transfer of Control of Licensee Applications, De Facto Transfer Lease Applications and Spectrum Manager Lease Notifications Action

This Public Notice contains a listing of applications that have been acted upon by the Commission.

| Purpose | File Number | Parties | Action Date | Action |
|---|---|---|---|---|
| AM | 0001236852 | Licensee: GTE Pacifica Inc. dba Verizon Pacifica; Transferor: Bell Atlantic New Zealand Holdings, Inc.; Transferee: Pacific Telecom Inc.; Transfer of Control; Call Sign or Lead Call Sign: KNKN616; Radio Service Code(s): CL | 09/29/2005 | M |
| TC | 0002102716 | Licensee: OXBOW GEOTHERMAL CORPORATION; Transferor: Oxbow Geothermal Corporation; Transferee: Caithness Dixie Valley, LLC; Transfer of Control; Call Sign or Lead Call Sign: KOG4; Radio Service Code(s): AF | 10/01/2005 | M |
| TC | 0002221758 | Licensee: Space Systems/Loral, Inc. (Debtor-in-Possession); Transferor: Loral Space & Communications Ltd. (DIP); Transferee: Loral Space & Communications Inc.; Transfer of Control; Call Sign or Lead Call Sign: WNHP984; Radio Service Code(s): IG | 09/30/2005 | C |
| TC | 0002288005 | Licensee: W.W. Webber, Inc.; Transferor: The Webber Group, Inc.; Transferee: Norvarem S.A.U.; Transfer of Control; Call Sign or Lead Call Sign: WPSS744; Radio Service Code(s): IG | 09/29/2005 | M |
| TC | 0002304893 | Licensee: Raps Car Co., Inc.; Transferor: League of Mutual Taxi Owners Federal Credit Union No. | 09/28/2005 | M |

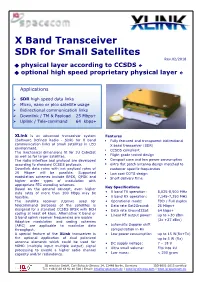

X Band Transceiver SDR for Small Satellites Rev.02/2018 Physical Layer According to CCSDS Optional High Speed Proprietary Physical Layer

X Band Transceiver SDR for Small Satellites, Rev. 02/2018. Physical layer according to CCSDS; optional high speed proprietary physical layer.

Applications

- SDR high speed data links
- Micro, nano or pico satellite usage
- Bidirectional communication links
- Downlink / TM & Payload 25 Mbps+
- Uplink / Tele-command 64 kbps+

XLink is an advanced transceiver system (Software Defined Radio - SDR) for X band bidirectional communication links of small satellites in LEO environment. The mechanical dimensions fit for 1U CubeSat as well as for larger satellites. The radio interface and protocol are developed according to standard CCSDS protocols. Downlink data rates with net payload rates of 25 Mbps+ will be possible. Supported modulation schemes include BPSK, QPSK and higher order types of modulation with appropriate FEC encoding schemes. Based on the general concept, even higher data rates of more than 100 Mbps may be feasible. The satellite receiver (Uplink) used for telecommand purposes of the satellites is designed for a standard CCSDS BPSK with BCH coding at least 64 kbps. Alternative X band or S band uplink receiver frequencies are usable. Adaptive modulation and coding schemes (AMC) are applicable to maximize data throughput.

Features

- Fully featured and transparent X band transceiver (SDR)
- CCSDS compliant
- Flight grade tested design
- Compact case and low power consumption
- Extra flat patch antenna design matched to customer specific frequencies
- Low cost COTS design
- Short delivery time

Key Specifications

- X band TX operation: 8,025-8,500 MHz
- X band RX operation: 7,145-7,250 MHz
- Operational mode: FDD / Full duplex
- Data rate Sat2Ground: 25 Mbps+
- Data rate Ground2Sat: 64 kbps+
- Linear RF output power: up to +30 dBm (2x +27 dBm)
- Automatic Doppler shift

Mr. Eyal Copitt, Spacecom, SVP Africa

Mr. Eyal Copitt, Spacecom, SVP Africa

What has your client been up to in Africa over the last year? Please highlight any key deployments.

Spacecom’s work in 2010 focused on readying the company for the launch of its AMOS-5 satellite, scheduled for Q3 2011, which will primarily serve the growing African market. During the past year, we began pre-selling capacity on the satellite and arranging agreements with new clients and partners in the region. We also solidified and expanded our sales team to ensure that our efforts in Africa will be successful, and conducted very detailed market studies of the various regions and countries we will be addressing. The AMOS-5 satellite, to be located at 17°E, will bring new business to our company and will be a powerful platform offering a pan-African C-band beam connecting Europe and the Middle East alongside three Ku-band regional beams. Its 14x72 MHz and 4x36 MHz C-band transponders combined with 18x72 MHz Ku transponders will enable it to be a prime carrier of African traffic in the years to come in both broadcast and data services. We are bullish on the market and look forward to announcing new deals for pre-capacity in the next few months. AMOS-5 enables us to become a multi-regional satellite operator and positions Spacecom as an attractive source of C-band and Ku-band capacity for a variety of African and African-related businesses, including telcos, cellular operators, broadcasters, governments and others.

How have they seen the wireless communications market adapt and evolve in Africa in 2010?

Spacecom has set its sights on Africa.

Related News in Space Business Review June 2021

Space Business Review

A monthly round-up of space industry developments for the information of our clients and friends. June 2021

Contact | Dara A. Panahy, +1 202.835.7521, [email protected] | Bijan Ganji, +1 202.835.7543, [email protected]

June Mergers & Acquisitions

June 7 – Astra Space, Inc. (Astra) announced that it entered into an agreement to acquire Apollo Fusion, Inc. (Apollo), a developer of Hall thruster (ion) propulsion systems, for up to $145m, based on $50m in stock and cash and potential earn-outs of $10m in employee incentive stock, $10m in cash for meeting technical milestones and $75m in stock and cash for achieving revenue milestones. Astra’s aim is to expand its addressable launch market by accessing technology to deliver satellites beyond low Earth orbit. Following closing, Apollo will continue to operate as a separate business.

June 15 – Space-Communication Ltd. (Spacecom) announced that Hungary’s 4iG Plc. will acquire a 51% ownership interest

OneWeb Receives Additional Investment from Bharti

On June 29, OneWeb Communications Ltd. (OneWeb) announced that Bharti Global Ltd. (Bharti) exercised a call option right to invest an additional $500m in the company, following which Bharti will be OneWeb’s largest shareholder, holding a 38.6% ownership interest alongside the UK Government, Eutelsat Communications S.A. (investment pending subject to regulatory approvals) and SoftBank Group Corp., each of which will hold a 19.3% ownership interest.

June Fundraising Activity

June 3 – LeoLabs, Inc. (LeoLabs) announced that it raised $65m in a Series B funding round led jointly by Insight Venture Partners LP and existing investor Velvet Sea Ventures LLC.

Federal Communications Commission DA 04-357

Federal Communications Commission DA 04-357

Before the Federal Communications Commission, Washington, D.C. 20554

In the Matter of Loral Satellite, Inc. (Debtor-in-Possession) and Loral SpaceCom Corporation (Debtor-in-Possession), Assignors, and Intelsat North America, LLC, Assignee

Applications for Consent to Assignments of Space Station Authorizations and Petition for Declaratory Ruling Under Section 310(b)(4) of the Communications Act of 1934, as Amended

File Nos. SAT-ASG-20030728-00138, SAT-ASG-20030728-00139, ISP-PDR-20030925-00024

ORDER AND AUTHORIZATION

Adopted: February 11, 2004. Released: February 11, 2004

By the Chief, International Bureau

I. INTRODUCTION

1. In this Order and Authorization, we grant, subject to certain conditions, the Applications filed by Loral Satellite Inc. (Debtor-in-Possession or “DIP”) (“Loral Satellite”), and Loral SpaceCom Corporation (DIP) (“Loral SpaceCom”), (collectively, “Assignors”) and Intelsat North America, LLC (“Intelsat North America” or “Assignee” and together with Assignors, “Applicants”) seeking authority to assign five non-common carrier space station licenses to Intelsat North America.1 We also grant, subject to the limitations specified herein, the request to change these non-common carrier licenses to dual-use licenses and thus permit Intelsat North America to hold all five space station licenses on both a common carrier and non-common carrier basis. We conclude, pursuant to our review under Section 310(d) of the Communications

1 See, Application for Consent to Assignments of Space Station Authorizations, File Nos. SAT-ASG-20030728-00138 and SAT-ASG-20030728-00139 (filed July 28, 2003). Both Applications are considered in this review. Unless stated otherwise, citations to “Assignment Application” or “Application” in this order refers to the narrative provided in each filing.

GVF VSAT Policy Guidelines

“Strengthening Access to Communications”: Policy & Regulatory Guidelines for Satellite Services. GVF, May 30, 2003

Table of Contents

- EXECUTIVE SUMMARY
- 1. International Satellite Policy Declaration
- 2. Overview of Satellite-Based Telecommunications Services
- 3. Satellite Policy Principles: A Public/Private Partnership
  - 3.1 Non-Discriminatory Market Entry
  - 3.2 Open Borders for Competitive Access
  - 3.3 Transparency of Telecommunication Rules and Policies
  - 3.4 Content-Neutral Regulations
  - 3.5 Technology-Neutral Regulations and Licensing Requirements
    - 3.5.1 Protecting Public Safety with Harmonised Equipment Standards
    - 3.5.2 Managing Spectrum Resources
- 4. Key Regulatory and Licensing Trends
  - 4.1 Space Segment

Two More Spacecom Satellites Will Join AMOS-3

NEWS

Two more Spacecom satellites will join AMOS-3 and AMOS-4 by 2012

The satellite operator is determined to become a global player, combining greater reach with tailored services suited to smaller television customers

Spacecom wants to position itself as a global satellite operator over the next five years, with the construction of AMOS-4, scheduled for commercial service in 2012 and covering Southeast Asia, Central Asia and Africa, representing a major step towards that ambition. The US$365 million satellite will leave the Israel-based company with 80 per …

… 108MHz and four high power Ka-band transponders of 218MHz, with steerable beams, to market. Ori Onn, Chief Operating Officer at Spacecom, says AMOS-4 will enable his company to service existing television customers better, removing the need to sub-contract bandwidth when linking the Middle East and Europe with Asia. The company will also target new customers across Asia who …

… from the world’s satellite giants. Spacecom works in markets, and with operators, that might be considered too small by other satellite operators, he says. AMOS-4 follows AMOS-3 in the sequential naming given to the Spacecom fleet (AMOS-3 goes into commercial service next year) but Onn reveals that there will almost certainly be another launch – and probably two – in between these two platforms. “AMOS-3 will replace AMOS-1 and we will migrate services from AMOS-1 beginning around Q2, …

… Amsterdam exhibition. The key focus for the company will be the approaching AMOS-3 service entry and the commissioning of AMOS-4, which was announced in July. Onn says Spacecom has not decided yet where AMOS-3’s steerable beam will be targeted.

Image caption: ORI ONN, CHIEF OPERATING OFFICER, SPACECOM

WORK Microwave and IQ Spacecom Collaborate on End-to-End CubeSat Solution

WORK Microwave and IQ Spacecom Collaborate on End-to-End CubeSat Solution

One-Stop-Shop Solution Combines WORK Microwave’s AX-60 IP Modem With IQ Spacecom’s XLink Transceiver

HOLZKIRCHEN, Germany – Nov. 23, 2020 – WORK Microwave, a leading European manufacturer of advanced satellite communications equipment, today announced one of the first end-to-end solutions for CubeSat missions thanks to a new collaboration with IQ Spacecom, a leading provider of satellite radio solutions. By combining WORK Microwave’s AX-60 IP modem with IQ Spacecom’s XLink advanced transceiver system, a brand of IQ wireless GmbH, operators have an affordable, one-stop-shop solution for new space applications, including low Earth orbit (LEO) satellite missions for Earth observation.

“Traditionally, space and ground devices are purchased from two different companies, and the mission operator is left with two purchase processes and a wide range of questions regarding compatibility,” said Mathias Reibe, Head of Engineering/CTO at IQ Spacecom. “Collaborating with WORK Microwave, we’re able to solve this issue and guarantee a compatible end-to-end solution.”

The end-to-end solution includes WORK Microwave’s AX-60 19-inch rack-mount modem unit and XLink CubeSat-sized communication module from IQ Spacecom. Both platforms connect to IP networks and provide easy-to-use, straightforward connectivity. Supporting satellite communication for the ground and space segments, the solution allows operators to reliably perform telecommand and telemetry transmission tasks to and from satellites. Featuring space communication waveforms according to the Consultative Committee for Space Data Systems’ (CCSDS) Recommended Standards (Blue Books) 131.0 and 231.0, it is one of the first CubeSat solutions.

Rural Broadband and Its Implications for the Future of Universal Service

Rural broadband and its implications for the future of Universal Service: The Israeli Case

OECD, Porto, October 2004

Uri Olenik, Director General, Ministry of Communications

Presentation Agenda

- Broadband & universal service regulation
- Broadband & rural broadband penetration in Israel
- Public Internet Access (PIA)
- Rural area solutions for broadband
- Conclusions

State of Israel Ministry of Communications

Regulation

- Can regulation help to increase broadband penetration in rural areas and achieve universal coverage?
- Free and competitive markets promote wide geographical coverage, growth, efficiency & customer satisfaction.

EU Regulation

- The EU framework does not include broadband as part of the Universal Service Obligation (USO).
- We believe this assumption needs to be challenged and reexamined.

Broadband regulation in Israel

- Facility-Based Competition:
  1. ADSL Access provided by the incumbent (up to 2.5 Mb/s downstream).
  2. Cable Modem broadband Internet services provided by cable TV firms, the new entrants (up to 3 Mb/s downstream).
- Unlicensed usage of the 2.4 & 5 GHz WLAN technology as a step to maximize the internet usage.

Broadband Universal Service Obligation (USO)

- USO by two players; both have countrywide infrastructures.
- The critical stage: defining USO in the license before granting it to the operators.
- Actual coverage: by the end of 2004, 99% of incumbent’s lines.
- Special exceptions committee.
- Israel is one of the few countries that has adopted a broadband USO.

3G Regulation in Israel

- In the coming years 3G UMTS networks will be able to offer internet access of up to 2 Mb/s and a wide variety of services & content.